If you're trying to hire remote DevOps engineers, your old playbook won't work. Forget casting a wide net on generalist job boards. The key is to source talent where they contribute—in specific open-source projects, niche technical communities, and on specialized platforms like OpsMoon. This is an active search, not a passive "post and pray" exercise.

This guide provides a technical, actionable framework to help you identify, vet, and hire engineers with the proven, hands-on expertise you need, whether it's in Kubernetes, AWS security, or production-grade Site Reliability Engineering (SRE).

The New Landscape for Sourcing DevOps Talent

The days of posting a generic "DevOps Engineer" role and hoping for the best are over. The talent market is now defined by remote-first culture and deep specialization. The challenge isn't finding an engineer; it's finding the right engineer with a validated, specific skill set who can solve your precise technical problems.

Your sourcing strategy must evolve from broad outreach to surgical precision. Need to harden your EKS clusters against common CVEs? Focus your search on communities discussing Kubernetes RBAC and security policies, or on contributors to tools like Falco or Trivy. Looking for an expert to scale a multi-cluster observability stack? Find engineers active in Prometheus or Grafana maintainer channels who discuss high-cardinality metrics and federated architectures.

The Remote-First Reality

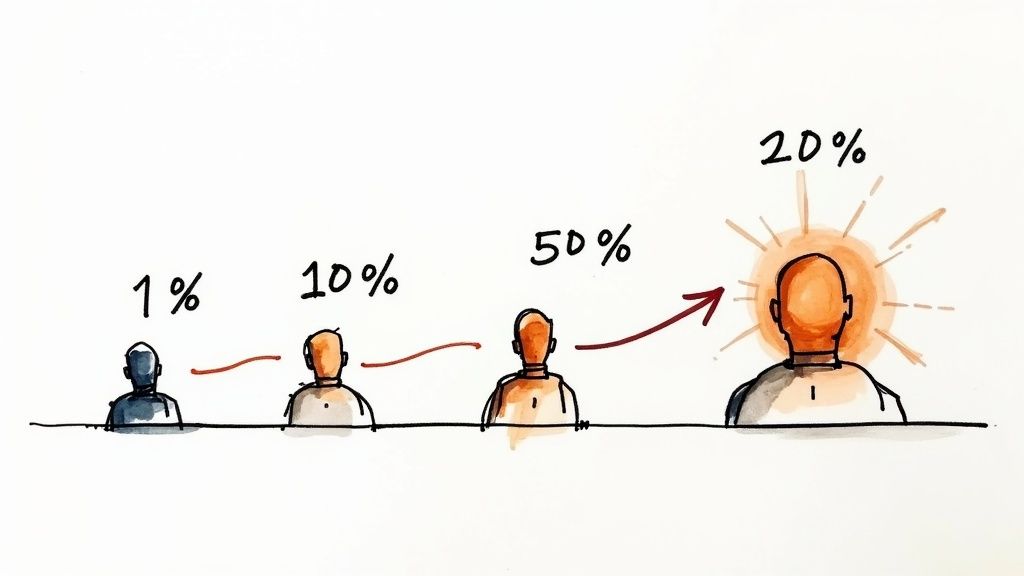

Remote work is no longer a perk; it's the operational standard in DevOps. The data confirms this shift. A staggering 77.1% of DevOps job postings now offer remote flexibility, with fully remote roles outnumbering on-site positions by a ratio of 7 to 1.

Specialization is equally critical. While DevOps Engineers still represent 36.7% of demand, Site Reliability Engineers (SREs) at 18.7% and Platform Engineers at 16.3% are rapidly closing the gap.

This infographic visualizes how specialized remote roles—like Kubernetes networking specialists, AWS IAM experts, and distributed systems SREs—are globally interconnected.

It’s a clear reminder that top-tier expertise is globally distributed, making a remote-first hiring strategy non-negotiable if you want to access a deep talent pool.

Where Specialists Congregate

Top-tier remote DevOps engineers aren't browsing generic job boards. They are solving complex technical problems and sharing knowledge in highly specialized communities. To find them, you must engage with them on their turf.

- Niche Online Communities: Go beyond LinkedIn. Immerse yourself in specific Slack and Discord channels dedicated to tools like Terraform, Istio, or Cilium. These are the real-time hubs for advanced technical discourse.

- Open-Source Contributions: An engineer's GitHub profile is a more accurate resume than any PDF. Analyze their pull requests to projects relevant to your stack. This provides direct evidence of their coding standards, problem-solving methodology, and asynchronous collaboration skills.

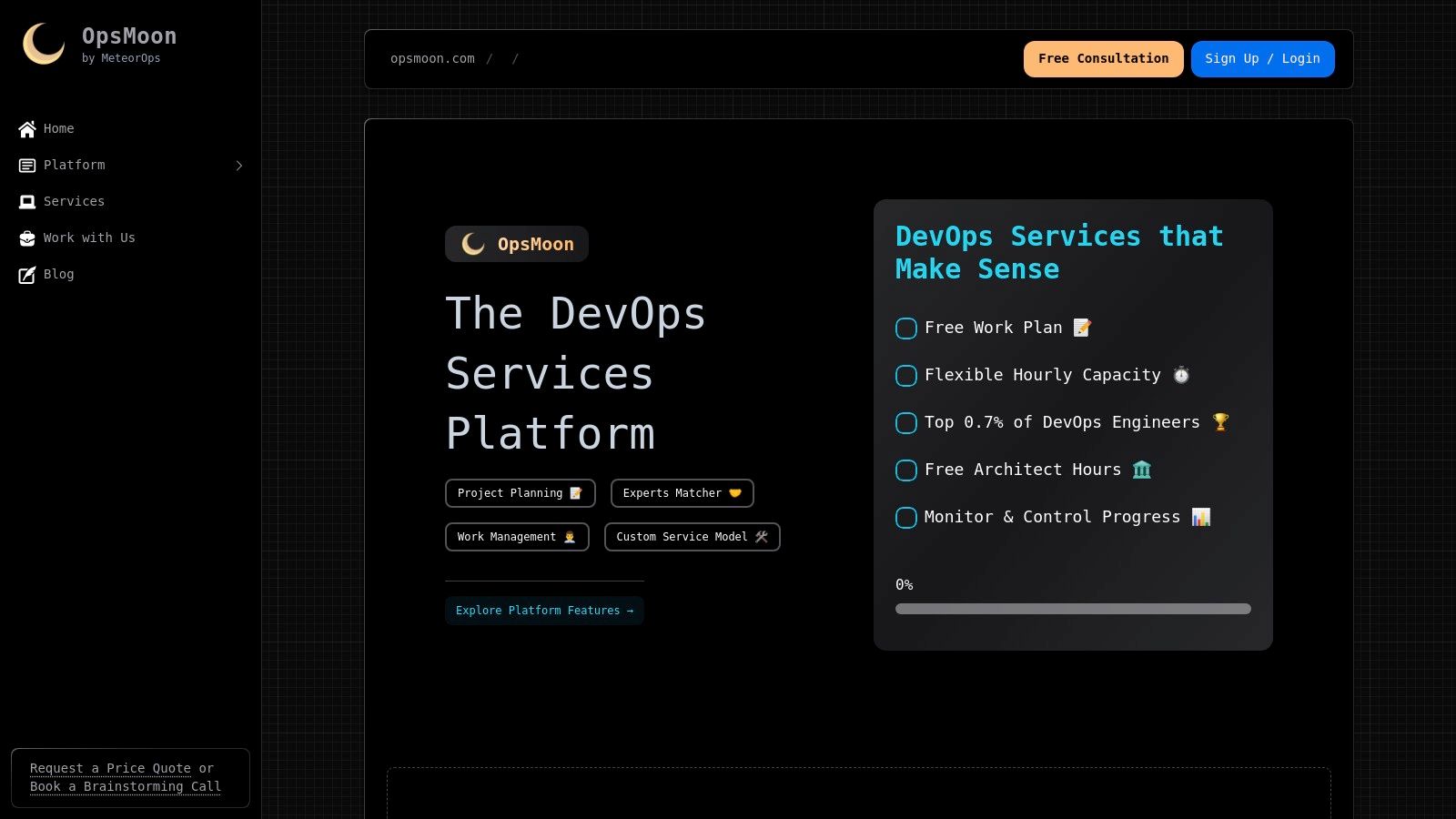

- Specialized Platforms: Platforms like OpsMoon perform the initial vetting, connecting companies with a pre-qualified pool of elite remote DevOps talent. You can assess the market by reviewing current remote DevOps engineer jobs.

To target your search, it's essential to understand the distinct specializations within the DevOps landscape.

Key DevOps Specializations and Where to Find Them

The "DevOps" title now encompasses a wide spectrum of specialized roles. Differentiating between them is crucial for writing an effective job description and sourcing the right talent. This table breaks down common specializations and their primary sourcing channels.

| DevOps Specialization | Core Responsibilities & Technical Focus | Primary Sourcing Channels |

|---|---|---|

| Platform Engineer | Builds and maintains Internal Developer Platforms (IDPs). Creates "golden paths" using tools like Backstage or custom portals. Standardizes CI/CD, Kubernetes deployments, and observability primitives for development teams. | Kubernetes community forums (e.g., K8s Slack), CNCF project contributors (ArgoCD, Crossplane), PlatformCon speakers and attendees. |

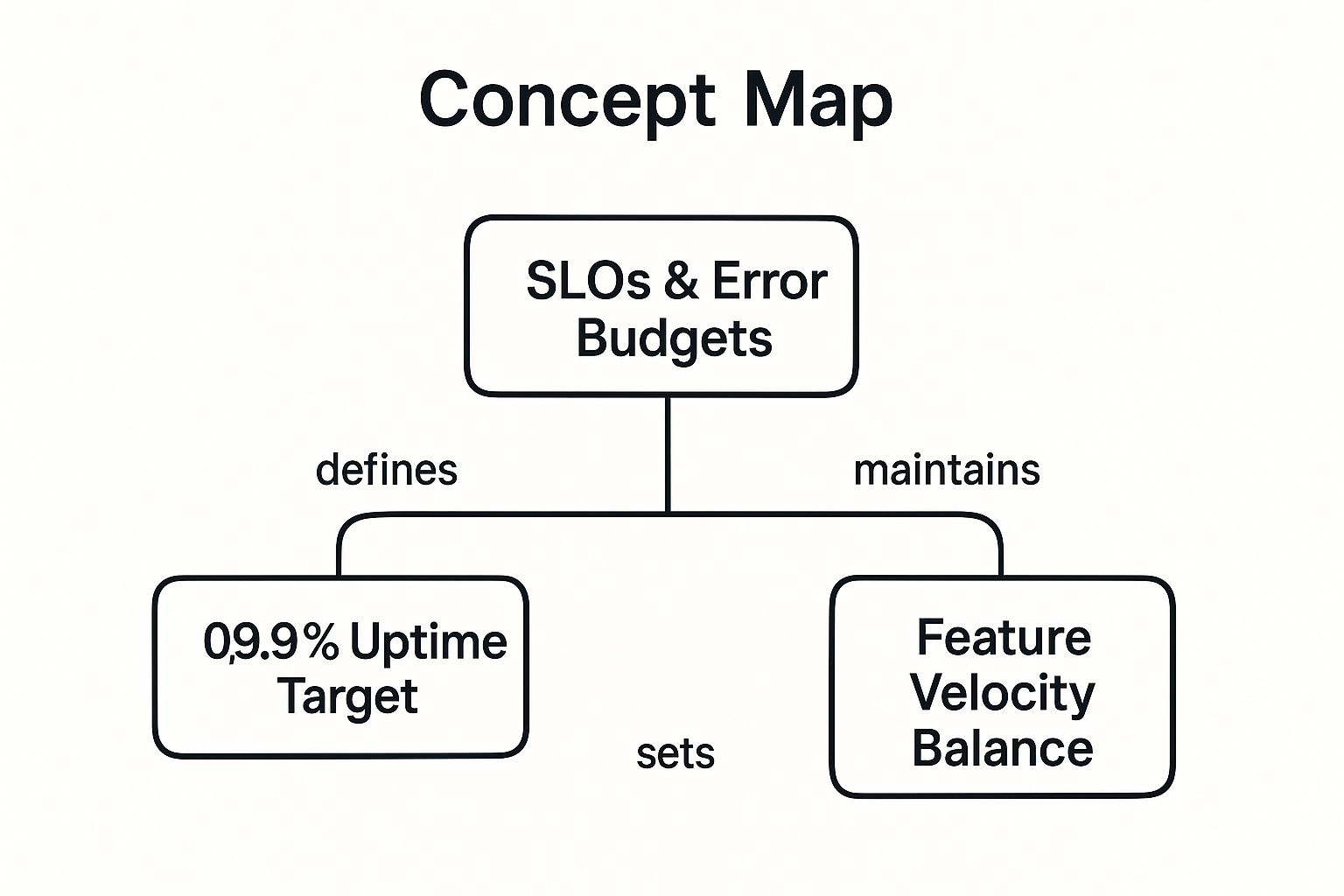

| Site Reliability Engineer (SRE) | Owns system reliability, availability, and performance. Defines and manages Service Level Objectives (SLOs) and error budgets. Leads incident response, conducts blameless post-mortems, and automates toil reduction. | SREcon conference attendees, Google SRE book discussion groups, communities around observability tools (Prometheus, Grafana, OpenTelemetry). |

| Cloud Security (DevSecOps) | Integrates security into the CI/CD pipeline (SAST, DAST, SCA). Manages Cloud Security Posture Management (CSPM) and automates security controls with IaC. Focuses on identity and access management (IAM) and network security policies. | DEF CON and Black Hat attendees, OWASP chapter members, contributors to security tools like Falco, Trivy, or Open Policy Agent (OPA). |

| Infrastructure as Code (IaC) Specialist | Masters tools like Terraform, Pulumi, or Ansible to automate the provisioning and lifecycle management of cloud infrastructure. Develops reusable modules and enforces best practices for state management and code structure. | HashiCorp User Groups (HUGs), Terraform and Ansible GitHub repositories, contributors to IaC ecosystem tools like Terragrunt or Atlantis. |

| Kubernetes Administrator/Specialist | Possesses deep expertise in deploying, managing, and troubleshooting Kubernetes clusters. Specializes in areas like networking (CNI – Calico, Cilium), storage (CSI), and multi-tenancy. Manages cluster upgrades and security hardening. | Certified Kubernetes Administrator (CKA) directories, Kubernetes SIGs (Special Interest Groups), KubeCon participants and speakers. |
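If "SLOs and error budgets" in the SRE row feels abstract, the arithmetic behind it is simple: the error budget is the share of a time window that the SLO allows to be unavailable. The sketch below assumes an availability SLO expressed as a fraction and a 30-day window by default; the function name `error_budget` is our own, not part of any tool.

```python
from datetime import timedelta


def error_budget(slo: float, window: timedelta = timedelta(days=30)) -> timedelta:
    """Return the unavailability an availability SLO permits over a time window.

    `slo` is a fraction, e.g. 0.999 for "three nines".
    """
    if not 0.0 < slo < 1.0:
        raise ValueError("SLO must be a fraction strictly between 0 and 1")
    # The error budget is the complement of the SLO, applied to the window length.
    return window * (1.0 - slo)


for target in (0.99, 0.999, 0.9999):
    minutes = error_budget(target).total_seconds() / 60
    print(f"{target:.2%} availability over 30 days -> {minutes:.1f} minutes of downtime budget")
```

A candidate who can do this conversion in their head, and explain what the team does when the budget runs out, is showing exactly the SRE instinct the table describes.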

Understanding these distinctions allows you to craft a precise job description and focus your sourcing efforts for maximum impact.

The most valuable candidates are often passive; they aren't actively job hunting but are open to compelling technical challenges. Engaging them requires a thoughtful, personalized approach that speaks to their technical interests, not a generic recruiter template.

As you navigate this specialized terrain, remember that many principles overlap with other engineering roles. Reviewing expert tips for hiring remote software developers can provide a solid foundational framework. The core lesson remains consistent: specificity, technical depth, and community engagement are the pillars of modern remote hiring.

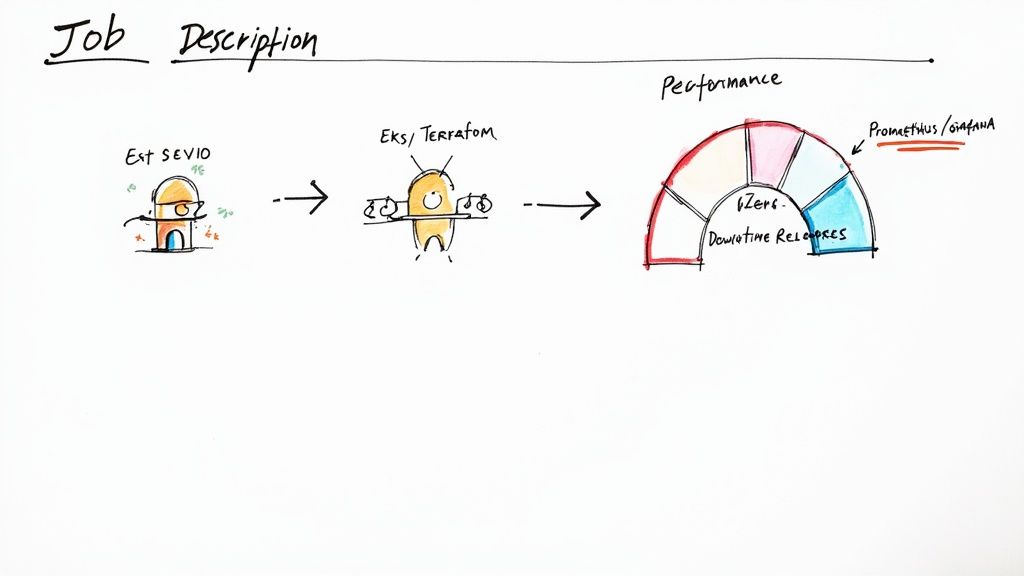

Crafting a Job Description That Attracts Senior Engineers

A generic job description is a magnet for unqualified candidates. If you're serious about hiring remote DevOps engineers with senior-level expertise, your job post must function as a high-fidelity technical filter. It should attract the right talent and repel those who lack the requisite experience.

This isn't about listing generic tasks. It's about articulating the deep, complex technical challenges your team is currently solving.

Vague requirements will flood your inbox. Instead of "experience with cloud platforms," be specific. Are you running "a multi-account AWS organization managed via Control Tower with service control policies (SCPs) for guardrails" or "a GCP environment leveraging BigQuery for analytics and GKE Autopilot for container orchestration"? This level of detail instantly signals to an expert that you operate a mature, technically interesting infrastructure.

This specificity is a sign of respect for their expertise. It enables them to mentally map their skills to your problems, making your opportunity far more compelling than a competitor’s vague wish list.

Detail Your Technical Ecosystem

Senior engineers need to know the technical environment they will inhabit daily. A detailed tech stack is non-negotiable, as it illustrates your environment's complexity, modernity, and the specific problems they will solve.

Provide context, not just a bulleted list. Show how the components of your stack interoperate.

- Orchestration: "We run microservices on Amazon EKS with Istio managing our service mesh for mTLS, traffic routing, and observability. You will help us optimize our control plane and data plane performance."

- Infrastructure as Code (IaC): "Our entire cloud footprint across AWS and GCP is defined in Terraform. We use Terragrunt to maintain DRY configurations and manage remote state across dozens of accounts and environments."

- CI/CD: "Our pipelines are built with GitHub Actions, utilizing reusable workflows and self-hosted runners. You will be responsible for improving pipeline efficiency, from static analysis with SonarQube to automated canary deployments using Argo Rollouts."

- Observability: "We maintain a self-hosted observability stack using Prometheus for metrics (with Thanos for long-term storage), Grafana for visualization, Loki for log aggregation, and Tempo for distributed tracing."

This transparency acts as a powerful qualifying tool. It tells an engineer exactly what skills are required and, just as importantly, what new technologies they will be exposed to. It makes the role tangible and challenging.

Frame Responsibilities Around Outcomes

Top engineers are motivated by impact, not a checklist of duties. A standard job description lists tasks like "manage CI/CD pipelines." A compelling one frames these responsibilities as measurable outcomes. This shift attracts candidates who think in terms of business value and engineering excellence.

Observe the difference:

| Task-Based (Generic) | Outcome-Driven (Compelling & Technical) |
|---|---|
| Maintain deployment scripts. | Automate and optimize our blue-green deployment process using Argo Rollouts to achieve zero-downtime releases for our core APIs, measured by a 99.99% success rate. |
| Monitor system performance. | Reduce P95 latency for our primary user-facing service by 20% over the next two quarters by fine-tuning Kubernetes HPA configurations and implementing proactive node scaling. |
| Manage cloud costs. | Implement FinOps best practices, including automated instance rightsizing with Karpenter and enforcing resource tagging via OPA policies, to decrease monthly AWS spend by 15% without impacting performance. |
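Outcome-driven targets only work if everyone measures them the same way. As a minimal sketch, assuming you can export raw request latencies in milliseconds, the "reduce P95 by 20%" target could be checked with the nearest-rank percentile definition. That is one of several common definitions; Prometheus's `histogram_quantile`, for example, estimates quantiles from histogram buckets, so its numbers can differ. The function names and sample data are hypothetical.

```python
from typing import Sequence


def p95(latencies_ms: Sequence[float]) -> float:
    """Nearest-rank P95: the smallest sample such that at least 95% of samples are <= it."""
    if not latencies_ms:
        raise ValueError("need at least one latency sample")
    ordered = sorted(latencies_ms)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) using integer arithmetic
    return ordered[rank - 1]


def met_reduction_target(before: Sequence[float], after: Sequence[float],
                         target: float = 0.20) -> bool:
    """True if P95 latency dropped by at least `target` (0.20 means 20%)."""
    baseline = p95(before)
    if baseline <= 0:
        raise ValueError("baseline P95 must be positive")
    return (baseline - p95(after)) / baseline >= target


# Hypothetical samples (ms) from 100 requests before and after HPA tuning.
before = [100.0] * 90 + [400.0] * 10
after = [100.0] * 90 + [300.0] * 10
print(p95(before), p95(after), met_reduction_target(before, after))
```

Agreeing on the measurement up front keeps the outcome in the job description honest and gives the new hire an unambiguous definition of success.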

This outcome-driven approach allows a candidate to see a direct line between their technical work and the company's success. It transforms a job from a set of chores into a series of meaningful engineering challenges.

A job description is your first technical document shown to a candidate. Treat it with the same rigor. Senior engineers will dissect it for clues about your engineering culture, technical maturity, and the caliber of the team they would be joining.

Address the Non-Negotiables for Remote Talent

When you aim to hire remote DevOps engineers, you are competing in a global talent market. The best candidates have multiple options, and the work environment is a decisive factor. Your job description must proactively address their key concerns.

Be transparent about the operational realities of the role:

- On-Call Schedule: Is there a follow-the-sun rotation with clear handoffs? What is the escalation policy (e.g., PagerDuty schedules)? How is on-call work compensated (stipend, time-in-lieu)? Honesty here builds immediate trust.

- Tooling & Hardware Budgets: Do engineers have the autonomy to select and purchase the tools they need? Mentioning a dedicated budget for software, hardware (e.g., M2 MacBook Pro), and conferences is a significant green flag.

- Level of Autonomy: Will they be empowered to make architectural decisions and own services end-to-end? Clearly define the scope of their ownership and influence over the infrastructure roadmap.
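When you describe a follow-the-sun rotation, be precise about handoff times. The sketch below assumes three hypothetical regional teams, each covering a fixed 8-hour block in UTC; real rotations usually live in a paging tool such as PagerDuty and also handle holidays and overrides, which this deliberately ignores.

```python
from datetime import datetime, timezone

# Hypothetical regional shifts as (start_hour_utc, end_hour_utc, team).
# The three blocks tile the full 24-hour day with no gaps or overlaps.
SHIFTS = [
    (0, 8, "APAC"),
    (8, 16, "EMEA"),
    (16, 24, "Americas"),
]


def on_call_team(moment: datetime) -> str:
    """Return the team holding the pager at a timezone-aware moment."""
    if moment.tzinfo is None:
        raise ValueError("use timezone-aware datetimes to avoid handoff bugs")
    hour = moment.astimezone(timezone.utc).hour
    for start, end, team in SHIFTS:
        if start <= hour < end:
            return team
    raise RuntimeError("shift table does not cover every hour")  # defensive check
```

Stating handoffs in UTC in the job post removes any ambiguity about which local hours a candidate would actually be on call.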

By addressing these questions upfront, you demonstrate a commitment to a healthy, engineer-centric remote culture. This transparency is often the tie-breaker that convinces an exceptional candidate to accept your offer.

Building a Vetting Process That Actually Works

When you need to hire remote DevOps engineers, you must look beyond the resume. You are searching for an engineer who not only possesses theoretical knowledge but can apply it to solve complex, real-world problems under pressure. A robust vetting process peels back the layers to reveal how a candidate actually thinks and executes.

This process is not about creating arbitrary hurdles; it is a series of practical evaluations designed to mirror the daily challenges of the role. Each stage should provide a progressively clearer signal of their technical and collaborative skills.

The Initial Technical Screen

The first step is about efficient, high-signal filtering. A concise technical questionnaire or a short, focused call is your best tool to assess foundational knowledge without committing hours of engineering time.

Avoid obscure command-line trivia. The goal is to probe their understanding of core, modern infrastructure concepts through open-ended questions that demand reasoned explanations.

Here are some example questions:

- Networking: "Describe the lifecycle of a network request from a user's browser to a pod running in a Kubernetes cluster. Detail the roles of DNS, Load Balancers, Ingress Controllers, Services (and kube-proxy), and the CNI plugin."

- Infrastructure as Code: "Discuss the trade-offs between using Terraform workspaces and separate per-environment root configurations (each calling shared modules) for managing multiple environments (e.g., dev, staging, prod). When would you use one over the other, and how do you handle secrets in that architecture?"

- Security: "What are the primary security threats in a containerized CI/CD pipeline? How would you mitigate them at different stages: base image scanning, static analysis of IaC, and runtime security within the cluster?"

The depth and nuance of their answers reveal far more than keyword matching. A strong candidate will discuss trade-offs, edge cases, and past experiences, demonstrating the critical thinking required for a senior role.

The Take-Home Automation Challenge

After a candidate passes the initial screen, it's time to evaluate their hands-on skills. A realistic, scoped take-home challenge is the most effective way to separate theory from practice. The key is to design a task that is relevant, respects their time (2-4 hours max), and reflects a real-world engineering problem.

Draw inspiration from your team's past projects or technical debt backlog.

A well-designed take-home assignment is a multi-faceted signal. It reveals their coding style, documentation habits, attention to detail, and ability to deliver a clean, production-ready solution.

For instance, provide a simple application (e.g., a basic Python Flask API) with a clear set of instructions.

Example Take-Home Challenge

"Given this sample web application, please:

- Write a multi-stage Dockerfile to produce a minimal, secure container image.

- Create a CI pipeline using GitHub Actions that builds and tests the application:

  - The pipeline must run linting (e.g., Hadolint for the Dockerfile) and unit tests on every pull request.

  - Upon merging to the main branch, the pipeline should build the Docker image, tag it with the Git SHA, and push it to Amazon ECR (Elastic Container Registry).

- Provide a README.md that explains your design choices, any assumptions made, and how to run the pipeline."

This single task tests proficiency with Docker, CI/CD syntax (YAML), testing integration, and cloud provider authentication—all core DevOps competencies. When reviewing, assess the quality of the solution: Is the Dockerfile optimized? Is the pipeline efficient and declarative? Is the documentation clear?
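Reviewers can speed up the first pass with a few mechanical checks before reading the submission in depth. The sketch below is a deliberately rough, regex-based heuristic of our own (not a replacement for Hadolint or a human review). It assumes the candidate's Dockerfile is read as plain text and flags three things: a single-stage build, base images not pinned to a version tag or digest, and a final stage that runs as root.

```python
import re

# One match per FROM instruction: optional --platform flag, the image, optional "AS alias".
FROM_RE = re.compile(
    r"^[ \t]*FROM[ \t]+(?:--platform=\S+[ \t]+)?(?P<image>\S+)(?:[ \t]+AS[ \t]+(?P<alias>\S+))?",
    re.IGNORECASE | re.MULTILINE,
)
USER_RE = re.compile(r"^[ \t]*USER[ \t]+(?P<user>\S+)", re.IGNORECASE | re.MULTILINE)


def review_dockerfile(text: str) -> list[str]:
    """Return heuristic findings for a Dockerfile; an empty list means none fired."""
    findings: list[str] = []
    stages = list(FROM_RE.finditer(text))
    if len(stages) < 2:
        findings.append("not a multi-stage build (fewer than two FROM instructions)")

    aliases: set[str] = set()
    for match in stages:
        image = match.group("image")
        # "FROM <earlier-stage>" reuses a previous stage and "scratch" is empty: nothing to pin.
        if image.lower() not in aliases and image.lower() != "scratch":
            # Inspect the last path segment so a registry port (host:5000/app) is not read as a tag.
            name = image.rsplit("/", 1)[-1]
            if "@sha256:" not in image and (":" not in name or name.endswith(":latest")):
                findings.append(f"base image not pinned to a version or digest: {image}")
        if match.group("alias"):
            aliases.add(match.group("alias").lower())

    # Only USER instructions in the final stage affect the runtime image.
    final_stage = text[stages[-1].start():] if stages else text
    users = USER_RE.findall(final_stage)
    if not users or users[-1].split(":")[0] in ("root", "0"):
        findings.append("final stage runs as root (no USER, or USER root/0)")
    return findings
```

A clean result only means the submission clears the basics; the conversation about why the candidate chose a particular base image is where the real signal is.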

The Final System Design Interview

The final stage is a live, collaborative system design session. This is your opportunity to evaluate their architectural thinking, problem-solving under pressure, and consideration of non-functional requirements like scalability, reliability, and cost. For remote candidates, a virtual whiteboarding tool like Miro or Excalidraw is essential.

In this interview, the process is more important than the final diagram. There is no single "correct" answer. You are evaluating their thought process: how they decompose a complex problem, justify their technology choices, and anticipate failure modes.

Present a broad, open-ended scenario.

- Scenario 1: "Design a scalable and resilient logging system for a microservices application deployed across multiple Kubernetes clusters in different cloud regions. Focus on data ingestion, storage tiers, and providing developers with a unified query interface."

- Scenario 2: "Architect a CI/CD platform for an organization with 100+ developers. The system must support polyglot microservices, enable safe and frequent deployments to production, and provide developers with self-service capabilities."

As they architect their solution, probe their decisions. If they propose a managed service like Amazon OpenSearch Service (formerly Amazon Elasticsearch Service), ask for the rationale versus a self-hosted ELK stack on EC2. This back-and-forth provides a strong signal on a candidate's real-world problem-solving abilities, which is paramount when you hire remote DevOps engineers who must operate with high autonomy.

Running a High-Signal Systems Design Interview

The systems design interview is the crucible where you distinguish a good engineer from a great one. It moves beyond rote knowledge to assess how a candidate handles ambiguity, evaluates trade-offs, and designs for real-world constraints like scale, cost, and reliability. It is the single most effective tool for hiring remote DevOps engineers capable of architectural ownership.

This is not a trivia quiz; it is a collaborative problem-solving session. For remote interviews, a tool like Excalidraw facilitates a natural whiteboarding experience, allowing you to observe their thought process as they sketch components, data flows, and failure boundaries.

The key is to provide a problem that is complex and open-ended, forcing them to ask clarifying questions to define the scope and constraints before proposing a solution.

Crafting the Right Problem Statement

The prompt should be broad enough to permit multiple valid architectural approaches but specific enough to include clear business constraints. You are evaluating their problem-solving methodology, not whether they arrive at a predetermined "correct" answer.

Examples of high-signal problems:

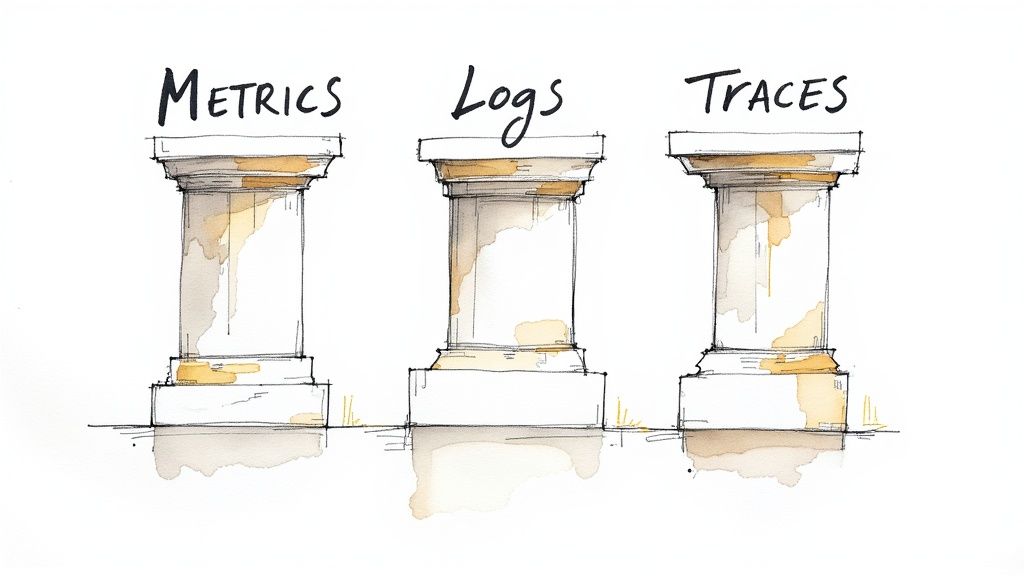

- Architect a resilient, multi-region logging and monitoring solution for a microservices platform. This forces them to consider data ingestion at scale (e.g., Fluentd vs. Vector), storage trade-offs (hot vs. cold tiers), cross-region data replication, and providing a unified query layer for developers (e.g., Grafana with multiple data sources).

- Design the infrastructure and CI/CD pipeline for a stateful application on Kubernetes. This is a deceptively difficult problem that moves beyond stateless 12-factor apps. It requires them to address persistent storage (CSI drivers), database replication and failover, automated backup/restore strategies, and managing schema migrations within a zero-downtime deployment pipeline.
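In the logging scenario, a strong answer usually makes the hot/cold split explicit as a retention policy. Purely as an illustration, here is a minimal sketch of age-based tier routing; the tier names, day thresholds, and storage notes are hypothetical placeholders, not recommendations.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class Tier:
    name: str
    max_age: timedelta  # entries younger than this stay in this tier


# Hypothetical policy, ordered from newest to oldest data.
POLICY = (
    Tier("hot", timedelta(days=7)),      # e.g. indexed, fast, most expensive storage
    Tier("warm", timedelta(days=30)),    # e.g. cheaper disks, slower queries
    Tier("cold", timedelta(days=365)),   # e.g. object storage, restore before querying
)


def tier_for(log_time: datetime, now: datetime) -> Optional[str]:
    """Return the tier a log entry belongs in, or None once it is past retention."""
    age = now - log_time
    if age < timedelta(0):
        raise ValueError("log_time is in the future")
    for tier in POLICY:
        if age < tier.max_age:
            return tier.name
    return None
```

Asking a candidate how they would choose these thresholds (query patterns, compliance retention, storage cost) is a quick way to test whether the tiers in their diagram are grounded in requirements.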

A strong candidate will not start drawing immediately. They will first probe the requirements: What are the latency requirements? What is the expected scale (QPS, data volume)? What is the budget?

Evaluating the Thought Process

As they work through the design, your role is to probe their decisions and understand the why behind their choices. The most valuable signals come from how they justify trade-offs.

- Managed Services vs. Self-Hosted: If they propose Amazon Aurora for the database, challenge that choice. What are the advantages over a self-managed PostgreSQL cluster on EC2 (e.g., lower operational overhead, managed replication and failover)? What are the disadvantages (e.g., less control over performance tuning, vendor lock-in, cost at scale)?

- Technology Choices: If they include a service mesh like Istio, dig deeper. What specific problem does it solve in this design (e.g., mTLS, traffic shifting, observability)? Could a simpler ingress controller and network policies achieve 80% of the goal with 20% of the complexity?

- Implicit Considerations: A senior engineer thinks holistically. Pay close attention to whether they proactively address these critical, non-functional requirements:

  - Observability: How will this system be monitored? Where are the metrics, logs, and traces generated and collected?

  - Security: How is data encrypted in transit and at rest? What is the identity and access management strategy?

  - Cost: Do they demonstrate cost-awareness? Do they consider the financial implications of their design choices (e.g., data transfer costs between regions)?
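Cost-awareness often shows up as quick back-of-the-envelope arithmetic spoken aloud. A minimal sketch of that kind of estimate for cross-region log replication is below; the daily volume, replica count, and per-GB price are placeholders, so substitute your own volumes and your provider's current pricing.

```python
def monthly_transfer_cost(gb_per_day: float, usd_per_gb: float,
                          replicas: int = 1, days: int = 30) -> float:
    """Estimate the monthly cost of copying data to `replicas` other regions."""
    if gb_per_day < 0 or usd_per_gb < 0 or replicas < 0 or days < 0:
        raise ValueError("inputs must be non-negative")
    return gb_per_day * days * replicas * usd_per_gb


# Placeholder inputs: 500 GB of logs per day replicated to two other regions
# at a hypothetical $0.02/GB. Replace with real volumes and current list prices.
estimate = monthly_transfer_cost(500, 0.02, replicas=2)
print(f"~${estimate:,.2f} per month")
```

The exact number matters less than whether the candidate thinks to run it before committing to full replication of every log stream.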

The best systems design interviews feel like a collaborative design session with a future colleague. You are looking for an engineer who can clearly articulate their reasoning, incorporate feedback, and adapt their design when new constraints are introduced.

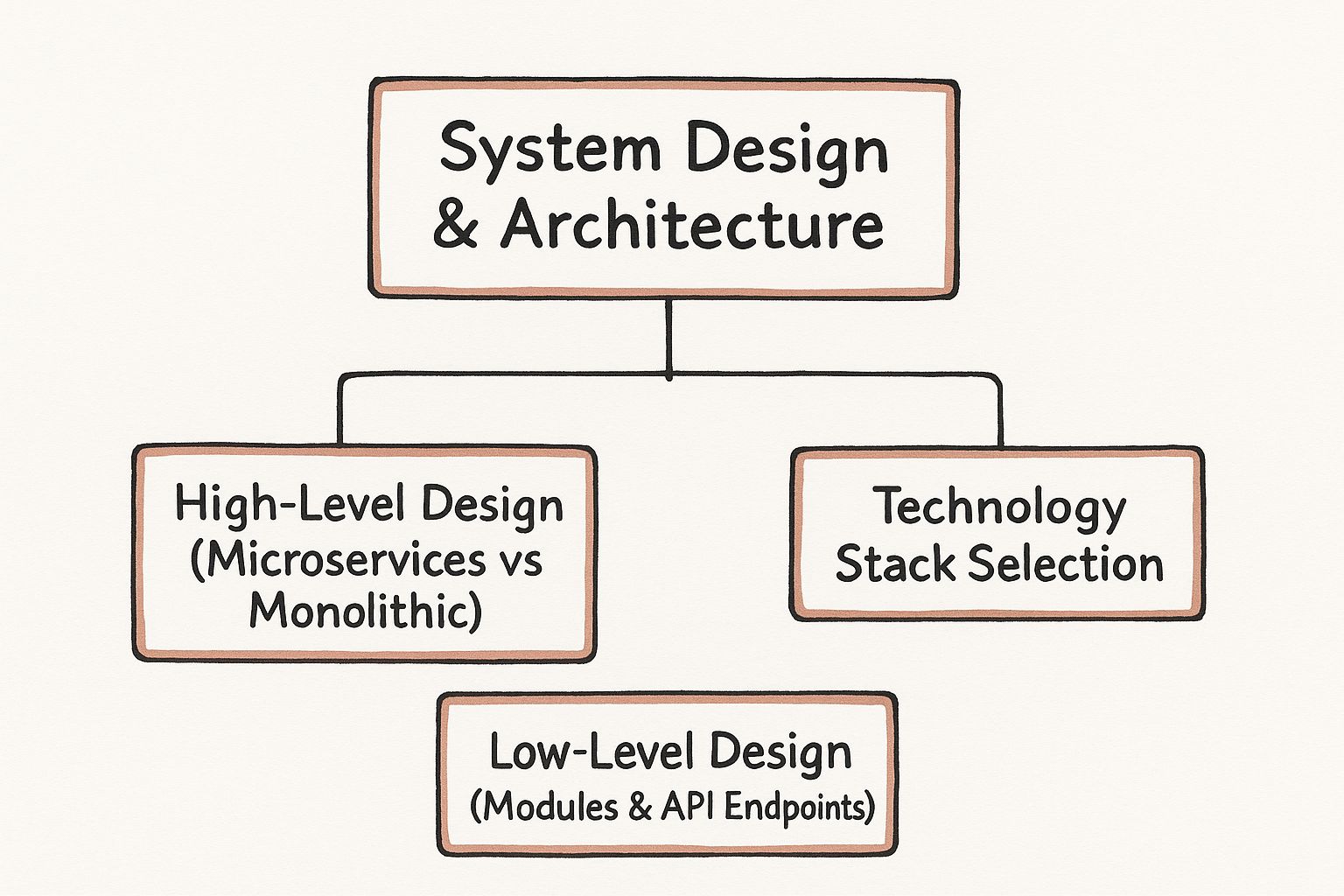

To conduct these interviews effectively, you must have a strong command of the fundamentals yourself. The newsletter post on System Design Fundamentals is an excellent primer. We also offer our own in-depth guide covering core system design principles to help you build a robust evaluation framework.

Using a Consistent Evaluation Rubric

To ensure fairness and mitigate bias, evaluate every candidate against a standardized rubric. This forces you to focus on objective signals rather than subjective "gut feelings." Your rubric should cover several key dimensions.

| Evaluation Area | What to Look For |
|---|---|
| Problem Decomposition | Do they ask clarifying questions to define scope and constraints (e.g., QPS, data size, availability targets)? Do they identify the core functional and non-functional requirements? |
| Technical Knowledge | Is their understanding of the proposed technologies deep and practical? Can they accurately explain how components interact and what their failure modes are? |
| Trade-Off Analysis | Do they articulate the pros and cons of their choices (e.g., cost vs. performance, consistency vs. availability)? Can they justify why their chosen trade-offs are appropriate for the given problem? |
| Communication | Can they clearly and concisely explain their design? Do they use the whiteboard effectively to illustrate complex ideas? Do they respond well to challenges and feedback? |
| Completeness | Does the final design address critical aspects like scalability, reliability (high availability, disaster recovery), security, and maintainability? |
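To keep scores comparable across interviewers, some teams turn a rubric like this into a simple weighted score. The sketch below assumes a 1-4 scale per area and hypothetical weights of our own choosing; the written evidence behind each score should always carry more weight in the debrief than the final number.

```python
from typing import Mapping

# Hypothetical weights per rubric area (they sum to 1.0).
WEIGHTS = {
    "problem_decomposition": 0.20,
    "technical_knowledge": 0.25,
    "trade_off_analysis": 0.25,
    "communication": 0.15,
    "completeness": 0.15,
}


def weighted_score(scores: Mapping[str, int]) -> float:
    """Combine 1-4 scores per rubric area into one weighted score on the same scale."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    for area, score in scores.items():
        if area not in WEIGHTS:
            raise ValueError(f"unknown rubric area: {area}")
        if not 1 <= score <= 4:
            raise ValueError(f"{area} score must be between 1 and 4")
    return sum(WEIGHTS[area] * scores[area] for area in WEIGHTS)
```

Requiring every area to be scored, and rejecting unknown ones, stops interviewers from quietly skipping the dimensions they found hardest to judge.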

This structured approach transforms the interview from a conversation into a powerful data-gathering exercise, giving you the high-confidence signal needed to make the right hiring decision.

Onboarding and Integrating Your New Remote Engineer

The interview is over and the offer is accepted—now the most critical phase begins. The first 90 days for a new remote DevOps engineer are a make-or-break period that will determine their long-term effectiveness and integration into your team.

A structured, deliberate onboarding process is not a "nice-to-have"; it is the mechanism that bridges the gap between a new hire feeling isolated and one who contributes with confidence and autonomy.

This initial period is about more than provisioning access. You must intentionally embed the new engineer into your team’s technical and cultural workflows. Without the passive knowledge transfer of an office environment, it is your responsibility to proactively build the context they need to succeed.

The Structured 30-60-90 Day Plan

A well-defined plan eliminates ambiguity and sets clear expectations from day one. It provides the new engineer with a roadmap for success, covering technical setup, cultural immersion, and initial project contributions.

The first 30 days are about building a solid foundation.

- Week 1: Setup and Immersion. The sole objectives for this week are to get their local development environment fully functional, grant access to core systems (AWS, GCP, GitHub), and immerse them in your communication tools (Slack, Jira). The most critical action: assign a dedicated onboarding buddy—a peer engineer who can answer tactical questions and explain the team's undocumented norms.

- Weeks 2-4: Learning the Landscape. Schedule a series of 30-minute introductory meetings with key engineers, product managers, and operations staff. Their primary technical task is to study the core infrastructure-as-code repositories (Terraform, Ansible) and, most importantly, your Architectural Decision Records (ADRs). The goal is for them to understand not just how the system is built, but why it was built that way.

This initial phase prioritizes knowledge absorption over feature delivery. You are building the context required for them to make intelligent, impactful contributions later.

Engineering an Early Win

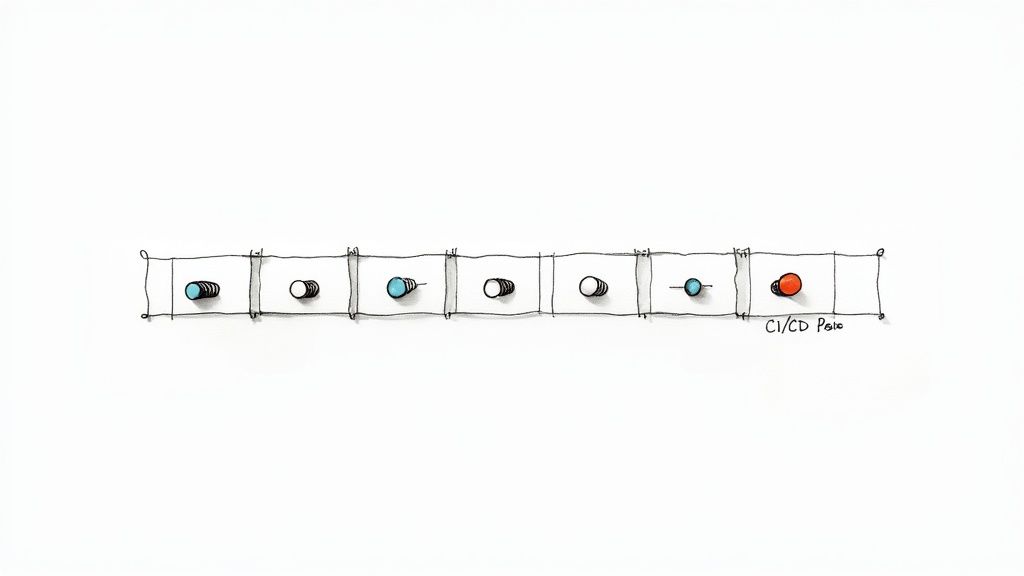

Nothing builds confidence faster than shipping code to production. A critical component of onboarding is engineering a "first commit" that provides a quick, tangible victory. This task must be small, well-defined, and low-risk. The purpose is to have them navigate the entire CI/CD pipeline, from pull request to deployment, in a low-pressure scenario.

The goal of the first ticket isn't to deliver major business value. It's to validate that the new engineer can successfully navigate your development and deployment systems end-to-end. A simple bug fix, a documentation update, or adding a new linter check is a perfect first win.

For example, a great first task might be adding a new check to a CI job in your GitHub Actions workflow or updating an outdated dependency in a shared Docker base image. This small achievement demystifies your deployment process and provides a significant psychological boost.
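One concrete starter ticket of this kind: add a small check to an existing CI job that fails when a workflow references an action by a mutable tag instead of a full commit SHA, which GitHub's security hardening guidance for Actions recommends. The sketch below is regex-based rather than a full YAML parser, and it assumes workflows live under .github/workflows; the function names are hypothetical.

```python
import re
import sys
from pathlib import Path

# Matches "uses: owner/repo@ref" (optionally owner/repo/path@ref) in a workflow file.
USES_RE = re.compile(r"^\s*-?\s*uses:\s*['\"]?([^\s'\"#]+)@([^\s'\"#]+)", re.MULTILINE)
FULL_SHA_RE = re.compile(r"^[0-9a-f]{40}$")


def unpinned_actions(workflow_text: str) -> list[str]:
    """Return 'action@ref' strings whose ref is not a full 40-character commit SHA."""
    problems = []
    for action, ref in USES_RE.findall(workflow_text):
        # Docker image references are out of scope for this check.
        if action.startswith("docker://"):
            continue
        if not FULL_SHA_RE.match(ref):
            problems.append(f"{action}@{ref}")
    return problems


def main(root: str = ".github/workflows") -> int:
    """Print offending references to stderr; return 1 if any were found, else 0."""
    failures = [
        f"{path}: {item}"
        for path in sorted(Path(root).glob("*.y*ml"))
        for item in unpinned_actions(path.read_text(encoding="utf-8"))
    ]
    for line in failures:
        print(f"unpinned action: {line}", file=sys.stderr)
    return 1 if failures else 0
```

In CI, the script's entry point would call `sys.exit(main())` so that any unpinned reference fails the step. The task is small, but shipping it takes the new engineer through the repository, the workflow syntax, and the review process end to end.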

Cultural Integration and Communication Norms

Technical proficiency is only half the equation. For a remote team to function effectively, cultural integration must be a deliberate, documented process. It begins with clearly outlining your team's communication norms.

Create a living document in your team's wiki that specifies:

- Synchronous vs. Asynchronous: What is the bar for an "urgent" Slack message versus a Jira ticket or email? When is a meeting necessary versus a discussion in a pull request?

- Meeting Etiquette: Are cameras mandatory? How is the agenda set and communicated?

- On-Call Philosophy: What is the process for incident response? What are the expectations for acknowledging alerts and escalating issues?

Documentation is necessary but not sufficient. Proactive relationship-building is essential. The onboarding buddy plays a key role here, but managers must also facilitate informal interactions. These conversations build the social trust that is vital for effective technical collaboration. Our guide on remote team collaboration tools can help you establish the right technical foundation to support this.

By making cultural onboarding an explicit part of your process, you ensure your new remote DevOps engineer feels like an integrated team member, not just a resource logging in from a different time zone.

Common Questions About Hiring Remote DevOps Engineers

When you're looking to hire remote DevOps engineers, several key questions invariably arise. Addressing these directly—from compensation and skill validation to culture—is critical for a successful hiring process.

A primary consideration is compensation. What is the market rate for a qualified remote DevOps engineer? The market is highly competitive. In the US, for instance, the average hourly rate is approximately $60.53 as of mid-2025.

However, this is just an average. The realistic range for most roles falls between $50.72 and $69.47 per hour. This variance is driven by factors like specific expertise (e.g., CKA certification), depth of experience with your tech stack, and years of SRE experience in high-scale environments. To refine your budget, you can explore more detailed salary data based on location and skill set.
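To compare these hourly figures with salaried offers, a common rough conversion assumes 2,080 working hours per year (40 hours × 52 weeks). That assumption ignores benefits, paid leave, and contractor overhead, so treat the sketch below as budgeting arithmetic only.

```python
HOURS_PER_YEAR = 40 * 52  # 2,080 hours: a rough full-time assumption


def annualize(hourly_usd: float, hours_per_year: int = HOURS_PER_YEAR) -> int:
    """Convert an hourly rate to a gross annual figure, rounded to whole dollars."""
    return round(hourly_usd * hours_per_year)


# US figures quoted above (mid-2025): low end of the range, average, high end.
for label, rate in (("low", 50.72), ("average", 60.53), ("high", 69.47)):
    print(f"{label:>7}: ${rate:.2f}/h -> ~${annualize(rate):,} per year")
```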

How Do You Actually Verify Niche Technical Skills?

A resume might list "expert in Kubernetes" or "proficient in Infrastructure as Code," but how do you validate this claim? Resumes can be aspirational. You need a practical method to assess hands-on capability.

This is where a well-designed, scoped take-home challenge is indispensable. Avoid abstract algorithmic puzzles. Assign a task that mirrors a real-world problem your team has faced.

For example, ask a candidate to containerize a sample application, write a Terraform module to deploy it on AWS Fargate with specific IAM roles and security group rules, and document the solution in a README. The quality of their code, the clarity of their documentation, and the elegance of their solution provide far more signal than any interview question.

What’s the Secret to a Great Remote DevOps Culture?

Building a cohesive team culture without a shared physical space requires deliberate, sustained effort. A new remote hire can easily feel isolated. The key to preventing this is fostering a culture of high trust and clear communication.

The pillars of a successful remote DevOps culture include:

- Default to Asynchronous Communication: Not every question requires an immediate Slack response. Emphasizing detailed Jira tickets, thorough pull request descriptions, and comprehensive documentation respects engineers' focus time, which is especially critical across time zones.

- Practice Blameless Post-Mortems: When an incident occurs, the focus must be on systemic failures, not individual errors. This psychological safety encourages honesty and leads to more resilient systems.

- Write Everything Down: Architectural Decision Records (ADRs), on-call runbooks, and team process documents are your single source of truth. This documentation empowers engineers to work autonomously and with confidence.

The bottom line is this: you must evaluate for autonomy and written communication skills as rigorously as you do for technical expertise. An engineer who documents their work clearly and collaborates effectively asynchronously is often more valuable than a lone genius who creates knowledge silos.

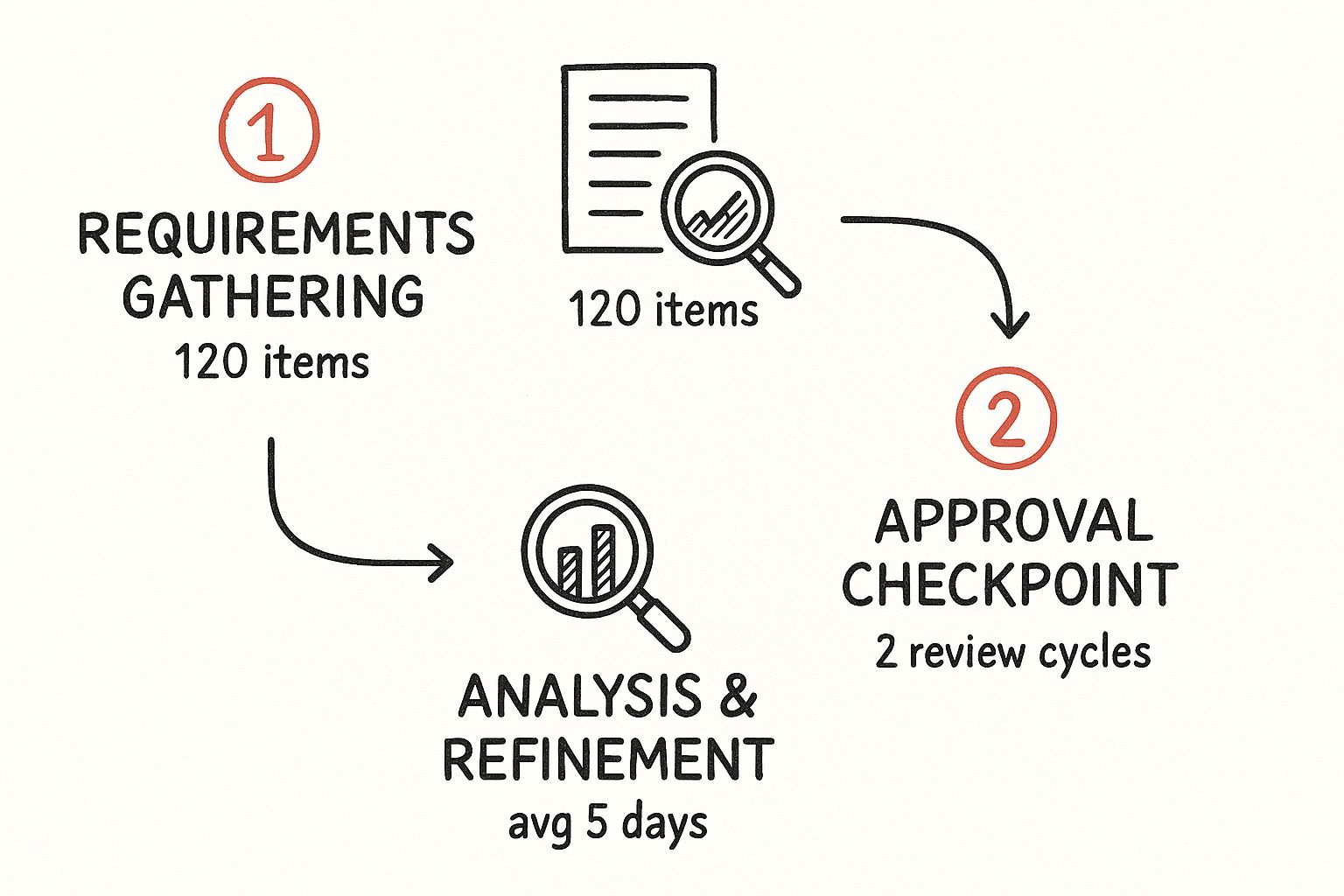

How Long Should This Whole Hiring Thing Take?

A protracted hiring process is the fastest way to lose top-tier candidates to more agile competitors. You must be nimble and decisive. Aim to complete the entire process, from initial contact to final offer, within three to four weeks.

This requires an efficient pipeline: a prompt initial screening, a take-home challenge with a clear deadline (e.g., 3-5 days), and a final "super day" of interviews. Respecting a candidate's time sends a powerful signal about the efficiency and professionalism of your engineering organization.
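A quick way to sanity-check the three-to-four-week target is to lay the stages out on a calendar. The stage names and durations below are hypothetical placeholders loosely based on the pipeline described above; adjust them to your own process.

```python
from datetime import date, timedelta

# Hypothetical stage durations in calendar days (adjust to your own process).
STAGES = [
    ("initial screen scheduled and completed", 5),
    ("take-home challenge window", 5),
    ("take-home review and feedback", 3),
    ("final interview 'super day' scheduled", 5),
    ("debrief, references, and offer", 4),
]


def plan(start: date, stages: list[tuple[str, int]] = STAGES,
         budget_days: int = 28) -> tuple[list[tuple[str, date]], bool]:
    """Return (stage, due_date) pairs and whether the whole plan fits the day budget."""
    schedule = []
    current = start
    for name, days in stages:
        current += timedelta(days=days)
        schedule.append((name, current))
    return schedule, (current - start).days <= budget_days


schedule, fits = plan(date(2025, 3, 3))
for name, due in schedule:
    print(f"{due.isoformat()}  {name}")
print("fits the 4-week target" if fits else "over budget: tighten a stage")
```

If the plan does not fit, the usual culprits are scheduling gaps between stages rather than the stages themselves.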

Ready to skip the hiring headaches and get straight to talking with elite, pre-vetted DevOps talent? OpsMoon uses its Experts Matcher technology to connect you with engineers from the top 0.7% of the global talent pool. We make sure you get the exact skills you're looking for. It all starts with a free work planning session to map out your needs.