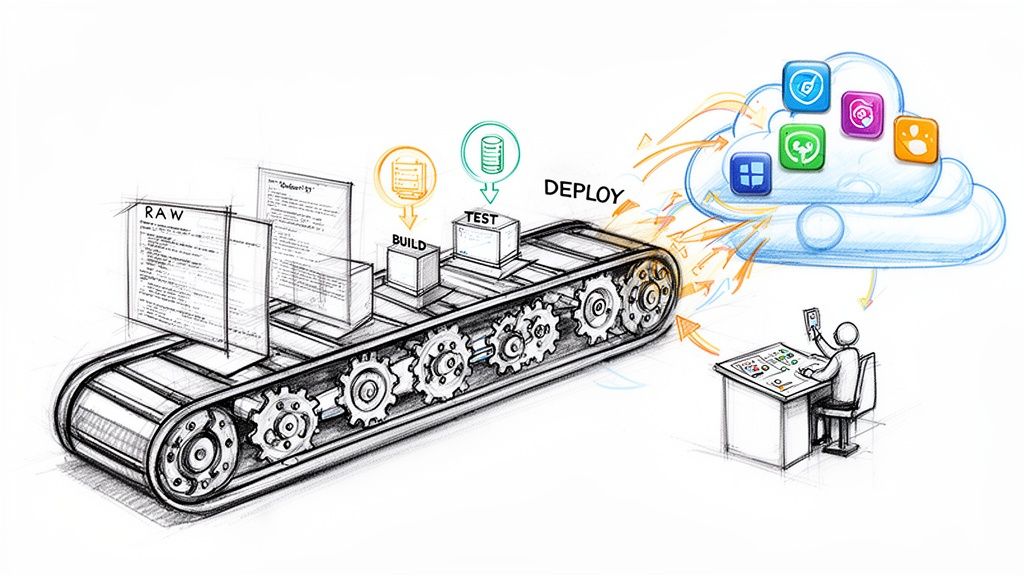

CI/CD as a Service is the use of a cloud-native platform to execute and manage the entire software delivery lifecycle—from code compilation and static analysis to artifact creation and deployment. Functionally, it's an API-driven, managed service that abstracts away the underlying build, test, and deployment infrastructure, allowing engineering teams to focus solely on defining pipeline logic.

This model delegates the operational burden of infrastructure provisioning, scaling, maintenance, and security to a third-party provider, transforming CI/CD from a self-managed infrastructure problem into a declarative, configuration-as-code challenge.

What Is CI/CD as a Service and Why Is It Critical?

In a traditional setup, developers spend a significant portion of their time managing CI/CD infrastructure—patching Jenkins servers, managing build agent capacity, resolving dependency conflicts in build environments, and debugging esoteric plugin failures. This is undifferentiated heavy lifting that directly detracts from core development activities.

CI/CD as a Service provides a fully managed, multi-tenant or hybrid architecture where the pipeline execution environment is provisioned on-demand. Developers define their pipeline logic in a YAML file (e.g., .github/workflows/main.yml or .gitlab-ci.yml), commit it to their repository, and the service handles the rest. This declarative, GitOps-centric approach ensures the pipeline itself is version-controlled, auditable, and reproducible.

Breaking Down the Core Concepts

The service model is built upon two fundamental DevOps practices, delivered through a managed platform:

- Continuous Integration (CI): This is the practice of frequently merging feature branches into a central `main` or `develop` branch. Each merge triggers an automated pipeline that executes a series of validation steps: code compilation, static analysis (linting), and running unit and integration test suites. The objective is to detect integration errors, regressions, and code quality issues within minutes of a commit, providing rapid feedback to the developer. You can find a deeper technical breakdown in our guide on what is Continuous Integration.

- Continuous Delivery/Deployment (CD): This practice automates the release of validated code. It extends the CI process by packaging the application into a deployable artifact (e.g., a Docker container image, a Java JAR file) and deploying it to one or more environments. Continuous Delivery ensures an artifact is always in a deployable state after passing all automated tests, with a manual gate for production deployment. Continuous Deployment removes the manual gate, automatically promoting builds to production if all preceding stages in the pipeline succeed.

A CI/CD as a Service platform provides the orchestration engine, execution agents (runners), and artifact storage required to execute these practices without requiring teams to manage the underlying compute, storage, and networking infrastructure.

The Technical Impact of Pipeline Automation

The primary technical benefit is a drastic reduction in the commit-to-deploy latency. Manual release processes, characterized by hand-offs between development, QA, and operations teams, often take days or weeks. An automated pipeline can execute the entire sequence in minutes. This velocity is a strategic enabler for implementing agile methodologies and reacting to market demands with high frequency.

By codifying the path to production in a declarative format, CI/CD as a Service makes software delivery a deterministic and repeatable engineering process. It minimizes configuration drift and "works on my machine" problems, which typically translates into higher deployment success rates and improved system stability.

Consider a typical developer workflow transformation. Instead of a developer manually running `npm test`, building a Docker image, pushing it to a registry, and then requesting a deployment, the entire workflow is triggered by a `git push`:

- A webhook from the Git provider notifies the CI/CD service of a new commit.

- The service orchestrator reads the pipeline YAML file and schedules a job on a runner.

- The runner clones the repository, installs dependencies (`npm install`), and executes a series of pre-defined script blocks.

- It runs unit tests (`npm test`), static analysis, and security scans.

- If successful, it builds a container image (`docker build -t ...`) and pushes it to a container registry.

- A subsequent job deploys the new image digest to a staging environment using `kubectl apply` or a similar tool.
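The first step, the webhook, is only safe if the CI/CD service can confirm that the request really came from the Git provider. A common scheme (GitHub, for example, sends an `X-Hub-Signature-256` header of the form `sha256=<hex digest>`) is an HMAC-SHA256 over the raw request body, keyed with a shared secret. The Python sketch below assumes that scheme; the secret and payload are placeholders, and the function names are illustrative rather than any provider's API.

```python
import hashlib
import hmac


def sign_payload(secret: bytes, body: bytes) -> str:
    """Compute a signature header value in the 'sha256=<hex digest>' format."""
    digest = hmac.new(secret, body, hashlib.sha256).hexdigest()
    return f"sha256={digest}"


def verify_webhook(secret: bytes, body: bytes, signature_header: str) -> bool:
    """Accept the webhook only if the header matches the HMAC of the raw body."""
    expected = sign_payload(secret, body)
    # compare_digest runs in constant time, so the check does not leak how
    # many leading characters of a forged signature happened to be correct.
    return hmac.compare_digest(expected, signature_header)


if __name__ == "__main__":
    secret = b"placeholder-webhook-secret"  # hypothetical shared secret
    body = b'{"ref": "refs/heads/main"}'    # illustrative push payload
    header = sign_payload(secret, body)
    print(verify_webhook(secret, body, header))         # True
    print(verify_webhook(secret, body + b" ", header))  # False: body was altered
```

The signature must be computed over the exact bytes received, before any JSON parsing or re-serialization, or legitimate deliveries will fail verification.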

This "shift-left" automation provides immediate, contextual feedback, catching bugs and security vulnerabilities at the source. This leads to a more resilient codebase, higher developer confidence, and a more robust final product.

Dissecting the Architecture of Modern CI/CD Platforms

To effectively leverage a CI/CD as a service platform, it's crucial to understand its underlying architecture. It is a distributed system designed for orchestrating and executing automated workflows. This architecture is the engine that transforms raw source code from a Git repository into a production-ready, deployed application.

Think of it as a serverless function platform specifically designed for software delivery. You provide the code (your pipeline definition), and the platform provides the execution environment.

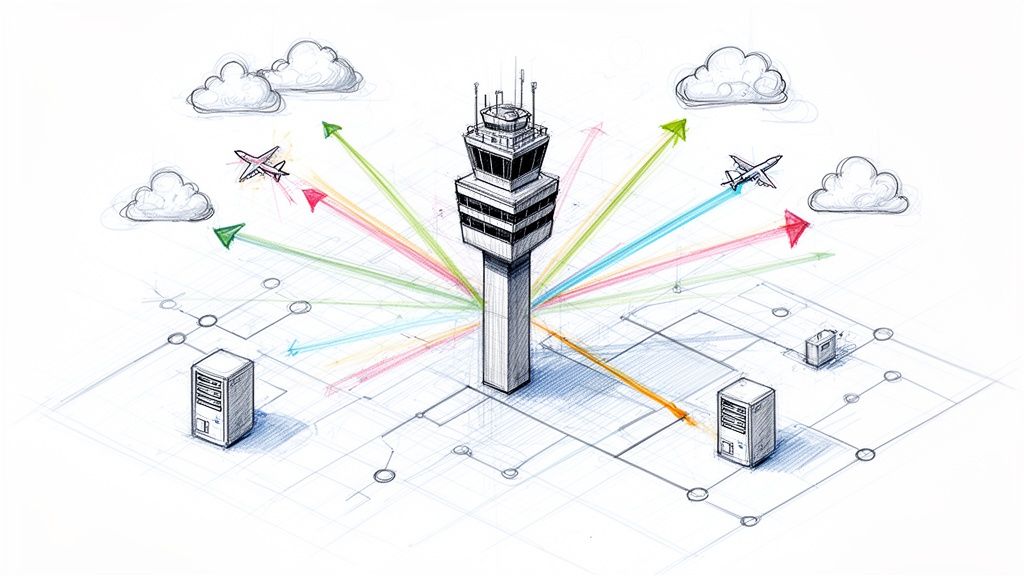

At its heart, this architecture comprises several key components working in concert.

Core Architectural Components

Modern CI/CD platforms are architecturally composed of four primary components, each with a distinct role in the pipeline execution process.

- Version Control System (VCS) Integration: This is the trigger mechanism. Platforms like GitHub Actions or GitLab CI/CD are deeply integrated with their respective Git hosting services. The entire pipeline is initiated by a VCS event, such as a `git push` to a specific branch or the creation of a pull request, communicated via webhooks.

- The Orchestrator (Control Plane): This is the centralized scheduler and state machine. The control plane parses the pipeline configuration file (e.g., `pipeline.yml`), constructs a Directed Acyclic Graph (DAG) of stages and jobs, and manages the execution flow. It queues jobs, dispatches them to available runners, and aggregates logs and exit codes.

- Runners or Agents (Execution Plane): These are the ephemeral compute instances that perform the actual work. Runners are typically lightweight virtual machines or containers that execute the commands defined in your pipeline jobs: compiling code, running `make test`, or executing `docker build`. They receive job instructions from the orchestrator, stream logs back in real time, and are terminated upon job completion.

- Artifact Registry: When a build produces an immutable output (a compiled binary, a compressed tarball, or a Docker image), this artifact must be stored and versioned. The artifact registry serves as a repository for these build outputs, ensuring that the exact binary that passed all quality gates is the one deployed to subsequent environments.
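To make the orchestrator's role concrete, here is a minimal Python sketch of how a control plane could turn job dependencies into a DAG and work out which jobs can be dispatched to runners concurrently. It uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+); the job names are hypothetical, and real orchestrators layer retries, timeouts, and conditional execution on top of this.

```python
from graphlib import TopologicalSorter


def execution_waves(dependencies: dict) -> list:
    """Group jobs into waves; every job in a wave can run concurrently.

    `dependencies` maps each job name to the set of jobs it must wait for.
    Raises graphlib.CycleError if the graph is not acyclic.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        waves.append(ready)
        # A real orchestrator would call done() as each runner reports success;
        # here every job in the wave is assumed to succeed.
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    pipeline = {
        "lint": set(),
        "unit-test": set(),
        "sast-scan": set(),
        "build-image": {"lint", "unit-test", "sast-scan"},
        "deploy-staging": {"build-image"},
    }
    for number, wave in enumerate(execution_waves(pipeline), start=1):
        print(number, wave)
```

The first wave contains the three independent checks, which is exactly the parallelism an orchestrator can hand to separate runners before the image build starts.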

At its core, the CI/CD architecture separates the "what" from the "how." The control plane decides what to do based on your configuration, while the execution plane provides the resources for how to do it.

This architectural decoupling enables flexible deployment models, allowing organizations to balance convenience, security, and computational control.

Comparing SaaS vs Hybrid CI/CD Architectures

The primary architectural distinction among CI/CD services lies in the hosting of the execution plane (the runners). This choice dictates the security posture, customization capabilities, and operational overhead of the solution.

This choice between fully managed and hybrid models creates a fundamental trade-off. The table below breaks down the key differences to help you decide which architecture best fits your team's needs for security, scalability, and control.

| Attribute | Fully Managed SaaS | Hybrid Model |
|---|---|---|
| Control Plane Hosting | Provider Hosted & Managed | Provider Hosted & Managed |
| Execution Plane (Runners) | Provider Hosted & Managed | Self-Hosted (On-Prem / Your Cloud) |
| Infrastructure Overhead | None; fully abstracted | Requires managing runner infrastructure (VMs, Kubernetes clusters) |
| Setup & Onboarding | Near-instant; configure YAML and run | Requires agent installation, network configuration, and IAM setup |
| Security & Compliance | Relies on provider's security posture and certifications (e.g., SOC 2) | Maximum control; source code and artifacts remain within your network boundary |
| Customization | Limited to provider's VM images and installed software | Full control over runner OS, specs, installed tooling, and network access |
| Best For | Startups and teams prioritizing speed and simplicity with public codebases. | Enterprises with strict data sovereignty, compliance, or custom environment needs. |

A fully managed SaaS model offers the lowest friction. The provider manages the entire stack, including the runners. Users simply define their pipeline YAML, and the provider provisions ephemeral environments to execute the jobs. This model is ideal for teams who want to minimize operational complexity.

The hybrid model provides a balance of managed service convenience and security control. The provider continues to host the control plane, but the user deploys and manages the runners within their own infrastructure (e.g., an AWS VPC or an on-premise data center). These self-hosted runners poll the control plane for jobs.

This architecture is critical for organizations handling sensitive data or operating under strict regulatory frameworks (e.g., PCI-DSS, HIPAA), as build execution, credentials, and build artifacts stay on infrastructure you control, with only job instructions, status, and logs exchanged with the provider's control plane.
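The pull model is what makes the hybrid architecture practical: because the runner initiates every connection, it typically needs only outbound network access and can sit in a private subnet. The Python sketch below simulates that loop with an in-memory queue; `FakeControlPlane`, `poll`, and `report` are hypothetical stand-ins, not a real provider's runner protocol.

```python
import queue
from dataclasses import dataclass
from typing import Optional


@dataclass
class Job:
    job_id: str
    command: str


class FakeControlPlane:
    """In-memory stand-in for the provider-hosted control plane (hypothetical)."""

    def __init__(self) -> None:
        self._pending: queue.Queue = queue.Queue()
        self.results: dict = {}

    def enqueue(self, job: Job) -> None:
        self._pending.put(job)

    def poll(self, timeout: float) -> Optional[Job]:
        """Long-poll: wait up to `timeout` seconds for a job, else return None."""
        try:
            return self._pending.get(timeout=timeout)
        except queue.Empty:
            return None

    def report(self, job_id: str, status: str) -> None:
        self.results[job_id] = status


def run_runner(control_plane: FakeControlPlane, max_idle_polls: int = 1) -> None:
    """Self-hosted runner loop: it only ever calls out to the control plane."""
    idle_polls = 0
    while idle_polls < max_idle_polls:
        job = control_plane.poll(timeout=0.1)
        if job is None:
            idle_polls += 1  # a real runner would keep polling indefinitely
            continue
        idle_polls = 0
        # A real runner would execute job.command here, inside your network,
        # and stream logs back; this sketch just records success.
        control_plane.report(job.job_id, "success")
```

Because no inbound connection is ever made to the runner, the firewall only needs to allow egress to the provider's endpoints.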

This choice is shaping the entire market. The Continuous Delivery market was valued at $3.67 billion in 2023 and is expected to hit $12.25 billion by 2030. Right now, cloud deployments hold a dominant 63.3% share because they're so agile. However, on-premise and hybrid solutions are gaining serious traction, especially in security-focused industries like banking and healthcare. You can dig into more of this data in a Grand View Research report on the Continuous Delivery market.

Ultimately, the decision between SaaS and hybrid is a technical trade-off: SaaS optimizes for velocity and operational simplicity, while the hybrid model optimizes for security, control, and environmental customization.

The Strategic Benefits and Inescapable Trade-Offs

Adopting CI/CD as a Service is an architectural decision with significant engineering and business implications. It fundamentally alters development workflows and resource allocation.

While the benefits are substantial, it is not a universally perfect solution. The decision involves clear trade-offs around control, cost, and platform dependency that technical leaders must carefully evaluate.

The Clear-Cut Business and Technical Benefits

Migrating to a managed CI/CD platform creates a positive feedback loop: faster deployments enable quicker validation of hypotheses, leading to better product decisions and higher code quality, which in turn boosts developer morale and productivity.

The primary technical benefits include:

- Accelerated Deployment Frequency: By automating the entire build-test-deploy sequence, release cycles can shrink from weeks to hours or even minutes. This allows for high-frequency deployments, enabling teams to ship features, bug fixes, and security patches on demand.

- Radically Improved Code Quality: Automated quality gates—such as static analysis (SAST), unit tests, and dependency vulnerability scanning (SCA)—are enforced on every commit. This "shift-left" approach identifies defects and security flaws at the earliest possible stage, dramatically reducing the cost of remediation.

- Enhanced Developer Productivity: Abstracting away CI/CD infrastructure management frees up engineers from non-differentiating tasks like patching Jenkins, managing build agent capacity, or debugging plugin dependency hell. This reclaimed time is reinvested directly into building features and creating business value.

This shift is fueling a massive market. The Continuous Deployment Solution market is on track to hit $15 billion by 2025, because more and more companies are chasing these efficiencies. In fact, organizations that fully automate their deployments see up to a 40% faster time-to-market. You can check out the full breakdown of this explosive market growth on Data Insights Market.

Examining the Inescapable Trade-Offs

Despite the compelling advantages, adopting a managed service introduces dependencies and constraints that must be acknowledged. A clear-eyed assessment of these trade-offs is crucial to avoid future architectural limitations and cost overruns.

Adopting a managed service is a strategic partnership. You gain speed and focus by delegating infrastructure management, but in return, you cede a degree of control and become dependent on the provider's roadmap, security posture, and pricing model.

Key trade-offs to consider:

- Risk of Vendor Lock-In: Pipeline configurations are written in a provider-specific YAML dialect, utilizing proprietary features, actions, or orbs. Migrating hundreds of complex pipelines from one platform to another, such as from GitHub Actions to GitLab CI/CD, is a significant and costly re-engineering effort, not a simple lift-and-shift.

- Data Sovereignty and Security: In a pure SaaS model, source code, environment variables, and build artifacts are processed on the provider's multi-tenant infrastructure. While providers offer robust security controls, this may conflict with stringent regulatory requirements (e.g., GDPR, CCPA) or corporate data residency policies, often necessitating a hybrid architecture with self-hosted runners.

- Total Cost of Ownership (TCO): The pricing model (typically based on build minutes, concurrency, and user seats) can be complex. The subscription fee is only the baseline. True TCO must account for costs related to artifact storage, data egress, and the engineering time required to optimize pipelines for performance and cost-efficiency on a specific platform.
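A rough monthly TCO estimate can be modeled as a sum of the cost drivers listed above. In this Python sketch every price is a made-up placeholder, not any provider's actual rate; substitute the figures from your vendor's pricing page and your own engineering cost.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    build_minutes: float  # billable build minutes per month
    seats: int            # licensed users
    storage_gb: float     # artifact and cache storage
    egress_gb: float      # data transferred out
    tuning_hours: float   # engineering time spent optimizing pipelines


@dataclass
class Prices:
    per_build_minute: float
    per_seat: float
    per_storage_gb: float
    per_egress_gb: float
    engineer_hourly: float


def monthly_tco(usage: Usage, prices: Prices) -> dict:
    """Break the monthly cost into its drivers and add a total."""
    breakdown = {
        "compute": usage.build_minutes * prices.per_build_minute,
        "seats": usage.seats * prices.per_seat,
        "storage": usage.storage_gb * prices.per_storage_gb,
        "egress": usage.egress_gb * prices.per_egress_gb,
        "engineering": usage.tuning_hours * prices.engineer_hourly,
    }
    breakdown["total"] = sum(breakdown.values())
    return breakdown


if __name__ == "__main__":
    usage = Usage(build_minutes=20_000, seats=25, storage_gb=50, egress_gb=100, tuning_hours=10)
    prices = Prices(per_build_minute=0.008, per_seat=21.0, per_storage_gb=0.25,
                    per_egress_gb=0.09, engineer_hourly=100.0)  # placeholder prices
    for item, cost in monthly_tco(usage, prices).items():
        print(f"{item:>12}: ${cost:,.2f}")
```

Keeping each driver as a separate line item makes it easy to see which one dominates for your own inputs.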

How to Evaluate and Select the Right CI/CD Provider

Selecting a CI/CD as a service platform is a foundational architectural decision. The choice directly impacts developer velocity, operational overhead, and long-term technical debt. A methodical, engineering-led evaluation is critical to ensure the platform aligns with your technical stack and strategic goals, preventing costly migrations and vendor lock-in down the road.

The right platform acts as a force multiplier for your engineering organization; the wrong one becomes a persistent source of friction.

Aligning the Platform with Your Tech Stack

The initial and most critical evaluation criterion is deep, first-class support for your specific technology stack. Superficial compatibility listed on a marketing page is insufficient. The platform must provide optimized, native-feeling workflows for the languages, frameworks, and tools your team uses daily.

Demand specifics on:

- Language and Framework Support: Does the platform offer pre-configured environments and optimized caching for your primary stack (e.g., Node.js, Go, Python, or .NET)? Lacking this, you will be forced to build, host, and maintain custom Docker images for your build environments, negating much of the "as-a-service" benefit.

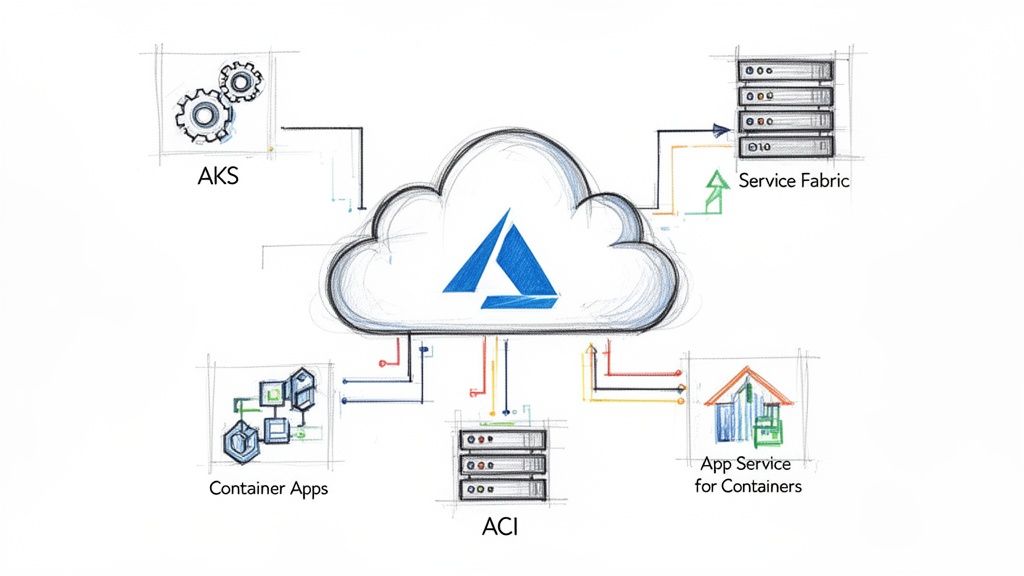

- Containerization Workflow: Evaluate the depth of Docker and Kubernetes integration. Look for built-in features like container layer caching, integrated vulnerability scanning (e.g., Trivy, Snyk), and seamless authentication to your container registries (ECR, GCR, ACR). The platform should simplify, not complicate, the process of building and deploying container images.

- Service Dependencies: How does the platform facilitate integration testing with service dependencies? A mature platform offers a simple `services` stanza in its YAML configuration to spin up ephemeral containers for dependencies like PostgreSQL or Redis for the duration of a job. A clunky, manual setup process for this is a major red flag indicating a brittle and slow testing experience.
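Even with a `services` stanza, a test job usually has to wait until the dependency accepts connections before it starts. Some platforms offer built-in health checks for this; where they don't, a small readiness probe like the Python sketch below is a common workaround. The host, port, and timeouts are assumptions to adapt to your platform.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Poll until a TCP connection to host:port succeeds or `timeout` expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # Connecting and immediately closing is enough to prove the port is open.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            time.sleep(interval)  # not listening yet; back off and retry
    return False
```

For example, a job could call `wait_for_port("localhost", 5432)` (PostgreSQL's default port) before its integration suite; the correct host name depends on how the platform networks service containers. An open port only shows the process is listening, so a query-level check is more robust when a database client is available.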

Evaluating the Integration Ecosystem

A CI/CD platform is the connective tissue of your DevOps toolchain. Its value is magnified by the breadth and depth of its integration ecosystem. A platform with a rich marketplace of pre-built integrations reduces the need for custom glue code and brittle shell scripts.

For many teams, a deep-dive GitLab vs GitHub comparison is a natural starting point, since these all-in-one platforms offer powerful native integrations. But you also have to look beyond their walled gardens.

For a wider look at the market, our guide to the best CI/CD tools covers a broader range of specialized and all-in-one solutions.

Scrutinize these critical integration points:

- Cloud Providers: How does the platform authenticate with your cloud provider (AWS, Azure, GCP)? Modern, secure authentication via OIDC (OpenID Connect) is the gold standard. It allows pipelines to assume IAM roles directly, eliminating the need to store and rotate long-lived, high-risk static access keys.

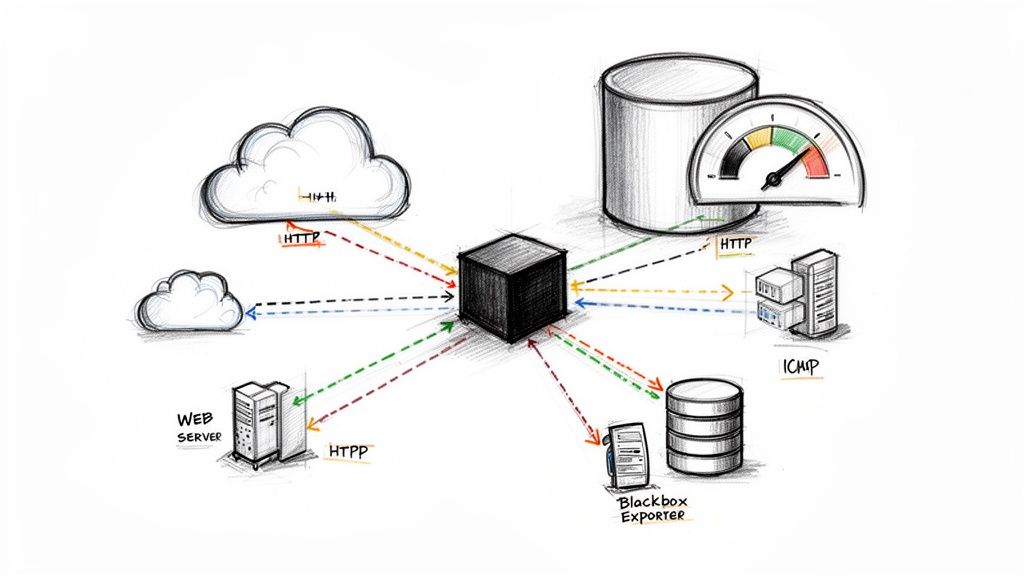

- Observability Tools: Is there a native integration to export pipeline metrics (e.g., duration, success rate, queue time) and deployment events to your observability platform (Datadog, New Relic, or Prometheus)? This is crucial for correlating deployment activity with application performance and system health, and for tracking DORA metrics such as deployment frequency and change failure rate.

- Security Tooling: How easily can you integrate SAST (Static Application Security Testing), DAST (Dynamic Application Security Testing), and SCA (Software Composition Analysis) tools? The platform should have pre-built actions or orbs for popular tools, allowing security scans to be added to a pipeline with just a few lines of YAML.
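Under OIDC, the platform issues each job a short-lived signed JWT, and the cloud provider's trust policy decides whether to exchange it for temporary credentials based on claims such as issuer (`iss`), audience (`aud`), and subject (`sub`). The Python sketch below only illustrates that claim-matching step: it deliberately skips signature verification, which a real identity provider must perform against the issuer's published keys. The claim values are illustrative; GitHub Actions, for instance, uses subjects shaped like `repo:<owner>/<repo>:ref:refs/heads/<branch>`.

```python
import base64
import fnmatch
import json


def _b64url_encode(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()


def _b64url_decode(segment: str) -> bytes:
    # JWT segments are base64url without padding; restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def make_unsigned_token(claims: dict) -> str:
    """Build a JWT-shaped string for demonstration only (no real signature)."""
    header = _b64url_encode(json.dumps({"alg": "none"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    return f"{header}.{payload}."


def decode_claims(token: str) -> dict:
    """Return the JWT payload. WARNING: this does NOT verify the signature."""
    _header, payload, _signature = token.split(".")
    return json.loads(_b64url_decode(payload))


def trust_policy_allows(claims: dict, issuer: str, audience: str, subject_pattern: str) -> bool:
    """Simplified version of the claim conditions an IAM trust policy checks."""
    return (
        claims.get("iss") == issuer
        and claims.get("aud") == audience
        and fnmatch.fnmatchcase(claims.get("sub", ""), subject_pattern)
    )
```

Scoping the subject pattern to one repository and branch is what prevents a token minted for any other repository from assuming the role.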

Technical Evaluation Checklist for CI/CD Providers

Use this checklist to structure your technical due diligence during vendor demos and proof-of-concept trials. The answers will reveal the platform's architectural maturity and its suitability for enterprise-scale workloads.

| Evaluation Category | Key Technical Questions to Ask |
|---|---|
| Tech Stack & Workflow | What specific caching mechanisms (e.g., dependency, layer, distributed) are supported to optimize build times for our stack? |
| | Demonstrate the YAML syntax for running service containers (e.g., a database) for integration testing. |
| | What are the options and limitations for using custom-built VM or container images for our runners? |
| Integrations | What is your recommended method for passwordless authentication to cloud providers like AWS or GCP? Do you support OIDC? |
| | Show me an example of integrating a third-party SAST/SCA scanner into a pipeline. |
| | Is there a comprehensive and versioned REST or GraphQL API for programmatically managing all platform resources? |
| Security & Compliance | What is the mechanism for managing secrets? Is there a built-in vault, or does it integrate with external ones like HashiCorp Vault or AWS Secrets Manager? |
| | What is the granularity of your Role-Based Access Control (RBAC)? Can we create custom roles with specific permissions (e.g., pipeline-operator)? |
| | Are you SOC 2 Type II compliant? Can we review the full attestation report under NDA? |
| Performance & Scale | How does runner auto-scaling work? What is the P95 spin-up time for a new runner? |
| | What caching strategies (e.g., layer, dependency) do you support out-of-the-box? |
| | What are the hard and soft limits on job concurrency, and how does the pricing model scale with increased concurrency? |
| Cost & Support | Provide a detailed breakdown of your pricing model (e.g., cost per build-minute, concurrency tiers, data transfer fees). |
| | Are there additional costs for artifact storage, network egress, or advanced features like OIDC? |
| | What are the defined SLAs for platform uptime and support response times? What does the technical onboarding process entail? |


Scrutinizing Security and Performance at Scale

A CI/CD system that is performant for a small team can become a productivity bottleneck for a large engineering organization. You must evaluate any platform through the lens of your future scale, not your current needs.

A provider's approach to security is a direct reflection of its maturity. Key features like granular role-based access control (RBAC), built-in secrets management, and transparent compliance certifications (like SOC 2 Type II) are non-negotiable for any serious enterprise.

Slow pipelines impose a direct "developer productivity tax." Every minute a developer waits for build feedback is wasted. Performance-enhancing features are therefore critical.

Intelligent caching (e.g., dependency, Docker layer), job parallelization, and auto-scaling runners are essential for maintaining tight feedback loops. Caching, in particular, can slash build times by reusing artifacts and dependencies from previous runs, yielding significant savings in both time and cost.
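Dependency caches are usually keyed on a hash of the lock files, so a cache entry is reused until the dependencies actually change (GitHub Actions, for example, exposes a `hashFiles()` expression for this purpose). The Python sketch below shows the idea; the key format is an assumption, not any platform's convention.

```python
import hashlib
from pathlib import Path


def cache_key(prefix: str, runner_os: str, lockfiles: dict) -> str:
    """Derive a cache key that changes whenever any lockfile's content changes.

    `lockfiles` maps a file name to its raw bytes.
    """
    h = hashlib.sha256()
    for name in sorted(lockfiles):  # sort so input order never changes the key
        # NUL separators keep ("ab", "c") distinct from ("a", "bc").
        h.update(name.encode() + b"\0")
        h.update(lockfiles[name] + b"\0")
    return f"{prefix}-{runner_os}-{h.hexdigest()[:16]}"


def cache_key_from_files(prefix: str, runner_os: str, paths: list) -> str:
    """Convenience wrapper that reads the lockfiles from disk."""
    return cache_key(prefix, runner_os, {str(Path(p)): Path(p).read_bytes() for p in paths})
```

Including the runner OS in the key keeps caches built on one platform from being restored onto an incompatible one.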

Your Step-by-Step Plan for Rolling Out CI/CD

Adopting CI/CD as a service is an incremental process, not a "big bang" cutover. A phased rollout minimizes risk, builds momentum, and allows the team to develop best practices along the way. Attempting to automate everything at once is a common failure pattern.

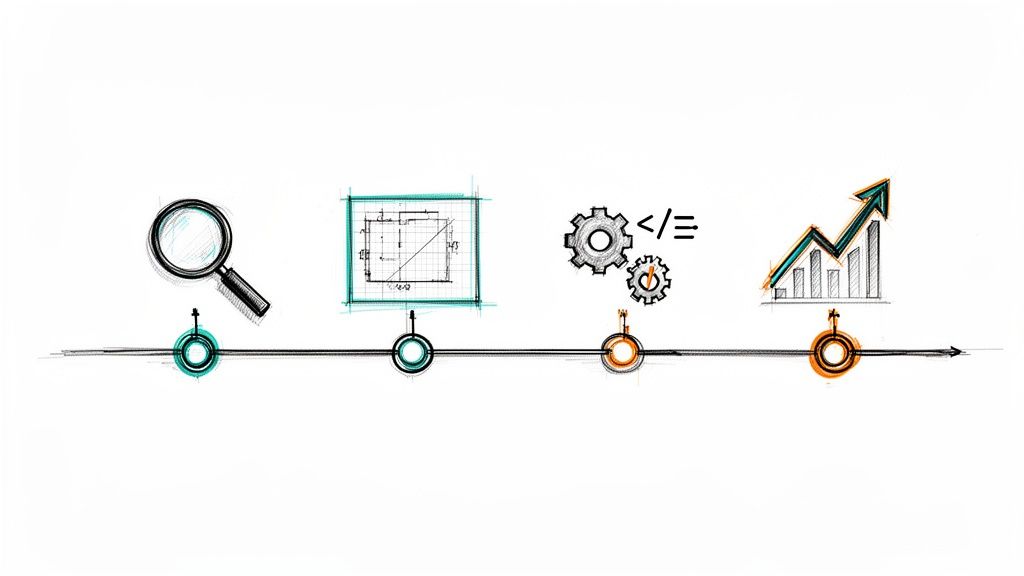

This four-phase roadmap provides a structured approach, starting with a low-risk pilot and progressively building toward a fully automated, production-grade delivery pipeline.

The key is to start small with a well-chosen project to demonstrate value and secure buy-in for broader adoption.

Phase 1: Pick a Pilot Project

The objective of the pilot is to serve as a proof-of-concept and generate internal advocacy. Select a project that is low-risk but offers high visibility, making the benefits of automation immediately apparent.

Ideal pilot project characteristics:

- Well-Defined Scope: A single microservice or a "greenfield" project with minimal legacy dependencies is ideal.

- Motivated Team: Choose an engineering team that is enthusiastic about adopting new tools and improving their workflow.

- Measurable Impact: Select a service where improvements in deployment frequency and stability (e.g., reduced change failure rate) are easily quantifiable.
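To keep the pilot's impact quantifiable, a baseline can be computed from deployment records. The Python sketch below derives three of the four DORA metrics (deployment frequency, change failure rate, and median lead time for changes) from a hypothetical `Deployment` record; time to restore service is omitted because it needs incident data. Where the records come from (your CI/CD platform's API or your observability tool) is left as an assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Deployment:
    commit_time: datetime  # when the change was committed
    deploy_time: datetime  # when it reached production
    caused_failure: bool   # whether it led to an incident or rollback


def dora_summary(deploys: list, window_days: int) -> dict:
    """Summarize deployments observed over a window of `window_days` days."""
    if not deploys or window_days <= 0:
        raise ValueError("need at least one deployment and a positive window")
    lead_hours = [(d.deploy_time - d.commit_time).total_seconds() / 3600 for d in deploys]
    return {
        "deploys_per_day": len(deploys) / window_days,
        "change_failure_rate": sum(d.caused_failure for d in deploys) / len(deploys),
        "median_lead_time_hours": median(lead_hours),
    }


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 9, 0)
    history = [  # illustrative records, not real data
        Deployment(t0, t0 + timedelta(hours=2), False),
        Deployment(t0, t0 + timedelta(hours=4), True),
        Deployment(t0, t0 + timedelta(hours=6), False),
        Deployment(t0, t0 + timedelta(hours=8), False),
    ]
    print(dora_summary(history, window_days=2))
```

Capturing this baseline before the pilot starts gives a like-for-like comparison once the new pipeline is live.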

A successful pilot provides the technical template and the political capital necessary to scale the initiative across the organization.

Phase 2: Build the Core Pipeline

With a pilot project selected, the next step is to implement a foundational CI pipeline. The goal is to establish a basic, reliable automation loop that executes on every git commit. This pipeline should perform the essential validation steps for any code change.

The core pipeline must automate the following stages:

- Code Checkout: Clone the specific commit hash from the version control system.

- Dependency Installation: Install required libraries and packages using a lock file (e.g., `package-lock.json`, `go.mod`) for reproducibility.

- Code Compilation: If applicable, compile the application from source.

- Unit Testing: Execute the full suite of unit tests to validate individual components in isolation.

Success at this stage is defined by providing rapid, binary feedback (a "green" or "red" build status) to the developer within minutes of their push.
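The "green or red" contract boils down to running stages in order and stopping at the first non-zero exit code. Below is a minimal Python sketch of that fail-fast behavior using `subprocess`; the demo stages just invoke the Python interpreter so the example runs anywhere, whereas a real pipeline would run commands such as `npm ci` and `npm test`.

```python
import subprocess
import sys
from dataclasses import dataclass


@dataclass
class StageResult:
    name: str
    returncode: int


def run_pipeline(stages: list) -> tuple:
    """Run (name, argv) stages in order; stop at the first failing stage.

    Returns (passed, results), where `passed` is True only if every stage
    exited with code 0.
    """
    results = []
    for name, argv in stages:
        completed = subprocess.run(argv)
        results.append(StageResult(name, completed.returncode))
        if completed.returncode != 0:
            return False, results  # red build: later stages never run
    return True, results  # green build


if __name__ == "__main__":
    py = sys.executable
    demo = [
        ("install", [py, "-c", "print('installing dependencies')"]),
        ("compile", [py, "-c", "print('compiling')"]),
        ("unit-test", [py, "-c", "raise SystemExit(1)"]),  # simulate a failing test
    ]
    passed, results = run_pipeline(demo)
    print("GREEN" if passed else "RED", [(r.name, r.returncode) for r in results])
```

Stopping early is deliberate: there is no point packaging or deploying a commit whose unit tests already failed, and it returns the red status to the developer sooner.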

Phase 3: Add Automated Quality Gates

With a stable CI pipeline established, you can begin to layer in more sophisticated automated checks. These "quality gates" act as automated, non-negotiable checkpoints that prevent low-quality or insecure code from progressing down the pipeline.

This is the practical application of "shifting left"—embedding quality and security validation directly into the development workflow.

A mature CI pipeline doesn't just build code; it builds confidence. By codifying your quality and security standards into automated gates, you create a system where every commit is vetted against the team's best practices, making deployments a predictable and low-stress event.

Integrate the following key gates:

- Static Code Analysis (SAST): Integrate a linter or static analysis tool (e.g., SonarQube, ESLint) to enforce coding standards and detect common bugs and security anti-patterns.

- Software Composition Analysis (SCA): Use a tool like Snyk or Trivy to scan open-source dependencies for known vulnerabilities (CVEs).

- Integration Testing: Execute tests that verify the interaction between different components or between the application and external services like a database or API.
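A quality gate is ultimately a rule that turns scan output into a pass/fail exit code. The Python sketch below applies a severity threshold to a simplified, hypothetical findings format (real scanners emit richer JSON or SARIF). Many scanners can also enforce this directly, for example Trivy's `--severity` and `--exit-code` flags.

```python
SEVERITY_RANK = {"LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


def evaluate_gate(findings: list, fail_at: str = "HIGH") -> tuple:
    """Return (passed, blocking): blocking lists findings at or above `fail_at`."""
    threshold = SEVERITY_RANK[fail_at.upper()]
    blocking = [
        f for f in findings
        # Unknown or missing severities rank 0 and never block.
        if SEVERITY_RANK.get(str(f.get("severity", "")).upper(), 0) >= threshold
    ]
    return not blocking, blocking


def gate_exit_code(findings: list, fail_at: str = "HIGH") -> int:
    """Exit code a CI step would return: non-zero fails the job."""
    passed, _ = evaluate_gate(findings, fail_at)
    return 0 if passed else 1


if __name__ == "__main__":
    # Hypothetical findings; a real job would parse the scanner's report file.
    findings = [
        {"package": "pkg-a", "severity": "LOW"},
        {"package": "pkg-b", "severity": "CRITICAL"},
    ]
    passed, blocking = evaluate_gate(findings)
    for f in blocking:
        print(f"blocking: {f['package']} ({f['severity']})")
    print("exit code:", gate_exit_code(findings))
```

Keeping the threshold as a parameter lets teams start permissive (for example, blocking only `CRITICAL`) and tighten the gate as the backlog of existing findings shrinks.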

Phase 4: Automate Staging and Production Deployments

This final phase connects the CI pipeline to your deployment environments. The goal is to make deployment to staging and production a fully automated or one-click, low-risk process.

Implement modern deployment strategies to de-risk this process:

- Blue-Green Deployment: Deploy the new application version to an identical "green" production environment. After validation, cut over traffic from the old "blue" environment. This allows for near-instantaneous rollback by simply redirecting traffic back to blue.

- Canary Release: Route a small percentage of production traffic (e.g., 1%) to the new version. Monitor error rates and key performance indicators. Gradually increase traffic to the new version as confidence grows, eventually reaching 100%.

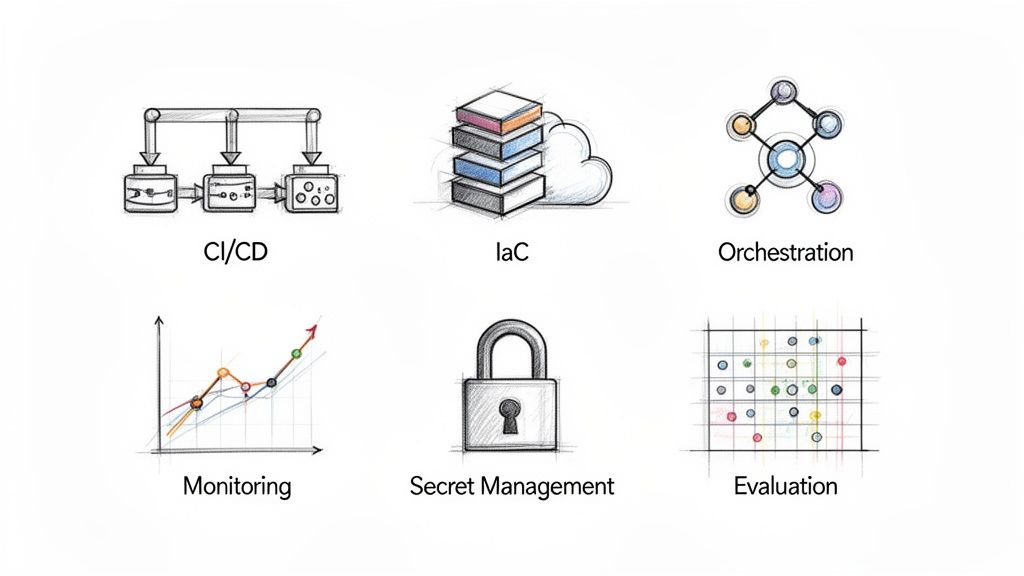

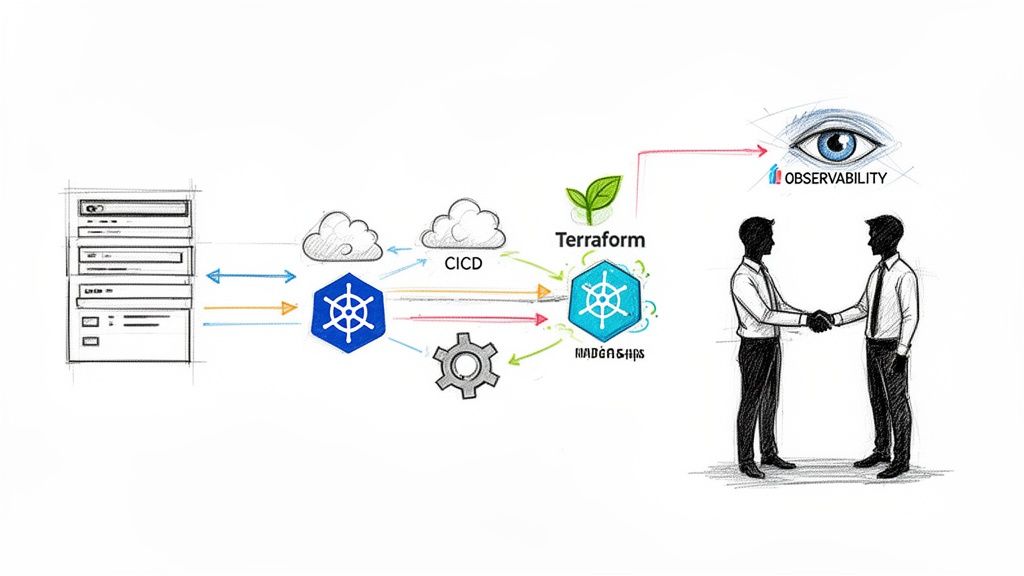
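The control logic behind a canary release can be sketched as a loop over traffic steps with an automated rollback condition. In the Python sketch below, the step percentages and error-rate threshold are illustrative assumptions, and `error_rate_at` is a hypothetical callback standing in for a query to your metrics backend; shifting the traffic itself (via a load balancer, service mesh, or deployment controller) is out of scope.

```python
from typing import Callable, List, Tuple


def run_canary(
    steps: List[int],
    error_rate_at: Callable[[int], float],
    max_error_rate: float,
) -> Tuple[str, int]:
    """Walk traffic through `steps` (percent of requests sent to the new version).

    Returns ("promoted", last step) if every step stays within the threshold,
    or ("rolled_back", percent) at the first step whose error rate exceeds it.
    """
    for percent in steps:
        # In practice: shift `percent` of traffic, wait a soak period,
        # then read the observed error rate for the new version.
        observed = error_rate_at(percent)
        if observed > max_error_rate:
            return "rolled_back", percent
    return "promoted", steps[-1]
```

A blue-green cutover behaves like the degenerate case `steps=[100]`, where rolling back means pointing traffic back at the blue environment.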

Two foundational practices are essential for this phase: Infrastructure as Code (IaC), using tools like Terraform to version-control and automate the provisioning of environments, and Observability, which provides the telemetry (logs, metrics, traces) needed to monitor pipeline performance and validate the health of deployments.

Advanced Strategies for Security Scale and Cost Control

Once your pipelines are operational, the focus shifts from implementation to optimization. For senior engineers and Site Reliability Engineers (SREs), this involves hardening the security posture, engineering for performance at scale, and implementing FinOps practices to manage the cost of your CI/CD as a service platform.

This is about evolving from a basic software delivery pipeline to a highly optimized, secure, and cost-efficient software factory.

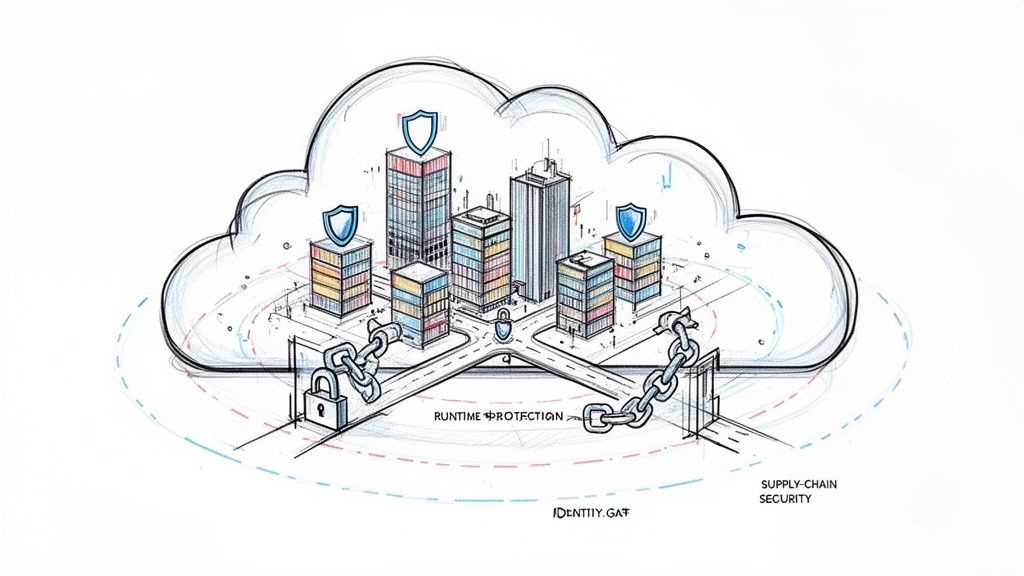

Fortifying Your Software Supply Chain

Modern application security extends beyond the application code to the entire software supply chain. Attackers now target the CI/CD pipeline itself, aiming to inject malicious code or steal credentials. Securing this pipeline is a critical defense-in-depth measure.

The SLSA (Supply-chain Levels for Software Artifacts) framework provides a concrete model for this. Implementing SLSA principles involves several technical controls:

- Signed Builds: Integrate tools like Sigstore's Cosign to cryptographically sign build artifacts (e.g., container images). The resulting signature, optionally paired with a signed provenance attestation, lets downstream consumers verify the artifact's origin and confirm it has not been tampered with since its creation in a trusted pipeline.

- Dynamic Secrets Injection: Eliminate static, long-lived credentials from your pipeline configuration. Use your CI/CD provider's OIDC integration or a secrets management plugin to fetch credentials dynamically from a secure store like AWS Secrets Manager or HashiCorp Vault. Secrets are injected into the job environment just-in-time and are short-lived.

- Least-Privilege Access Controls: Apply the principle of least privilege to pipeline execution roles. The temporary credentials used by a job should have the minimum permissions necessary to complete its task; for example, `s3:PutObject` to a specific bucket prefix, not `s3:*`.
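As a concrete illustration of that least-privilege example, the Python sketch below generates an IAM-style policy document that allows only `s3:PutObject` under one bucket prefix, plus a deliberately simplified evaluator to show what the policy permits. The bucket and prefix names are hypothetical, and the evaluator ignores Deny statements, conditions, case-insensitive action matching, and the other policy types that real AWS IAM evaluation considers.

```python
import fnmatch
import json


def upload_only_policy(bucket: str, prefix: str) -> dict:
    """IAM-style policy allowing only s3:PutObject under one key prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/{prefix}/*"],
            }
        ],
    }


def is_allowed(policy: dict, action: str, resource: str) -> bool:
    """Greatly simplified check: Allow statements with wildcard matching only."""
    for stmt in policy["Statement"]:
        if stmt["Effect"] != "Allow":
            continue
        action_ok = any(fnmatch.fnmatchcase(action, a) for a in stmt["Action"])
        resource_ok = any(fnmatch.fnmatchcase(resource, r) for r in stmt["Resource"])
        if action_ok and resource_ok:
            return True
    return False  # anything not explicitly allowed is denied


if __name__ == "__main__":
    policy = upload_only_policy("example-artifacts", "builds/my-service")  # hypothetical names
    print(json.dumps(policy, indent=2))
```

If a compromised job tried to delete objects or write outside its prefix, a policy like this would leave it with nothing to work with.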

We dive deeper into this topic in our guide on DevSecOps in CI/CD.

Designing for Performance at Scale

As developer headcount and commit frequency grow, pipeline execution time becomes a significant factor in overall engineering productivity. A slow pipeline is a drag on the entire development cycle. Optimizing for speed is a core SRE function.

A slow pipeline is a tax on every developer. At scale, optimizing build performance with advanced caching, parallelization, and intelligent runner management is not a luxury—it's a core requirement for maintaining high-velocity development.

To improve pipeline performance, implement these technical strategies:

- Advanced Caching Strategies: Go beyond simple dependency caching. Implement multi-level caching for Docker layers, test results, and intermediate build artifacts. A well-configured distributed cache (e.g., using S3 or a dedicated cache service) can substantially reduce build times by avoiding redundant computation across different pipeline runs.

- Build Parallelization: Decompose monolithic pipeline jobs into smaller, independent tasks that can be executed concurrently in a DAG (Directed Acyclic Graph). For example, run linting, unit tests, and security scanning in parallel to provide developers with the fastest possible feedback.

- Auto-Scaling Runner Pools: For self-hosted runners, implement auto-scaling based on job queue depth. Use technologies like Kubernetes-based runners (e.g., Actions Runner Controller for GitHub) or custom EC2 Auto Scaling Groups to dynamically provision and de-provision runner capacity, matching compute resources to demand.
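The core of queue-depth auto-scaling is a small sizing rule. The Python sketch below is one plausible version, not the algorithm used by Actions Runner Controller or any other specific tool: it sizes the pool to cover busy runners plus queued jobs, then clamps the result to configured limits. All parameter names and defaults are assumptions.

```python
import math


def desired_runners(
    queued_jobs: int,
    busy_runners: int,
    jobs_per_runner: int = 1,
    min_runners: int = 0,
    max_runners: int = 20,
) -> int:
    """Return how many runners the pool should have right now.

    Keeps every busy runner, adds enough capacity for the queued jobs,
    and clamps the result to [min_runners, max_runners].
    """
    if jobs_per_runner < 1:
        raise ValueError("jobs_per_runner must be at least 1")
    needed = busy_runners + math.ceil(queued_jobs / jobs_per_runner)
    return max(min_runners, min(max_runners, needed))
```

A controller would evaluate this on every scaling tick and launch or drain instances to close the gap; `min_runners` keeps a warm pool for fast pickup, while `max_runners` caps spend during commit bursts.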

Pinpointing and Eliminating Cost Inefficiencies

The pay-as-you-go model of managed CI/CD services can lead to significant and unexpected costs if not properly governed. A FinOps approach, combining data analysis with technical optimization, is essential for cost control.

Start by using the provider's analytics dashboards to identify the most expensive jobs (by build-minute consumption). Then, apply targeted optimizations:

- Leverage Spot Instances: Configure your self-hosted runner auto-scaling groups to use spot or preemptible instances. Given that CI jobs are typically stateless and idempotent, they are highly tolerant of interruptions, making them an ideal workload for spot capacity, which can offer savings of up to 90% over on-demand pricing.

- Right-Size Compute: Analyze the CPU and memory consumption of your pipeline jobs. Many jobs, like linting or running small test suites, do not require large, expensive runners. Create multiple runner pools with different instance sizes and route jobs to the appropriately-sized pool using labels or tags.

- Optimize Your YAML: Profile your pipeline configuration to identify inefficient scripts, redundant steps, or suboptimal caching. A single poorly configured step that consistently misses the cache can have an outsized impact on your monthly bill.
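Routing jobs to right-sized pools amounts to picking the cheapest pool that still satisfies a job's resource needs. In the Python sketch below, the pool labels, sizes, and per-minute prices are invented placeholders; on a real platform the chosen pool would map to a runner label or tag in the job definition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunnerPool:
    label: str              # runner label/tag that jobs target
    vcpus: int
    memory_gb: int
    cost_per_minute: float  # placeholder price


def pick_pool(pools: list, vcpus_needed: int, memory_needed_gb: int) -> RunnerPool:
    """Return the cheapest pool whose instances satisfy the job's requirements."""
    candidates = [
        p for p in pools
        if p.vcpus >= vcpus_needed and p.memory_gb >= memory_needed_gb
    ]
    if not candidates:
        raise ValueError("no pool is large enough for this job")
    return min(candidates, key=lambda p: p.cost_per_minute)


if __name__ == "__main__":
    pools = [
        RunnerPool("small", 2, 4, 0.004),
        RunnerPool("medium", 4, 16, 0.008),
        RunnerPool("large", 16, 64, 0.032),
    ]
    print(pick_pool(pools, 1, 2).label)   # a lint job
    print(pick_pool(pools, 8, 32).label)  # a heavy integration build
```

The resource requirements per job would come from the CPU and memory profiling described above, so routing decisions are grounded in measured usage rather than guesses.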

The CI tools market reflects this need for flexibility, with hybrid deployments becoming a key reason for growth. This is part of a larger trend, with the Continuous Integration Tools market expected to grow from $2.09 billion in 2026 to $5.36 billion by 2031. For a closer look at these trends, you can check out the full continuous integration market analysis on Mordor Intelligence.

Frequently Asked Questions

Let's address some of the most common technical and practical questions that arise when teams evaluate and adopt CI/CD as a Service.

How Is This Different from Running Our Own Jenkins Server?

The fundamental difference is the operational model. A self-hosted Jenkins server provides maximum control and customization but imposes a significant operational burden: you are responsible for provisioning, patching, scaling, and securing the entire stack (controller, agents, plugins, and underlying infrastructure).

With CI/CD as a Service, you offload this undifferentiated heavy lifting. The provider manages the control plane and (in a SaaS model) the execution plane. Your team's responsibility shifts from infrastructure management to defining pipeline logic as code. This often results in a lower Total Cost of Ownership (TCO) when factoring in engineering hours spent on maintenance.

Can We Use CI/CD as a Service with Strict Compliance Needs?

Yes. This is a primary driver for the adoption of the hybrid model. For organizations in regulated industries like finance (PCI-DSS), healthcare (HIPAA), or government (FedRAMP), a pure SaaS model is often a non-starter due to data residency and processing requirements.

The solution is a hybrid architecture where the provider manages the control plane, but the build agents (runners) are self-hosted within your own secure network (e.g., a VPC or on-premise data center). This architecture ensures that your source code, secrets, and build artifacts never leave your network boundary, satisfying most compliance and security auditors.

What Is the Most Common Mistake Teams Make When Starting?

The most common failure pattern is attempting a "big bang" migration of a complex, legacy monolithic application as the first project. These projects are often fraught with undocumented build processes, flaky tests, and deep-seated environmental dependencies, making them poor candidates for an initial pilot.

The recommended approach is to start with a "greenfield" project or a well-encapsulated microservice. This allows the team to learn the new platform, establish best practices, and achieve a quick win. This initial success builds momentum and provides a proven template that can then be adapted and rolled out to more complex applications across the organization.

To make your deployments even more robust, you will eventually want to add detailed regression testing. Resources such as Improvements in Regression Testing API for CI/CD Integration show how critical continuous quality checks are for keeping delivery secure and scalable without letting costs run wild.

Ready to build a world-class CI/CD practice without the operational headache? At OpsMoon, we connect you with elite DevOps engineers to design and implement the perfect CI/CD strategy for your business. Start with a free work planning session and get matched with top-tier talent to accelerate your software delivery. Explore our DevOps services at OpsMoon.