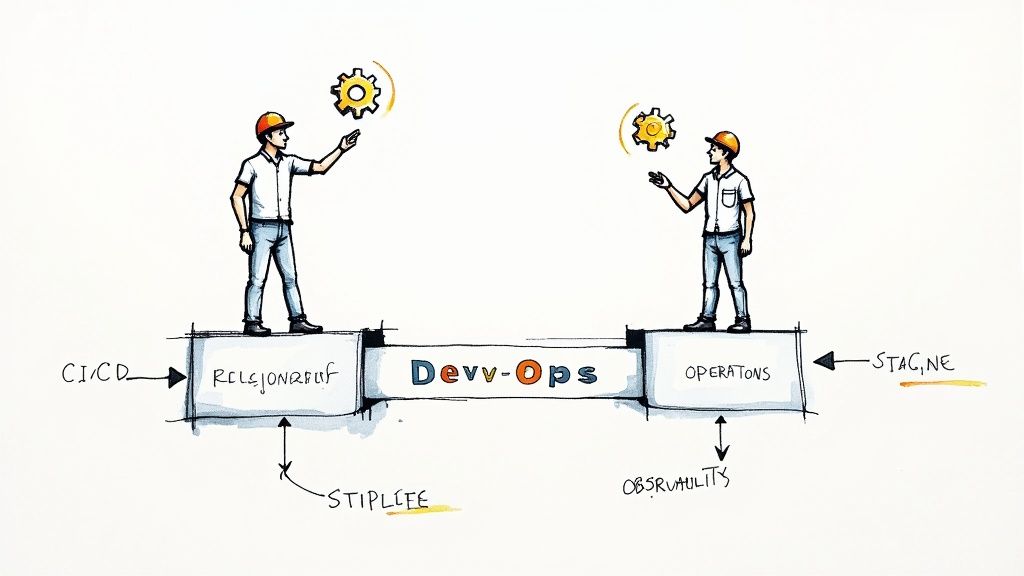

A DevOps consulting firm is a specialized engineering partner that architects and implements automated software delivery pipelines. Their primary function is to integrate development (Dev) and operations (Ops) teams by introducing automation, codified infrastructure, and a culture of shared ownership. The objective is to increase deployment frequency while improving system reliability and security.

This is achieved by systematically re-engineering the entire software development lifecycle (SDLC), from code commit to production monitoring, enabling organizations to release high-quality software with greater velocity.

What a DevOps Consulting Firm Actually Does

A DevOps consulting firm's core task is to transform a manual, high-latency, and error-prone software release process into a highly automated, low-risk, and resilient system. They achieve this by implementing a combination of technology, process, and cultural change.

Their engagement is not about simply recommending tools; it's about architecting and building a cohesive ecosystem where code can flow from a developer's integrated development environment (IDE) to a production environment with minimal human intervention. This involves breaking down organizational silos between development, QA, security, and operations teams to create a single, cross-functional team responsible for the entire application lifecycle.

Core Technical Domains of a DevOps Consultancy

To build this high-velocity engineering capability, a competent DevOps consultancy must demonstrate deep expertise across several interconnected technical domains. These disciplines are the foundational pillars for measurable improvements in deployment frequency, lead time for changes, mean time to recovery (MTTR), and change failure rate.

This table breaks down the key functions and the specific technologies they implement:

| Technical Domain | Strategic Objective | Common Toolchains |
|---|---|---|
| CI/CD Pipelines | Implement fully automated build, integration, testing, and deployment workflows triggered by code commits. | Jenkins, GitLab, GitHub Actions, CircleCI |
| Infrastructure as Code (IaC) | Define, provision, and manage infrastructure declaratively using version-controlled code for idempotent and reproducible environments. | Terraform, Ansible, Pulumi, AWS CloudFormation |
| Cloud & Containerization | Architect and manage scalable, fault-tolerant applications using cloud-native services and container orchestration platforms. | AWS, Azure, GCP, Docker, Kubernetes |
| Observability & Monitoring | Instrument applications and infrastructure to collect metrics, logs, and traces for proactive issue detection and performance analysis. | Prometheus, Grafana, Datadog, Splunk |
| Security (DevSecOps) | Integrate security controls, vulnerability scanning, and compliance checks directly into the CI/CD pipeline ("shifting left"). | Snyk, Checkmarx, HashiCorp Vault |

Each domain is a critical component of a holistic DevOps strategy, designed to create a feedback loop that continuously improves the speed, quality, and security of the software delivery process.

The Strategic Business Impact

The core technical deliverable of a DevOps firm is advanced workflow automation. This intense focus on automation is precisely why the DevOps market is experiencing significant growth.

The global DevOps market was recently valued at $18.4 billion and is on track to hit $25 billion. It is no longer a niche methodology; a staggering 80% of Global 2000 companies now have dedicated DevOps teams, demonstrating its criticality in modern enterprise IT.

A DevOps consulting firm fundamentally re-architects an organization's software delivery capability. The engagement shifts the operational model from infrequent, high-risk deployments to a continuous flow of validated changes, transforming technology from a cost center into a strategic business enabler.

Engaging a firm is an investment in adopting new operational models and engineering practices, not just procuring tools. For companies committed to modernizing their technology stack, this partnership is essential. You can explore the technical specifics in our guide on DevOps implementation services.

Evaluating Core Technical Service Offerings

When you engage a DevOps consulting firm, you are procuring expert-level engineering execution. The primary value is derived from the implementation of specific, measurable technical services. It is crucial to look beyond strategic presentations and assess their hands-on capabilities in building and managing modern software delivery systems.

A high-quality firm integrates these services into a cohesive, automated system, creating a positive feedback loop that accelerates development velocity and improves operational stability.

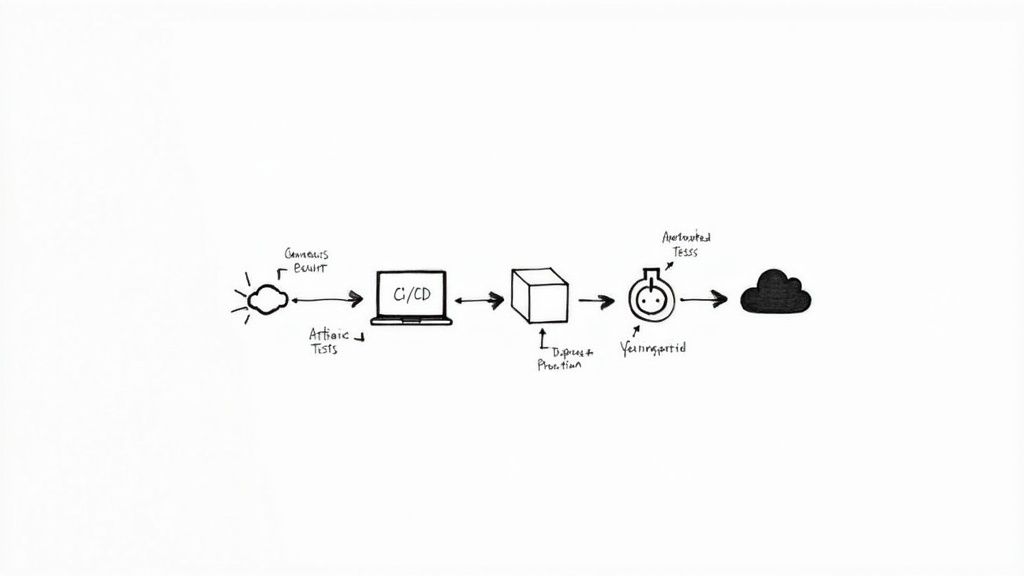

CI/CD Pipeline Construction and Automation

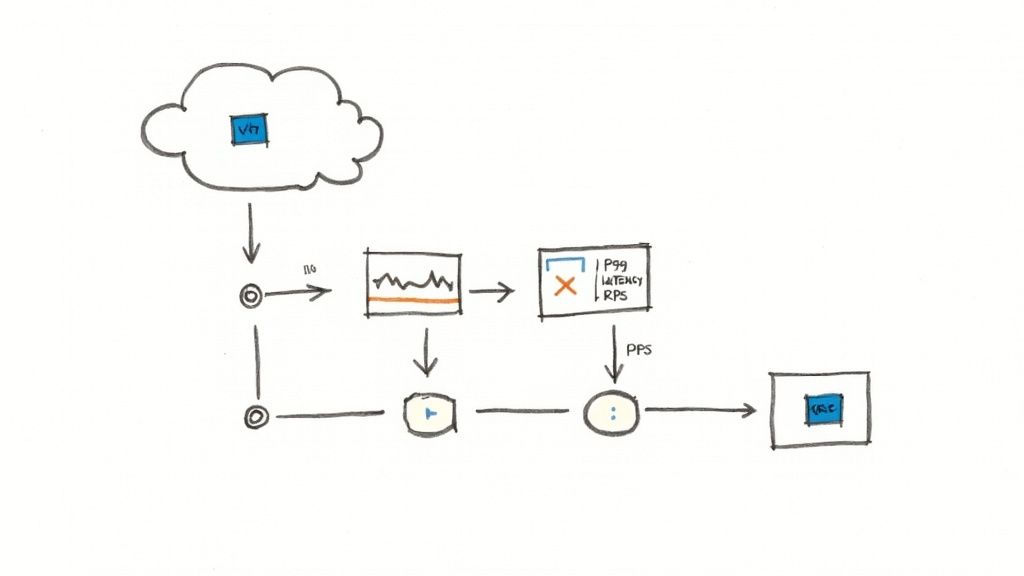

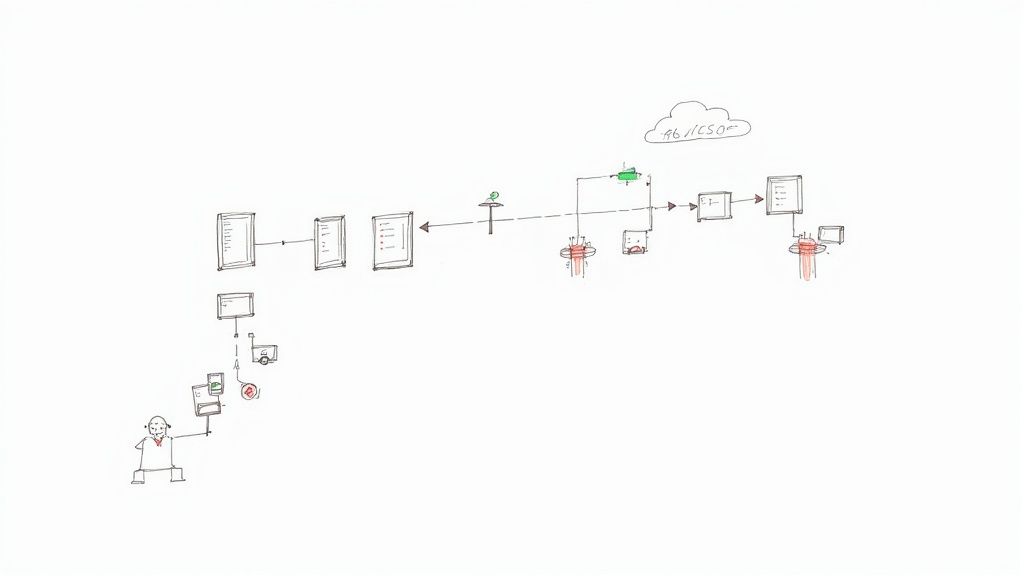

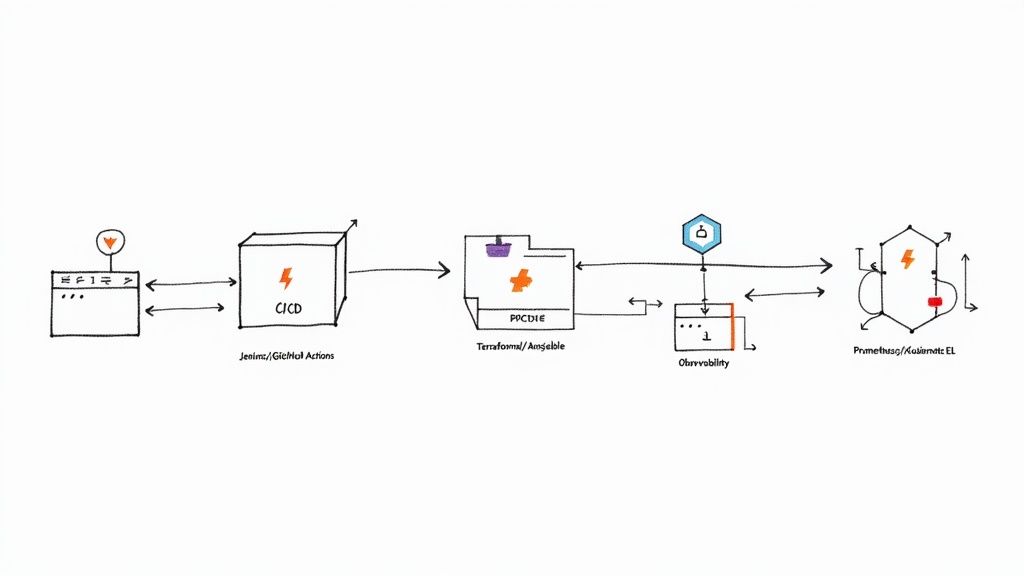

The Continuous Integration/Continuous Deployment (CI/CD) pipeline is the core engine of a DevOps practice. It's an automated workflow that compiles, tests, and deploys code from a source code repository to production. A proficient firm architects a multi-stage, gated pipeline, not merely a single script.

A typical implementation involves these technical stages:

- Source Code Management (SCM) Integration: Configuring webhooks in Git repositories (e.g., GitHub, GitLab) to trigger pipeline executions in tools like GitLab CI or GitHub Actions upon every `git push` or merge request.

- Automated Testing Gates: Scripting sequential testing stages (unit, integration, SAST, end-to-end) that act as quality gates. A failure in any stage halts the pipeline, preventing defective code from progressing and providing immediate feedback to the developer.

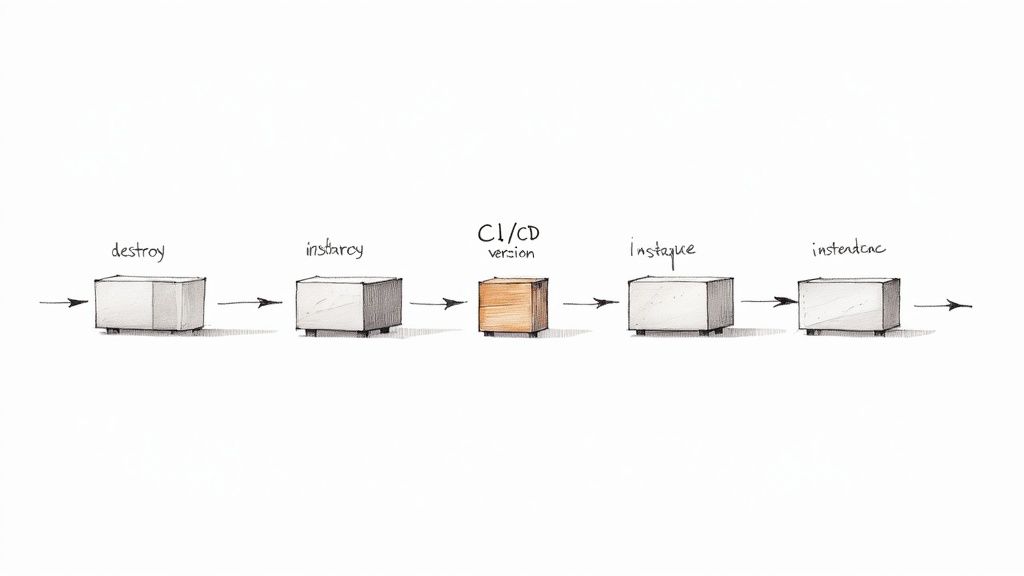

- Artifact Management: Building and versioning immutable artifacts, such as Docker images or JAR files, and pushing them to a centralized binary repository like JFrog Artifactory. This ensures every deployment uses a consistent, traceable build.

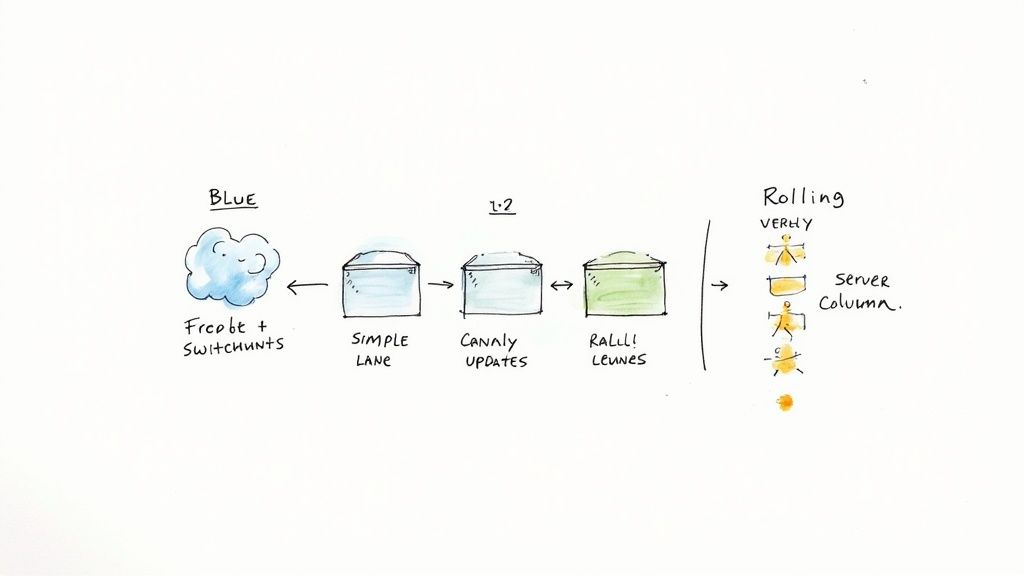

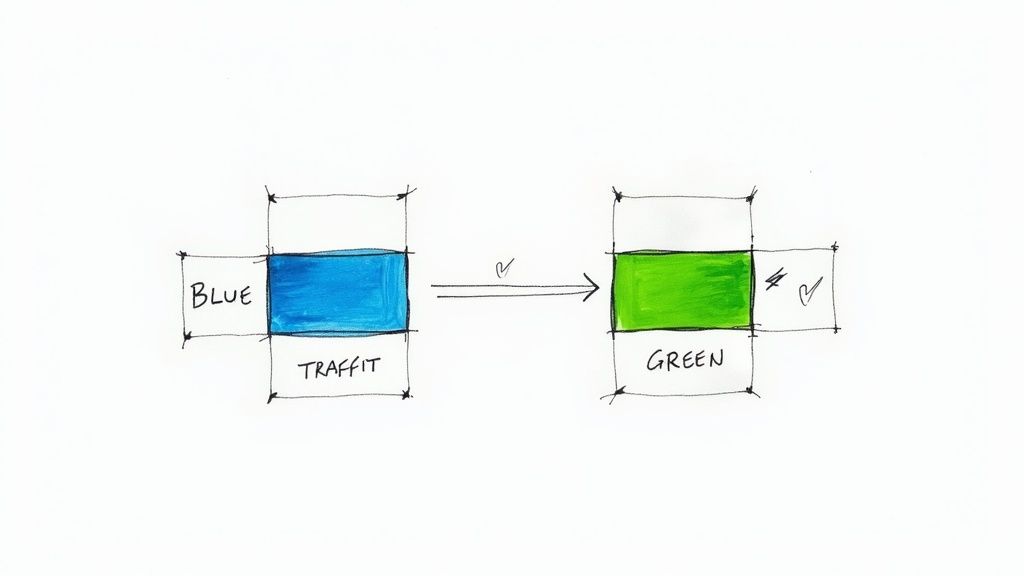

- Secure Deployment Strategies: Implementing deployment patterns like Blue/Green, Canary, or Rolling updates to release new code to production with zero downtime and provide a mechanism for rapid rollback in case of failure.
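The gating behavior described in the stages above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular CI tool is implemented: the `Stage` class, the `run_pipeline` function, and the lambda checks are hypothetical stand-ins for real test runners and scanners.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Stage:
    name: str
    check: Callable[[], bool]  # returns True when the quality gate passes


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failing gate."""
    executed: List[str] = []
    for stage in stages:
        executed.append(stage.name)
        if not stage.check():
            # A failed gate halts the pipeline; later stages never run.
            return False, executed
    return True, executed


# Hypothetical checks; a real pipeline would invoke test suites and scanners.
stages = [
    Stage("unit", lambda: True),
    Stage("integration", lambda: True),
    Stage("sast", lambda: False),  # simulated security finding
    Stage("end-to-end", lambda: True),
    Stage("publish-artifact", lambda: True),
]

ok, executed = run_pipeline(stages)
print("pipeline passed:", ok)
print("stages executed:", executed)
```

Because the simulated SAST stage fails, the end-to-end tests and the artifact publish step are never reached, which is the property that keeps defective builds out of the artifact repository.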

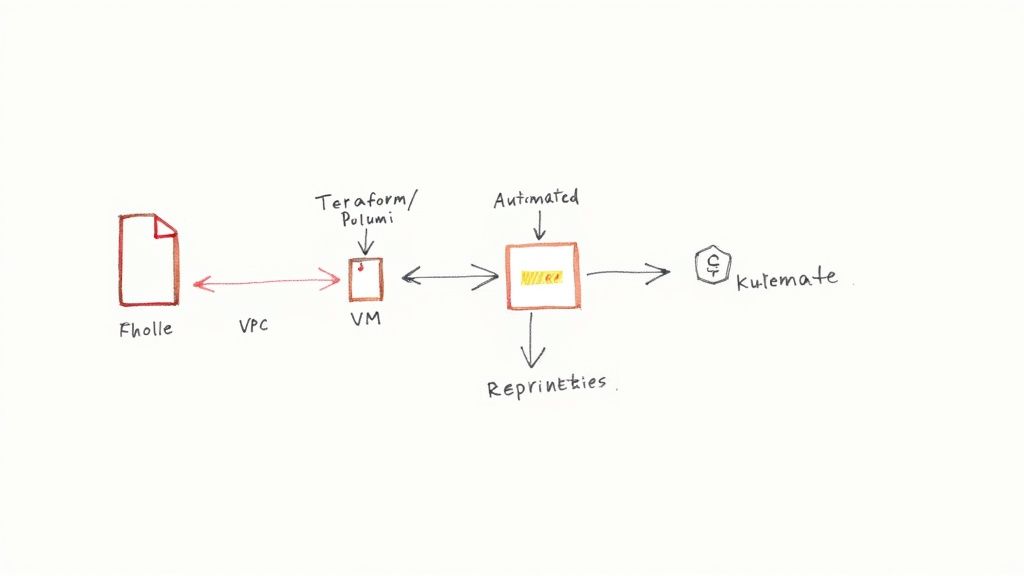

Infrastructure as Code Implementation

Manual infrastructure management is non-scalable, prone to human error, and a primary source of configuration drift. Infrastructure as Code (IaC) solves this by using declarative code to define and provision infrastructure. A DevOps consulting firm will use tools like Terraform or Ansible to manage the entire cloud environment—from VPCs and subnets to Kubernetes clusters and databases—as version-controlled code.

By treating infrastructure as software, IaC makes provisioning idempotent and environments auditable and disposable. This greatly reduces the "it works on my machine" problem by keeping development, staging, and production environments defined from the same code and therefore closely aligned.

This technical capability allows a consultant to programmatically spin up an exact replica of a production environment for testing in minutes and destroy it afterward to control costs. IaC is the foundation for building stable, predictable systems on any major cloud platform (AWS, Azure, GCP).
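The idea behind declarative, idempotent provisioning can be illustrated with a small Python sketch. It is a simplified model under stated assumptions, not how Terraform or Ansible work internally: the resource names, the dictionary-based state, and the `plan` and `apply` functions are all hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical state model: resource name -> attribute dictionary.
Resources = Dict[str, Dict[str, object]]


def plan(desired: Resources, current: Resources) -> List[Tuple[str, str]]:
    """Compute the actions needed to move current state to desired state."""
    actions: List[Tuple[str, str]] = []
    for name, spec in sorted(desired.items()):
        if name not in current:
            actions.append(("create", name))
        elif current[name] != spec:
            actions.append(("update", name))
    for name in sorted(current):
        if name not in desired:
            actions.append(("delete", name))
    return actions


def apply(desired: Resources) -> Resources:
    """Pretend to provision: the resulting state mirrors the desired state."""
    return {name: dict(spec) for name, spec in desired.items()}


desired = {
    "vpc-main": {"cidr": "10.0.0.0/16"},
    "db-primary": {"engine": "postgres", "size": "medium"},
}
current = {
    "vpc-main": {"cidr": "10.0.0.0/16"},
    "db-primary": {"engine": "postgres", "size": "small"},
    "vm-legacy": {"size": "large"},
}

first_plan = plan(desired, current)
second_plan = plan(desired, apply(desired))
print(first_plan)   # changes needed to reach the desired state
print(second_plan)  # empty: re-applying the same code changes nothing
```

The second plan is empty because the state already matches the code. That is the idempotency property: running the same definition repeatedly converges on one result instead of accumulating drift.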

Containerization and Orchestration

For building scalable and portable applications, containers are the de facto standard. Firms utilize Docker to package an application and its dependencies into a self-contained, lightweight unit. To manage containerized applications at scale, an orchestrator like Kubernetes is essential. Kubernetes automates the deployment, scaling, healing, and networking of container workloads.

A skilled firm designs and implements a production-grade Kubernetes platform, addressing complex challenges such as:

- Configuring secure inter-service communication and traffic management using a service mesh like Istio.

- Implementing Horizontal Pod Autoscalers (HPAs) and Cluster Autoscalers to dynamically adjust resources based on real-time traffic load.

- Integrating persistent storage solutions using Storage Classes and Persistent Volume Claims for stateful applications.
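To make the autoscaling item above concrete, the sketch below applies the scaling rule given in the Kubernetes documentation for the Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). It is a simplification: the function name and parameters are illustrative, and the real controller also handles multiple metrics, pod readiness, and stabilization windows.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int,
    max_replicas: int,
    tolerance: float = 0.1,  # assumed default; skip scaling when the ratio is near 1.0
) -> int:
    """Simplified HPA calculation for a single metric."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the bounds configured on the autoscaler.
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90, 60, min_replicas=2, max_replicas=10))
```

With four pods running at 90% CPU against a 60% target, the ratio is 1.5, so the calculation asks for six pods. A reading of 62% would fall inside the tolerance band and leave the replica count unchanged.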

The Kubernetes ecosystem is notoriously complex, which is why specialized expertise is often required. Our guide to Kubernetes consulting services provides a deeper technical analysis.

Observability and DevSecOps Integration

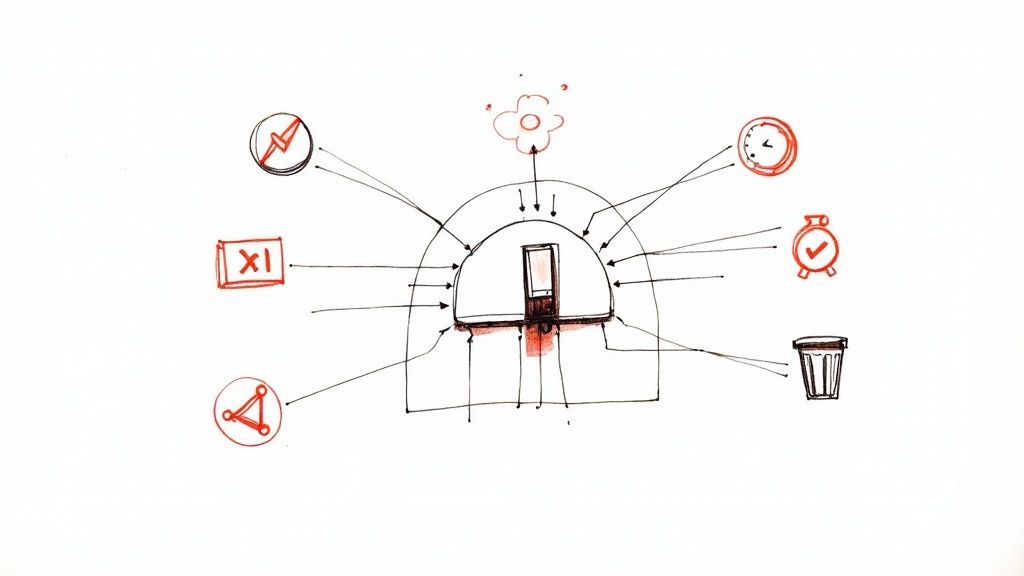

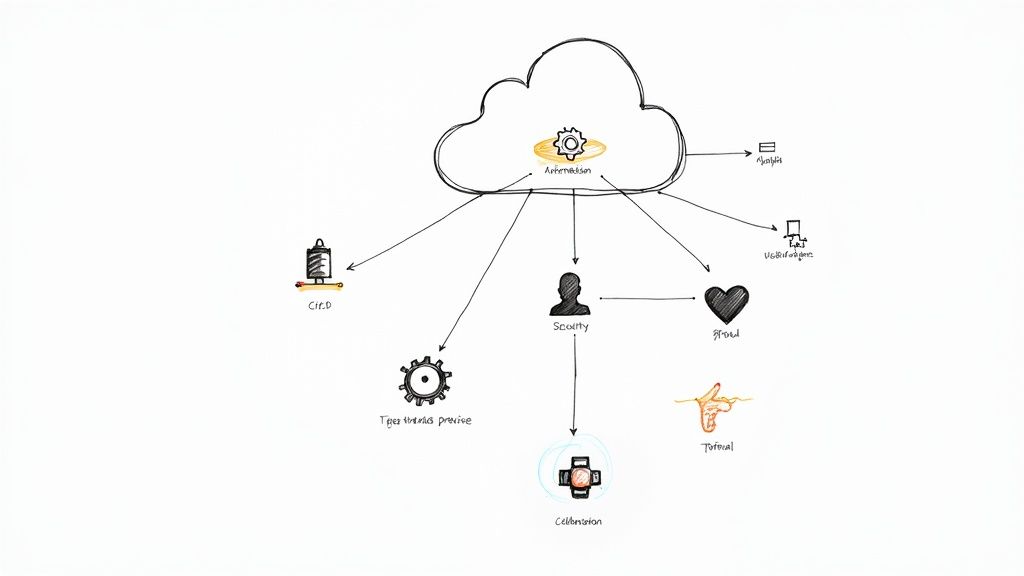

A system that is not observable is unmanageable. A seasoned DevOps consulting firm implements a comprehensive observability stack using tools like Prometheus for time-series metrics, Grafana for visualization, and the ELK Stack (Elasticsearch, Logstash, Kibana) for aggregated logging. This provides deep, real-time telemetry into application performance and system health.

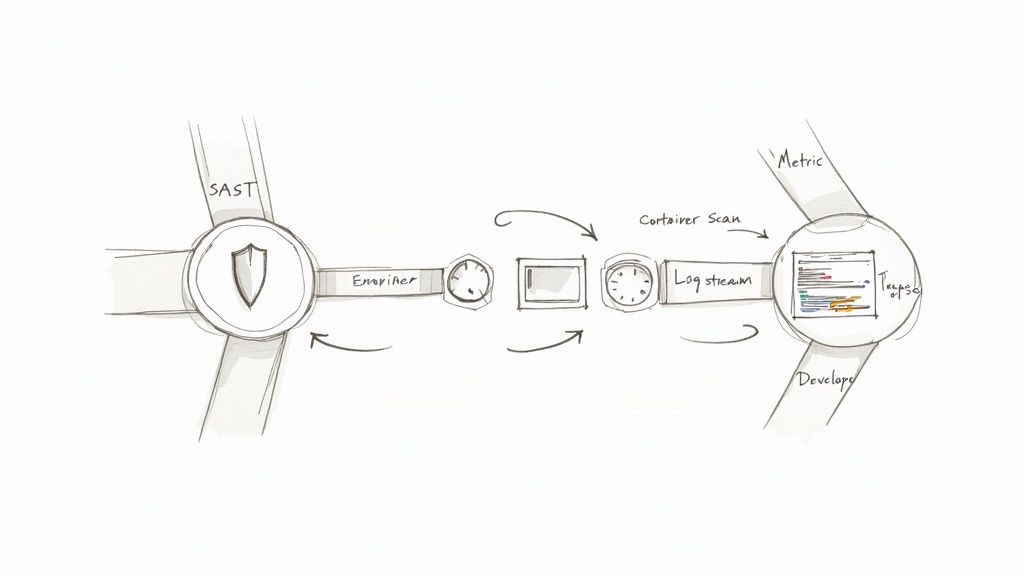

Simultaneously, they integrate security into the SDLC—a practice known as DevSecOps. This involves embedding automated security tooling directly into the CI/CD pipeline, such as Static Application Security Testing (SAST), Software Composition Analysis (SCA) for dependency vulnerabilities, and Dynamic Application Security Testing (DAST), making security a continuous and automated part of the development process.

A Technical Vetting Checklist for Your Ideal Partner

Selecting a DevOps consulting firm requires a rigorous technical evaluation, not just a review of marketing materials. Certifications are a baseline, but the ability to architect solutions to complex, real-world engineering problems is the true differentiator.

Your objective is to validate their hands-on expertise. This involves pressure-testing their technical depth on infrastructure design, security implementation, and collaborative processes. As you prepare your evaluation, it's useful to consult broader guides on topics like how to choose the best outsourcing IT company.

Assessing Cloud Platform and IaC Expertise

Avoid generic questions like, "Do you have AWS experience?" Instead, pose specific, scenario-based questions that reveal their operational maturity and architectural depth with platforms like AWS, Azure, or GCP.

Probe their expertise with targeted technical inquiries:

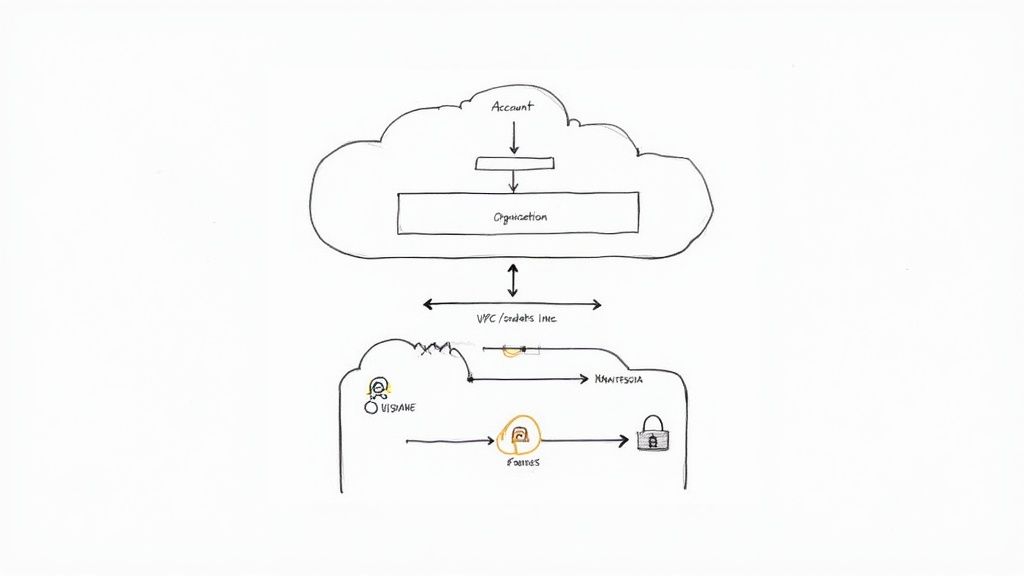

- Multi-Account Strategy: "Describe the Terraform structure you would use to implement a multi-account AWS strategy using AWS Organizations, Service Control Policies (SCPs), and IAM roles for cross-account access. How would you manage shared VPCs or Transit Gateway?"

- Networking Complexity: "Walk me through the design of a resilient hybrid cloud network using AWS Direct Connect or Azure ExpressRoute. How did you handle DNS resolution, routing propagation with BGP, and firewall implementation for ingress/egress traffic?"

- Infrastructure as Code (IaC): "Show me a sanitized example of a complex Terraform module you've written that uses remote state backends, state locking, and variable composition. How do you manage secrets within IaC without committing them to version control?"

Their responses should demonstrate a command of enterprise-grade cloud architecture, not just surface-level service configuration. For a deeper analysis, see our article on vetting cloud DevOps consultants.

Probing DevSecOps and Compliance Knowledge

Security must be an integrated, automated component of the SDLC, not a final-stage manual review. A credible DevSecOps firm will demonstrate a "shift-left" security philosophy, embedding controls throughout the pipeline.

Test their security posture with direct, technical questions:

- "Describe the specific stages in a CI/CD pipeline where you would integrate SAST, DAST, SCA (dependency scanning), and container image vulnerability scanning. Which open-source or commercial tools would you use for each, and how would you configure the pipeline to break the build based on vulnerability severity?"

- "Detail your experience in automating compliance for frameworks like SOC 2 or HIPAA. How have you used policy-as-code tools like Open Policy Agent (OPA) with Terraform or Kubernetes to enforce preventative controls and generate audit evidence?"

These questions compel them to provide specific implementation details, revealing whether DevSecOps is a core competency or an afterthought.
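As a reference point for the first question, breaking the build on vulnerability severity usually reduces to a threshold check over scanner output. The Python sketch below assumes a hypothetical findings format (a list of dictionaries with `id` and `severity` keys); real scanners each emit their own report schema, which would need to be mapped into something like this.

```python
from typing import Dict, List

# Ordering used to compare severities; the labels are an assumption.
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


def blocking_findings(
    findings: List[Dict[str, str]], fail_on: str
) -> List[Dict[str, str]]:
    """Return the findings at or above the configured severity threshold."""
    threshold = SEVERITY_RANK[fail_on]
    return [f for f in findings if SEVERITY_RANK[f["severity"]] >= threshold]


# Hypothetical scanner output.
findings = [
    {"id": "FINDING-001", "severity": "low"},
    {"id": "FINDING-002", "severity": "high"},
    {"id": "FINDING-003", "severity": "medium"},
]

blockers = blocking_findings(findings, fail_on="high")
exit_code = 1 if blockers else 0  # a CI step would exit with this code
print("blocking:", [f["id"] for f in blockers], "exit code:", exit_code)
```

A strong answer from a candidate firm will also cover what this sketch leaves out, such as exception handling for accepted risks and separate thresholds per scanner type.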

Evaluating Collaboration and Knowledge Transfer

A true partner enhances your team's capabilities, aiming for eventual self-sufficiency rather than long-term dependency. They should function as a force multiplier, upskilling your engineers through structured collaboration.

The DevOps consulting market varies widely. Some firms offer low-cost staff augmentation, with global providers like eSparkBiz listing over 400 employees at rates from $12 to $25 per hour. Others position themselves as high-value strategic partners, with established firms of 250 employees charging premium rates between $25 and $99 per hour for deep specialization. Top-rated firms consistently earn 4.6 to 5.0 stars on platforms like Clutch, indicating that client satisfaction and technical excellence are key differentiators.

The most critical question to ask is: "What is your specific methodology for knowledge transfer?" An effective partner will outline a clear process involving pair programming, architectural design reviews, comprehensive documentation in a shared repository (e.g., Confluence), and hands-on training sessions.

Their primary goal should be to empower your team to confidently operate and evolve the new systems long after the engagement concludes.

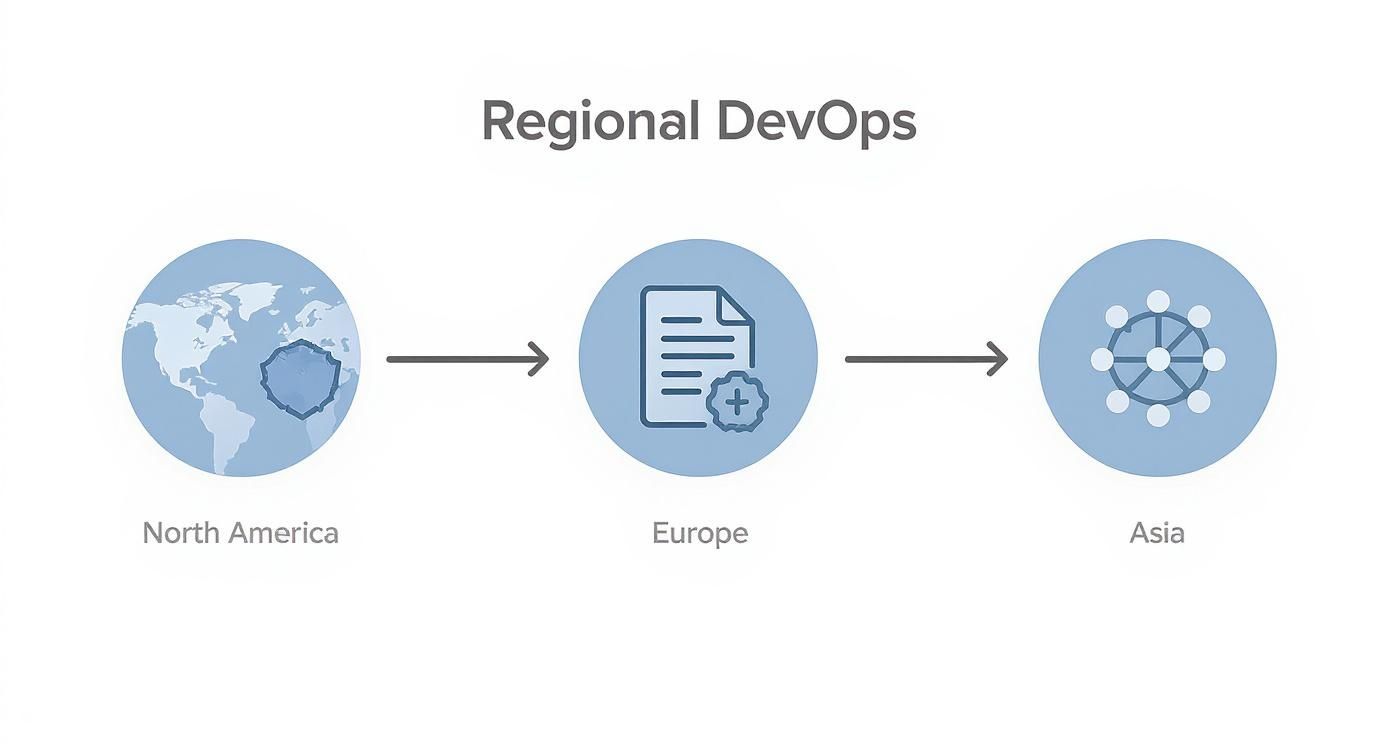

How Regional Specializations Impact Technical Solutions

A DevOps consulting firm's technical approach is often shaped by its primary region of operation. The regulatory constraints, market maturity, and dominant technology stacks in North America differ significantly from those in Europe or the Asia-Pacific.

Ignoring these regional nuances can lead to a mismatch between a consultant's standard playbook and your specific technical and compliance requirements. A consultant with deep regional experience possesses an implicit understanding of local data center performance, prevalent compliance frameworks, and industry-specific demands.

North America Focus on DevSecOps and Scale

In the mature North American market, many organizations have already implemented foundational CI/CD and cloud infrastructure. Consequently, consulting firms in this region often focus on advanced, second-generation DevOps challenges.

There is a significant emphasis on DevSecOps, moving beyond basic vulnerability scanning to integrating sophisticated security automation, threat modeling, and secrets management into the SDLC. North American consultants are typically experts in architecting for hyper-scale, designing multi-region, fault-tolerant systems capable of handling the massive, unpredictable traffic patterns of large consumer-facing applications.

Europe Expertise in Compliance as Code

In Europe, the regulatory environment, headlined by the General Data Protection Regulation (GDPR), is a primary driver of technical architecture. As a result, European DevOps firms have developed deep expertise in compliance-as-code.

This practice involves codifying compliance rules and security policies into automated, auditable controls within the infrastructure and CI/CD pipeline. They utilize tools like Open Policy Agent (OPA) to create version-controlled policies that govern infrastructure deployments and data access, ensuring that the system is "compliant by default."

This specialization makes them ideal partners for projects where data sovereignty, privacy, and regulatory adherence are non-negotiable architectural requirements.
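The compliance-as-code practice described above can be sketched in Python as a set of version-controlled rules evaluated against planned resources. This is a stand-in for illustration only: OPA policies are written in its own Rego language, and the `Policy` type, the resource dictionaries, and the allowed-region list here are hypothetical.

```python
from typing import Callable, Dict, List, NamedTuple


class Policy(NamedTuple):
    id: str
    description: str
    is_compliant: Callable[[Dict[str, object]], bool]


# Example allow-list for a data-residency rule (an assumption for this sketch).
ALLOWED_REGIONS = {"eu-west-1", "eu-central-1"}

POLICIES = [
    Policy(
        "data-residency",
        "Resources must be deployed in an approved region",
        lambda r: r.get("region") in ALLOWED_REGIONS,
    ),
    Policy(
        "encryption-at-rest",
        "Storage must be encrypted",
        lambda r: r.get("encrypted") is True,
    ),
]


def evaluate(resources: List[Dict[str, object]]) -> List[str]:
    """Return one 'resource: policy' entry per violation, usable as audit evidence."""
    violations: List[str] = []
    for resource in resources:
        for policy in POLICIES:
            if not policy.is_compliant(resource):
                violations.append(f"{resource['name']}: {policy.id}")
    return violations


planned = [
    {"name": "customer-data", "region": "eu-west-1", "encrypted": True},
    {"name": "analytics-export", "region": "us-east-1", "encrypted": False},
]

violations = evaluate(planned)
print(violations)
```

Running a check like this before every deployment is what makes a system "compliant by default": a non-conforming change is rejected in the pipeline rather than discovered in an audit.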

Asia-Pacific Diverse and Dynamic Strategies

The Asia-Pacific (APAC) region is not a single market but a complex mosaic of diverse economies, each with unique technical requirements. In technologically advanced markets like Japan and South Korea, the focus is on AI-driven AIOps and edge computing for low-latency services in dense urban areas.

Conversely, in the rapidly growing markets of Southeast Asia, the primary driver is often cost optimization and rapid scalability. Startups and scale-ups require lean, cloud-native architectures that enable fast growth without excessive infrastructure spend. A global market report highlights these varied regional trends. A successful APAC engagement requires a partner with proven experience navigating the specific economic and technological landscape of the target country.

Your Phased Roadmap to DevOps Transformation

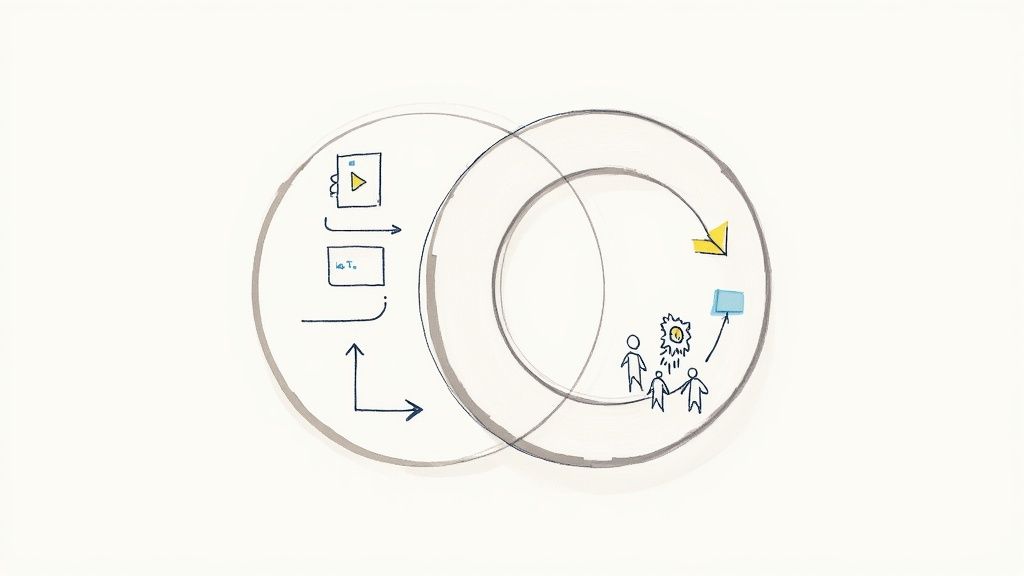

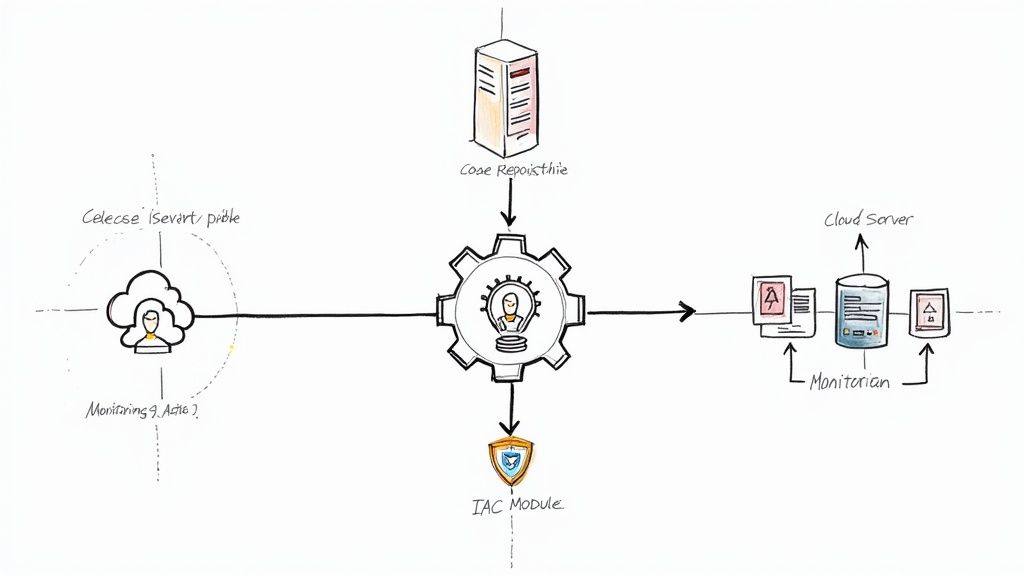

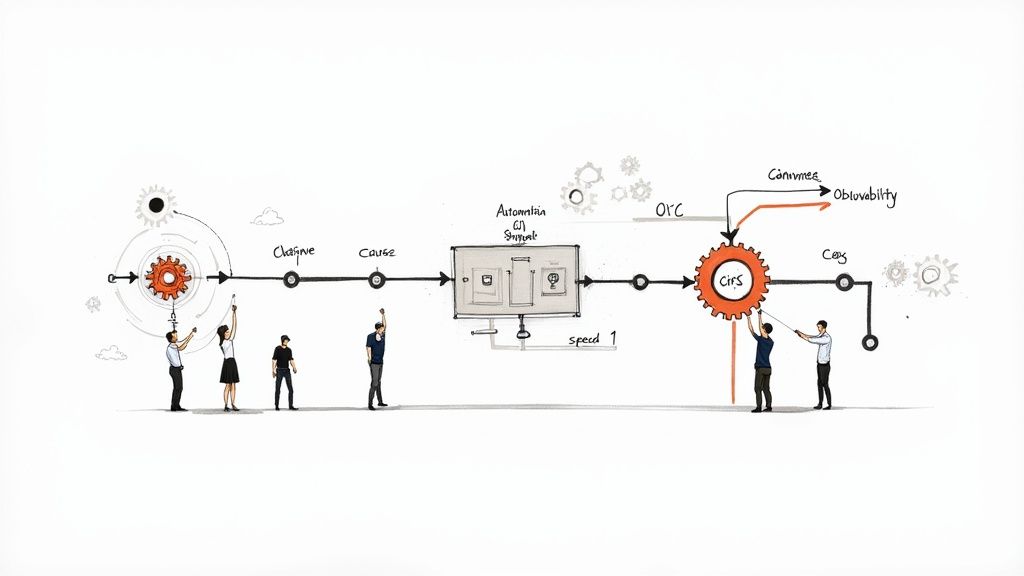

A successful engagement with a DevOps consulting firm follows a structured, phased methodology. This approach is designed to de-risk the transformation, deliver incremental value, and ensure alignment with business objectives at each stage.

Each phase builds logically on the previous one, establishing a solid technical foundation before scaling complex systems. This methodical process manages stakeholder expectations and delivers measurable, data-driven results.

Phase 1: Technical Assessment and Discovery

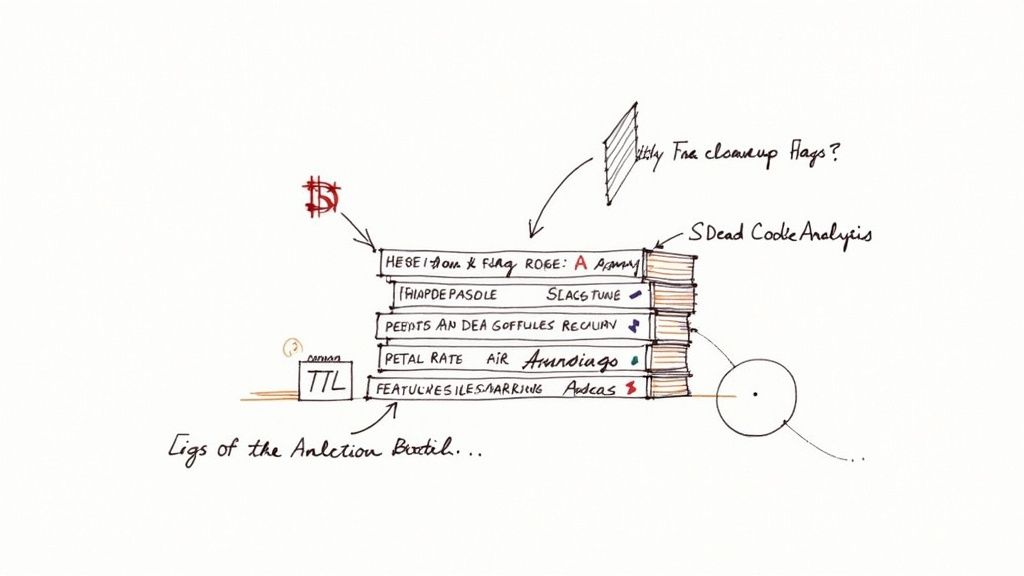

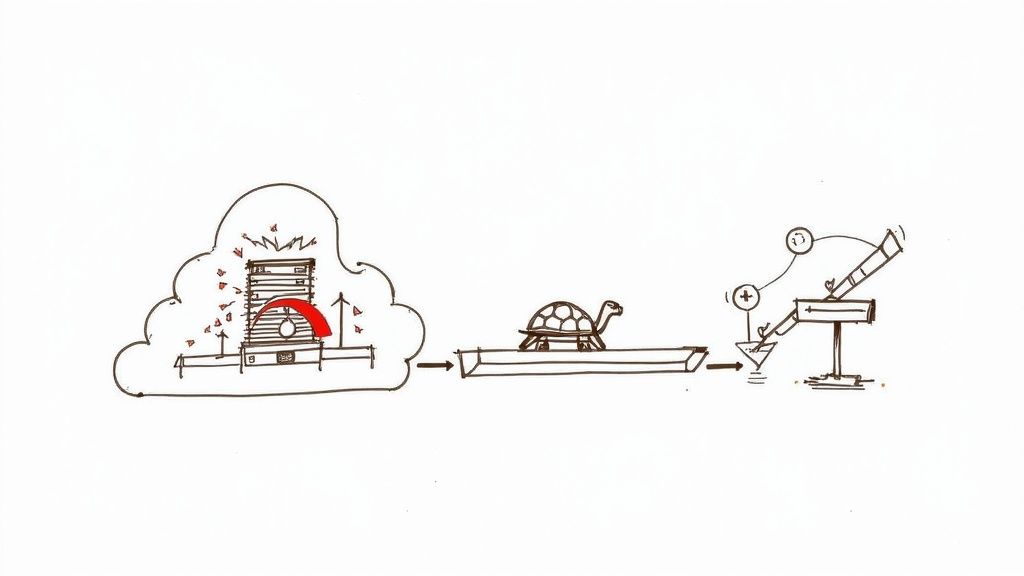

The engagement begins with a deep-dive technical audit of the current state. Consultants perform a comprehensive analysis of existing infrastructure, application architecture, source code repositories, and release processes.

This involves mapping CI/CD workflows (or lack thereof), reverse-engineering manual infrastructure provisioning steps, and using metrics to identify key bottlenecks in the software delivery pipeline. The objective is to establish a quantitative baseline of current performance (e.g., deployment frequency, lead time).

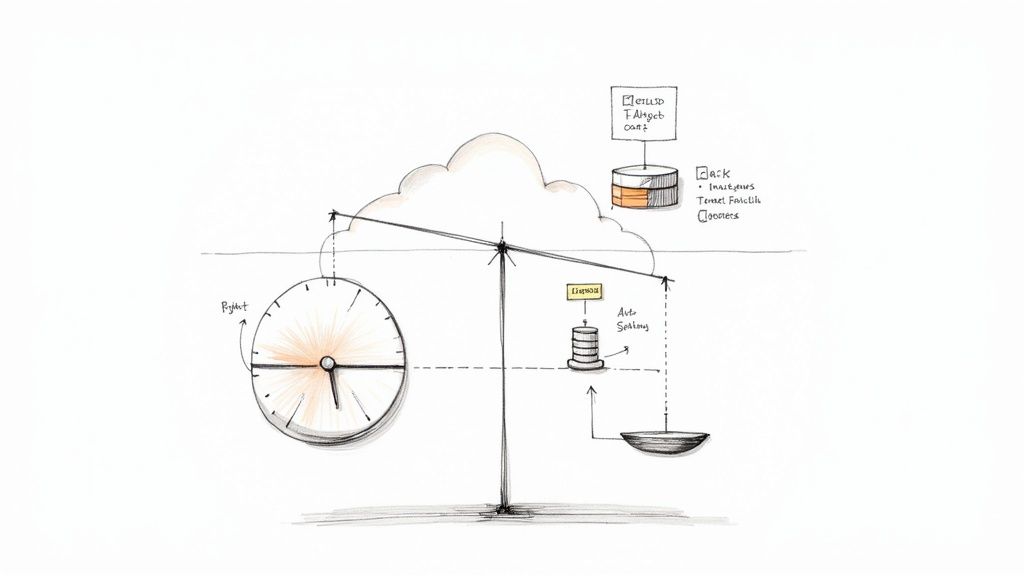

Phase 2: Strategic Roadmap and Toolchain Design

With a clear understanding of the "as-is" state, the consultants architect the target "to-be" state. They produce a strategic technical roadmap that details the specific initiatives, timelines, and required resources.

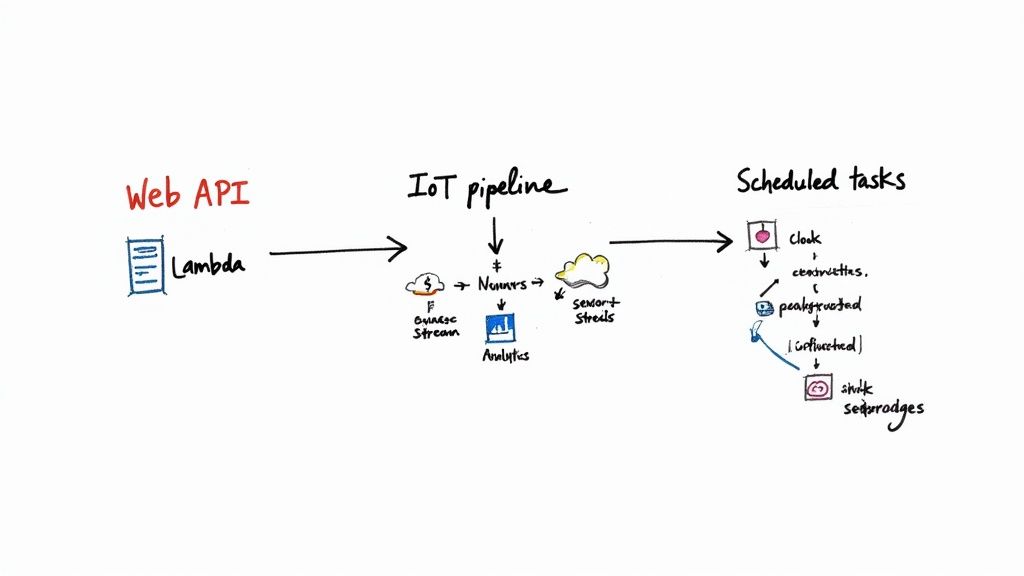

A critical deliverable of this phase is the selection of an appropriate toolchain. Based on the client's existing technology stack, team skills, and strategic goals, they will recommend and design an integrated set of tools for CI/CD (GitLab CI), IaC (Terraform), container orchestration (Kubernetes), and observability (Prometheus).

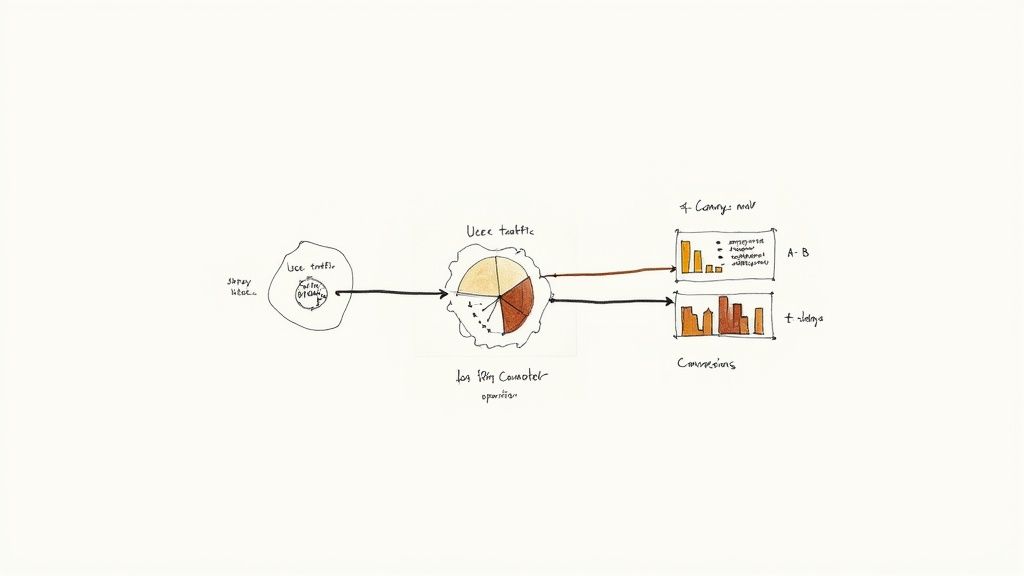

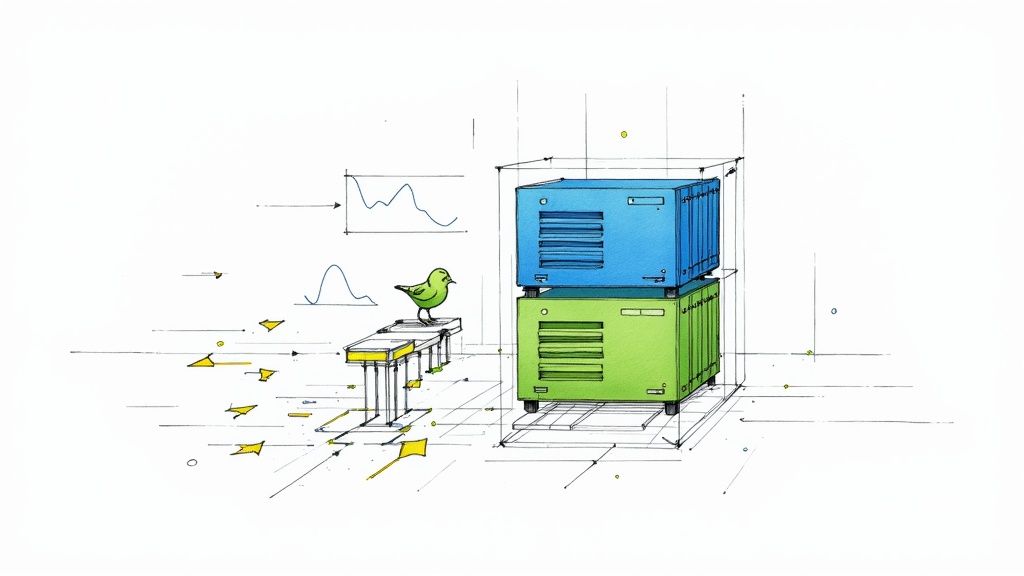

Phase 3: Pilot Project Implementation

To demonstrate value quickly and mitigate risk, the strategy is first implemented on a self-contained pilot project. The firm selects a single, representative application or service to modernize using the new architecture and toolchain.

The pilot serves as a proof-of-concept, providing tangible evidence of the benefits—such as reduced deployment times or improved stability—in a controlled environment. A successful pilot builds technical credibility and secures buy-in from key stakeholders for a broader rollout.

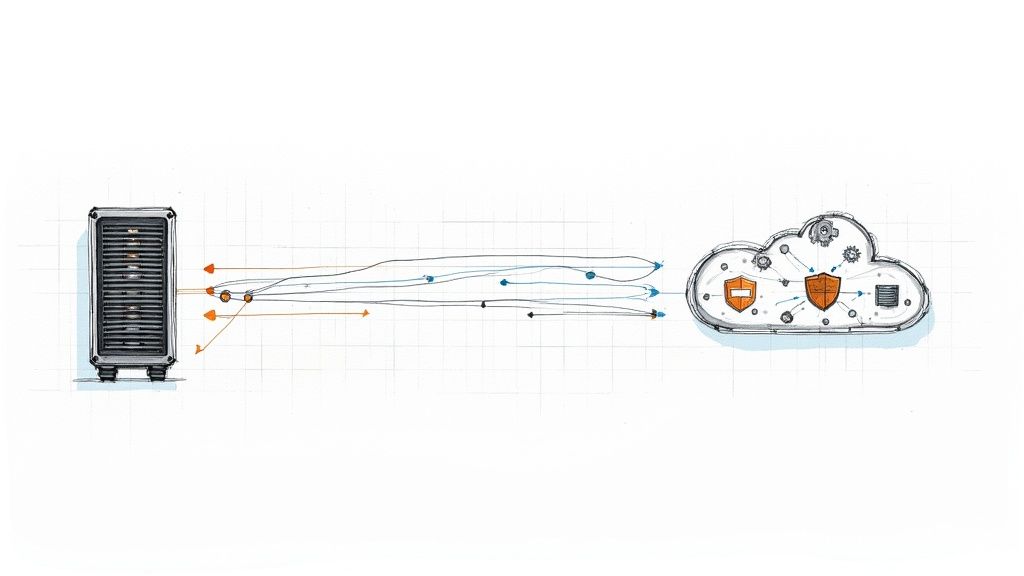

The accompanying infographic illustrates how regional priorities can influence the focus of a pilot project. For example, a North American pilot might prioritize automated security scanning, while a European one might focus on implementing compliance-as-code.

The pilot must align with key business drivers to be considered a success, whether that is improving security posture or automating regulatory compliance.

Phase 4: Scaling and Organizational Rollout

Following a successful pilot, the next phase is to systematically scale the new DevOps practices across the organization. The technical patterns, IaC modules, and CI/CD pipeline templates developed during the pilot are productized and rolled out to other application teams.

This is a carefully managed process. The consulting firm works directly with engineering teams, providing hands-on support, code reviews, and architectural guidance to ensure a smooth adoption of the new tools and workflows.

Phase 5: Knowledge Transfer and Governance

The final and most critical phase ensures the long-term success and self-sufficiency of the transformation. A premier DevOps consulting firm aims to make their client independent by institutionalizing knowledge. This is achieved through comprehensive documentation, a series of technical workshops, and pair programming sessions.

Simultaneously, they help establish a governance model. This includes defining standards for code quality, security policies, and infrastructure configuration to maintain the health and efficiency of the new DevOps ecosystem. The ultimate goal is to foster a self-sufficient, high-performing engineering culture that owns and continuously improves its processes.

Got Questions? We've Got Answers.

Engaging a DevOps consulting firm is a significant technical and financial investment. It is critical to get clear, data-driven answers to key questions before committing to a partnership.

Here are some of the most common technical and operational inquiries.

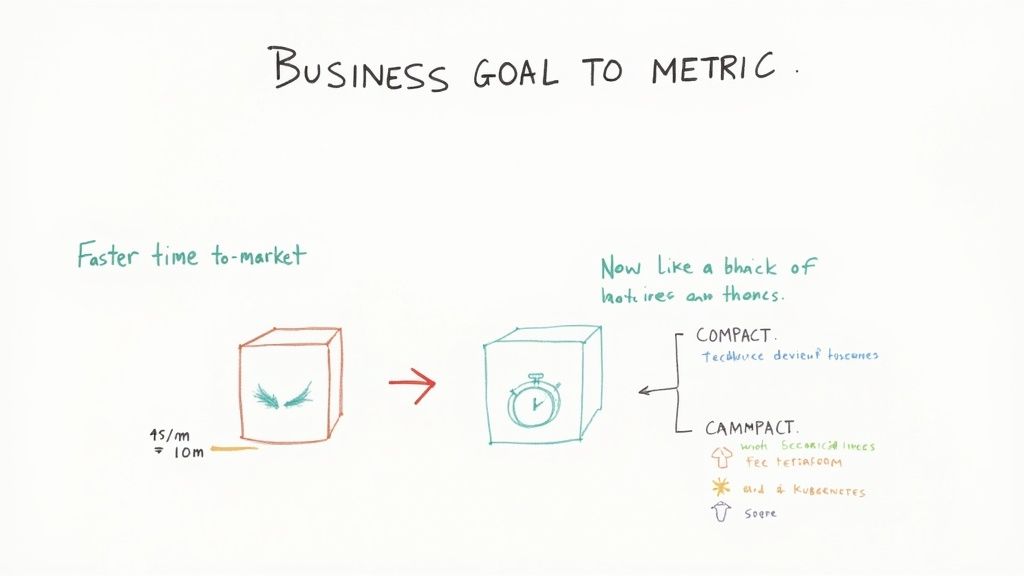

How Do You Actually Measure Success?

What's the real ROI of hiring a DevOps firm?

The return on investment is measured through specific, quantifiable Key Performance Indicators (KPIs), often referred to as the DORA metrics.

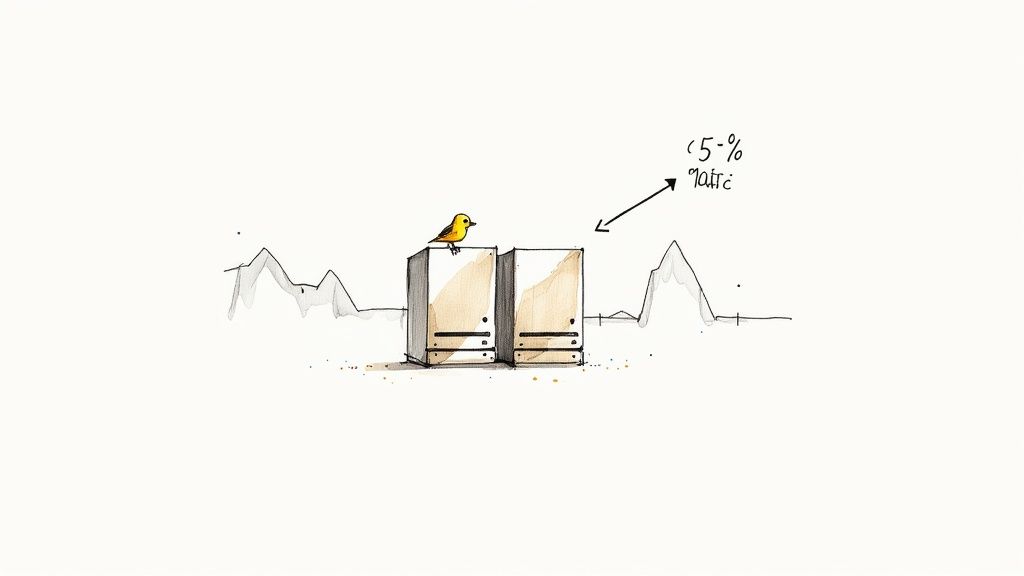

From an engineering standpoint, success is demonstrated by a significant increase in deployment frequency (from monthly to on-demand), a reduction in the change failure rate (ideally to <15%), and a drastically lower mean time to recovery (MTTR) following a production incident. You should also see a sharp decrease in lead time for changes (from code commit to production deployment).

These technical metrics directly impact business outcomes by accelerating time-to-market for new features, improving service reliability, and increasing overall engineering productivity.
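To show how these four metrics can be derived from raw data, here is a minimal Python sketch. The `Deployment` record and its fields are assumptions for illustration; in practice the timestamps would come from the CI/CD system and the incident tracker, and teams differ in how they define a "failed" change.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Dict, List, Optional


@dataclass
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None  # set when a failed change was recovered


def dora_metrics(deployments: List[Deployment], period_days: int) -> Dict[str, Optional[float]]:
    """Compute the four DORA metrics over a reporting period."""
    if not deployments:
        raise ValueError("no deployments in the reporting period")
    lead_hours = [
        (d.deployed_at - d.committed_at).total_seconds() / 3600 for d in deployments
    ]
    failures = [d for d in deployments if d.failed]
    recovery_hours = [
        (d.restored_at - d.deployed_at).total_seconds() / 3600
        for d in failures
        if d.restored_at is not None
    ]
    return {
        "deployments_per_day": len(deployments) / period_days,
        "median_lead_time_hours": median(lead_hours),
        "change_failure_rate": len(failures) / len(deployments),
        "mttr_hours": sum(recovery_hours) / len(recovery_hours) if recovery_hours else None,
    }


# Hypothetical two-day sample.
sample = [
    Deployment(datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 11)),
    Deployment(datetime(2024, 1, 1, 13), datetime(2024, 1, 1, 17),
               failed=True, restored_at=datetime(2024, 1, 1, 18)),
    Deployment(datetime(2024, 1, 2, 8), datetime(2024, 1, 2, 14)),
    Deployment(datetime(2024, 1, 2, 10), datetime(2024, 1, 2, 18)),
]

metrics = dora_metrics(sample, period_days=2)
print(metrics)
```

Capturing the same numbers during the initial assessment and again after the pilot gives a like-for-like comparison of the engagement's effect.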

How long does a typical engagement last?

The duration is dictated by the scope of work. A targeted, tactical engagement—such as a CI/CD pipeline audit or a pilot IaC implementation for a single application—can be completed in 4-8 weeks.

A comprehensive, strategic transformation involving cultural change, legacy system modernization, and extensive team upskilling is a multi-phase program that typically lasts from 6 to 18 months. A competent firm will structure this as a series of well-defined sprints or milestones, each with clear deliverables.

Will This Work With Our Current Setup?

Is a consultant going to force us to use all new tools?

No. A reputable DevOps consulting firm avoids a "rip and replace" approach. The initial phase of any engagement should be a thorough assessment of your existing toolchain and processes to identify what can be leveraged and what must be improved.

The objective is evolutionary architecture, not a revolution. New tools are introduced only when they solve a specific, identified problem and offer a substantial improvement over existing systems. The strategy should be pragmatic and cost-effective, building upon your current investments wherever possible.

What’s the difference between a DevOps consultant and an MSP?

The roles are fundamentally different. A DevOps consultant is a strategic change agent. Their role is to design, build, and automate new systems and, most importantly, transfer knowledge to your internal team to make you self-sufficient. Their engagement is project-based with a defined endpoint.

A Managed Service Provider (MSP) provides ongoing operational support. They take over the day-to-day management, monitoring, and maintenance of infrastructure. An MSP manages the environment that a DevOps consultant helps build. One architects and builds; the other operates and maintains.
