Legacy systems, once the bedrock of an organization, often become a significant drain on resources, hindering innovation and agility. They accrue technical debt, increase operational costs, and expose businesses to security risks. However, the path forward is not always a complete overhaul. Effective legacy system modernization strategies are not one-size-fits-all; they require a nuanced approach tailored to specific business goals, technical constraints, and risk tolerance.

This guide provides a deep, technical dive into seven distinct strategies for modernizing your applications and infrastructure. We will analyze the specific implementation steps, technical considerations, pros, cons, and real-world scenarios for each, empowering you to make informed decisions. Moving beyond theoretical concepts, this article offers actionable blueprints you can adapt for your own technology stack.

You will learn about methods ranging from a simple 'Lift and Shift' (Rehosting) to the incremental 'Strangler Fig' pattern. We will cover:

- Rehosting

- Refactoring

- Replatforming

- Repurchasing (SaaS)

- Retiring

- Retaining

- The Strangler Fig Pattern

Our goal is to equip you with the knowledge to build a robust roadmap for your digital transformation journey, ensuring scalability, security, and long-term performance.

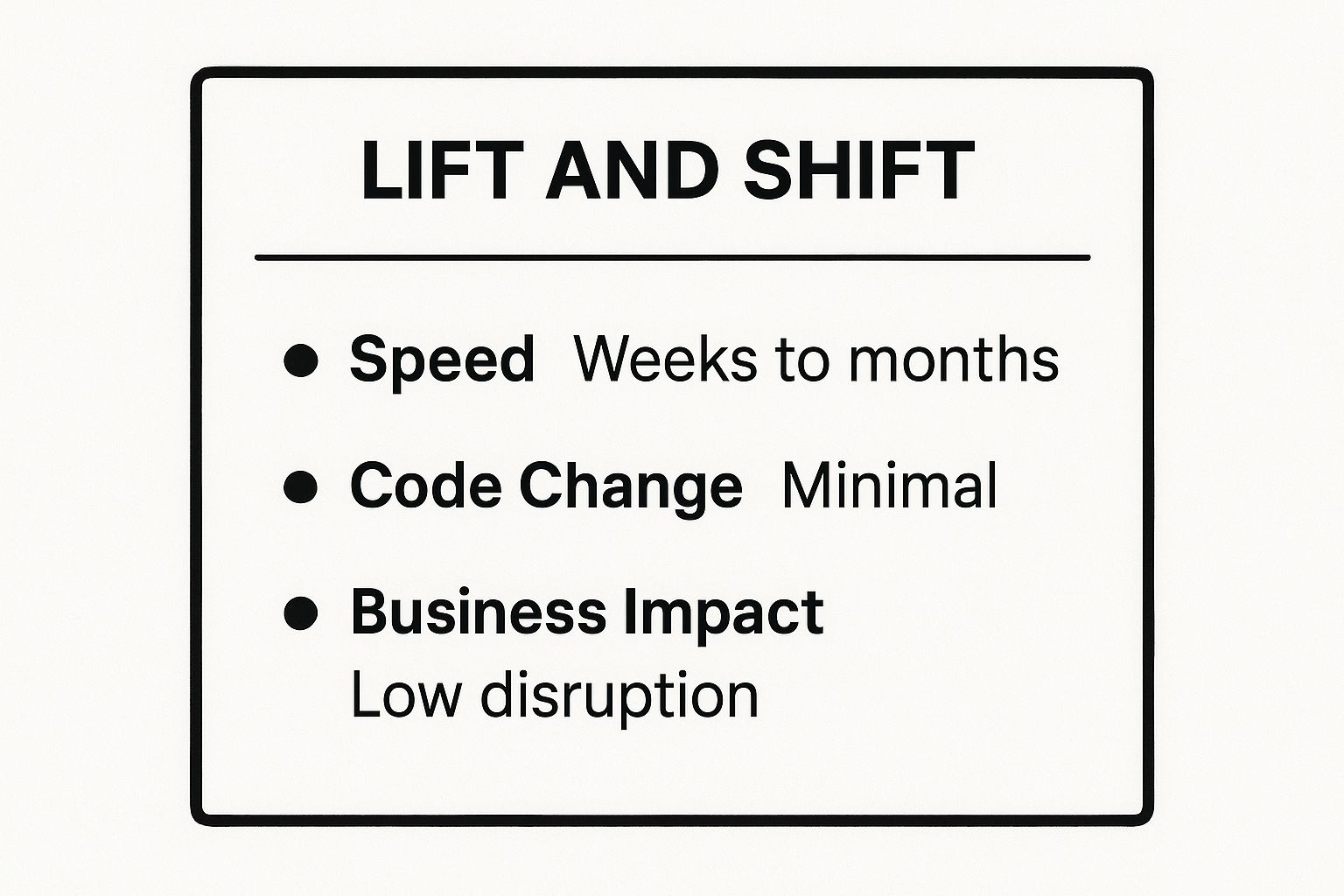

1. Lift and Shift (Rehosting)

The Lift and Shift strategy, also known as Rehosting, is one of the most direct legacy system modernization strategies available. It involves moving an application from its existing on-premise or legacy hosting environment to a modern infrastructure, typically a public or private cloud, with minimal to no changes to the application's core architecture or code. This approach prioritizes speed and cost-efficiency for the initial migration, essentially running the same system on a new, more capable platform.

A classic example is GE's massive migration of over 9,000 applications to AWS, where rehosting was a primary strategy to exit data centers quickly and realize immediate infrastructure savings. This allowed them to shut down 30 of their 34 data centers, demonstrating the strategy's power for rapid, large-scale infrastructure transformation.

When to Use This Approach

Lift and Shift is ideal when the primary goal is to quickly exit a physical data center due to a lease expiring, a merger, or a desire to reduce infrastructure management overhead. It's also a pragmatic first step for organizations new to the cloud, allowing them to gain operational experience before undertaking more complex modernization efforts like refactoring or rebuilding. If an application is a "black box" with lost source code or specialized knowledge, rehosting may be the only viable option to move it to a more stable environment.

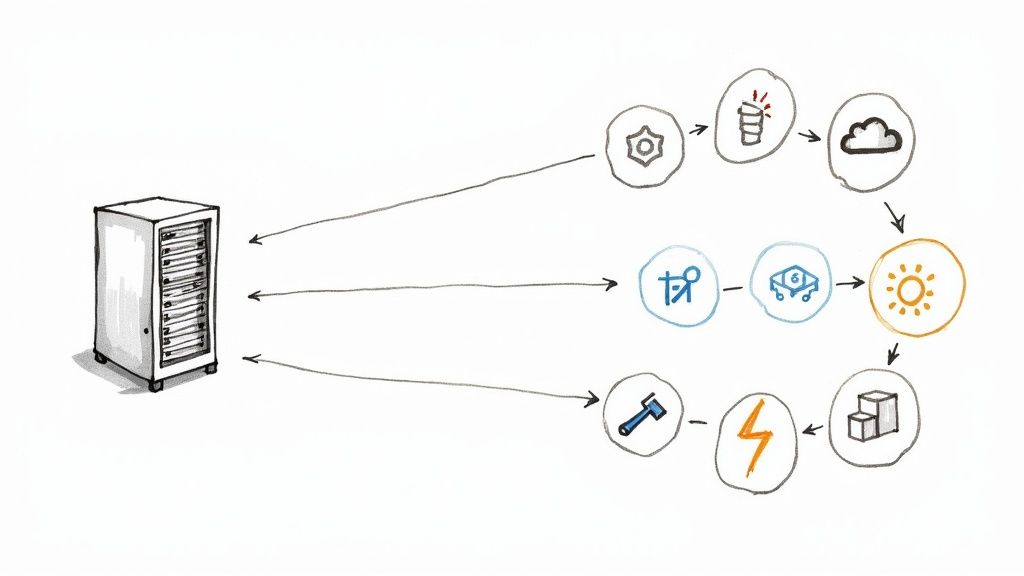

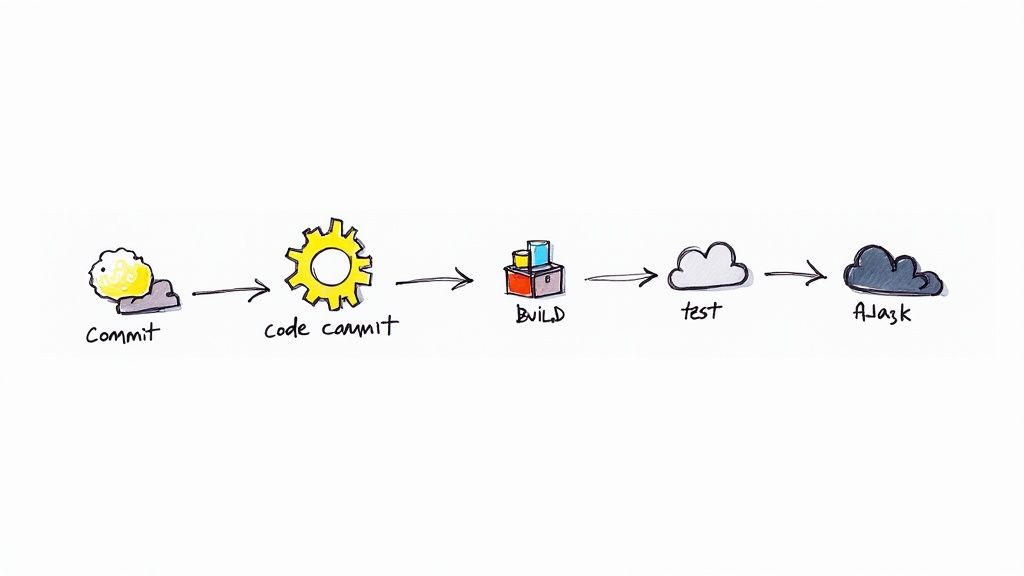

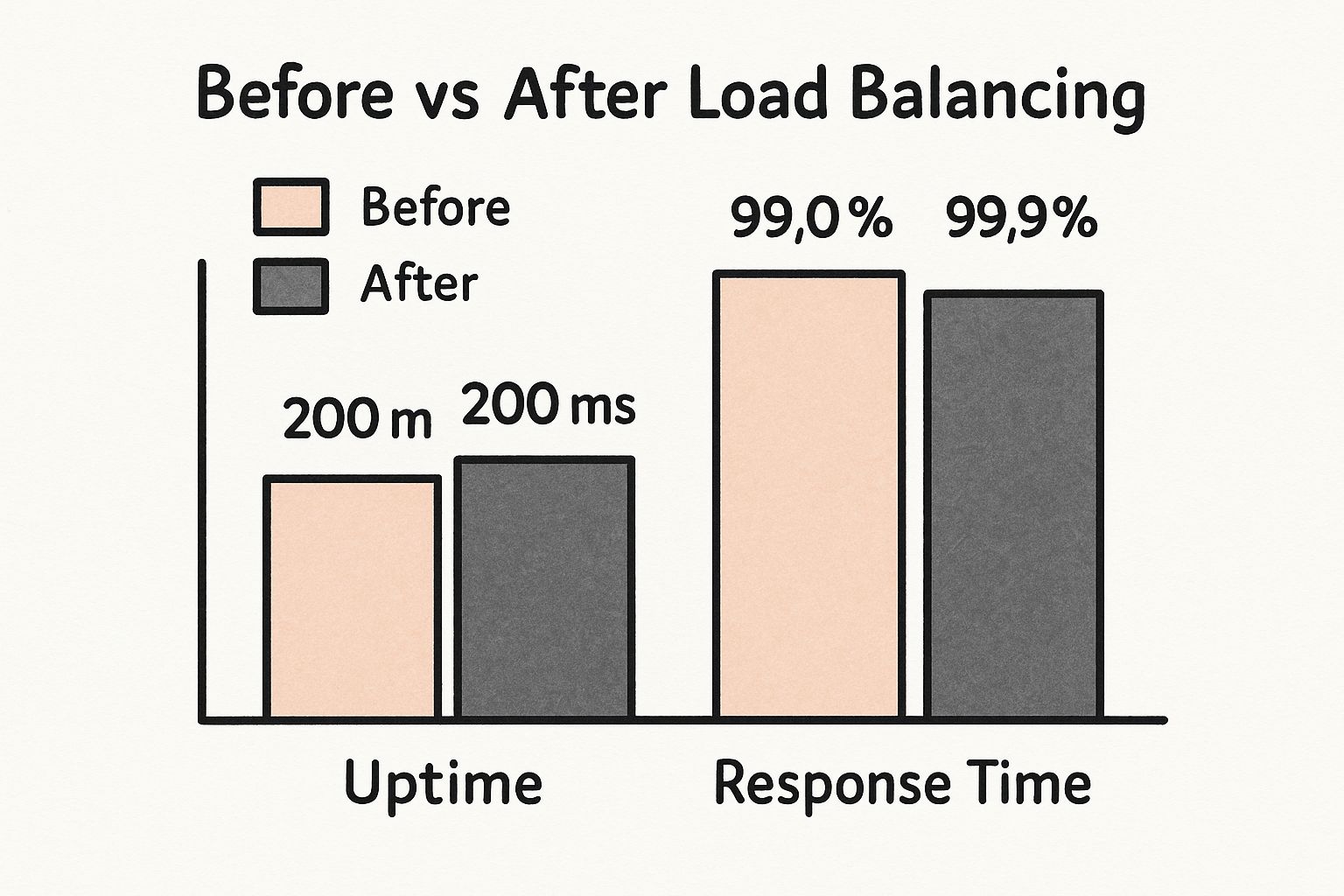

This infographic summarizes the key characteristics of a rehosting project.

As the data shows, the primary benefit is speed and minimal disruption, making it a low-risk entry into cloud adoption.

Implementation Considerations

Successful rehosting requires meticulous planning. Before the move, you must conduct thorough dependency mapping to identify all interconnected systems, databases, and network configurations. A comprehensive data center migration checklist helps ensure that no critical component is overlooked.

Key implementation tips include:

- Utilize Automated Tools: Employ tools like AWS Application Discovery Service, Azure Migrate, or Google Cloud Migration Center (the successor to StratoZone) to automatically map servers, dependencies, and performance baselines. Use migration services like AWS Application Migration Service (which replaced the discontinued AWS Server Migration Service) or Azure Site Recovery to replicate and move VMs with minimal downtime.

- Plan for Post-Migration Optimization: Treat rehosting as phase one. Budget and plan for subsequent optimization phases to right-size instances using cloud provider cost explorers and trusted advisor tools. The goal is to move from a static, on-premise capacity model to a dynamic, cloud-based one by implementing auto-scaling groups and load balancers.

- Implement Robust Testing: Create a detailed testing plan that validates functionality, performance, and security in the new cloud environment before decommissioning the legacy system. This should include integration tests, load testing with tools like JMeter or Gatling to match on-premise performance baselines, and security penetration testing against the new cloud network configuration.
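
Once dependency mapping is done, the result can drive the order in which systems move. The sketch below is a minimal illustration, assuming a hypothetical dependency map (the system names are invented, and real data would come from a discovery tool's export). It groups systems into migration waves so that everything a system depends on has already moved in an earlier wave.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each key depends on the systems in its set.
# In practice this would be built from a discovery tool's export.
dependencies = {
    "web-frontend": {"order-api", "auth-service"},
    "order-api": {"orders-db", "auth-service"},
    "auth-service": {"users-db"},
    "orders-db": set(),
    "users-db": set(),
}


def plan_migration_waves(deps):
    """Group systems into waves; each system's dependencies sit in earlier waves."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything that can move now
        waves.append(ready)
        sorter.done(*ready)
    return waves


waves = plan_migration_waves(dependencies)
for number, wave in enumerate(waves, start=1):
    print(f"Wave {number}: {', '.join(wave)}")
```

A circular dependency surfaces as an exception rather than a silent mis-ordering, which is usually a signal that those systems need to move together in a single cutover.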

2. Refactoring (Re-architecting)

Refactoring, also known as Re-architecting, is one of the more intensive legacy system modernization strategies. It involves significant code restructuring and optimization to align a legacy application with modern, cloud-native principles without altering its external behavior or core business logic. This approach goes beyond a simple migration, aiming to improve non-functional attributes like performance, scalability, and maintainability by fundamentally changing the internal structure of the code.

A prominent example is Spotify's journey from a monolithic architecture to a microservices model hosted on Google Cloud. This strategic re-architecture allowed their development teams to work independently and deploy features more rapidly, enabling the platform to scale and innovate at a massive pace. The move was crucial for handling their explosive user growth and complex feature set.

When to Use This Approach

Refactoring is the best choice when the core business logic of an application is still valuable, but the underlying technology is creating significant bottlenecks, incurring high maintenance costs, or hindering new feature development. This strategy is ideal for mission-critical applications where performance and scalability are paramount. You should consider refactoring when a simple lift and shift won't solve underlying architectural problems, but a full rewrite is too risky or expensive. It allows you to incrementally improve the system while it remains operational.

This approach is fundamentally about paying down technical debt. Addressing these underlying issues is a key part of successful modernization, and you can learn more about how to manage technical debt to ensure long-term system health.

Implementation Considerations

A successful refactoring project requires a deep understanding of the existing codebase and a clear vision for the target architecture. It's a significant engineering effort that demands meticulous planning and execution.

Key implementation tips include:

- Start with an Application Assessment: Begin with a thorough code audit and dependency analysis. Use static analysis tools like SonarQube to identify complex, tightly coupled modules ("hotspots") and calculate cyclomatic complexity. This data-driven approach helps prioritize which parts of the monolith to break down first.

- Adopt an Incremental Strategy: Avoid a "big bang" refactor. Use techniques like the Strangler Fig Pattern to gradually route traffic to new, refactored services, which de-risks the process and preserves business continuity. Where it fits the workload, decouple components by introducing message queues (e.g., RabbitMQ, Kafka) between services instead of direct synchronous API calls.

- Invest in Comprehensive Testing: Since you are changing the internal code structure, a robust automated testing suite is non-negotiable. Implement a testing pyramid: a strong base of unit tests (using frameworks like JUnit or PyTest), a layer of service integration tests, and a focused set of end-to-end tests to verify that the refactored code maintains functional parity.

- Integrate DevOps Practices: Use refactoring as an opportunity to introduce or enhance CI/CD pipelines using tools like Jenkins, GitLab CI, or GitHub Actions. Containerize the refactored services with Docker and manage them with an orchestrator like Kubernetes to achieve true deployment automation and scalability.
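
To make the idea of complexity "hotspots" concrete, here is a rough sketch that ranks Python functions by an approximate cyclomatic complexity using the standard `ast` module. It is a simplified approximation for illustration only, not the metric as SonarQube or any other tool computes it, and the sample source code is invented.

```python
import ast

# Node types counted as decision points in this simplified approximation.
DECISION_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def approximate_complexity(func_node):
    """Return 1 plus the number of decision points inside a function."""
    score = 1
    for node in ast.walk(func_node):
        if isinstance(node, DECISION_NODES):
            score += 1
        elif isinstance(node, ast.BoolOp):
            # "a and b and c" contributes two extra branches
            score += len(node.values) - 1
    return score


def rank_hotspots(source):
    """Return (function name, score) pairs, most complex first."""
    tree = ast.parse(source)
    scores = [
        (node.name, approximate_complexity(node))
        for node in ast.walk(tree)
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Invented sample code standing in for a legacy module.
SAMPLE = '''
def simple(x):
    return x + 1

def tangled(order):
    if order.total > 100 and order.customer.is_vip:
        for item in order.items:
            if item.discountable:
                item.price *= 0.9
    elif order.total > 50:
        order.shipping = 0
    return order
'''

hotspots = rank_hotspots(SAMPLE)
print(hotspots)
```

Functions at the top of the ranking are candidates for extra test coverage before they are restructured.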

3. Replatforming (Lift-Tinker-Shift)

Replatforming, often called "Lift-Tinker-Shift," represents a strategic middle ground among legacy system modernization strategies. It goes beyond a simple rehost by incorporating targeted, high-value optimizations to the application while migrating it to a new platform. This approach allows an organization to start realizing cloud benefits, such as improved performance or reduced operational costs, without the significant time and expense of a complete architectural overhaul (refactoring or rebuilding).

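This strategy involves making specific, contained changes to the application to better leverage cloud-native capabilities. For instance, a common replatforming move is migrating a self-managed, on-premise database to a managed cloud service running the same engine, such as moving Oracle to Amazon RDS for Oracle. This swap reduces administrative overhead and improves scalability, delivering tangible benefits with minimal code modification. Switching engines during the move (for example, from Oracle to Amazon RDS for PostgreSQL or Azure SQL Database) is also possible, but it requires schema and SQL conversion and pushes the effort closer to refactoring.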

As shown, replatforming offers a balanced approach, gaining cloud advantages without the full commitment of a rewrite.

When to Use This Approach

Replatforming is the ideal strategy when the core application architecture is fundamentally sound, but the organization wants to achieve measurable benefits from a cloud migration beyond infrastructure cost savings. It's perfect for situations where a full refactor is too costly or risky in the short term, but a simple lift-and-shift offers insufficient value. If your team has identified clear performance bottlenecks, such as database management or inefficient caching, replatforming allows you to address these "low-hanging fruit" during the migration process. It's a pragmatic step that unlocks immediate ROI while setting the stage for future, more in-depth modernization efforts.

Implementation Considerations

A successful replatforming project hinges on identifying the right components to "tinker" with. The goal is to maximize impact while minimizing the scope of change to prevent scope creep. When a key part of your replatforming initiative involves moving databases, a structured approach is critical. You can learn more about this by reviewing database migration best practices to ensure a smooth transition.

Key implementation tips include:

- Focus on High-Impact Optimizations First: Prioritize changes that deliver the most significant value. For example, replace a file-system-based session state with a distributed cache like Redis or Memcached. Replace a custom messaging queue with a managed cloud service like Amazon SQS or Azure Service Bus. Implement auto-scaling groups to handle variable traffic instead of static server capacity.

- Leverage Platform-as-a-Service (PaaS): Actively seek opportunities to replace self-managed infrastructure components with managed PaaS offerings. This offloads operational burdens like patching, backups, and high availability to the cloud provider, freeing up engineering time for value-added work.

- Implement Cloud-Native Observability: Swap legacy monitoring and logging tools (e.g., Nagios, on-prem Splunk) for cloud-native solutions like Amazon CloudWatch, Azure Monitor, or Datadog. This provides deeper insights into application performance and health through integrated metrics, logs, and traces in the new environment.

- Document All Changes: Meticulously document every modification made during the replatforming process, including changes to connection strings, environment variables, and infrastructure configurations. Store this information in a version-controlled repository (e.g., Git) alongside your infrastructure-as-code scripts.
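
One way to keep a "tinker" such as the session-state swap contained is to put the storage behind a small interface first. The sketch below assumes hypothetical names (`SessionStore`, `InMemorySessionStore`, `current_user`) and uses an in-memory class as a stand-in for a distributed cache. A Redis- or Memcached-backed class would implement the same two methods, and the application code would not change.

```python
import time
from typing import Optional, Protocol


class SessionStore(Protocol):
    """The only contract application code is allowed to depend on."""

    def save(self, session_id: str, data: dict, ttl_seconds: int) -> None: ...

    def load(self, session_id: str) -> Optional[dict]: ...


class InMemorySessionStore:
    """Stand-in for a distributed cache; keeps sessions with an expiry time."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._entries = {}

    def save(self, session_id, data, ttl_seconds):
        self._entries[session_id] = (self._clock() + ttl_seconds, dict(data))

    def load(self, session_id):
        entry = self._entries.get(session_id)
        if entry is None:
            return None
        expires_at, data = entry
        if self._clock() >= expires_at:
            del self._entries[session_id]  # expired: behave like a cache miss
            return None
        return data


def current_user(store: SessionStore, session_id: str) -> Optional[str]:
    """Application code sees only the SessionStore interface."""
    session = store.load(session_id)
    return session["user"] if session else None


# Usage with a controllable clock so expiry can be demonstrated.
fake_now = [0.0]
store = InMemorySessionStore(clock=lambda: fake_now[0])
store.save("abc123", {"user": "dana"}, ttl_seconds=60)
print(current_user(store, "abc123"))  # session is still valid
fake_now[0] = 61.0
print(current_user(store, "abc123"))  # session has expired
```

Because the change is confined to one class, it is also easy to record in the change log alongside the new connection settings.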

4. Repurchasing (Replace with SaaS)

Repurchasing, often referred to as Replace, is a legacy system modernization strategy that involves completely retiring a legacy application and replacing it with a third-party Software-as-a-Service (SaaS) or Commercial-Off-The-Shelf (COTS) solution. Instead of trying to fix or migrate custom-built software, this approach opts for adopting a market-proven, vendor-supported platform that delivers the required business functionality out of the box. This shifts the burden of development, maintenance, and infrastructure management to the SaaS provider, allowing the organization to focus on its core business.

Prominent examples include replacing a custom-built sales tracking system with Salesforce CRM, swapping a clunky on-premise HR platform for Workday, or modernizing an aging IT helpdesk with ServiceNow. The key is to find a commercial product whose functionality closely aligns with the organization's business processes, making it a powerful choice among legacy system modernization strategies.

When to Use This Approach

Repurchasing is the best strategy when a legacy application supports a common business function, such as finance, HR, or customer relationship management, for which robust SaaS solutions already exist. If an application is overly expensive to maintain, built on obsolete technology, or no longer provides a competitive advantage, replacing it is often more strategic than investing further resources into it. For certain functions like customer support, repurchasing a modern SaaS offering, such as a virtual assistant solution for customer service, can be a highly effective modernization path.

Implementation Considerations

A successful replacement project hinges on rigorous due diligence and change management. The focus shifts from technical development to vendor evaluation, data migration, and process re-engineering to fit the new system.

Key implementation tips include:

- Conduct Thorough Requirements Analysis: Develop a detailed requirements-gathering document (RGD) and use it to create a scoring matrix. Evaluate potential vendors against technical criteria (API capabilities, security certifications like SOC 2), functional requirements, and total cost of ownership (TCO), not just licensing fees.

- Plan a Comprehensive Data Migration Strategy: Data migration is a project in itself. Develop a detailed plan for data cleansing, transformation (ETL), and loading into the new platform's data schema. Use specialized ETL tools like Talend or Informatica Cloud, and perform multiple dry runs in a staging environment to validate data integrity before the final cutover.

- Invest Heavily in Change Management: Adopting a new SaaS solution means changing how people work. Invest in comprehensive user training, create clear documentation, and establish a support system to manage the transition and drive user adoption. Implement a phased rollout (pilot group first) to identify and address user friction points.

- Maintain Integration Capabilities: The new SaaS platform must coexist with your remaining systems. Prioritize solutions with well-documented REST or GraphQL APIs. Use an integration platform as a service (iPaaS) like MuleSoft or Boomi to build and manage the data flows between the new SaaS application and your existing technology stack.
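
The scoring matrix mentioned above is simple weighted arithmetic. The following sketch uses invented criteria weights, vendor names, and 1-to-5 scores purely for illustration; the real criteria and weights would come from your own requirements document.

```python
# Hypothetical criteria weights (must sum to 1.0).
WEIGHTS = {"api": 0.25, "security": 0.25, "functional_fit": 0.30, "tco": 0.20}

# Hypothetical 1-5 scores per vendor (higher is better, so a high "tco"
# score means a lower total cost of ownership).
VENDOR_SCORES = {
    "Vendor A": {"api": 4, "security": 5, "functional_fit": 3, "tco": 2},
    "Vendor B": {"api": 3, "security": 4, "functional_fit": 5, "tco": 4},
}


def weighted_score(scores, weights):
    """Return the weighted sum of a vendor's scores across all criteria."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[name] * scores[name] for name in weights)


ranking = sorted(
    ((vendor, weighted_score(scores, WEIGHTS)) for vendor, scores in VENDOR_SCORES.items()),
    key=lambda pair: pair[1],
    reverse=True,
)
for vendor, score in ranking:
    print(f"{vendor}: {score:.2f}")
```

Agreeing on the weights before scoring any vendor helps keep the evaluation from being tuned toward a favorite.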

5. Retiring (Decommissioning)

Among the most impactful legacy system modernization strategies, Retiring, or Decommissioning, is the deliberate process of shutting down applications that no longer provide significant business value. This approach involves a strategic decision to completely remove a system from the IT portfolio, often because its functionality is redundant, its technology is obsolete, or the cost of maintaining it outweighs its benefits. Rather than investing in a migration or update, decommissioning eliminates complexity and frees up valuable resources.

A powerful example is Ford's initiative to consolidate its numerous regional HR systems. By identifying overlapping capabilities, Ford was able to retire multiple legacy platforms in favor of a single, unified system, drastically reducing operational costs and simplifying its global HR processes. This demonstrates how decommissioning is not just about deletion but about strategic consolidation and simplification.

When to Use This Approach

Decommissioning is the optimal strategy when a thorough portfolio analysis reveals applications with low business value and high maintenance costs. It is particularly effective after mergers and acquisitions, where redundant systems for functions like finance or HR are common. This approach is also ideal for applications whose functionality has been fully absorbed by more modern, comprehensive platforms like an ERP or CRM system. If an application supports a business process that is no longer relevant, retiring it is the most logical and cost-effective action.

This strategy is a powerful way to reduce technical debt and simplify your IT landscape, allowing focus to shift to systems that drive genuine business growth.

Implementation Considerations

A successful decommissioning project requires more than just "pulling the plug." It demands a structured and communicative approach to minimize disruption and risk. Before removing a system, perform a comprehensive business impact analysis to understand exactly who and what will be affected by its absence.

Key implementation tips include:

- Implement a Data Archival Strategy: Do not delete historical data. Establish a clear plan for archiving data from the retired system into a secure, accessible, and cost-effective cold storage solution, such as Amazon S3 Glacier or Azure Archive Storage. Ensure the data format is non-proprietary (e.g., CSV, JSON) for future accessibility.

- Ensure Regulatory Compliance: Verify that the decommissioning process, especially data handling and archival, adheres to all relevant industry regulations like GDPR, HIPAA, or Sarbanes-Oxley. Document the entire process, including data destruction certificates for any decommissioned hardware, to create a clear audit trail.

- Communicate with Stakeholders: Develop a clear communication plan for all users and dependent system owners. Inform them of the decommissioning timeline, the rationale behind the decision, and any alternative solutions or processes they need to adopt. Provide read-only access for a set period before the final shutdown.

- Document the Decision Rationale: Formally document why the system is being retired, including the cost-benefit analysis (TCO vs. value), the results of the impact assessment, and the data archival plan. This documentation is invaluable for future reference and for explaining the decision to leadership and auditors.
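
As an illustration of archiving to a non-proprietary format, the sketch below exports a table to CSV and records a row count and SHA-256 checksum so the archive can be verified later. It uses an in-memory SQLite database as a stand-in for the retired system, and the table, columns, and function names are hypothetical. Uploading the file to cold storage is out of scope here.

```python
import csv
import hashlib
import io
import sqlite3


def export_table_to_csv(connection, table, columns):
    """Return (csv_text, row_count) for a table, with a header and stable row order."""
    # Table and column names are trusted constants here, not user input.
    query = f"SELECT {', '.join(columns)} FROM {table} ORDER BY {columns[0]}"
    rows = connection.execute(query).fetchall()
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(columns)
    writer.writerows(rows)
    return buffer.getvalue(), len(rows)


def build_manifest(table, csv_text, row_count):
    """Record what was archived so its integrity can be checked later."""
    return {
        "table": table,
        "rows": row_count,
        "sha256": hashlib.sha256(csv_text.encode("utf-8")).hexdigest(),
    }


# Stand-in for the legacy database.
legacy = sqlite3.connect(":memory:")
legacy.execute("CREATE TABLE invoices (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)")
legacy.executemany(
    "INSERT INTO invoices VALUES (?, ?, ?)",
    [(2, "Globex", 80.5), (1, "Acme", 120.0)],
)

csv_text, row_count = export_table_to_csv(legacy, "invoices", ["id", "customer", "amount"])
manifest = build_manifest("invoices", csv_text, row_count)
print(csv_text)
print(manifest)
```

Storing the manifest next to the archive gives auditors a simple way to confirm that the data retrieved years later matches what was exported.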

6. Retaining (Revisit Later)

The Retaining strategy, often called "Revisit Later," is a conscious and strategic decision to do nothing with a specific legacy system for the time being. This approach acknowledges that not all systems are created equal, and modernization resources should be focused where they deliver the most significant business value. It involves actively choosing to keep an application in its current state, postponing any modernization investment until a more opportune time. This is not neglect; it's a calculated move within a broader portfolio of legacy system modernization strategies.

A prime example is found in the airline industry, where many core reservation systems, often decades-old mainframes running on COBOL, are retained. While customer-facing websites and mobile apps are continuously rebuilt and modernized, the underlying booking engine remains untouched due to its stability, complexity, and the sheer risk associated with changing it. The business value is created at the user-experience layer, making the modernization of the core system a lower priority.

When to Use This Approach

Retaining a system is the right choice when the cost and risk of modernization far outweigh the current business value it provides. This is common for systems with low usage, those slated for decommissioning in the near future, or applications that are stable and perform their function without causing significant issues. It's a pragmatic approach for organizations with limited budgets or technical teams, allowing them to concentrate their efforts on modernizing high-impact, customer-facing, or revenue-generating applications first. If a system "just works" and is not a direct impediment to business goals, retaining it can be the most sensible financial decision.

Implementation Considerations

Effectively retaining a system requires active management, not passive avoidance. The goal is to contain its risk and cost while you modernize elsewhere.

Key implementation tips include:

- Establish Clear Retention Criteria: Create a formal framework for deciding which systems to retain. This should include metrics like business criticality, maintenance cost (TCO), security vulnerability level (CVSS scores), and user count. This provides a data-driven basis for the decision.

- Implement "Ring-Fencing": Isolate the legacy system to prevent its issues from affecting other modernized components. Use an API gateway to create an anti-corruption layer (ACL) that exposes only necessary data and functions. This buffer layer allows modern applications to interact with the legacy system via clean, well-defined contracts without being tightly coupled to its outdated architecture.

- Schedule Regular Reassessments: The decision to retain is not permanent. Institute a mandatory review cycle, perhaps quarterly or annually, to re-evaluate if the conditions have changed. Key triggers for reassessment include new security vulnerabilities, a significant increase in maintenance costs, or a shift in business strategy that increases the system's importance.

- Maintain Knowledge and Documentation: As a system ages, institutional knowledge is a major risk. Document its architecture, dependencies, and operational procedures meticulously in a central wiki or knowledge base (e.g., Confluence). Ensure that at least two engineers are cross-trained on its maintenance and incident response to avoid a single point of failure (SPOF).
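
The anti-corruption layer described above can be as small as one translation function. The sketch below assumes an invented fixed-width record layout (real legacy formats will differ) and converts it into a clean domain object, so that modern code never handles offsets, padding, or integer cents directly.

```python
from dataclasses import dataclass
from datetime import date, datetime
from decimal import Decimal


@dataclass(frozen=True)
class Booking:
    """Clean domain model exposed to modern applications."""

    reference: str
    passenger: str
    travel_date: date
    fare: Decimal


# Hypothetical fixed-width layout: (field name, start offset, end offset).
LEGACY_LAYOUT = [
    ("reference", 0, 6),
    ("passenger", 6, 26),
    ("travel_date", 26, 34),  # YYYYMMDD
    ("fare_cents", 34, 43),   # zero-padded integer cents
]
RECORD_LENGTH = 43


def translate_legacy_record(record: str) -> Booking:
    """Translate one legacy record; legacy quirks stay inside this function."""
    if len(record) != RECORD_LENGTH:
        raise ValueError(f"expected {RECORD_LENGTH} characters, got {len(record)}")
    raw = {name: record[start:end] for name, start, end in LEGACY_LAYOUT}
    return Booking(
        reference=raw["reference"].strip(),
        passenger=raw["passenger"].strip(),
        travel_date=datetime.strptime(raw["travel_date"], "%Y%m%d").date(),
        fare=Decimal(int(raw["fare_cents"])) / 100,
    )


legacy_record = "AB12CD" + "DOE/JANE".ljust(20) + "20250314" + "000045900"
booking = translate_legacy_record(legacy_record)
print(booking)
```

If the legacy format ever changes, or the system is eventually replaced, only this translation layer needs to be updated.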

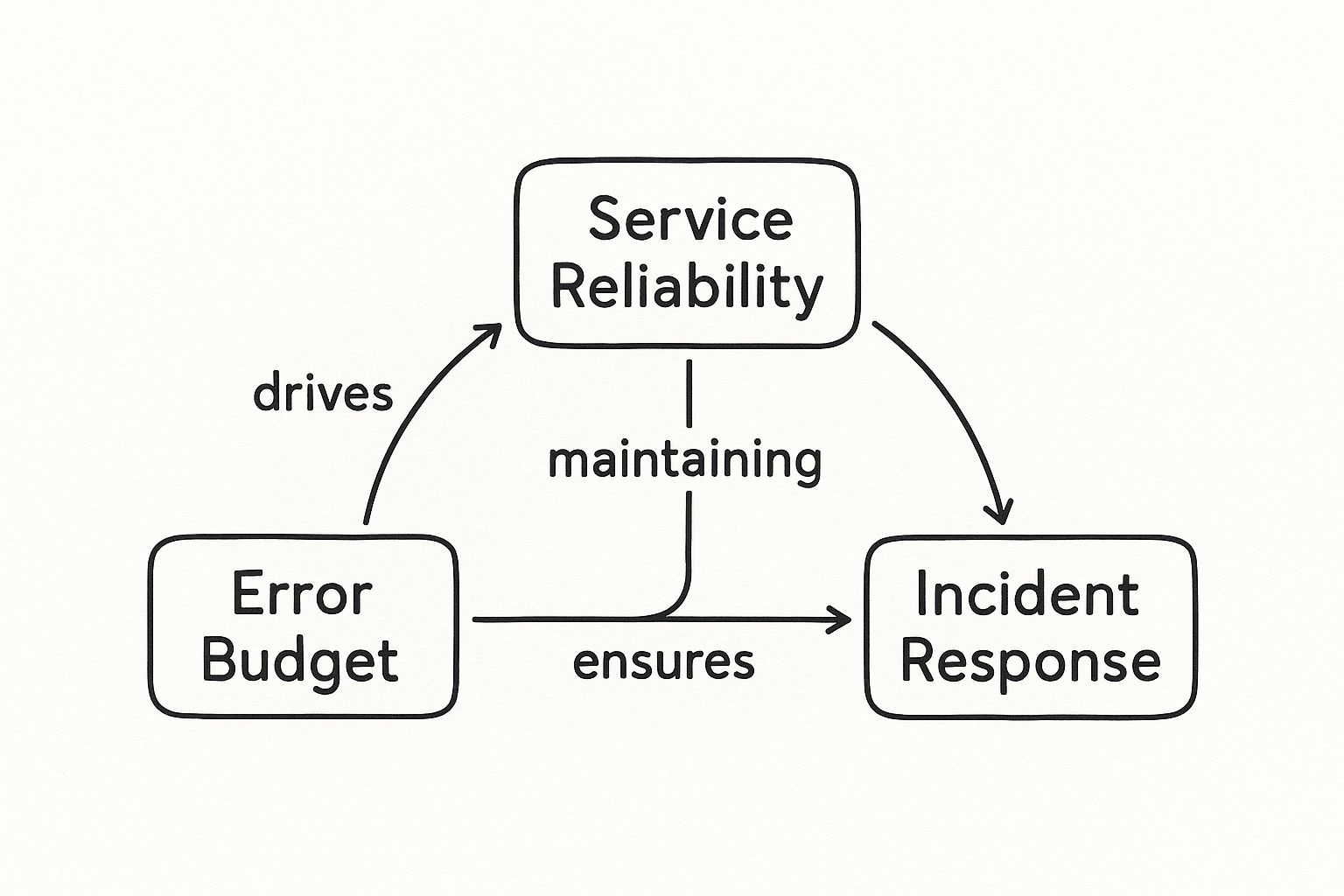

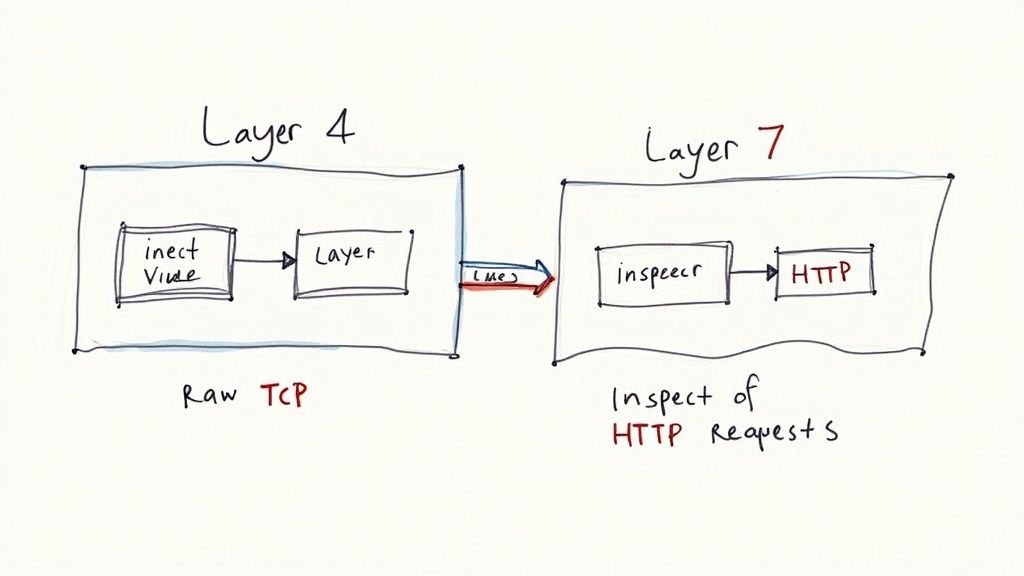

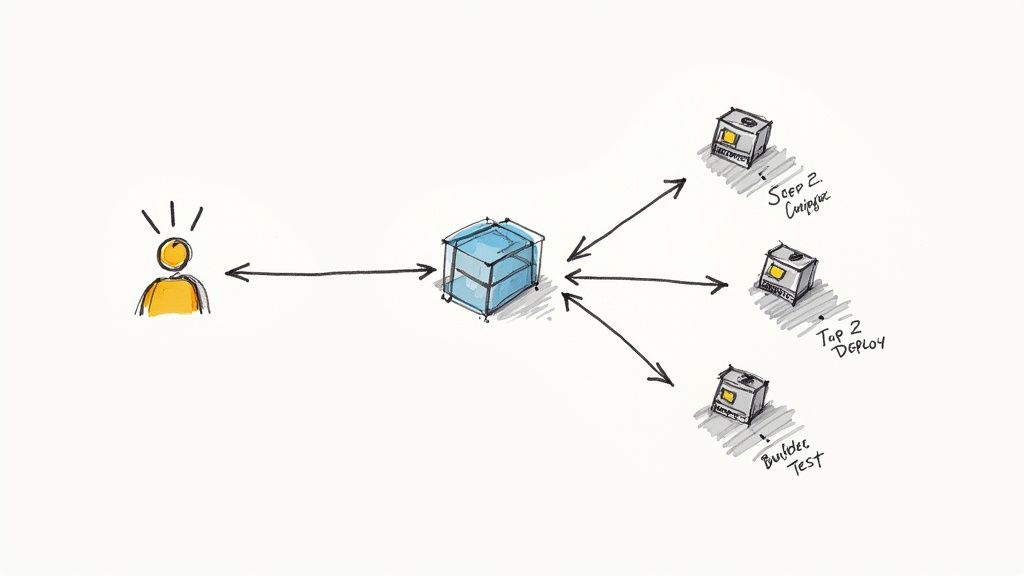

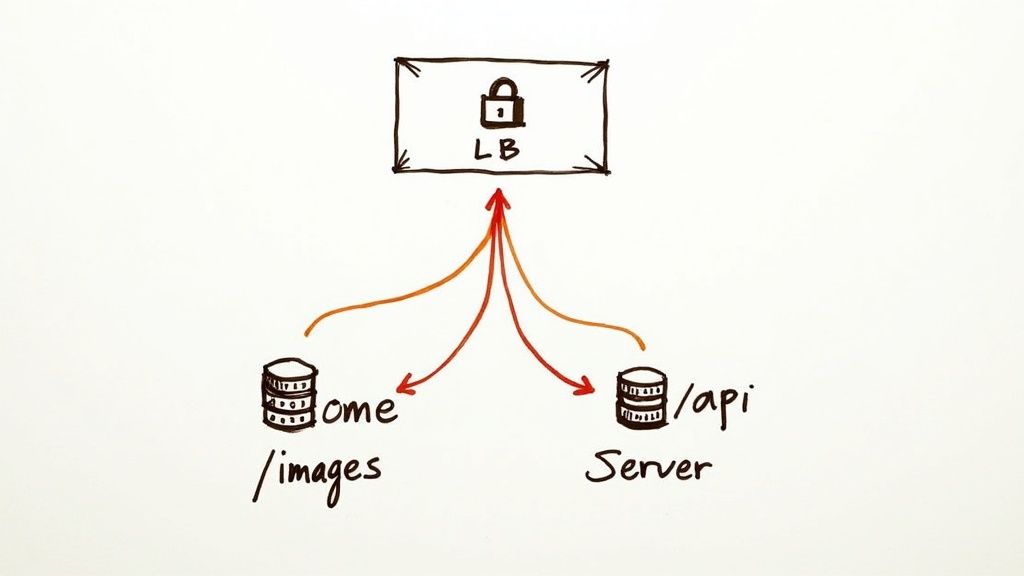

7. Strangler Fig Pattern

The Strangler Fig Pattern is one of the most powerful and risk-averse legacy system modernization strategies. Named by Martin Fowler, this approach draws an analogy from the strangler fig plant that grows around a host tree, eventually replacing it. Similarly, this pattern involves incrementally building new functionality around the legacy system, gradually intercepting and routing calls to new services until the old system is "strangled" and can be safely decommissioned. This method allows for a controlled, piece-by-piece transformation without the high risk of a "big bang" rewrite.

A prime example is Monzo Bank's transition from a monolithic core banking system to a distributed microservices architecture. By implementing the Strangler Fig Pattern, Monzo could develop and deploy new services independently, routing specific functions like payments or account management to the new components while the legacy core remained operational. This strategy enabled them to innovate rapidly while ensuring continuous service availability for their customers.

When to Use This Approach

The Strangler Fig Pattern is ideal for large, complex, and mission-critical legacy systems where a complete shutdown for replacement is not feasible due to business continuity risks. It's the perfect choice when modernization needs to happen over an extended period, allowing development teams to deliver value incrementally. This approach is particularly effective when migrating a monolith to a microservices architecture, as it provides a structured path for decomposing the application domain by domain.

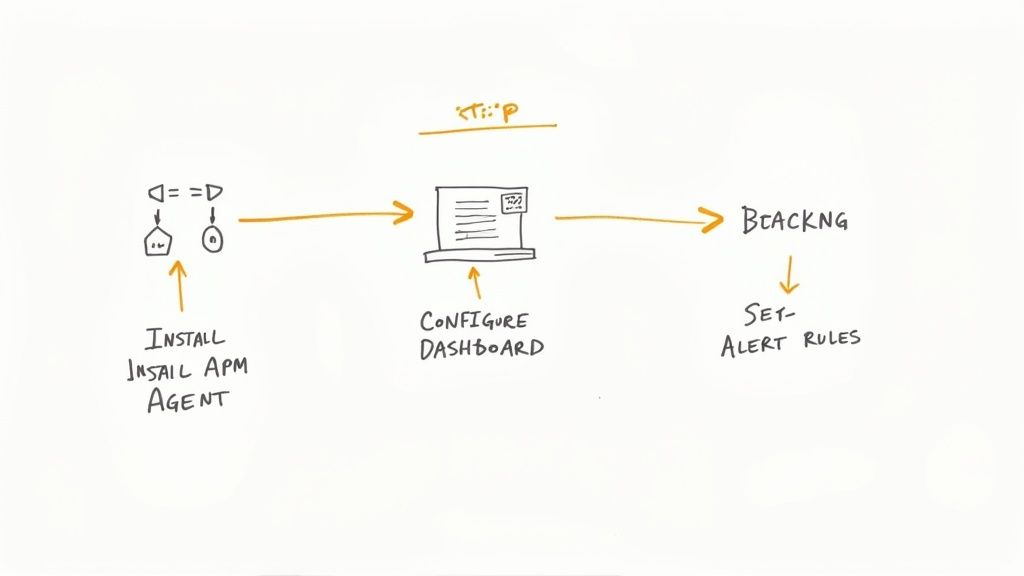

This video from Martin Fowler provides a detailed explanation of the pattern and its application.

As the pattern demonstrates, it mitigates risk by allowing for gradual, validated changes over time.

Implementation Considerations

Successful execution of the Strangler Fig Pattern hinges on an intelligent routing layer and careful service decomposition. This pattern is foundational to many successful microservices migrations; for a deeper dive, explore these common microservices architecture design patterns that complement this strategy.

Key implementation tips include:

- Use an API Gateway: Implement an API gateway (e.g., Kong, Apigee) or a reverse proxy (e.g., NGINX, HAProxy) to act as the "facade" that intercepts all incoming requests. This layer is critical for routing traffic, applying policies, and directing requests to either the legacy monolith or a new microservice based on URL path, headers, or other criteria.

- Start at the Edge: Begin by identifying and rebuilding functionality at the edges of the legacy system, such as a specific user interface module or a single API endpoint. These components often have fewer dependencies and can be replaced with lower risk, providing an early win and demonstrating the pattern's value.

- Maintain Data Consistency: Develop a robust data synchronization strategy. As you build new services that own their data, you may need temporary solutions like an event-driven architecture using Kafka to broadcast data changes, or a data virtualization layer to provide a unified view of data residing in both old and new systems.

- Implement Robust Monitoring: Establish comprehensive monitoring and feature flagging from day one. Use tools like Prometheus and Grafana to track the latency and error rates of new services. Employ feature flags (using services like LaunchDarkly) to dynamically control traffic routing, allowing you to instantly roll back to the legacy system if a new service fails.
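
The routing decision at the heart of the facade can be sketched in a few lines. This is a simplified illustration, not how any particular gateway or feature-flag service implements it: the routing table, backend names, and percentages are hypothetical. Each path prefix carries a rollout percentage, users are hashed into stable buckets so that routing is sticky, and setting a percentage to 0 sends all traffic back to the legacy system.

```python
import hashlib

# Hypothetical rollout table: path prefix -> percent of users sent to the new service.
ROLLOUT = {"/payments": 100, "/accounts": 25}


def bucket_for(user_id: str) -> int:
    """Map a user to a stable bucket in [0, 100) so routing is sticky per user."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % 100


def choose_backend(path: str, user_id: str, rollout=ROLLOUT) -> str:
    """Return which backend should serve this request."""
    for prefix, percent in rollout.items():
        # Match the prefix itself or anything beneath it, but not "/paymentsx".
        if path == prefix or path.startswith(prefix + "/"):
            if bucket_for(user_id) < percent:
                return "new-service"
            return "legacy-monolith"
    return "legacy-monolith"  # anything not yet migrated stays on the monolith


print(choose_backend("/payments/123", "user-1"))  # fully migrated prefix
print(choose_backend("/reports/q1", "user-1"))    # not migrated yet
# Instant rollback: drop the percentage to 0 without redeploying either system.
print(choose_backend("/payments/123", "user-1", {"/payments": 0}))
```

Hashing the user ID rather than picking randomly per request keeps each user's experience consistent while a prefix is only partially rolled out.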

Legacy Modernization Strategies Comparison

| Strategy | Implementation Complexity | Resource Requirements | Expected Outcomes | Ideal Use Cases | Key Advantages |
|---|---|---|---|---|---|
| Lift and Shift (Rehosting) | Low | Minimal code changes, automated tools | Fast migration, preserves legacy logic | Quick cloud migration, minimal disruption | Fastest approach, low initial risk |
| Refactoring (Re-architecting) | High | Significant development effort, expert skills | Optimized, scalable, cloud-native applications | Long-term modernization, performance boost | Maximum benefit, improved maintainability |
| Replatforming (Lift-Tinker-Shift) | Medium | Moderate coding and platform changes | Partial modernization with improved efficiency | Balanced modernization, ROI-focused | Better ROI than lift-and-shift, manageable risk |
| Repurchasing (Replace with SaaS) | Low to Medium | Vendor solution adoption, data migration | Eliminates custom code maintenance, SaaS benefits | When suitable SaaS solutions exist | Eliminates technical debt, rapid deployment |
| Retiring (Decommissioning) | Low to Medium | Analysis and archival resources | Cost savings by removing unused systems | Obsolete or redundant applications | Cost reduction, simplified IT environment |
| Retaining (Revisit Later) | Low | Minimal ongoing maintenance | Maintains legacy with potential future upgrade | Low-impact systems, resource constraints | Focus on high-priority modernization, cost-effective |
| Strangler Fig Pattern | High | Incremental development, complex routing | Gradual system replacement with minimal disruption | Gradual migration, risk-controlled modernization | Minimal disruption, continuous operation |

From Strategy to Execution: Partnering for Success

Navigating the landscape of legacy system modernization strategies requires more than just understanding the theory behind each approach. As we've explored, the path you choose, whether it's a straightforward Rehost, an intricate Refactor, or a gradual transition using the Strangler Fig pattern, carries significant implications for your budget, timeline, and future technical capabilities. The decision is not merely a technical one; it is a strategic business decision that directly impacts your ability to innovate, scale, and compete in a rapidly evolving digital marketplace.

A successful modernization project hinges on moving from a well-defined strategy to flawless execution. This transition is where many initiatives falter. The complexities of data migration, maintaining business continuity, managing stakeholder expectations, and orchestrating new cloud-native tooling demand specialized expertise and meticulous planning. Choosing the wrong path can lead to budget overruns and technical debt, while the right strategy, executed perfectly, unlocks immense value.

Key Takeaways for Your Modernization Journey

To ensure your efforts translate into tangible business outcomes, keep these core principles at the forefront:

- Align Strategy with Business Goals: The "best" modernization strategy is the one that most effectively supports your specific business objectives. Don't chase trends; select an approach like Replatforming or Repurchasing because it solves a concrete problem, such as reducing operational costs or accelerating feature delivery.

- Embrace Incremental Change: For complex systems, a phased approach like the Strangler Fig pattern is often superior to a "big bang" rewrite. It de-risks the project by delivering value incrementally, allowing for continuous feedback and adaptation while minimizing disruption to core business operations.

- Prioritize Data Integrity: Your data is one of your most valuable assets. Every strategy, from a simple Lift and Shift to a complete Rebuild, must include a robust plan for data migration, validation, and security to ensure a seamless and reliable transition.

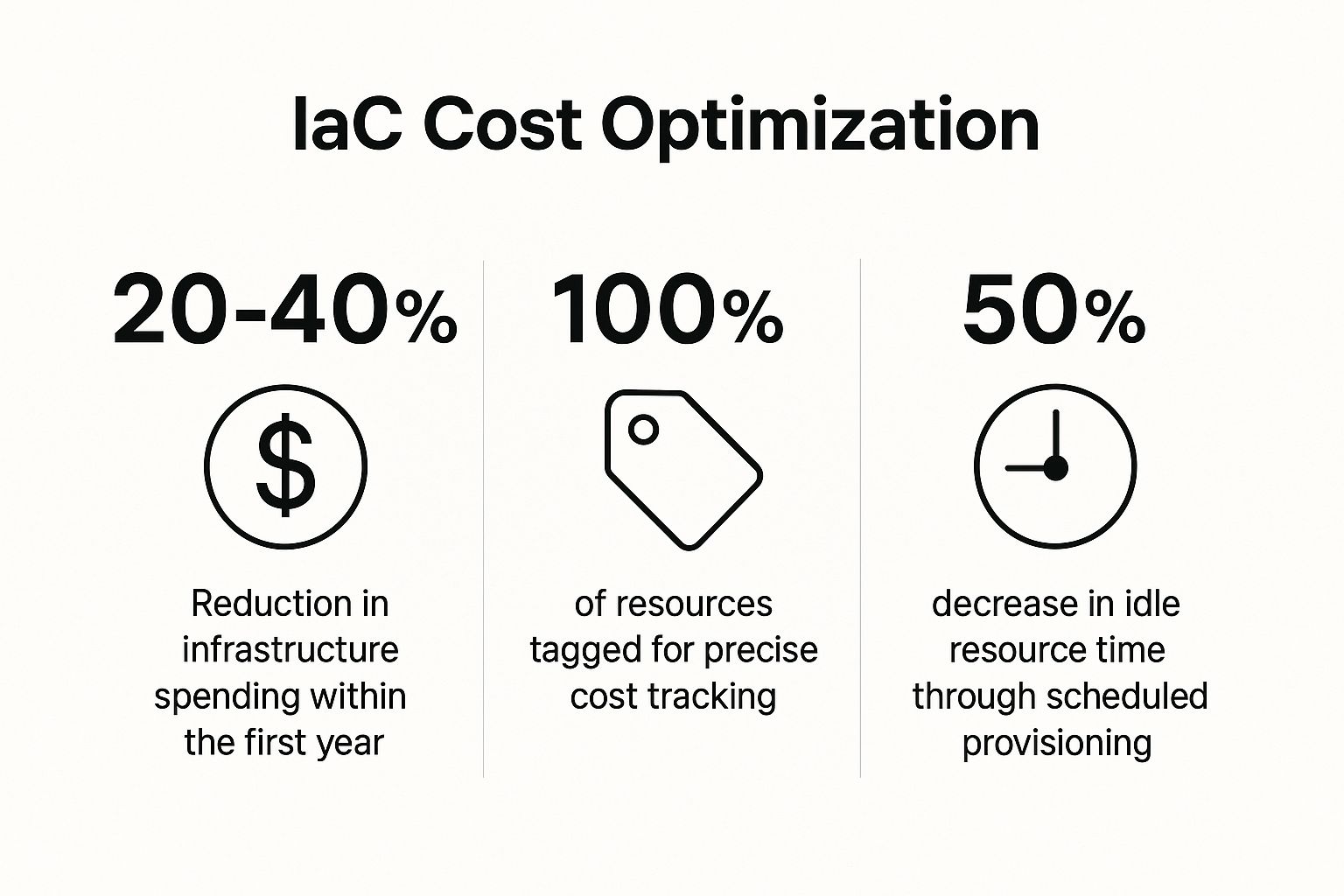

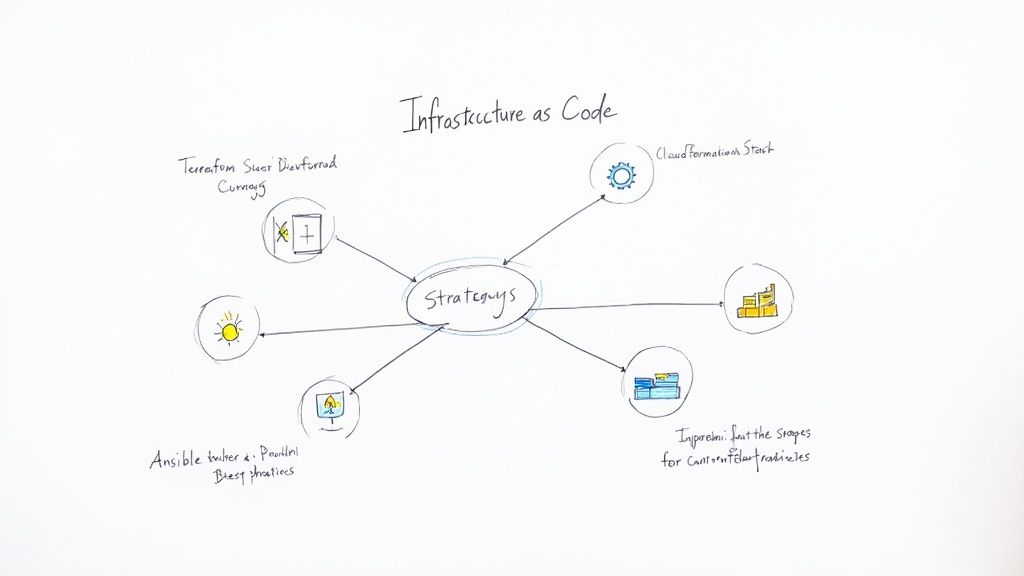

- Invest in Automation and Tooling: Modernization is an opportunity to build a foundation for future agility. Leverage Infrastructure as Code (IaC) tools like Terraform, containerization with Docker and Kubernetes, and robust CI/CD pipelines to automate deployment, enhance resilience, and empower your development teams.

Ultimately, mastering these legacy system modernization strategies is about future-proofing your organization. It's about transforming your technology from a constraint into a catalyst for growth. By carefully selecting and executing the right approach, you create a resilient, scalable, and adaptable technical foundation that empowers you to respond to market changes with speed and confidence. This transformation is not just an IT project; it is a fundamental driver of long-term competitive advantage.

Ready to turn your modernization plan into a reality? OpsMoon connects you with a curated network of elite, pre-vetted DevOps and Platform Engineering experts to execute your chosen strategy flawlessly. Schedule your free work planning session today and let us match you with the precise talent needed to accelerate your journey to the cloud.