Running PostgreSQL on Kubernetes represents a significant architectural evolution, migrating database management from static, imperative processes to a dynamic, declarative paradigm. This integration aligns the data layer with the cloud-native ecosystem housing your applications, establishing a unified, automated operational model.

Why Run PostgreSQL on Kubernetes in Production

Let's be clear: deciding to run a stateful workhorse like PostgreSQL on Kubernetes is a major architectural choice. This isn't just about containerizing a database; it's a fundamental shift in how you manage your data persistence layer. Before addressing the "how," you must solidify the "why," which invariably ties back to your system's non-functional requirements, such as availability, scalability, and recoverability.

This approach establishes an incredibly consistent environment from development through production. Your database lifecycle begins to adhere to the same declarative configuration and GitOps workflows as your stateless applications, eliminating operational silos.

The Rise of a Standardized Platform

Kubernetes is the de facto standard for container orchestration, with adoption rates hitting 96% and market dominance at 92%. This isn't transient hype; enterprises are standardizing on it for the automation and operational efficiencies it provides.

This widespread adoption means your engineering teams can leverage existing Kubernetes expertise to manage the database, significantly flattening the learning curve and reducing the operational burden of maintaining disparate, bespoke database management toolchains.

By treating the database as another workload within the cluster, you gain tangible benefits:

- Infrastructure Consistency: The same YAML manifests, CI/CD pipelines, and monitoring stacks (e.g., Prometheus/Grafana) used for your applications can now manage your database's entire lifecycle.

- Developer Self-Service: Developers can provision production-like database instances on-demand, within platform-defined guardrails, drastically accelerating development and testing cycles.

- Cloud Neutrality: A Kubernetes-based PostgreSQL deployment is inherently portable. You can migrate the entire application stack—services and data—between on-premise data centers and various cloud providers with minimal refactoring.

Unlocking GitOps for Databases

Perhaps the most compelling advantage is managing database infrastructure via GitOps. This paradigm replaces manual configuration tweaks and imperative scripting against production databases with a fully declarative model. Your entire PostgreSQL cluster configuration—from the Postgres version and replica count to backup schedules and pg_hba.conf rules—is defined as code within a Git repository.

This declarative approach doesn't just automate deployments. It establishes an immutable, auditable log of every change to your database infrastructure. For compliance audits (e.g., SOC 2, ISO 27001) and root cause analysis in a production environment, this is invaluable.

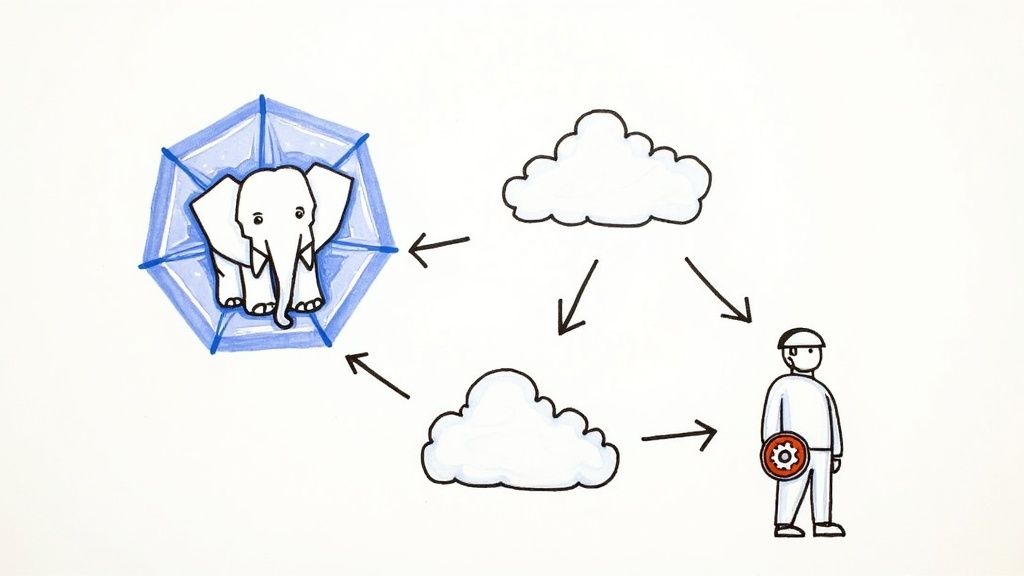

Modern Kubernetes Operators extend this concept by encapsulating the complex logic of database administration. These operators function as automated DBAs, handling mission-critical tasks like high availability (HA), automated failover, backups, and point-in-time recovery (PITR). They are the core technology that makes running PostgreSQL on Kubernetes not just feasible, but a strategically sound choice for production workloads.

Choosing Your Deployment Strategy

Selecting the right deployment strategy for PostgreSQL on Kubernetes is a foundational decision that dictates operational workload, scalability patterns, and future flexibility. This choice balances control against convenience, presenting three primary technical paths, from a completely manual implementation to a fully managed service.

Manual StatefulSets: The DIY Approach

Employing manual StatefulSets is the most direct, low-level method for running PostgreSQL on Kubernetes. This approach grants you absolute, granular control over every component of your database cluster. You are responsible for scripting all operational logic: pod initialization, primary-replica configuration, backup orchestration, and failover procedures.

This level of control allows for deep customization of PostgreSQL parameters and the implementation of bespoke high-availability topologies. However, this power comes at a significant operational cost. Your team must build and maintain the complex automation that a production-grade operator provides out-of-the-box.

Manual StatefulSets are generally reserved for teams with deep, dual expertise in both Kubernetes internals and PostgreSQL administration. If you have a non-standard requirement, such as a unique replication topology, that off-the-shelf operators cannot satisfy, this may be a viable option. For most use cases, the required engineering investment is a significant barrier.

Kubernetes Operators: The Automation Sweet Spot

PostgreSQL Operators represent a paradigm shift for managing stateful applications on Kubernetes. An Operator is a domain-specific controller that extends the Kubernetes API to automate complex operational tasks. It effectively encodes the knowledge of an experienced DBA into software.

With an Operator, you manage your database cluster through a custom resource whose schema is defined by a Custom Resource Definition (CRD). Instead of manipulating individual Pods, Services, and ConfigMaps, you declare the desired state in a high-level YAML manifest. For example: "I require a three-node PostgreSQL 16 cluster with continuous archiving to an S3-compatible object store." The Operator then works continuously to reconcile the cluster's current state with your declared state.

- Automated Failover: The Operator continuously monitors the primary instance's health. Upon failure detection, it orchestrates a failover by promoting a suitable replica, updating the primary service endpoint, and ensuring minimal application downtime.

- Simplified Backups: Backup schedules and retention policies are defined declaratively in the manifest. The Operator manages the entire backup lifecycle, including base backups and continuous WAL (Write-Ahead Log) archiving for Point-in-Time Recovery (PITR).

- Effortless Upgrades: To apply a minor version update (e.g., 16.1 to 16.2), you change a single line (the image tag) in the Cluster manifest. The Operator executes a controlled rolling update, minimizing service disruption.
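The reconcile behaviour described above can be sketched in a few lines of Python. This is a minimal model, not CloudNativePG's code: `ClusterState` and `plan_actions` are hypothetical names, and a real Operator also handles health checks, switchover ordering, and storage. It shows only the core idea: compare the declared state with the observed state and emit the steps that close the gap.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterState:
    """Hypothetical, simplified view of a PostgreSQL cluster."""
    instances: int
    image: str


def plan_actions(desired: ClusterState, observed: ClusterState) -> list[str]:
    """Return the steps that would move `observed` toward `desired`."""
    actions: list[str] = []
    # Scale up: create the missing instances, numbered after the existing ones.
    for n in range(observed.instances + 1, desired.instances + 1):
        actions.append(f"create instance {n}")
    # Scale down: remove the highest-numbered instances first.
    for n in range(observed.instances, desired.instances, -1):
        actions.append(f"delete instance {n}")
    # Version drift: roll out the new image, replicas first and primary last.
    if observed.image != desired.image:
        actions.append(f"rolling update to {desired.image} (replicas first, primary last)")
    return actions


desired = ClusterState(instances=3, image="ghcr.io/cloudnative-pg/postgresql:16.2")
observed = ClusterState(instances=2, image="ghcr.io/cloudnative-pg/postgresql:16.1")
print(plan_actions(desired, observed))
```

Once the planned actions have been carried out, calling `plan_actions` again should return an empty list; repeating the comparison until that happens is, in essence, what a control loop does.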

This strategy strikes an optimal balance. You retain full control over your infrastructure and data while offloading complex, error-prone database management tasks to battle-tested automation. If you're managing infrastructure as code, our guide on combining Terraform with Kubernetes can help you build a fully declarative workflow.

Managed Services: The Hands-Off Option

The third path is to use a managed Database-as-a-Service (DBaaS) such as Amazon RDS for PostgreSQL, Google Cloud SQL for PostgreSQL, or Azure Database for PostgreSQL, with your Kubernetes workloads connecting to it as an external endpoint. This is the simplest option from an operational perspective, abstracting away nearly all underlying infrastructure complexity.

You receive a PostgreSQL endpoint, and the cloud provider manages patching, backups, availability, and scaling. It’s an excellent choice for teams that want to focus exclusively on application development and have no desire to manage database infrastructure.

This convenience involves trade-offs: reduced control over specific PostgreSQL configurations, vendor lock-in, and potentially less granular control over data residency and network policies. The total cost of ownership (TCO) can also be significantly higher than a self-managed solution, particularly at scale.

The industry is clearly converging on the Operator model. A 2023 Gartner analysis highlights a market shift toward cloud neutrality, with organizations increasingly leveraging Kubernetes with PostgreSQL for portability. Major cloud providers like Microsoft now endorse PostgreSQL operators like CloudNativePG as a standard for production workloads on Azure Kubernetes Service (AKS). This endorsement, detailed in a CNCF blog post on cloud-neutral PostgreSQL (CNCF.io), signals that Operators are a mature, production-ready standard.

To clarify the decision, here is a technical comparison of the three deployment strategies.

PostgreSQL on Kubernetes Deployment Method Comparison

| Attribute | Manual StatefulSets | PostgreSQL Operator (e.g., CloudNativePG) | Managed Cloud Service (DBaaS) |
|---|---|---|---|
| Operational Overhead | Very High. Requires deep, ongoing manual effort and custom scripting. | Low. Automates lifecycle management (failover, backups, upgrades). | Effectively Zero. Fully managed by the cloud provider. |
| Control & Flexibility | Maximum. Full control over PostgreSQL config, topology, and tooling. | High. Granular control via CRD, but within the Operator's framework. | Low to Medium. Limited to provider-exposed settings. |
| Speed of Deployment | Slow. Requires significant initial engineering to build automation. | Fast. Deploy a production-ready cluster with a single YAML manifest. | Very Fast. Provisioning via cloud console or API in minutes. |
| Required Expertise | Expert-level in both Kubernetes and PostgreSQL administration. | Intermediate Kubernetes knowledge. Operator handles DB expertise. | Minimal. Basic knowledge of the cloud provider's service is sufficient. |
| Portability | High. Can be deployed on any conformant Kubernetes cluster. | High. Operator-based; portable across any cloud or on-prem K8s. | Very Low. Tightly coupled to the specific cloud provider's ecosystem. |
| Cost (TCO) | Low to Medium. Primarily engineering and operational staff costs. | Low. Open-source options have no license fees. Staff costs are reduced. | High. Premium pricing for convenience, especially at scale. |
| Best For | Niche use cases requiring bespoke configurations; teams with deep in-house expertise. | Most production workloads seeking a balance of control and automation. | Teams prioritizing development speed over infrastructure control; smaller projects. |

Ultimately, the optimal choice is contingent on your team's skillset, application requirements, and business objectives. It comes down to finding the right balance: for most modern applications on Kubernetes, a well-supported PostgreSQL Operator is the ideal middle ground for teams that need serious automation but are not willing to give up essential control. You avoid the heavy lifting of manual StatefulSets without being locked into the rigidity of a managed service.

Let's transition from theory to practical implementation. Deploying PostgreSQL on Kubernetes with an Operator like CloudNativePG allows you to provision a production-ready database cluster from a single, declarative manifest, a stark contrast to the procedural complexity of manual StatefulSets.

The Cluster custom resource (CR) becomes the single source of truth for the database's entire lifecycle (its configuration, version, and architecture), making it a perfect fit for any GitOps workflow.

Installing the Operator

Before provisioning a PostgreSQL cluster, the operator itself must be installed into your Kubernetes cluster. This is typically accomplished by applying a single manifest provided by the project maintainers.

With CloudNativePG, this one-time setup deploys the controller manager, which acts as the reconciliation loop. It continuously watches for Cluster resources and takes action to create, update, or delete PostgreSQL instances to match your desired state.

```shell
# Example command to install the CloudNativePG operator
kubectl apply -f \
  https://raw.githubusercontent.com/cloudnative-pg/cloudnative-pg/release-1.23/releases/cnpg-1.23.0.yaml
```

Once executed, the operator pod will start in its dedicated namespace (typically cnpg-system), ready to manage PostgreSQL clusters cluster-wide. Proper cluster management is foundational; for a deeper dive, review our guide on Kubernetes cluster management tools.

Crafting a Production-Ready Cluster Manifest

With the operator running, you define your PostgreSQL cluster by creating a YAML manifest for the Cluster custom resource. This manifest is where you specify every critical parameter for a highly available, resilient production deployment.

Let's dissect a detailed manifest, focusing on production-grade fields.

```yaml
apiVersion: postgresql.cnpg.io/v1
kind: Cluster
metadata:
  name: postgres-production-db
spec:
  instances: 3
  imageName: ghcr.io/cloudnative-pg/postgresql:16.2
  primaryUpdateStrategy: unsupervised

  storage:
    size: 20Gi
    storageClass: "premium-iops"

  postgresql:
    parameters:
      shared_buffers: "512MB"
      max_connections: "200"

  # Hard anti-affinity: never place two instances of this cluster on the
  # same node. The operator generates the underlying podAntiAffinity rule.
  affinity:
    enablePodAntiAffinity: true
    podAntiAffinityType: required
    topologyKey: kubernetes.io/hostname

  # Quorum-based synchronous replication: each commit must be confirmed by
  # at least one standby before the client sees success.
  minSyncReplicas: 1
  maxSyncReplicas: 1
```

This manifest is a declarative specification for a resilient database system. Let's break down the key sections.

Defining Core Cluster Attributes

The initial fields establish the cluster's fundamental characteristics.

- instances: 3: This is the core of your high-availability strategy. It instructs the operator to provision a three-node cluster: one primary and two hot standbys, a standard robust configuration.

- imageName: ghcr.io/cloudnative-pg/postgresql:16.2: This explicitly pins the PostgreSQL container image version, preventing unintended automatic upgrades and ensuring a predictable, stable database environment.

- storage: We request 20Gi of persistent storage from the storageClass "premium-iops". This directive is crucial: it ensures the database volumes are provisioned on high-performance block storage rather than a slow default StorageClass that would create an I/O bottleneck for a production workload.

Ensuring High Availability and Data Integrity

The subsequent configuration blocks build in fault tolerance and data consistency.

The affinity section is non-negotiable for a genuine HA setup. With podAntiAffinityType set to required and topologyKey set to kubernetes.io/hostname, the operator adds a podAntiAffinity rule that instructs the Kubernetes scheduler never to co-locate two pods from this PostgreSQL cluster on the same node. If a node fails, the replicas are therefore running on other nodes, ready for failover. Because the rule is hard, the cluster needs at least three schedulable nodes; otherwise the third instance stays Pending.

This podAntiAffinity configuration is one of the most critical elements for eliminating single points of failure at the infrastructure level. It turns a set of pods into a genuinely fault-tolerant system.

Furthermore, minSyncReplicas and maxSyncReplicas define the data consistency model. Setting both to 1 enables quorum-based synchronous replication: every transaction must be written to the primary and confirmed by at least one replica (a quorum of two copies) before success is returned to the application. As long as a replica that confirmed the commit survives, a failover loses no committed data (RPO=0), because the operator promotes the most up-to-date replica.
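To see why a required anti-affinity rule matters, the following Python sketch models a placement as a mapping from pod name to node name. It is an illustration under simplifying assumptions (the pod and node names are hypothetical, and real scheduling weighs many more constraints): it flags nodes that host more than one instance and counts how many instances would remain after the worst single-node failure.

```python
from collections import Counter


def anti_affinity_violations(pod_to_node: dict[str, str]) -> list[str]:
    """Nodes hosting more than one instance of the cluster.

    Models a required podAntiAffinity rule with
    topologyKey=kubernetes.io/hostname: at most one instance per node.
    """
    per_node = Counter(pod_to_node.values())
    return sorted(node for node, count in per_node.items() if count > 1)


def survivors_after_worst_node_failure(pod_to_node: dict[str, str]) -> int:
    """Instances still running if the most heavily loaded node is lost."""
    per_node = Counter(pod_to_node.values())
    return len(pod_to_node) - max(per_node.values(), default=0)


spread = {"pg-1": "node-a", "pg-2": "node-b", "pg-3": "node-c"}
packed = {"pg-1": "node-a", "pg-2": "node-a", "pg-3": "node-b"}

for name, placement in (("spread", spread), ("packed", packed)):
    print(name, anti_affinity_violations(placement),
          survivors_after_worst_node_failure(placement))
```

With the spread placement, losing any one node still leaves two instances. With the packed placement, losing node-a takes out two of the three instances at once, which could include both the primary and its only synchronous standby.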

Upon applying this manifest (kubectl apply -f your-cluster.yaml), the operator executes a complex workflow: it creates the PersistentVolumeClaims and the instance Pods (CloudNativePG manages Pods directly rather than through a StatefulSet), initializes the primary database, configures streaming replication to the standbys, and creates the Kubernetes Services for application connectivity. This single command automates dozens of manual, error-prone steps, yielding a production-grade PostgreSQL cluster in minutes.

Mastering Storage and High Availability

The resilience of a stateful application like PostgreSQL is directly coupled to its storage subsystem. When running PostgreSQL on Kubernetes, data durability is contingent upon a correct implementation of Kubernetes' persistent storage and high availability mechanisms. This is non-negotiable for any production system.

You must understand three core Kubernetes concepts: PersistentVolumeClaims (PVCs), PersistentVolumes (PVs), and StorageClasses. A PVC is a PostgreSQL pod's request for storage, analogous to its requests for CPU or memory. A PV is the actual provisioned storage resource that fulfills that request, and a StorageClass defines different tiers of storage available (e.g., high-IOPS SSDs vs. standard block storage).

This declarative model abstracts storage management. Instead of manually provisioning and attaching disks to nodes, you declare your storage requirements in a manifest, and Kubernetes handles the underlying provisioning.
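As a rough illustration of that declarative model, here is a Python sketch of how a claim can be matched to a pre-provisioned volume: the claim names a StorageClass and a size, and the smallest unbound volume of that class that is large enough is chosen. This approximates static binding only; with dynamic provisioning (the usual case in the cloud), the StorageClass's provisioner simply creates a new volume. The `PersistentVolume` dataclass and `bind` function are hypothetical, and the quantity parser handles only binary suffixes such as Gi.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Binary suffixes only; real Kubernetes quantities support more forms.
_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def parse_quantity(quantity: str) -> int:
    """Convert a quantity such as '20Gi' to bytes."""
    match = re.fullmatch(r"(\d+)(Ki|Mi|Gi|Ti)", quantity)
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    return int(match.group(1)) * _UNITS[match.group(2)]


@dataclass
class PersistentVolume:
    name: str
    storage_class: str
    capacity: str
    bound: bool = False


def bind(storage_class: str, request: str,
         volumes: list[PersistentVolume]) -> Optional[PersistentVolume]:
    """Bind a claim to the smallest free volume of the right class and size."""
    needed = parse_quantity(request)
    candidates = [
        pv for pv in volumes
        if not pv.bound
        and pv.storage_class == storage_class
        and parse_quantity(pv.capacity) >= needed
    ]
    if not candidates:
        return None  # a dynamic provisioner would create a new volume here
    best = min(candidates, key=lambda pv: parse_quantity(pv.capacity))
    best.bound = True
    return best


volumes = [
    PersistentVolume("pv-standard-50", "standard", "50Gi"),
    PersistentVolume("pv-fast-100", "premium-iops", "100Gi"),
    PersistentVolume("pv-fast-25", "premium-iops", "25Gi"),
    PersistentVolume("pv-fast-10", "premium-iops", "10Gi"),
]
first = bind("premium-iops", "20Gi", volumes)
print(first.name if first else None)
```

Note that the larger "standard" volume is never considered for a "premium-iops" claim: the class, not just the size, decides which tier of storage the database lands on.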

Choosing the Right Storage Backend

The choice of storage backend directly impacts database performance and durability. You must select a StorageClass that maps to a storage solution designed for the high I/O demands of a production database.

Common storage patterns include:

- Cloud Provider Block Storage: This is the most straightforward approach in cloud environments like AWS, GCP, or Azure. The StorageClass provisions services like EBS, Persistent Disk, or Azure Disk, offering high reliability and performance.

- Network Attached Storage (NAS): Solutions like NFS can be viable but often become a write performance bottleneck for database workloads. Use with caution.

- Distributed Storage Systems: For maximum performance and flexibility, particularly in on-premise or multi-cloud deployments, systems like Ceph or Portworx are excellent choices. They offer advanced capabilities like storage-level synchronous replication, which can significantly reduce failover times.

A critical error is using the default StorageClass without verifying its underlying provisioner. For a production PostgreSQL workload, you must explicitly select a class that guarantees the IOPS and durability required by your application's service-level objectives (SLOs).

Architecting for High Availability and Failover

With a robust storage foundation, the next challenge is ensuring the database can survive node failures and network partitions. A mature Kubernetes operator automates the complex choreography of high availability (HA).

The operator continuously monitors the health of the primary PostgreSQL instance. If it detects a failure (e.g., the primary pod becomes unresponsive), it initiates an automated failover. It manages the entire leader election process, promoting a healthy, up-to-date replica to become the new primary.

Crucially, the operator also manages the Kubernetes Service that acts as the stable connection endpoint for your applications. When a failover occurs, the operator promptly points that Service at the newly promoted primary pod; CloudNativePG does this by updating the role labels on the instance pods, which the read-write Service selects on. From the application's perspective, the endpoint remains constant: existing connections drop, but clients reconnect to the same address, minimizing downtime.
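The promotion logic can be sketched in Python. This is a simplified model, not the operator's actual algorithm: it assumes each replica reports the WAL position (LSN) it has replayed, promotes the healthy replica that is furthest ahead, and models the read-write Service as "whichever pod currently holds the primary role". `Instance`, `fail_over`, and `rw_endpoint` are hypothetical names.

```python
from dataclasses import dataclass


def parse_lsn(lsn: str) -> int:
    """Convert a PostgreSQL LSN such as '0/3000060' into a comparable integer."""
    high, low = lsn.split("/")
    return (int(high, 16) << 32) | int(low, 16)


@dataclass
class Instance:
    name: str
    healthy: bool
    replayed_lsn: str  # last WAL position replayed (or written, for the primary)
    role: str = "replica"


def fail_over(instances: list[Instance]) -> str:
    """Promote the healthy replica that has replayed the most WAL."""
    candidates = [i for i in instances if i.role == "replica" and i.healthy]
    if not candidates:
        raise RuntimeError("no healthy replica available for promotion")
    winner = max(candidates, key=lambda i: parse_lsn(i.replayed_lsn))
    for inst in instances:
        if inst.role == "primary":
            inst.role = "demoted"  # no longer selected by the rw endpoint
    winner.role = "primary"
    return winner.name


def rw_endpoint(instances: list[Instance]) -> str:
    """Model the read-write Service: it targets whichever pod is primary."""
    return next(i.name for i in instances if i.role == "primary")


cluster = [
    Instance("pg-1", healthy=False, replayed_lsn="0/5000000", role="primary"),
    Instance("pg-2", healthy=True, replayed_lsn="0/4FFFF00"),
    Instance("pg-3", healthy=True, replayed_lsn="0/5000000"),
]
print("before:", rw_endpoint(cluster))
fail_over(cluster)
print("after:", rw_endpoint(cluster))
```

The application keeps using the same endpoint throughout; only the pod behind it changes, which is why a promoted replica that is fully caught up (pg-3 here) matters more than which replica happens to be first in the list.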

Synchronous vs Asynchronous Replication Trade-Offs

A key architectural decision is the choice between synchronous and asynchronous replication, typically configured via a single field in the operator's CRD.

- Asynchronous Replication: The primary commits a transaction locally and then sends the WAL records to replicas without waiting for acknowledgement. This offers the lowest write latency but introduces a risk of data loss (RPO > 0) if the primary fails before the transaction is replicated.

- Synchronous Replication: The primary waits for at least one replica to confirm it has received and durably written the transaction to its own WAL before acknowledging the commit to the client. This guarantees zero data loss (RPO=0) at the cost of slightly increased write latency.
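The difference between the two modes can be made concrete with a small Python model. It treats commits and standby progress as integer WAL positions (a simplification), and it assumes every standby survives the primary's failure and the most advanced one is promoted. With required=0 (asynchronous), the client is told about commits the standbys have not flushed yet; with required=1 (synchronous), such a commit simply waits, so nothing acknowledged can be lost. The function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Standby:
    name: str
    flushed_lsn: int  # highest WAL position durably written on this standby


def acknowledged(commits: list[int], standbys: list[Standby], required: int) -> list[int]:
    """Commits the client has been told succeeded.

    required=0 models asynchronous replication (ack after the local flush);
    required>=1 models quorum synchronous replication (ack after that many
    standbys flushed the commit). Unconfirmed commits are still waiting.
    """
    return [
        lsn for lsn in commits
        if sum(s.flushed_lsn >= lsn for s in standbys) >= required
    ]


def lost_on_failover(acked: list[int], standbys: list[Standby]) -> list[int]:
    """Acknowledged commits missing from the most advanced standby."""
    best = max(s.flushed_lsn for s in standbys)
    return [lsn for lsn in acked if lsn > best]


commits = [100, 200, 300]  # WAL positions of three commits on the primary
standbys = [Standby("pg-2", flushed_lsn=200), Standby("pg-3", flushed_lsn=150)]

for mode, required in (("async", 0), ("sync", 1)):
    acked = acknowledged(commits, standbys, required)
    print(mode, "acked:", acked, "lost:", lost_on_failover(acked, standbys))
```

In the asynchronous run the commit at position 300 was acknowledged but exists only on the failed primary; in the synchronous run that same commit is still waiting for a standby, so the client never saw it succeed.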

For most business-critical systems, synchronous replication is the recommended approach. The added write latency (each commit waits for at least one network round trip to a standby) is usually a small price for the guarantee of data integrity during a failover.

Finally, never trust an untested failover mechanism. Conduct chaos engineering experiments: delete the primary pod, cordon its node, or inject network latency to simulate a real-world outage. You must empirically validate that the operator performs the failover correctly and that your application reconnects seamlessly. This is the only way to ensure your HA architecture will function as designed when it matters most.

Implementing Backups and Performance Tuning

A PostgreSQL cluster on Kubernetes is not production-ready without a robust, tested backup and recovery strategy that stores data in a durable, off-site location. Similarly, an untuned database is a latent performance bottleneck.

Modern PostgreSQL operators have made disaster recovery (DR) a declarative process. They orchestrate scheduling, log shipping, and restoration, allowing you to manage your entire backup strategy from a YAML manifest.

Automating Backups and Point-in-Time Recovery

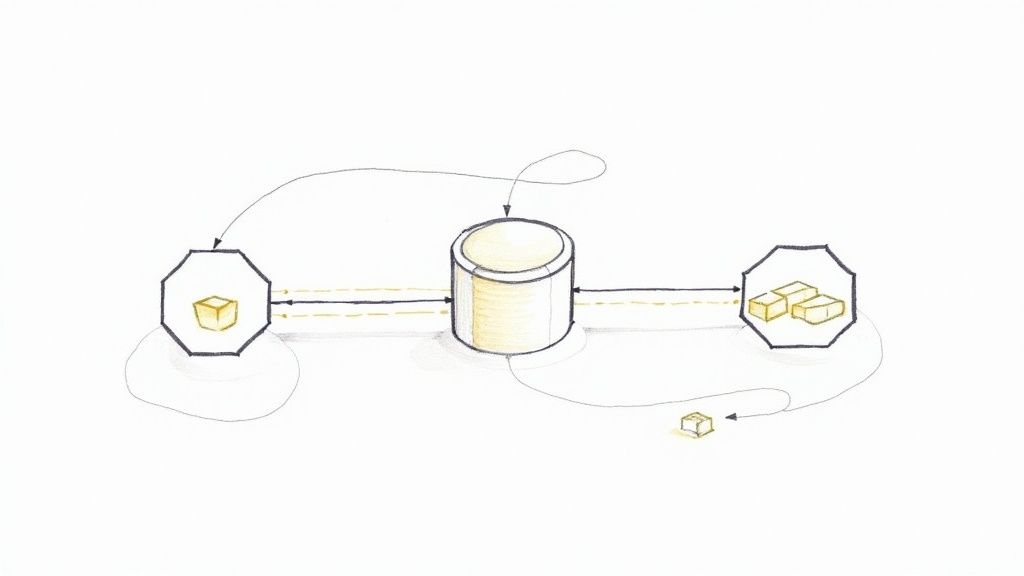

The gold standard for database recovery is Point-in-Time Recovery (PITR). Instead of being limited to restoring a nightly snapshot, PITR allows you to restore the database to a precise moment, for instance just before a data corruption event. This is achieved by combining periodic full (base) backups with a continuous archive of Write-Ahead Logs (WAL).

An operator like CloudNativePG can manage this entire workflow. You specify a destination for the backups—typically an object storage service like Amazon S3, GCS, or Azure Blob Storage—and the operator handles the rest. It schedules base backups and continuously archives every WAL segment to the object store as it is generated.

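Here is a sample configuration. The object store and retention policy are set in the backup section of the Cluster spec; in CloudNativePG, the schedule lives in a separate ScheduledBackup resource that references the cluster:

```yaml
# 1) In your Cluster spec section
backup:
  barmanObjectStore:
    destinationPath: "s3://your-backup-bucket/production-db/"
    endpointURL: "https://s3.us-east-1.amazonaws.com"
    # Credentials should be managed via a Kubernetes Secret
    s3Credentials:
      accessKeyId:
        name: aws-creds
        key: ACCESS_KEY_ID
      secretAccessKey:
        name: aws-creds
        key: SECRET_ACCESS_KEY
  retentionPolicy: "30d"
---
# 2) A separate ScheduledBackup resource that triggers the base backups
apiVersion: postgresql.cnpg.io/v1
kind: ScheduledBackup
metadata:
  name: postgres-production-db-daily
spec:
  schedule: "0 0 4 * * *" # six-field cron, seconds first: daily at 04:00
  cluster:
    name: postgres-production-db
```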

This configuration instructs the operator to:

- Execute a full base backup daily at 4:00 AM UTC.

- Continuously archive WAL files to the specified S3 bucket.

- Enforce a retention policy, pruning backups and associated WAL files older than 30 days.
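The retention rule can be illustrated in Python. The sketch below assumes recovery-window semantics, as used by Barman (the tool behind CloudNativePG's barmanObjectStore backups): to be able to restore to any moment in the last 30 days, you must keep every backup taken inside the window plus the newest one taken before it, and WAL written before the oldest kept backup started can be pruned. It approximates the policy rather than reproducing the tool's implementation, and the function names are hypothetical.

```python
from datetime import datetime, timedelta


def backups_to_keep(started: list[datetime], now: datetime,
                    window: timedelta) -> list[datetime]:
    """Recovery-window retention over backup start times.

    Keeps every backup inside the window plus the newest one before it,
    which is the base needed to recover to the window's earliest moments.
    """
    window_start = now - window
    ordered = sorted(started)
    older = [t for t in ordered if t < window_start]
    inside = [t for t in ordered if t >= window_start]
    return older[-1:] + inside


def wal_prune_before(kept: list[datetime]) -> datetime:
    """WAL written before the oldest kept backup started is no longer needed."""
    return min(kept)


# Daily backups at 04:00 from 1 May to 30 June 2024, evaluated at noon on 30 June.
daily = [datetime(2024, 5, 1, 4) + timedelta(days=d) for d in range(61)]
kept = backups_to_keep(daily, now=datetime(2024, 6, 30, 12), window=timedelta(days=30))
print(len(kept), kept[0], wal_prune_before(kept))
```

The extra backup from just before the window is the subtle part: without it, a restore to a moment early in the window would have no base backup to start from.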

Restoration is equally declarative. You create a new Cluster resource, reference the backup repository, and specify the target recovery timestamp. The operator then automates the entire recovery process: fetching the appropriate base backup and replaying WAL files to bring the new cluster to the desired state.
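Choosing which base backup to restore is the key step in that process. A minimal Python sketch, assuming each backup records when it started and finished: the newest backup that finished at or before the recovery target is used, and WAL is then replayed from that backup's start up to the target. A backup that finished after the target cannot be used, because it only becomes consistent once WAL up to its end has been replayed. `BaseBackup`, `choose_base_backup`, and `recovery_plan` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class BaseBackup:
    backup_id: str
    started: datetime
    finished: datetime


def choose_base_backup(backups: list[BaseBackup],
                       target: datetime) -> Optional[BaseBackup]:
    """Newest base backup that finished at or before the recovery target."""
    usable = [b for b in backups if b.finished <= target]
    return max(usable, key=lambda b: b.finished, default=None)


def recovery_plan(backups: list[BaseBackup], target: datetime) -> str:
    """Describe the restore: base backup, then WAL replay up to the target."""
    base = choose_base_backup(backups, target)
    if base is None:
        return "no usable base backup: target predates every completed backup"
    return (f"restore {base.backup_id}, then replay WAL from "
            f"{base.started:%Y-%m-%d %H:%M} until {target:%Y-%m-%d %H:%M}")


backups = [
    BaseBackup("backup-0601", datetime(2024, 6, 1, 4, 0), datetime(2024, 6, 1, 4, 20)),
    BaseBackup("backup-0602", datetime(2024, 6, 2, 4, 0), datetime(2024, 6, 2, 4, 25)),
]
# Hypothetical incident: a bad DELETE ran at 09:15 on 2 June; recover to 09:14.
print(recovery_plan(backups, datetime(2024, 6, 2, 9, 14)))
```

A target of 04:10 on 2 June would fall back to the 1 June backup, since the 2 June backup had not yet finished at that moment.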

Fine-Tuning Performance for Kubernetes

Tuning PostgreSQL on Kubernetes requires a declarative mindset. Direct modification of postgresql.conf via exec is an anti-pattern. Instead, all configuration changes should be managed through the operator's CRD. This ensures settings are version-controlled and consistently applied across all cluster instances, eliminating configuration drift.

Key parameters like shared_buffers (memory for data caching) and work_mem (memory for sorting and hashing operations) can be set directly in the Cluster manifest's postgresql.parameters section.
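Because work_mem is allocated per sort or hash operation rather than per connection, it is worth doing the arithmetic before raising it. The Python sketch below computes a pessimistic upper bound under stated assumptions: every connection runs a query with two sort or hash nodes at once, memory values use only MB or GB suffixes, and hash_mem_multiplier is ignored. It also applies the PostgreSQL documentation's common starting point of about 25% of available memory for shared_buffers. The 2Gi container limit is hypothetical; 4MB is PostgreSQL's default work_mem.

```python
def to_mb(value: str) -> int:
    """Parse a PostgreSQL memory setting with an MB or GB suffix into MB."""
    if value.endswith("GB"):
        return int(value[:-2]) * 1024
    if value.endswith("MB"):
        return int(value[:-2])
    raise ValueError(f"unsupported memory value: {value!r}")


def starting_shared_buffers_mb(memory_limit_mb: int) -> int:
    """Common starting point: about 25% of the memory available to PostgreSQL."""
    return memory_limit_mb // 4


def worst_case_memory_mb(shared_buffers: str, work_mem: str,
                         max_connections: int, sort_hash_nodes: int = 2) -> int:
    """Pessimistic bound: shared_buffers plus work_mem for every sort/hash
    node of every connection at the same time (hash_mem_multiplier ignored)."""
    return to_mb(shared_buffers) + max_connections * sort_hash_nodes * to_mb(work_mem)


limit_mb = 2048  # hypothetical 2Gi container memory limit
print("shared_buffers starting point:", starting_shared_buffers_mb(limit_mb), "MB")
bound = worst_case_memory_mb("512MB", "4MB", max_connections=200)
print("worst case:", bound, "MB", "(over limit)" if bound > limit_mb else "(fits)")
```

With the manifest's values (512MB shared_buffers, 200 connections) and the default 4MB work_mem, the bound is 2112 MB, already above a 2Gi limit. Real usage is usually far lower, but the arithmetic shows why raising work_mem and max_connections together is risky, and why fewer server connections helps.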

However, the single most impactful performance optimization is connection pooling. PostgreSQL's process-per-connection model is resource-intensive. In a microservices architecture with potentially hundreds of ephemeral connections, this can lead to resource exhaustion and performance degradation.

Tools like PgBouncer are essential. A connection pooler acts as a lightweight intermediary between applications and the database. Applications connect to PgBouncer, which maintains a smaller, managed pool of persistent connections to PostgreSQL. This dramatically reduces connection management overhead, allowing the database to support a much higher number of clients efficiently. Most operators include built-in support for deploying a PgBouncer pool alongside your cluster.
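The pooling idea can be demonstrated with a small, self-contained Python model. It is not PgBouncer: `ServerConnection` is a hypothetical stand-in for an expensive backend process, and `TransactionPool` lends one to a client for the duration of a transaction and then takes it back, which is roughly what PgBouncer's transaction pooling mode does. Fifty concurrent clients end up sharing five backends.

```python
import threading
from contextlib import contextmanager
from queue import Queue


class ServerConnection:
    """Stand-in for a PostgreSQL backend process (expensive to create)."""
    opened = 0
    _counter_lock = threading.Lock()

    def __init__(self) -> None:
        with ServerConnection._counter_lock:
            ServerConnection.opened += 1
            self.conn_id = ServerConnection.opened

    def execute(self, sql: str) -> str:
        return f"conn-{self.conn_id}: {sql}"


class TransactionPool:
    """Lend a server connection per transaction, then return it to the pool."""

    def __init__(self, size: int) -> None:
        self._idle = Queue()  # idle ServerConnection objects
        for _ in range(size):
            self._idle.put(ServerConnection())

    @contextmanager
    def transaction(self):
        conn = self._idle.get()  # blocks while every backend is busy
        try:
            yield conn
        finally:
            self._idle.put(conn)  # available to the next waiting client


def run_clients(pool: TransactionPool, clients: int) -> list[str]:
    """Run `clients` concurrent threads, each executing one transaction."""
    results: list[str] = []
    results_lock = threading.Lock()

    def client(n: int) -> None:
        with pool.transaction() as conn:
            row = conn.execute(f"SELECT {n}")
        with results_lock:
            results.append(row)

    threads = [threading.Thread(target=client, args=(n,)) for n in range(clients)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


pool = TransactionPool(size=5)
results = run_clients(pool, clients=50)
print(len(results), "transactions over", ServerConnection.opened, "server connections")
```

The design choice to lend connections per transaction (rather than per client session) is what lets the number of backends stay fixed while the number of clients grows; the trade-off is that session-level state such as temporary tables cannot be relied on across transactions.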

The drive to optimize PostgreSQL is fueled by its expanding role in modern applications. Its dominance is supported by features critical for today's workloads: vector similarity search for AI/ML (via pgvector), JSONB for semi-structured data, time-series (via TimescaleDB), and geospatial data (via PostGIS). These capabilities make it a cornerstone for analytics and AI, with some organizations reporting a 50% reduction in database TCO after migrating from NoSQL to open-source PostgreSQL. You can read more about PostgreSQL's growing market share at percona.com.

By combining a robust PITR strategy with systematic performance tuning and connection pooling, you can build a PostgreSQL foundation on Kubernetes that is both resilient and highly scalable.

Knowing When to Seek Expert Support

Running PostgreSQL on Kubernetes effectively requires deep expertise across two complex domains. While many teams can achieve an initial deployment, the real challenges emerge during day-two operations.

Engaging external specialists is not a sign of failure but a strategic decision to protect your team's most valuable resource: their time and focus on core product development.

Key indicators that you may need expert support include engineers being consistently diverted from feature development to troubleshoot database performance issues, or an inability to implement a truly resilient and testable high-availability and disaster recovery strategy. These are symptoms of accumulating operational risk.

The operational burden of managing a production database on Kubernetes can become a silent tax on innovation. When your platform team spends more time tuning work_mem than shipping features that help developers, you're bleeding momentum.

Bringing in specialists provides a force multiplier. They offer deep, battle-tested expertise to solve specific, high-stakes problems efficiently, ensuring your infrastructure is stable, secure, and scalable without derailing your product roadmap. For a clearer understanding of this model, see our overview of Kubernetes consulting services.

Frequently Asked Questions

When architecting for PostgreSQL on Kubernetes, several critical questions consistently arise. Addressing these is key to a successful implementation.

Let's tackle the most common technical inquiries from engineers integrating these two powerful technologies.

Is It Really Safe to Run a Stateful Database Like PostgreSQL on Kubernetes?

Yes, provided a robust architecture is implemented. Early concerns about running stateful services on Kubernetes are largely outdated. Modern Kubernetes primitives like StatefulSets and PersistentVolumes, when combined with a mature PostgreSQL Operator, provide the necessary stability and data persistence for production databases.

The key is automation. A production-grade operator is engineered to handle failure scenarios gracefully. Its ability to automate failover and prevent data loss makes the resulting system as safe as—or arguably safer than—many traditionally managed database environments that rely on manual intervention.

Can I Expect the Same Performance as a Bare-Metal Setup?

You can achieve near-native performance, and for most applications, the operational benefits far outweigh any minor overhead. While there is a slight performance cost from containerization and network virtualization layers, modern container runtimes and high-performance CNI plugins like Calico make this impact negligible for most workloads.

In practice, performance bottlenecks are rarely attributable to Kubernetes itself. The more common culprits are a misconfigured StorageClass using slow disk tiers or, most frequently, the absence of a connection pooler. By provisioning high-IOPS block storage and implementing a tool like PgBouncer, your database can handle intensive production loads effectively.

The most significant performance gain is not in raw IOPS but in operational velocity. The ability to declaratively provision, scale, and manage database environments provides a strategic advantage that dwarfs the minor performance overhead for the vast majority of applications.

What's the Single Biggest Mistake Teams Make?

The most common and costly mistake is underestimating day-two operations. Deploying a basic PostgreSQL instance is straightforward. The true complexity lies in managing backups, implementing disaster recovery, executing zero-downtime upgrades, and performance tuning under load.

Many teams adopt a DIY approach with manual StatefulSets, only to discover they have inadvertently committed to building and maintaining a complex distributed system from scratch. A battle-tested Kubernetes Operator abstracts away most of this operational complexity, allowing your team to focus on application logic instead of reinventing the database-as-a-service wheel.

Let's be real: managing PostgreSQL on Kubernetes requires deep expertise in both systems. If your team is stuck chasing performance ghosts or can't nail down a reliable HA strategy, it might be time to bring in an expert. OpsMoon connects you with the top 0.7% of DevOps engineers who can stabilize and scale your database infrastructure, turning it into a rock-solid foundation for your business. Start with a free work planning session to map out your path to a production-grade setup.
