To properly validate Infrastructure as Code (IaC), you must implement a multi-layered strategy that extends far beyond basic syntax checks. A robust validation process integrates static analysis, security scanning, and policy enforcement directly into the development and deployment lifecycle. The primary objective is to systematically detect and remediate misconfigurations, security vulnerabilities, and compliance violations before they reach a production environment.

Why Modern DevOps Demands Rigorous IaC Validation

In modern cloud-native environments, the declarative definition of infrastructure through IaC is standard practice. However, for DevOps and platform engineers, the critical task is ensuring that this code is secure, compliant, and cost-efficient. Deploying unvalidated IaC introduces significant risk, potentially creating security vulnerabilities, causing uncontrolled cloud expenditure, or resulting in severe compliance breaches.

This guide provides a technical, multi-layered framework for validating IaC. We will cover local validation techniques like static analysis and linting, progress to automated security and policy-as-code checks, and integrate these stages into a CI/CD pipeline for early detection. This framework is engineered to accelerate infrastructure delivery while enhancing security and reliability.

The Shift From Manual Checks to Automated Guardrails

The complexity of modern cloud infrastructure renders manual reviews insufficient and prone to error. A single misconfigured security group or an over-privileged IAM role can expose an entire organization to significant risk. Automated validation acts as a set of programmatic guardrails, ensuring every infrastructure change adheres to predefined technical and security standards.

This approach codifies an organization's operational best practices and security policies directly into the development workflow, shifting from a reactive to a proactive security posture. For a deeper analysis of foundational principles, refer to our guide on Infrastructure as Code best practices.

The core principle is to subject infrastructure code to the same rigorous validation pipeline as application code. This includes linting, static analysis, security scanning, and automated testing at every stage of its lifecycle.

Understanding the Core Components of IaC Validation

A robust IaC validation strategy is composed of several distinct, complementary layers, each serving a specific technical function:

- Static Analysis & Linting: This is the first validation gate, performed locally or in early CI stages. It identifies syntactical errors, formatting deviations, and the use of deprecated or non-optimal resource attributes before a commit.

- Security & Compliance Scanning: This layer scans IaC definitions for known vulnerabilities and configuration weaknesses. It audits the code against established security benchmarks (e.g., CIS) and internal security policies.

- Policy as Code (PaC): This layer enforces organization-specific governance rules. Examples include mandating specific resource tags, restricting deployments to approved geographic regions, or prohibiting the use of certain instance types.

- Dry Runs & Plans: This is the final pre-execution validation step. It simulates the changes that will be applied to the target environment, generating a detailed execution plan for review without modifying live infrastructure.

This screenshot from Terraform's homepage illustrates the standard write, plan, and apply workflow.

The plan stage is a critical validation step, providing a deterministic preview of the mutations Terraform intends to perform on the infrastructure state.

Implement Static Analysis for Early Feedback

The most efficient validation occurs before code is ever committed to a repository. Static analysis provides an immediate, local feedback loop by inspecting code for defects without executing it. This practice is a core tenet of the shift-left testing philosophy, which advocates for moving validation as early as possible in the development lifecycle to minimize the cost and complexity of remediation. By integrating these checks into the local development environment, you drastically reduce the likelihood of introducing trivial errors into the CI/CD pipeline. For a comprehensive overview of this approach, read our article on what is shift-left testing.

Starting with Built-in Validation Commands

Most IaC frameworks include native commands for basic validation. These should be integrated into your workflow as a pre-commit hook or executed manually before every commit.

For engineers using Terraform, the terraform validate command is the foundational check. It performs several key verifications:

- Syntax Validation: Confirms that the HCL (HashiCorp Configuration Language) is syntactically correct and parsable.

- Schema Conformance: Checks that resource blocks, data sources, and module calls conform to the expected schema.

- Reference Integrity: Verifies that all references to variables, locals, and resource attributes are valid within their scope.

A successful validation produces a concise success message.

```shell
$ terraform validate

Success! The configuration is valid.
```

It is critical to understand the limitations of terraform validate. It needs an initialized working directory (terraform init, optionally with -backend=false) so that provider schemas are available, but it does not communicate with cloud provider APIs. It therefore cannot detect invalid argument values (e.g., non-existent instance types) or logical errors. Its sole purpose is to confirm syntactic and structural correctness.
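In CI, the machine-readable form of this check is often easier to act on: terraform validate -json prints a JSON object with a valid flag, error and warning counts, and a diagnostics array. The Python sketch below assumes that output shape and condenses each diagnostic into one line; the function name summarize_validate is hypothetical and not part of any Terraform tooling.

```python
import json


def summarize_validate(raw: str) -> tuple[bool, list[str]]:
    """Parse `terraform validate -json` output into (valid, messages).

    Each diagnostic becomes "severity: summary (file:line)" when a source
    range is present, or "severity: summary" otherwise.
    """
    report = json.loads(raw)
    messages = []
    for diag in report.get("diagnostics", []):
        line = f"{diag['severity']}: {diag['summary']}"
        rng = diag.get("range")
        if rng:
            line += f" ({rng['filename']}:{rng['start']['line']})"
        messages.append(line)
    return bool(report.get("valid")), messages
```

In a pipeline you would capture the command's stdout (for example with subprocess.run) and fail the job when the returned flag is false, printing the messages as the job log.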

For Pulumi users, the closest equivalent is pulumi preview --diff, although it behaves more like terraform plan than terraform validate. This command communicates with the cloud provider to generate a detailed plan, and the --diff flag provides a property-level, color-coded output highlighting the exact changes to be applied. It is an essential step for identifying logical errors and understanding the real-world impact of code modifications from the command line.

Leveling Up with Linters

To move beyond basic syntax, you must employ dedicated linters. These tools analyze code against an extensible ruleset of best practices, common misconfigurations, and potential bugs, providing a deeper level of static analysis.

Two prominent open-source linters are TFLint for Terraform and cfn-lint for AWS CloudFormation.

Using TFLint for Terraform

TFLint is specifically engineered to detect issues that terraform validate overlooks. It inspects provider-specific attributes, such as flagging incorrect instance types for an AWS EC2 resource or warning about the use of deprecated arguments.

To use it, first initialize TFLint for your project, which downloads the ruleset plugins declared in your .tflint.hcl configuration (such as the AWS ruleset), and then run the analysis.

```shell
# Initialize TFLint to download provider-specific rulesets
$ tflint --init

# Run the linter against the current directory
$ tflint
```

Example output for an instance type that does not exist:

```text
Error: "t1.2xlarge" is an invalid value as instance_type (aws_instance_invalid_type)

  on main.tf line 18:
  18:   instance_type = "t1.2xlarge"

Reference: https://github.com/terraform-linters/tflint-ruleset-aws/blob/v0.22.0/docs/rules/aws_instance_invalid_type.md
```

This type of immediate, actionable feedback is invaluable. For an optimal developer experience, integrate TFLint into your IDE using a plugin to get real-time analysis as you write code.
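For CI use, TFLint can also emit JSON (tflint --format json), which makes it easy to apply your own severity threshold. The sketch below assumes the report's issues array, where each issue carries a rule (with name and severity), a message, and a source range; the helper tflint_findings and its severity ranking are illustrative choices, not part of TFLint.

```python
import json

# Ranking used to compare TFLint severities; "notice" is the lowest level.
SEVERITY_RANK = {"notice": 0, "warning": 1, "error": 2}


def tflint_findings(raw: str, minimum: str = "warning") -> list[str]:
    """Return formatted TFLint issues at or above `minimum` severity.

    Expects the document produced by `tflint --format json`.
    """
    threshold = SEVERITY_RANK[minimum]
    report = json.loads(raw)
    findings = []
    for issue in report.get("issues", []):
        severity = issue["rule"]["severity"].lower()
        if SEVERITY_RANK.get(severity, 0) < threshold:
            continue  # below the configured threshold, keep it advisory
        start = issue["range"]["start"]
        findings.append(
            f"{issue['range']['filename']}:{start['line']} "
            f"[{severity}] {issue['rule']['name']}: {issue['message']}"
        )
    return findings
```

A CI step could fail whenever tflint_findings(output, "error") is non-empty while still printing warnings for reviewers.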

Running cfn-lint for CloudFormation

For teams standardized on AWS CloudFormation, cfn-lint is the official and essential linter. It validates templates against the official CloudFormation resource specification, detecting invalid property values, incorrect resource types, and other common errors.

Execution is straightforward:

```shell
$ cfn-lint my-template.yaml
```

Pro Tip: Commit a shared linter configuration file (e.g., `.tflint.hcl` or `.cfnlintrc`) to your version control repository. This ensures that all developers and CI/CD jobs operate with a consistent, versioned ruleset, enforcing a uniform quality standard across the engineering team.

By mandating static analysis as part of the local development loop, you establish a solid foundation of code quality, catching simple errors instantly and freeing up CI/CD resources for more complex security and policy validation.

Automate Security and Compliance with Policy as Code

While static analysis addresses code quality, the next critical validation layer is enforcing security and compliance requirements. This is accomplished through Policy as Code (PaC), a practice that transforms security policies from static documents into executable code that is evaluated alongside your IaC definitions.

Instead of relying on manual pull request reviews to detect an unencrypted S3 bucket or an IAM role with excessive permissions, PaC tools function as automated security gatekeepers. They scan your Terraform, CloudFormation, or other IaC files against extensive libraries of security best practices, flagging misconfigurations before they are deployed. For a broader perspective on cloud security, review these essential cloud computing security best practices.

A Look at the Top IaC Security Scanners

The open-source ecosystem provides several powerful tools for IaC security scanning. Three of the most widely adopted are Checkov, tfsec, and Terrascan. Each has a distinct focus and set of capabilities.

Comparison of IaC Security Scanning Tools

| Tool | Primary Focus | Supported IaC | Custom Policies |
|---|---|---|---|
| Checkov | Broad security & compliance coverage | Terraform, CloudFormation, Kubernetes, Dockerfiles, etc. | Python, YAML |
| tfsec | High-speed, developer-centric Terraform security scanning | Terraform | YAML, JSON, Rego |
| Terrascan | Extensible security scanning with Rego policies | Terraform, CloudFormation, Kubernetes, Dockerfiles, etc. | Rego (OPA) |

Checkov is an excellent starting point for most teams due to its extensive rule library and broad support for numerous IaC frameworks, making it ideal for heterogeneous environments.

Installation and execution are straightforward using pip:

```shell
# Install Checkov
pip install checkov

# Scan a directory containing IaC files
checkov -d .
```

The tool scans all supported file types and generates a detailed report. For each failed check, it lists the check ID, the file path, and the affected line range, together with remediation guidance and links to relevant documentation. This precision eliminates ambiguity and accelerates remediation.
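The same information is available in machine-readable form via checkov -d . -o json. One detail worth handling: Checkov returns a single report object when only one framework is scanned and a list of reports when several are. The following sketch, a hypothetical helper that assumes the results.failed_checks layout of that report (with check_id, resource, file_path, and file_line_range fields), normalizes both shapes into a flat list.

```python
import json


def checkov_failures(raw: str) -> list[dict]:
    """Flatten failed checks from `checkov -o json` output.

    Handles both the single-report object and the list-of-reports shape.
    Reports without a "results" key (e.g. summary-only output) are skipped.
    """
    data = json.loads(raw)
    reports = data if isinstance(data, list) else [data]
    failures = []
    for report in reports:
        for check in report.get("results", {}).get("failed_checks", []):
            start, end = check["file_line_range"]
            failures.append({
                "framework": report.get("check_type"),
                "id": check["check_id"],
                "resource": check["resource"],
                "location": f"{check['file_path']}:{start}-{end}",
            })
    return failures
```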

Implementing Custom Policies with OPA and Conftest

While out-of-the-box security rules cover common vulnerabilities, organizations require enforcement of specific internal governance policies. These might include mandating a particular resource tagging schema, restricting deployments to certain geographic regions, or limiting the allowable sizes of virtual machines.

This is the ideal use case for Open Policy Agent (OPA) and its companion tool, Conftest.

OPA is a general-purpose policy engine that uses a declarative language called Rego to define policies. Conftest allows you to apply these Rego policies to structured data files, including IaC. This combination provides granular control to codify any custom rule. For more on integrating security into your development lifecycle, refer to our guide on DevOps security best practices.

Consider a technical example: enforcing a mandatory CostCenter tag on all AWS S3 buckets. This rule can be expressed in a Rego file:

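```rego
package main

# Uses Rego v1 syntax (OPA 0.59+ and recent Conftest releases).
import rego.v1

# Deny any S3 bucket in the plan that lacks a CostCenter tag.
# Input is the JSON produced by `terraform show -json`, where planned
# resources are listed in the top-level resource_changes array.
deny contains msg if {
	some rc in input.resource_changes
	rc.type == "aws_s3_bucket"
	rc.change.after != null # skip buckets that are being destroyed
	not rc.change.after.tags.CostCenter
	msg := sprintf("S3 bucket '%s' is missing the required 'CostCenter' tag", [rc.address])
}
```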

Save this code as policy/tags.rego. To validate a Terraform plan against this policy, you first convert the plan to a JSON representation and then execute Conftest.

```shell
# Generate a binary plan file
terraform plan -out=plan.binary

# Convert the binary plan to JSON
terraform show -json plan.binary > plan.json

# Test the JSON plan against the Rego policy
conftest test plan.json
```

If any S3 bucket in the plan violates the policy, Conftest will exit with a non-zero status code and output the custom error message, effectively blocking a non-compliant change. This powerful combination enables the creation of a fully customized validation pipeline that enforces business-critical governance rules.
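To make the policy's input concrete, here is the same tagging rule written as plain Python over the output of terraform show -json. It is a sketch, not a replacement for Conftest: it assumes the plan's top-level resource_changes array, in which each entry has an address, a type, and a change.after object (null when the resource is being destroyed), and the function name missing_cost_center is hypothetical. A helper like this can be handy for inspecting fixture plans while writing or debugging Rego.

```python
import json


def missing_cost_center(plan_json: str) -> list[str]:
    """Return addresses of planned S3 buckets without a CostCenter tag.

    Reads the document produced by `terraform show -json plan.binary`.
    Buckets being destroyed (change.after is null) are ignored.
    """
    plan = json.loads(plan_json)
    violations = []
    for rc in plan.get("resource_changes", []):
        if rc.get("type") != "aws_s3_bucket":
            continue
        after = rc["change"].get("after")
        if after is None:
            continue  # resource is being deleted, nothing to tag
        tags = after.get("tags") or {}  # tags may be null in the plan
        if "CostCenter" not in tags:
            violations.append(rc["address"])
    return violations
```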

Build a Bulletproof IaC Validation Pipeline

Integrating static analysis and policy scanning into an automated CI/CD pipeline is the key to creating a systematic and reliable validation process. This transforms disparate checks into a cohesive quality gate that vets every infrastructure change before it reaches production. The objective is to provide developers with fast, context-aware feedback directly within their version control system, typically within a pull request. This approach enforces security and compliance programmatically, shifting the responsibility from individual reviewers to an automated system.

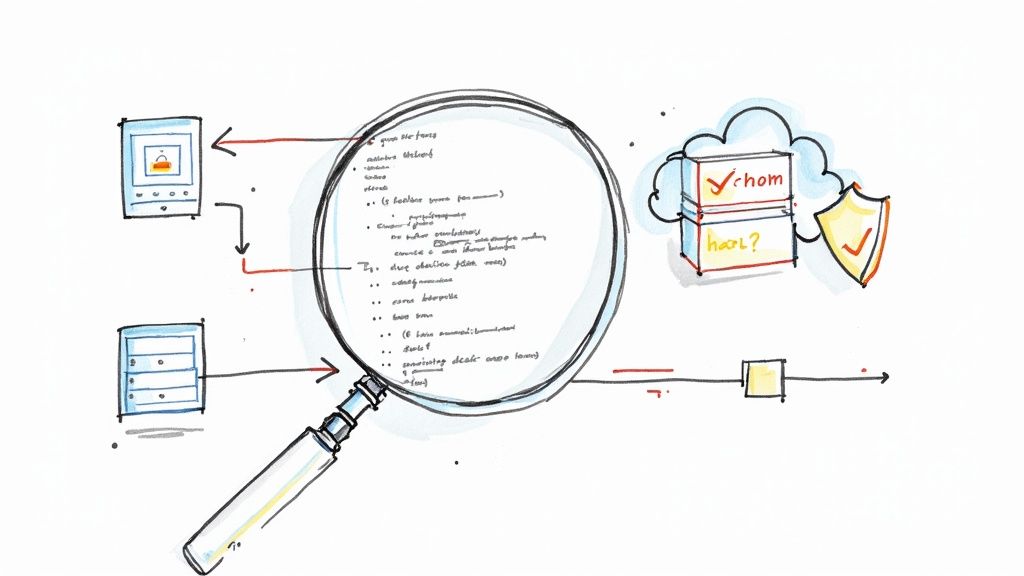

This diagram illustrates the core stages of an automated IaC validation pipeline, from code commit to policy enforcement.

This workflow exemplifies the "shift-left" principle by embedding validation directly into the development lifecycle, ensuring immediate feedback and fostering a culture of continuous improvement.

Structuring a Multi-Stage IaC Pipeline

A well-architected IaC pipeline uses a multi-stage approach to fail fast, conserving CI resources by catching simple errors before executing more time-consuming scans. Adhering to robust CI/CD pipeline best practices is crucial for building an effective and maintainable workflow.

A highly effective three-stage structure is as follows:

- Lint & Validate: This initial stage is lightweight and fast. It executes commands like `terraform validate` and linters such as TFLint. Its purpose is to provide immediate feedback on syntactical and formatting errors within seconds.

- Security Scan: Upon successful validation, the pipeline proceeds to deeper analysis. This stage executes security and policy-as-code tools like Checkov, tfsec, or a custom Conftest suite to identify security vulnerabilities, misconfigurations, and policy violations.

- Plan Review: With syntax and security validated, the final stage generates an execution plan using `terraform plan`. This step confirms that the code is logically sound and can be successfully translated into a series of infrastructure changes, serving as the final automated sanity check.

This layered approach improves efficiency and simplifies debugging by isolating the source of failures.
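The fail-fast ordering can be sketched as a small Python runner, for example as a local pre-push script that mirrors the CI stages. The stage names and commands in STAGES are illustrative assumptions (they presume terraform, tflint, and checkov are on the PATH and that the script runs in an initialized Terraform directory); the runner stops at the first non-zero exit code and reports which stage failed.

```python
import subprocess
from typing import Optional, Sequence, Tuple

# A stage is a name plus the commands (argv lists) it runs, in order.
Stage = Tuple[str, Sequence[Sequence[str]]]

# Illustrative stages mirroring the pipeline above; adjust to your tooling.
STAGES = [
    ("lint-validate", [
        ["terraform", "fmt", "-check", "-recursive"],
        ["terraform", "validate"],
        ["tflint"],
    ]),
    ("security-scan", [["checkov", "-d", "."]]),
    ("plan", [["terraform", "plan", "-input=false"]]),
]


def run_stages(stages: Sequence[Stage]) -> Optional[str]:
    """Run each stage's commands in order, stopping at the first failure.

    Returns the name of the stage whose command exited non-zero,
    or None when every command in every stage succeeded.
    """
    for name, commands in stages:
        for argv in commands:
            result = subprocess.run(list(argv))
            if result.returncode != 0:
                return name  # fail fast: later stages are skipped
    return None
```

Calling run_stages(STAGES) returns None when everything passes, or the name of the first failing stage, so the slower scans never run after a cheap check has already failed.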

Implementing the Pipeline in GitHub Actions

GitHub Actions is an ideal platform for implementing these workflows due to its tight integration with source control. A workflow can be configured to trigger on every pull request, execute the validation stages, and surface the results directly within the PR interface.

The following is a complete example for a Terraform project. Save this YAML configuration as .github/workflows/iac-validation.yml in your repository.

```yaml
name: IaC Validation Pipeline

on:
  pull_request:
    branches:
      - main
    paths:
      - 'terraform/**'

jobs:
  validate:
    name: Lint and Validate
    runs-on: ubuntu-latest
    steps:
      - name: Checkout Code
        uses: actions/checkout@v4

      - name: Setup Terraform
        uses: hashicorp/setup-terraform@v3
        with:
          terraform_version: 1.5.0

      - name: Terraform Format Check
        run: terraform fmt -check -recursive
        working-directory: ./terraform

      - name: Terraform Init
        run: terraform init -backend=false
        working-directory: ./terraform

      - name: Terraform Validate
        run: terraform validate
        working-directory: ./terraform

  security:
    name: Security Scan
    runs-on: ubuntu-latest
    needs: validate # Depends on the validate job
    permissions:
      contents: read
      actions: read # Needed by upload-sarif in private repositories
      security-events: write # Required to upload SARIF results
    steps:
      - name: Checkout Code
        uses: actions/checkout@v4

      - name: Run Checkov Scan
        uses: bridgecrewio/checkov-action@v12
        with:
          directory: ./terraform
          soft_fail: true # Log issues but don't fail the build
          output_format: cli,sarif
          output_file_path: "console,results.sarif"

      - name: Upload SARIF file
        if: always() # Upload results even if the scan step failed
        uses: github/codeql-action/upload-sarif@v3
        with:
          sarif_file: results.sarif

  plan:
    name: Terraform Plan
    runs-on: ubuntu-latest
    needs: security # Depends on the security job
    steps:
      - name: Checkout Code
        uses: actions/checkout@v4

      - name: Setup Terraform
        uses: hashicorp/setup-terraform@v3
        with:
          terraform_version: 1.5.0

      - name: Configure AWS Credentials
        uses: aws-actions/configure-aws-credentials@v4
        with:
          aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          aws-region: us-east-1

      - name: Terraform Init
        run: terraform init
        working-directory: ./terraform

      - name: Terraform Plan
        run: terraform plan -no-color
        working-directory: ./terraform
```

Key Takeaway: The `soft_fail: true` parameter for the Checkov action is a critical strategic choice during initial implementation. It allows security findings to be reported without blocking the pipeline, enabling a gradual rollout of policy enforcement. Once the team has addressed the initial findings, set it to `false` to enforce a hard gate, or keep soft failure as the default and block only on selected checks using Checkov's `--hard-fail-on` option.
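Checkov's CLI provides --soft-fail-on and --hard-fail-on options for mixing advisory and blocking checks; consult its documentation for the exact matching rules. The underlying gating logic is simple enough to sketch in Python. The helper below is hypothetical: it takes the IDs of failed checks (for example, extracted from a Checkov JSON report) and returns a failing exit code only when one of them is on a team-maintained blocklist, whose placeholder IDs you would replace with real ones.

```python
from typing import Iterable

# Placeholder IDs: replace with the checks your team has agreed to enforce.
BLOCKING_CHECKS = frozenset({"CKV_CUSTOM_1", "CKV_CUSTOM_2"})


def gate(failed_check_ids: Iterable[str],
         blocking: frozenset = BLOCKING_CHECKS) -> tuple[int, list[str]]:
    """Decide the CI exit code for a set of failed checks.

    Returns (exit_code, blockers): exit code 1 if any failed check is in
    the blocking set, otherwise 0 so remaining findings stay advisory.
    """
    blockers = sorted(set(failed_check_ids) & blocking)
    return (1 if blockers else 0), blockers
```

Growing the blocklist one check at a time gives the gradual move from soft to hard failure described above.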

Actionable Feedback in Pull Requests

The final step is to deliver validation results directly to the developer within the pull request. The example workflow uses the github/codeql-action/upload-sarif action, which ingests the SARIF (Static Analysis Results Interchange Format) output from Checkov. GitHub parses this file into code scanning alerts, which appear in the repository's Security tab and as annotations directly on the affected lines of the pull request. Code scanning is available for public repositories; private repositories need GitHub Advanced Security (code scanning) enabled.

This creates a seamless, low-friction feedback loop. A developer receives immediate, contextual feedback within minutes of pushing a change, empowering them to remediate issues autonomously. This transforms the security validation process from a bottleneck into a collaborative and educational mechanism, continuously improving the security posture of the infrastructure codebase.

Detect and Remediate Configuration Drift

Infrastructure deployment is merely the initial state. Over time, discrepancies will emerge between the infrastructure's declared state (in code) and its actual state in the cloud environment. This phenomenon, known as configuration drift, is a persistent challenge to maintaining stable and secure infrastructure.

Drift is typically introduced through out-of-band changes, such as manual modifications made via the cloud console during an incident response or urgent security patching. While often necessary, these manual interventions break the single source of truth established by the IaC repository, introducing unknown variables and risk.

Identifying Drift with Your Native Tooling

The primary tool for drift detection is often the IaC tool itself. For Terraform users, the terraform plan command is a powerful drift detector. When executed against existing infrastructure, it refreshes the state by querying the cloud provider APIs, compares the real-world resources with the state file and the configuration, and reports any discrepancies. Running terraform plan -refresh-only limits the output to changes made outside of Terraform.

To automate this process, configure a scheduled CI/CD job to run terraform plan at regular intervals (e.g., daily or hourly for critical environments).

The command should use the -detailed-exitcode flag for programmatic evaluation:

```shell
terraform plan -detailed-exitcode -no-color
```

This flag provides distinct exit codes for CI/CD logic:

- 0: No changes detected; the live infrastructure matches the configuration.

- 1: An error occurred during execution.

- 2: Changes detected. When the configuration has already been applied, this indicates configuration drift.

The CI job can then use this exit code to trigger automated alerts via Slack, PagerDuty, or other notification systems, transforming drift detection from a manual audit to a proactive monitoring process.
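A scheduled drift job only needs to translate those exit codes into a status. The sketch below does that in Python; the function names are hypothetical, it assumes terraform is on the PATH and that the working directory has already been initialized, and the alerting step is left to whatever notifier your CI system provides.

```python
import subprocess

# Return codes documented for `terraform plan -detailed-exitcode`.
NO_CHANGES, ERROR, CHANGES_PRESENT = 0, 1, 2


def classify_plan_exit(code: int) -> str:
    """Translate a -detailed-exitcode result into a drift status."""
    if code == NO_CHANGES:
        return "in-sync"
    if code == CHANGES_PRESENT:
        return "drift"
    return "error"  # ERROR (1) or any unexpected code is a failure


def check_drift(workdir: str) -> str:
    """Run a non-interactive plan in an initialized directory and classify it."""
    result = subprocess.run(
        ["terraform", "plan", "-detailed-exitcode", "-no-color", "-input=false"],
        cwd=workdir,
        capture_output=True,
        text=True,
    )
    return classify_plan_exit(result.returncode)
```

A scheduled job could call check_drift("./terraform") and send an alert whenever it returns "drift" or "error".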

Advanced Drift Detection with Specialized Tools

Native tooling can only detect drift in resources it manages. It is blind to "unmanaged" resources created outside of its purview, such as shadow IT.

For comprehensive drift detection, use a specialized tool such as driftctl. It scans your entire cloud account, compares the findings against your IaC state, and categorizes resources into three buckets:

- Managed Resources: Resources present in both the cloud environment and the IaC state.

- Unmanaged Resources: Resources existing in the cloud but not defined in the IaC state.

- Deleted Resources: Resources defined in the IaC state but no longer present in the cloud.

Execution is straightforward:

```shell
driftctl scan --from tfstate://path/to/your/terraform.tfstate
```

The output provides a clear summary of all discrepancies, enabling you to identify and either import unmanaged resources into your code or decommission them.
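Conceptually, the three buckets are set differences over resource identifiers. The Python sketch below is a simplified model of that classification, not driftctl's implementation: it assumes you already have one collection of IDs enumerated from the cloud account and one read from the state, and that both sides use the same identifier for a given resource.

```python
from typing import Dict, Iterable, Set


def categorize(cloud_ids: Iterable[str], state_ids: Iterable[str]) -> Dict[str, Set[str]]:
    """Classify resource IDs into managed, unmanaged, and deleted buckets."""
    cloud, state = set(cloud_ids), set(state_ids)
    return {
        "managed": cloud & state,    # in the cloud and in the IaC state
        "unmanaged": cloud - state,  # in the cloud only (e.g. console-created)
        "deleted": state - cloud,    # in the state only (removed out of band)
    }
```

For example, categorize(["logs-bucket", "sg-123"], ["logs-bucket", "old-bucket"]) places "sg-123" in unmanaged and "old-bucket" in deleted.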

The core principle here is simple yet critical: the clock starts the moment you know about a problem. Once drift is detected, you own it. Ignoring it allows inconsistencies to compound, eroding the integrity of your entire infrastructure management process.

Strategies for Remediation

Detecting drift necessitates a clear remediation strategy, which will vary based on organizational maturity and risk tolerance.

There are two primary remediation models:

- Manual Review and Reconciliation: This is the safest approach, particularly during initial adoption. Upon drift detection, the pipeline can automatically open a pull request or create a ticket detailing the changes required to bring the code back into sync. A human engineer then reviews the proposed plan, investigates the root cause of the drift, and decides whether to revert the cloud change or update the IaC to codify it.

- Automated Rollback: For highly secure or regulated environments, the pipeline can be configured to automatically apply a plan that reverts any detected drift. This enforces a strict "code is the source of truth" policy, ensuring the live environment always reflects the repository. This approach requires an extremely high degree of confidence in the validation pipeline to prevent unintended service disruptions.

Effective drift management completes the IaC validation lifecycle, extending checks from pre-deployment to continuous operational monitoring. Pre-deployment validation alone cannot keep infrastructure consistent, predictable, and secure over its entire lifecycle; continuous drift detection closes that gap.

Frequently Asked IaC Checking Questions

Implementing a comprehensive IaC validation strategy inevitably raises technical questions. Addressing these common challenges proactively can significantly streamline adoption and improve outcomes for DevOps and platform engineering teams.

This section provides direct, technical answers to the most frequent queries encountered when building and scaling IaC validation workflows.

How Do I Start Checking IaC in a Large Legacy Codebase?

Scanning a large, mature IaC repository for the first time often yields an overwhelming number of findings. Attempting to fix all issues at once is impractical and demoralizing. The solution is a phased, incremental rollout.

Follow this technical strategy for a manageable adoption:

- Establish a Baseline in Audit Mode: Configure your scanning tool (e.g., Checkov or tfsec) to run in your CI pipeline with a "soft fail" or "audit-only" setting. This populates a dashboard or log with all current findings without blocking builds, providing a clear baseline of your technical debt.

- Enforce a Single, High-Impact Policy: Begin by enforcing one critical policy for all new or modified code only. Excellent starting points include policies that detect publicly accessible S3 buckets or IAM roles with `*:*` permissions. This demonstrates immediate value without requiring a large-scale refactoring effort.

- Manage Existing Findings as Tech Debt: Triage the baseline findings and create tickets in your project management system. Prioritize these tickets based on severity and address them incrementally over subsequent sprints.

This methodical approach prevents developer friction, provides immediate security value, and makes the process of improving a legacy codebase manageable.

Which Security Tool Is Best: Checkov, tfsec, or Terrascan?

There is no single "best" tool; the optimal choice depends on your specific technical requirements and ecosystem.

Each tool has distinct advantages:

- tfsec: A high-performance scanner dedicated exclusively to Terraform. Its speed makes it ideal for local pre-commit hooks and early-stage CI jobs where rapid feedback is critical.

- Checkov: A versatile, multi-framework scanner supporting Terraform, CloudFormation, Kubernetes, Dockerfiles, and more. Its extensive policy library and broad framework support make it an excellent choice for organizations with heterogeneous technology stacks.

- Terrascan: Another multi-framework tool notable for its ability to map findings to specific compliance frameworks (e.g., CIS, GDPR, PCI DSS). This is a significant advantage for organizations operating in regulated industries.

A common and effective strategy is to use Checkov for broad coverage in a primary CI/CD security stage and empower developers with tfsec locally for faster, iterative feedback.

For maximum control and customization, the most advanced solution is to leverage Open Policy Agent (OPA) with Conftest. This allows you to write custom policies in the Rego language, enabling you to enforce any conceivable organization-specific rule, from mandatory resource tagging schemas to constraints on specific VM SKUs.

Can I Write My Own Custom Policy Rules?

Yes, and you absolutely should. While the default rulesets provided by scanning tools cover universal security best practices, true governance requires codifying your organization's specific architectural standards, cost-control measures, and compliance requirements.

Most modern tools support custom policies. Checkov, for instance, allows custom checks to be written in both YAML and Python.

This capability elevates your validation from generic security scanning to automated architectural governance. By codifying your internal engineering standards, you ensure every deployment aligns with your organization's specific technical and business objectives, enforcing consistency and best practices at scale.
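Checkov's Python custom checks subclass BaseResourceCheck and implement scan_resource_conf, which receives the resource's parsed attributes and returns a CheckResult. The sketch below shows only the evaluation logic such a method might contain, written as a plain function so it runs without Checkov installed. It assumes that attribute values arrive wrapped in single-element lists (the shape Checkov's Terraform parser typically produces; verify against its custom-policy documentation), and the approved instance types are a hypothetical allowlist.

```python
# Hypothetical allowlist; replace with your organization's approved sizes.
APPROVED_INSTANCE_TYPES = {"t3.micro", "t3.small", "m6i.large"}


def instance_type_allowed(conf: dict) -> bool:
    """Return True if an aws_instance's parsed attributes use an approved type.

    Values are unwrapped from single-element lists, the shape assumed here
    for Checkov's parsed Terraform attributes; plain strings also work.
    """
    value = conf.get("instance_type")
    if isinstance(value, list):
        value = value[0] if value else None
    return isinstance(value, str) and value in APPROVED_INSTANCE_TYPES
```

Inside a real check, scan_resource_conf would return CheckResult.PASSED or CheckResult.FAILED based on this boolean.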

Managing a secure and compliant infrastructure requires real-world expertise and the right toolkit. At OpsMoon, we connect you with the top 0.7% of DevOps engineers who live and breathe this stuff. They can build and manage these robust validation pipelines for you. Start with a free work planning session to map out your IaC strategy.
