Pairing Kubernetes with Terraform delivers a powerful, declarative workflow for managing modern, cloud-native systems. The synergy is clear: Terraform excels at provisioning the foundational infrastructure—VPCs, subnets, and managed Kubernetes control planes—while Kubernetes orchestrates the containerized applications running on that infrastructure.

By combining them, you achieve a complete Infrastructure as Code (IaC) solution that covers every layer of your stack, from the cloud network to the running application pod.

The Strategic Power of Combining Terraform and Kubernetes

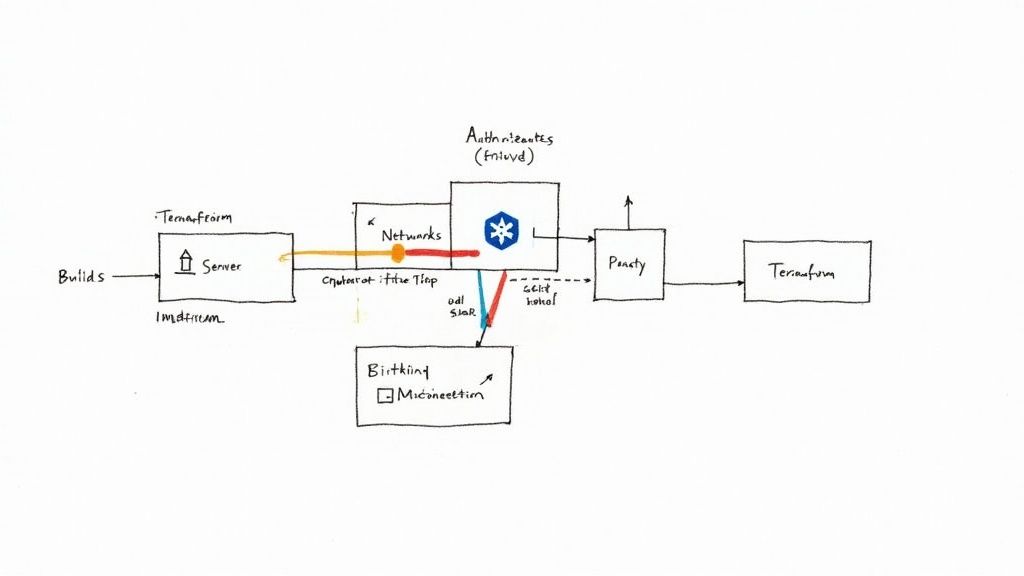

To grasp the technical synergy, consider the distinct roles in a modern cloud environment.

Terraform acts as the infrastructure provisioner. It interacts directly with cloud provider APIs (AWS, GCP, Azure) to build the static, underlying components. Its state file (terraform.tfstate) becomes the source of truth for your infrastructure's configuration. It lays down:

- Networking: VPCs, subnets, security groups, and routing tables.

- Compute: The virtual machines for Kubernetes worker nodes (e.g., EC2 instances in an ASG).

- Managed Services: The control planes for services like Amazon EKS, Google GKE, or Azure AKS.

- IAM: The specific roles and permissions required for the Kubernetes control plane and nodes to function.

Once this foundation is provisioned, Kubernetes takes over as the runtime orchestrator. It manages the dynamic, application-level resources within the cluster:

- Workloads: Deployments, StatefulSets, and DaemonSets that manage pod lifecycles.

- Networking: Services and Ingress objects that route traffic into the cluster and between pods.

- Configuration: ConfigMaps and Secrets that decouple application configuration from container images.

A Blueprint for Modern DevOps

This division of labor is the cornerstone of efficient and reliable cloud operations. It allows infrastructure teams and application teams to operate independently, using the tool best suited for their domain.

The scale of modern cloud environments necessitates this approach. It's not uncommon for a single enterprise to run more than 20 Kubernetes clusters across multiple clouds and on-premises data centers. Managing this complexity without a robust IaC strategy is operationally infeasible.

This separation of duties yields critical technical benefits:

- Idempotent Environments: Running terraform apply multiple times converges on the same infrastructure state, which limits configuration drift across development, staging, and production.

- Declarative Scaling: Scaling a node pool is a simple code change (e.g., desired_size = 5). Terraform calculates the delta and executes the required API calls to achieve the target state.

- Reduced Manual Errors: Defining infrastructure in HCL (HashiCorp Configuration Language) minimizes the risk of human error from manual console operations, a leading cause of outages.

- Git-based Auditing: Storing infrastructure code in Git provides a complete, auditable history of every change, viewable through git log and pull request reviews.

This layered approach is more than just a technical convenience; it's a strategic blueprint for building resilient and automated systems. By using each tool for what it does best, you get all the benefits of Infrastructure as Code at every single layer of your stack.

Ultimately, this powerful duo solves some of the biggest challenges in DevOps. Terraform provides the stable, version-controlled foundation, while Kubernetes delivers the dynamic, self-healing runtime environment your applications need to thrive. It's the standard for building cloud-native systems that are not just powerful, but also maintainable and ready to scale for whatever comes next.

Choosing Your Integration Strategy

When integrating Terraform and Kubernetes, the most critical decision is defining the boundary of responsibility. A poorly defined boundary leads to state conflicts, operational complexity, and workflow friction.

Think of it as two reconciliation mechanisms: Terraform reconciles on demand, each time terraform apply runs, while Kubernetes controllers (e.g., the deployment controller) reconcile continuously. The goal is to prevent them from fighting over the same resources.

Terraform's core strength lies in managing long-lived, static infrastructure that underpins the Kubernetes cluster:

- Networking: VPCs, subnets, and security groups.

- Identity and Access Management (IAM): The roles and permissions your cluster needs to talk to other cloud services.

- Managed Kubernetes Services: The actual control planes for Amazon EKS, Google GKE, or Azure AKS.

- Worker Nodes: The fleet of virtual machines that make up your node pools.

Kubernetes, in contrast, is designed to manage the dynamic, short-lived, and frequently changing resources inside the cluster. It excels at orchestrating application lifecycles, handling deployments, services, scaling, and self-healing.

Establishing a clear separation of concerns is fundamental to a successful integration.

The Cluster Provisioning Model

The most robust and widely adopted pattern is to use Terraform exclusively for provisioning the Kubernetes cluster and its direct dependencies. Once the cluster is operational and its kubeconfig is generated, Terraform's job is complete.

Application deployment and management are then handed off to a Kubernetes-native tool. This is the ideal entry point for GitOps tools like ArgoCD or Flux. These tools continuously synchronize the state of the cluster with declarative manifests stored in a Git repository.

This approach creates a clean, logical separation:

- The Infrastructure Team uses Terraform to manage the lifecycle of the cluster itself. The output is a kubeconfig file.

- Application Teams commit Kubernetes YAML manifests to a Git repository, which a GitOps controller applies to the cluster.

This model is highly scalable and aligns with modern team structures, empowering developers to manage their applications without requiring infrastructure-level permissions.

The Direct Management Model

An alternative is using the Terraform Kubernetes Provider to manage resources directly inside the cluster. This provider allows you to define Kubernetes objects like Deployments, Services, and ConfigMaps using HCL, right alongside your infrastructure code.

This approach unifies the entire stack under Terraform's state management. It can be effective for bootstrapping a cluster with essential services, such as an ingress controller or a monitoring agent, as part of the initial terraform apply.

However, this model has significant drawbacks. When Terraform manages in-cluster resources, its configuration and state file become the source of truth for them, which conflicts with changes made by anyone or anything else inside the cluster. If an operator uses kubectl edit deployment to make a change, the next terraform plan will report it as drift and the next terraform apply will revert it. This creates a constant tug-of-war between imperative kubectl commands and Terraform's declarative state.

This unified approach buys a single workflow at the cost of operational complexity later. It can be effective for small teams or for managing foundational cluster add-ons, but it often becomes brittle at scale when multiple teams are deploying applications.

The Hybrid Integration Model

For most production use cases, a hybrid model offers the optimal balance of stability and agility.

Here’s the typical implementation:

- Terraform provisions the cluster, node pools, and critical, static add-ons using the Kubernetes and Helm providers. These are foundational components that change infrequently, like cert-manager, Prometheus, or a cluster autoscaler.

- GitOps tools like ArgoCD or Flux are then deployed by Terraform to manage all dynamic application workloads.

This strategy establishes a clear handoff: Terraform configures the cluster's "operating system," while GitOps tools manage the "applications." This is often the most effective and scalable model, providing rock-solid infrastructure with a nimble application delivery pipeline.
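The handoff can be sketched in HCL. This is a minimal sketch rather than a definitive setup: it assumes version 2.x of the hashicorp/helm provider (the nested kubernetes block changed shape in 3.x), a module named eks that exposes the cluster endpoint and CA data, the AWS CLI on the machine running Terraform, and the community argo-cd chart from the argo-helm repository. The release name and namespace are illustrative.

```hcl
# Sketch only. If the configuration already has a required_providers block,
# merge the helm entry into it instead of declaring a second block.
terraform {
  required_providers {
    helm = {
      source  = "hashicorp/helm"
      version = "~> 2.12"
    }
  }
}

provider "helm" {
  kubernetes {
    host                   = module.eks.cluster_endpoint
    cluster_ca_certificate = base64decode(module.eks.cluster_certificate_authority_data)

    # Ask the AWS CLI for a fresh token whenever the provider needs one.
    exec {
      api_version = "client.authentication.k8s.io/v1beta1"
      command     = "aws"
      args        = ["eks", "get-token", "--cluster-name", module.eks.cluster_name]
    }
  }
}

# Terraform installs the GitOps controller once. Application manifests are
# then reconciled by Argo CD from Git and never enter Terraform's state.
resource "helm_release" "argocd" {
  name             = "argocd"
  namespace        = "argocd"
  create_namespace = true

  repository = "https://argoproj.github.io/argo-helm"
  chart      = "argo-cd"
  # Pin a chart version in real use so upgrades are deliberate.
}
```

Everything Argo CD deploys afterwards lives outside Terraform, which is what keeps the two tools from competing for the same objects.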

Comparing Terraform and Kubernetes Integration Patterns

The right pattern depends on your team's scale, workflow, and operational maturity. Understanding the trade-offs is key.

| Integration Pattern | Primary Use Case | Advantages | Challenges |

|---|---|---|---|

| Cluster Provisioning | Managing the K8s cluster lifecycle and handing off application deployments to GitOps tools. | Excellent separation of concerns, empowers application teams, highly scalable and secure. | Requires managing two distinct toolchains (Terraform for infra, GitOps for apps). |

| Direct Management | Using Terraform's Kubernetes Provider to manage both the cluster and in-cluster resources. | A single, unified workflow for all resources; useful for bootstrapping cluster services. | Can lead to state conflicts and drift; couples infrastructure and application lifecycles. |

| Hybrid Model | Using Terraform for the cluster and foundational add-ons, then deploying a GitOps agent for apps. | Balances stability and agility; ideal for most production environments. | Slight initial complexity in setting up the handoff between Terraform and the GitOps tool. |

Ultimately, the goal is a workflow that feels natural and reduces friction. For most teams, the Hybrid Model offers the best of both worlds, providing a stable foundation with the flexibility needed for modern application development.

Let's transition from theory to practice by provisioning a production-grade Kubernetes cluster on AWS using Terraform.

This walkthrough provides a repeatable template for building an Amazon Elastic Kubernetes Service (EKS) cluster, incorporating security and scalability best practices from the start.

Provisioning a Kubernetes Cluster with Terraform

We will leverage the terraform-aws-modules/eks/aws module from the Terraform Registry. Using a vetted, community-supported module like this is a critical best practice. It abstracts away immense complexity and encapsulates AWS best practices for EKS deployment, saving you from building and maintaining hundreds of lines of resource definitions.

The conceptual model is simple: Terraform is the IaC tool that interacts with cloud APIs to build the infrastructure, and Kubernetes is the orchestrator that manages the containerized workloads within that infrastructure.

This diagram clarifies the separation of responsibilities. Terraform communicates with the cloud provider's API to create resources, then exposes the endpoint and credentials that kubectl needs to interact with the newly created cluster.

Setting Up the Foundation

Before writing any HCL, we must address state management. Storing the terraform.tfstate file locally is untenable for any team-based or production environment due to the risk of divergence and data loss.

We will configure a remote state backend using an AWS S3 bucket and a DynamoDB table for state locking. This ensures that only one terraform apply process can modify the state at a time, preventing race conditions and state corruption. It is a non-negotiable component of a professional Terraform workflow.
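The bucket and lock table have to exist before any configuration can use them as a backend. One common approach, sketched here under assumptions, is a small bootstrap configuration that is applied once with local state. The file path and both resource names are placeholders and must match whatever the backend block references.

```hcl
# bootstrap/main.tf (hypothetical path): apply once, with local state,
# before enabling the S3 backend in the main configuration.

provider "aws" {
  region = "us-east-1"
}

resource "aws_s3_bucket" "tf_state" {
  bucket = "my-terraform-state-bucket-unique-name"
}

# Keep prior state versions so a bad write can be rolled back.
resource "aws_s3_bucket_versioning" "tf_state" {
  bucket = aws_s3_bucket.tf_state.id

  versioning_configuration {
    status = "Enabled"
  }
}

# State can contain secrets, so block every form of public access.
resource "aws_s3_bucket_public_access_block" "tf_state" {
  bucket = aws_s3_bucket.tf_state.id

  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}

# The S3 backend expects a string partition key named exactly "LockID".
resource "aws_dynamodb_table" "tf_locks" {
  name         = "my-terraform-locks"
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }
}
```

Keeping the bootstrap in its own directory avoids the chicken-and-egg problem of a configuration that stores its state in a bucket it creates itself.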

By 2025, Terraform is projected to be used by over one million organizations. Its provider-based architecture and deep integration with cloud providers like AWS, Azure, and Google Cloud make it the de facto standard for provisioning complex infrastructure like a Kubernetes cluster.

With our strategy defined, let's begin coding the infrastructure.

Step 1: Configure the AWS Provider and Remote Backend

First, we must declare the required provider and configure the remote backend. This is typically done in a providers.tf or main.tf file.

```hcl
# main.tf

terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }

  # Configure the S3 backend for remote state storage
  backend "s3" {
    bucket         = "my-terraform-state-bucket-unique-name" # Must be globally unique
    key            = "global/eks/terraform.tfstate"
    region         = "us-east-1"
    encrypt        = true
    dynamodb_table = "my-terraform-locks" # For state locking
  }
}

provider "aws" {
  region = "us-east-1"
}
```

This configuration block performs two essential functions:

- The AWS Provider: It instructs Terraform to download and use the official HashiCorp AWS provider.

- The S3 Backend: It configures Terraform to store its state file in a specific S3 bucket and to use a DynamoDB table for state locking, which is critical for collaborative environments.

Step 2: Define Networking with a VPC

A Kubernetes cluster requires a robust network foundation. We will use the community-maintained terraform-aws-modules/vpc/aws module to create a Virtual Private Cloud (VPC). This module abstracts the creation of public and private subnets across multiple Availability Zones (AZs) to ensure high availability.

```hcl
# vpc.tf

module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "5.5.2"

  name = "my-eks-vpc"
  cidr = "10.0.0.0/16"

  azs             = ["us-east-1a", "us-east-1b", "us-east-1c"]
  private_subnets = ["10.0.1.0/24", "10.0.2.0/24", "10.0.3.0/24"]
  public_subnets  = ["10.0.101.0/24", "10.0.102.0/24", "10.0.103.0/24"]

  enable_nat_gateway = true
  single_nat_gateway = true # For cost savings in non-prod environments

  # Tags required by EKS
  public_subnet_tags = {
    "kubernetes.io/role/elb" = "1"
  }

  private_subnet_tags = {
    "kubernetes.io/role/internal-elb" = "1"
  }

  tags = {
    "Terraform"   = "true"
    "Environment" = "dev"
  }
}
```

This module automates the creation of the VPC, subnets, route tables, internet gateways, and NAT gateways. It saves an incredible amount of time and prevents common misconfigurations. If you're new to HCL syntax, our Terraform tutorial for beginners is a great place to get up to speed.

Step 3: Provision the EKS Control Plane and Node Group

Now we will provision the EKS cluster itself using the terraform-aws-modules/eks/aws module.

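```hcl
# eks.tf

module "eks" {
  source  = "terraform-aws-modules/eks/aws"
  version = "20.8.4"

  cluster_name    = "my-demo-cluster"
  cluster_version = "1.29"

  # Module v20 defaults to a private-only API endpoint and gives the creating
  # identity no cluster access. Both settings are needed to reach the API from
  # outside the VPC as the identity that runs Terraform. Restrict or disable
  # public access for hardened environments.
  cluster_endpoint_public_access           = true
  enable_cluster_creator_admin_permissions = true

  vpc_id     = module.vpc.vpc_id
  subnet_ids = module.vpc.private_subnets

  eks_managed_node_groups = {
    general_purpose = {
      min_size     = 1
      max_size     = 3
      desired_size = 2

      instance_types = ["t3.medium"]
      ami_type       = "AL2_x86_64"
    }
  }

  tags = {
    Environment = "dev"
    Owner       = "my-team"
  }
}
```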

Key Insight: See how we're passing outputs from our VPC module (module.vpc.vpc_id) directly as inputs to our EKS module? This is the magic of Terraform. You compose complex infrastructure by wiring together modular, reusable building blocks.

This code defines both the EKS control plane and a managed node group for our worker nodes. The eks_managed_node_groups block specifies all the details, like instance types and scaling rules.

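With these files created, running terraform init, terraform plan, and terraform apply will provision the entire cluster. You now have a working Kubernetes cluster managed entirely as code. Before promoting it to production, revisit the dev-oriented settings above, such as single_nat_gateway and the node group sizing.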
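To hand the cluster over to people and tools that use kubectl, it can help to surface the connection details as outputs. The sketch below assumes the module and region used in this walkthrough; the file name and output names are illustrative.

```hcl
# outputs.tf (hypothetical file name)

output "cluster_endpoint" {
  description = "URL of the EKS API server"
  value       = module.eks.cluster_endpoint
}

output "configure_kubectl" {
  description = "Command that writes a kubeconfig entry for the new cluster"
  value       = "aws eks update-kubeconfig --region us-east-1 --name ${module.eks.cluster_name}"
}
```

After an apply, terraform output configure_kubectl prints the command to run locally.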

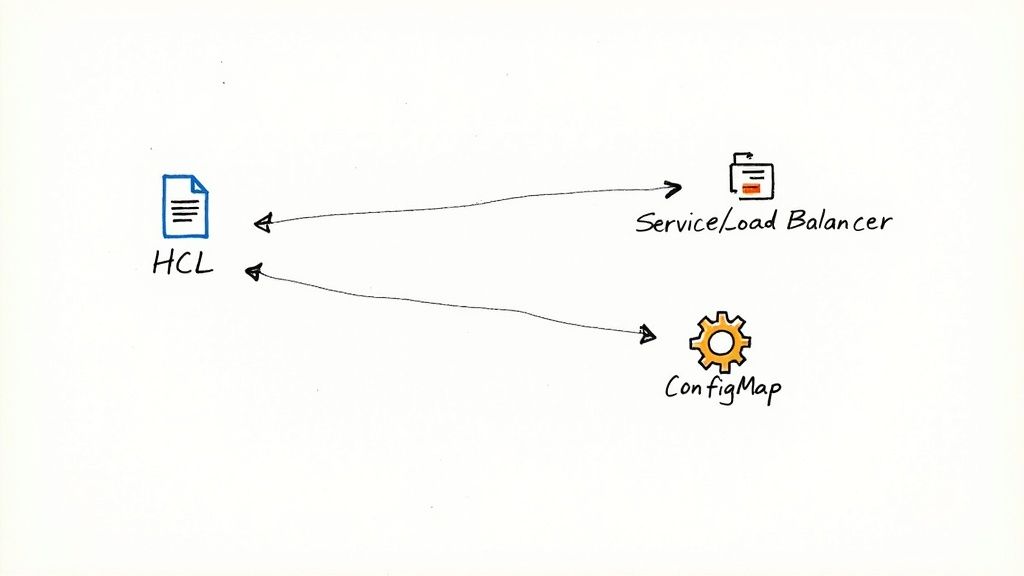

With the EKS cluster provisioned, the next step is deploying applications. While GitOps is the recommended pattern for application lifecycles, you can use Terraform to manage Kubernetes resources directly via the dedicated Kubernetes Provider.

This approach allows you to define Kubernetes objects—Deployments, Services, ConfigMaps—using HCL, integrating them into the same workflow used to provision the cluster. This is particularly useful for managing foundational cluster components or for teams standardized on the HashiCorp ecosystem.

Let's walk through the technical steps to configure this provider and deploy a sample application.

Connecting Terraform to EKS

The first step is authentication: the Terraform Kubernetes Provider needs credentials to communicate with your EKS cluster's API server.

Since we provisioned the cluster using the Terraform EKS module, we can dynamically retrieve the required authentication details from the module's outputs. This creates a secure and seamless link between the infrastructure provisioning and in-cluster management layers.

The provider configuration is as follows:

```hcl
# main.tf

data "aws_eks_cluster_auth" "cluster" {
  name = module.eks.cluster_name
}

provider "kubernetes" {
  host                   = module.eks.cluster_endpoint
  cluster_ca_certificate = base64decode(module.eks.cluster_certificate_authority_data)
  token                  = data.aws_eks_cluster_auth.cluster.token
}
```

This configuration block performs the authentication handshake:

- The aws_eks_cluster_auth data source is a helper that generates a short-lived authentication token for your cluster using the AWS IAM Authenticator mechanism.

- The kubernetes provider block consumes the host endpoint, the cluster_ca_certificate, and the generated token to establish an authenticated session with the Kubernetes API server.

With this provider configured, Terraform can now manage resources inside your cluster.
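Because the token from aws_eks_cluster_auth is short-lived, a long-running apply can outlive it. A commonly used alternative is exec-based authentication, where the provider asks the AWS CLI for a token whenever it needs one. The sketch below assumes the AWS CLI is installed wherever Terraform runs, and it would replace the provider block above rather than sit alongside it.

```hcl
# Alternative provider configuration (sketch): exec-based authentication.
# Use this instead of the token-based block, not in addition to it.
provider "kubernetes" {
  host                   = module.eks.cluster_endpoint
  cluster_ca_certificate = base64decode(module.eks.cluster_certificate_authority_data)

  exec {
    api_version = "client.authentication.k8s.io/v1beta1"
    command     = "aws"
    args        = ["eks", "get-token", "--cluster-name", module.eks.cluster_name]
  }
}
```

The trade-off is an extra runtime dependency on the AWS CLI in exchange for tokens that cannot go stale mid-run.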

Deploying a Sample App with HCL

To demonstrate how Kubernetes and Terraform work together for in-cluster resources, we will deploy a simple Nginx application. This requires defining three Kubernetes objects: a ConfigMap, a Deployment, and a Service.

First, the kubernetes_config_map resource to store configuration data.

```hcl
# app.tf

resource "kubernetes_config_map" "nginx_config" {
  metadata {
    name      = "nginx-config"
    namespace = "default"
  }

  data = {
    "config.conf" = "server_tokens off;"
  }
}
```

Next, the kubernetes_deployment resource. Note how HCL allows for dependencies and references between resources.

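```hcl
# app.tf

resource "kubernetes_deployment" "nginx" {
  metadata {
    name      = "nginx-deployment"
    namespace = "default"

    labels = {
      app = "nginx"
    }
  }

  spec {
    replicas = 2

    selector {
      match_labels = {
        app = "nginx"
      }
    }

    template {
      metadata {
        labels = {
          app = "nginx"
        }
      }

      spec {
        container {
          image = "nginx:1.25.3"
          name  = "nginx"

          port {
            container_port = 80
          }

          # Mount the ConfigMap entry as a single file next to the default config.
          volume_mount {
            name       = "nginx-config"
            mount_path = "/etc/nginx/conf.d/config.conf"
            sub_path   = "config.conf"
          }
        }

        # Referencing the ConfigMap resource makes Terraform create it first.
        volume {
          name = "nginx-config"

          config_map {
            name = kubernetes_config_map.nginx_config.metadata[0].name
          }
        }
      }
    }
  }
}
```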

Finally, a kubernetes_service of type LoadBalancer to expose the Nginx deployment. This will instruct the AWS cloud controller manager to provision an Elastic Load Balancer.

```hcl
# app.tf

resource "kubernetes_service" "nginx_service" {
  metadata {
    name      = "nginx-service"
    namespace = "default"
  }

  spec {
    selector = {
      app = kubernetes_deployment.nginx.spec[0].template[0].metadata[0].labels.app
    }

    port {
      port        = 80
      target_port = 80
    }

    type = "LoadBalancer"
  }
}
```

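After adding this code, running terraform apply will handle both the cloud infrastructure provisioning and the application deployment within Kubernetes in a single run. The run is not atomic: if a resource fails partway through, everything already created stays in place and in state. The Kubernetes provider documentation also recommends managing the cluster and its in-cluster resources in separate apply operations, because a provider configured from resources created in the same run can fail in ways that are hard to diagnose.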

A Critical Look at This Pattern

While a unified Terraform workflow is powerful, it's essential to understand its limitations before adopting it for application deployments.

The big win is having a single source of truth and a consistent IaC workflow for everything. The major downside is the risk of state conflicts and operational headaches when application teams need to make changes quickly.

Here’s a technical breakdown:

- The Good: This approach is ideal for bootstrapping a cluster with its foundational, platform-level services like ingress controllers (e.g., NGINX Ingress), monitoring agents (e.g., Prometheus Operator), or certificate managers (e.g., cert-manager). It allows you to codify the entire cluster setup, from VPC to core add-ons, in a single repository.

- The Challenges: The primary issue is state drift. If an application developer uses kubectl scale deployment nginx-deployment --replicas=3, the live state of the cluster now diverges from Terraform's state file. The next terraform plan will detect this discrepancy and propose reverting the replica count to 2, creating a conflict between the infrastructure tool and the application operators. This model tightly couples the application lifecycle to the infrastructure lifecycle, which can impede developer velocity.
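One way to defuse this particular conflict is to tell Terraform to stop tracking the field that operators or an autoscaler own. The sketch below uses a lifecycle ignore_changes rule. The resource name and labels are hypothetical, and the approach only covers the fields you list, so it softens the drift problem rather than solving it.

```hcl
# Sketch: Terraform sets the initial replica count, then leaves it alone.
resource "kubernetes_deployment" "nginx_scaled_externally" {
  metadata {
    name      = "nginx-scaled-externally"
    namespace = "default"
  }

  spec {
    replicas = 2 # Initial value only; later changes are ignored (see lifecycle).

    selector {
      match_labels = {
        app = "nginx-scaled-externally"
      }
    }

    template {
      metadata {
        labels = {
          app = "nginx-scaled-externally"
        }
      }

      spec {
        container {
          image = "nginx:1.25.3"
          name  = "nginx"
        }
      }
    }
  }

  lifecycle {
    # kubectl scale or an autoscaler may change this field without
    # Terraform proposing to revert it on the next plan.
    ignore_changes = [spec[0].replicas]
  }
}
```

With this rule in place, a manual kubectl scale no longer shows up as a pending change, while edits to the image or labels are still managed by Terraform.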

For most organizations, a hybrid model is the optimal solution. Use Terraform for its core strength: provisioning the cluster and its stable, foundational services. Then, delegate the management of dynamic, frequently-updated applications to a dedicated GitOps tool like ArgoCD or Flux. This approach leverages the best of both tools, resulting in a robust and scalable platform.

Implementing Advanced IaC Workflows

Provisioning a single Kubernetes cluster is the first step. Building a scalable, automated infrastructure factory requires adopting advanced Infrastructure as Code (IaC) workflows. This involves moving beyond manual terraform apply commands to a system that is modular, automated, secure, and capable of managing multiple environments and teams.

The adoption of container orchestration is widespread. Projections show that by 2025, over 60% of global enterprises will rely on Kubernetes to run their applications. The Cloud Native Computing Foundation (CNCF) has reported that 96% of the organizations it surveyed were either using or evaluating Kubernetes, cementing it as a central component of modern cloud architecture.

Structuring Projects With Terraform Modules

As infrastructure complexity grows, a monolithic Terraform configuration becomes unmaintainable. The professional standard is to adopt a modular architecture. Terraform modules are self-contained, reusable packages of HCL code that define a specific piece of infrastructure, such as a VPC or a complete Kubernetes cluster.

Instead of duplicating code for development, staging, and production environments, you create a single, well-architected module. You then instantiate this module for each environment, passing variables to customize parameters like instance sizes, CIDR blocks, or region. This approach adheres to the DRY (Don't Repeat Yourself) principle and streamlines updates. For a deeper dive, check out our guide on Terraform modules best practices.

This modular strategy is the secret to managing complexity at scale. A change to your core cluster setup is made just once—in the module—and then rolled out everywhere. This ensures consistency and drastically cuts down the risk of human error.
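As a rough illustration, a module's interface is defined by its input variables. The sketch below assumes a hypothetical local module at modules/eks-cluster; the variable names and the validation rule are illustrative, not taken from any published module.

```hcl
# modules/eks-cluster/variables.tf (hypothetical module layout)

variable "cluster_name" {
  description = "Name of the EKS cluster"
  type        = string
}

variable "cloud_region" {
  description = "AWS region the cluster is created in"
  type        = string
}

variable "node_count" {
  description = "Desired number of worker nodes"
  type        = number
  default     = 2

  # Reject obviously invalid input at plan time.
  validation {
    condition     = var.node_count >= 1
    error_message = "node_count must be at least 1."
  }
}

variable "instance_types" {
  description = "EC2 instance types for the managed node group"
  type        = list(string)
  default     = ["t3.medium"]
}
```

Typed variables with defaults and validation keep each environment's configuration short and make mistakes surface during terraform plan instead of during apply.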

Automating Deployments With CI/CD Pipelines

Executing terraform apply from a local machine is a significant security risk and does not scale. For any team managing Kubernetes and Terraform, a robust CI/CD pipeline is a non-negotiable requirement. Automating the IaC workflow provides predictability, auditability, and a crucial safety net.

Tools like GitHub Actions are well-suited for building this automation. If you're looking to get started, this guide on creating reusable GitHub Actions is a great resource.

A typical CI/CD pipeline for a Terraform project includes these stages:

- Linting and Formatting: The pipeline runs terraform fmt -check and tflint to enforce consistent code style and check for errors.

- Terraform Plan: On every pull request, a job runs terraform plan -out=tfplan and posts the output as a comment for peer review. This ensures full visibility into proposed changes.

- Manual Approval: For production environments, a protected branch or environment with a required approver ensures that a senior team member signs off before applying changes.

- Terraform Apply: Upon merging the pull request to the main branch, the pipeline automatically executes terraform apply "tfplan" to roll out the approved changes.

Mastering State and Secrets Management

Two final pillars of an advanced workflow are state and secrets management. Using remote state backends (e.g., AWS S3 with DynamoDB) is mandatory for team collaboration. It provides a canonical source of truth and, critically, state locking. This mechanism prevents concurrent terraform apply operations from corrupting the state file.

Handling sensitive data such as API keys, database credentials, and TLS certificates is equally important. Hardcoding secrets in .tf files is a severe security vulnerability. The correct approach is to integrate with a dedicated secrets management tool.

Common strategies include:

- HashiCorp Vault: A purpose-built tool for managing secrets, certificates, and encryption keys, with a dedicated Terraform provider.

- Cloud-Native Secret Managers: Services like AWS Secrets Manager or Azure Key Vault provide tight integration with their respective cloud ecosystems and can be accessed via Terraform data sources.

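By externalizing secrets, the Terraform code itself contains no sensitive information and can be stored in version control safely. The configuration fetches credentials at runtime, enforcing a clean separation between code and secrets, a non-negotiable practice for production-grade Kubernetes environments. Keep in mind that any value Terraform reads through a data source is still written to the state file in plaintext, so the remote state backend must be encrypted and tightly access-controlled.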
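A minimal sketch of the cloud-native variant follows. It assumes a secret already exists in AWS Secrets Manager under the hypothetical name prod/app/db-password, and that the AWS and Kubernetes providers are configured as earlier in this walkthrough.

```hcl
# Read the secret at plan/apply time instead of hardcoding it.
# The secret name is hypothetical.
data "aws_secretsmanager_secret_version" "db_password" {
  secret_id = "prod/app/db-password"
}

resource "kubernetes_secret" "db_credentials" {
  metadata {
    name      = "db-credentials"
    namespace = "default"
  }

  # Values are given in plain form; the provider handles base64 encoding.
  # They are also recorded in Terraform state, so protect the state backend.
  data = {
    password = data.aws_secretsmanager_secret_version.db_password.secret_string
  }

  type = "Opaque"
}
```

The .tf files stay free of credentials, and rotating the secret in Secrets Manager is picked up on the next apply.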

Navigating the integration of Kubernetes and Terraform inevitably raises critical architectural questions. Answering them correctly from the outset is key to building a maintainable and scalable system. Let's address the most common technical inquiries.

Should I Use Terraform for Application Deployments in Kubernetes?

While technically possible with the Kubernetes provider, using Terraform for frequent application deployments is generally an anti-pattern. The most effective strategy relies on a clear separation of concerns.

Use Terraform for its primary strength: provisioning the cluster and its foundational, platform-level services. This includes static components that change infrequently, such as an ingress controller, a service mesh (like Istio or Linkerd), or the monitoring and logging stack (like the Prometheus and Grafana operators).

For dynamic application deployments, Kubernetes-native GitOps tools like ArgoCD or Flux are far better suited. They are purpose-built for continuous delivery within Kubernetes and offer critical capabilities that Terraform lacks:

- Developer Self-Service: Application teams can manage their own release cycles by pushing changes to a Git repository, without needing permissions to the underlying infrastructure codebase.

- Drift Detection and Reconciliation: GitOps controllers continuously monitor the cluster's live state against the desired state in Git, automatically correcting any unauthorized or out-of-band changes.

- Advanced Deployment Strategies: They provide native support for canary releases, blue-green deployments, and automated rollbacks, which are complex to implement in Terraform.

This hybrid model—Terraform for the platform, GitOps for the applications—leverages the strengths of both tools, creating a workflow that is both robust and agile.

How Do Terraform and Helm Integrate?

Terraform and Helm integrate seamlessly via the official Terraform Helm Provider. This provider allows you to manage Helm chart releases as a helm_release resource directly within your HCL code.

This is the ideal method for deploying third-party, off-the-shelf applications that constitute your cluster's core services. Examples include cert-manager for automated TLS certificate management, Prometheus for monitoring, or Istio for a service mesh.

By managing these Helm releases with Terraform, you codify the entire cluster setup in a single, version-controlled repository. This unified workflow provisions everything from the VPC and IAM roles up to the core software stack running inside the cluster. The result is complete, repeatable consistency across all environments with every terraform apply.
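A helm_release for cert-manager might look like the sketch below. It makes several assumptions: version 2.x of the hashicorp/helm provider (block syntax differs in 3.x), the module.eks outputs from this walkthrough, and a cert-manager chart recent enough to support the crds.enabled value. The data source name is illustrative.

```hcl
# Sketch only. Merge the helm entry into the existing required_providers
# block rather than declaring a second one.
terraform {
  required_providers {
    helm = {
      source  = "hashicorp/helm"
      version = "~> 2.12"
    }
  }
}

data "aws_eks_cluster_auth" "helm" {
  name = module.eks.cluster_name
}

provider "helm" {
  kubernetes {
    host                   = module.eks.cluster_endpoint
    cluster_ca_certificate = base64decode(module.eks.cluster_certificate_authority_data)
    token                  = data.aws_eks_cluster_auth.helm.token
  }
}

resource "helm_release" "cert_manager" {
  name             = "cert-manager"
  namespace        = "cert-manager"
  create_namespace = true

  repository = "https://charts.jetstack.io"
  chart      = "cert-manager"
  # Pin a chart version in real use so upgrades are deliberate.

  # Install the CRDs with the release. Assumes chart v1.15 or newer;
  # older charts used the installCRDs value instead.
  set {
    name  = "crds.enabled"
    value = "true"
  }
}
```

Chart upgrades then become ordinary pull requests: change the pinned version, review the plan, and apply.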

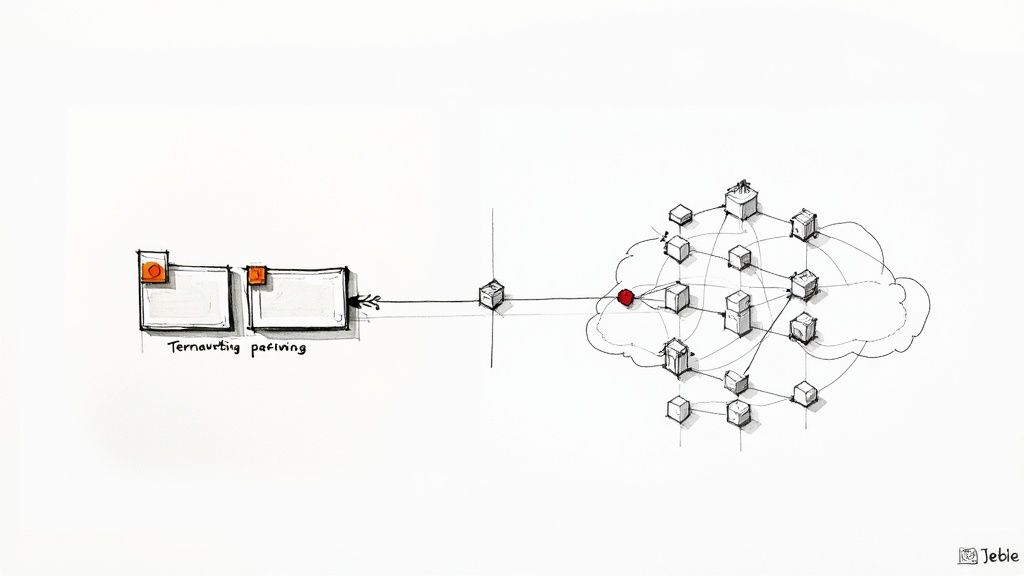

What Is the Best Way to Manage Multiple K8s Clusters?

For managing multiple clusters (e.g., for different environments or regions), a modular architecture is the professional standard. The strategy involves creating a reusable Terraform module that defines the complete configuration for a single, well-architected cluster.

This module is then instantiated from a root configuration for each environment (dev, staging, prod) or region. Variables are passed to the module to customize specific parameters for each instance, such as cluster_name, node_count, or cloud_region.

Crucial Insight: The single most important part of this strategy is using a separate remote state file for each cluster. This practice isolates the environments from one another: a failed or mistaken apply in one cannot modify resources tracked in another's state, which dramatically shrinks the blast radius if something goes wrong.

This modular approach keeps your infrastructure code DRY (Don't Repeat Yourself), making it more scalable, easier to maintain, and far less prone to configuration drift over time.
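Put together, one environment's root configuration could look like the sketch below. The directory layout, module path, and cluster name are hypothetical; it assumes a local module that accepts the three variables mentioned above and reuses the state bucket and lock table from earlier in this walkthrough.

```hcl
# environments/prod/main.tf (hypothetical layout; dev and staging each
# get their own directory with a different backend key)

terraform {
  backend "s3" {
    bucket         = "my-terraform-state-bucket-unique-name"
    key            = "prod/eks/terraform.tfstate" # Unique key per cluster isolates state
    region         = "us-east-1"
    encrypt        = true
    dynamodb_table = "my-terraform-locks"
  }
}

module "cluster" {
  source = "../../modules/eks-cluster" # hypothetical local module

  cluster_name = "prod-cluster"
  node_count   = 3
  cloud_region = "us-east-1"
}
```

Because each directory has its own backend key, running terraform apply in environments/dev never reads or writes the production state.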

