Managing feature flags without creating unmaintainable spaghetti code requires a full lifecycle process that covers everything from a flag's initial creation and rollout strategy to its mandatory cleanup. Without a disciplined approach, flags accumulate and become a significant source of technical debt that complicates debugging, testing, and new development. The key is strict governance, clear ownership via a registry, and automated cleanup, which together keep the codebase healthy and the team moving quickly.

Why You Can't Afford to Ignore Feature Flag Management

Feature flags are a powerful tool in modern CI/CD, but they introduce a significant risk if managed poorly. Without a deliberate management strategy, they accumulate, creating a tangled web of conditional logic (if/else blocks) that increases the codebase's cyclomatic complexity. This makes the code brittle, exponentially harder to test, and nearly impossible for new engineers to reason about. This isn't a minor inconvenience; it's a direct path to operational chaos and crippling technical debt.

The core problem is that flags created for temporary purposes—a canary release, an A/B test, or an operational toggle—are often forgotten once their initial purpose is served. Each orphaned flag represents a dead code path, a potential security vulnerability, and another layer of cognitive load for developers. Imagine debugging a production incident when dozens of latent flags could be altering application behavior based on user attributes or environmental state.

The Hidden Costs of Poor Flag Hygiene

Unmanaged flags create significant operational risk and negate the agility they are meant to provide. Teams lacking a formal process inevitably encounter:

- Bloated Code Complexity: Every if/else block tied to a flag adds to the cognitive load required to understand a function or service. This slows down development on subsequent features and dramatically increases the likelihood of introducing bugs.

- Testing Nightmares: With each new flag, the number of possible execution paths grows exponentially (2^n, where n is the number of flags). It quickly becomes impractical to test every combination, leaving critical gaps in QA coverage and opening the door to unforeseen production failures.

- Stale and Zombie Flags: Flags that are no longer in use but remain in the codebase are particularly dangerous. They can be toggled accidentally via an API call or misconfiguration, causing unpredictable behavior or, worse, re-enabling old bugs that were thought to be fixed.
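To make the 2^n growth from the Testing Nightmares item concrete, here is a minimal sketch that enumerates every on/off combination for a set of boolean flags. The function name and the flag names are hypothetical and exist only for illustration.

```javascript
// Enumerate every on/off combination for a list of boolean flag names.
// Flag names used below are hypothetical.
function flagCombinations(flagNames) {
  const total = 2 ** flagNames.length;
  const combos = [];
  for (let mask = 0; mask < total; mask++) {
    const combo = {};
    flagNames.forEach((name, i) => {
      // Bit i of the mask decides whether flag i is on in this combination.
      combo[name] = Boolean(mask & (1 << i));
    });
    combos.push(combo);
  }
  return combos;
}

// Three flags already produce eight distinct configurations to test.
const exampleCombos = flagCombinations(["new-checkout", "apple-pay", "dark-mode"]);
console.log(exampleCombos.length);
```

Ten flags would yield 1,024 combinations and twenty would yield over a million, which is why retiring flags matters more than trying to test every combination.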

A disciplined, programmatic approach to managing feature flags is the difference between a high-velocity development team and one bogged down by its own tooling. The goal is to design flags as ephemeral artifacts, ensuring they are retired as soon as they become obsolete.

From Ad-Hoc Toggles to a Governed System

Effective flag management requires shifting from using flags as simple boolean switches to treating them as managed components of your infrastructure with a defined lifecycle. Organizations that master feature flag-driven development report significant improvements, such as a 20-30% increase in deployment frequency. This is achieved by decoupling code deployment from feature release, enabling safer and more frequent production updates. You can explore more insights about feature flag-based development and its impact on CI/CD pipelines.

This transition requires a formal lifecycle for every flag, including clear ownership, standardized naming conventions, and a defined Time-to-Live (TTL). By embedding this discipline into your workflow, you transform feature flags from a potential liability into a strategic asset for continuous delivery.

Building Your Feature Flag Lifecycle Management Process

Allowing feature flags to accumulate is a direct path to technical debt and operational instability. To prevent this, you must implement a formal lifecycle management process, treating flags as first-class citizens of your codebase, not as temporary workarounds. This begins with establishing strict, non-negotiable standards for how every flag is created, documented, and ultimately decommissioned.

The first step is enforcing a strict naming convention. A vague name like new-checkout-flow is useless six months later when the original context is lost. A structured format provides immediate context. A robust convention is [team]-[ticket]-[description]. For example, payments-PROJ123-add-apple-pay immediately tells any engineer the owning team (payments), the associated work item (PROJ-123), and its explicit purpose. This simple discipline saves hours during debugging or code cleanup.
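A convention like this is easiest to keep when it is checked automatically, for example in a pre-commit hook or CI step. The sketch below is one possible validator. The exact pattern (lowercase team name, a ticket key written like PROJ123, a lowercase hyphenated description) is an assumption inferred from the example above, so adjust it to your own rules.

```javascript
// Hypothetical validator for the [team]-[ticket]-[description] convention.
// Assumes: lowercase team, ticket key such as PROJ123, and a lowercase,
// hyphen-separated description.
const FLAG_NAME_PATTERN = /^([a-z]+)-([A-Z]+\d+)-([a-z0-9]+(?:-[a-z0-9]+)*)$/;

// Returns the parsed parts of a valid name, or null if the name does not conform.
function parseFlagName(name) {
  const match = FLAG_NAME_PATTERN.exec(name);
  if (!match) return null;
  const [, team, ticket, description] = match;
  return { team, ticket, description };
}

console.log(parseFlagName("payments-PROJ123-add-apple-pay")); // parsed parts
console.log(parseFlagName("new-checkout-flow")); // null: no ticket key
```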

Establishing a Central Flag Registry

A consistent naming convention is necessary but not sufficient. Every flag requires standardized metadata stored in a central flag registry—your single source of truth. This should not be a spreadsheet; it must be a version-controlled file (e.g., flags.yaml in your repository) or managed within a dedicated feature flagging platform like LaunchDarkly.

This registry must track the following for every flag:

- Owner: The team or individual responsible for the flag's lifecycle.

- Creation Date: The timestamp of the flag's introduction.

- Ticket Link: A direct URL to the associated Jira, Linear, or Asana ticket.

- Expected TTL (Time-to-Live): A target date for the flag's removal, which drives accountability.

- Description: A concise, plain-English summary of the feature's function and impact.

This infographic illustrates how the absence of a structured process degrades agility and leads to chaos.

Without a formal process, initial agility quickly spirals into unmanageable complexity. A structured lifecycle is the only way to maintain predictability and control at scale.

A clean flag definition in a flags.yaml file might look like this:

flags:
  - name: "payments-PROJ123-add-apple-pay"
    owner: "@team-payments"
    description: "Enables the Apple Pay option in the checkout flow for users on iOS 16+."
    creationDate: "2024-08-15"
    ttl: "2024-09-30"
    ticket: "https://your-jira.com/browse/PROJ-123"
    type: "release"
    permanent: false

This registry serves as the foundation of your governance model, providing immediate context for audits and automated tooling. For technical implementation details, our guide on how to implement feature toggles offers a starting point.
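If the registry lives in a version-controlled file, a CI check can reject incomplete entries before they merge. The following is a rough sketch of such a check. It assumes the YAML has already been parsed into plain objects (the parsing step is omitted), and the function name and error messages are hypothetical.

```javascript
// Required metadata fields, following the registry fields listed earlier.
const REQUIRED_FIELDS = ["name", "owner", "description", "creationDate", "ticket", "type"];

// Returns a list of problems; an empty list means the entry passes.
function validateFlagEntry(entry) {
  const problems = [];
  for (const field of REQUIRED_FIELDS) {
    if (!entry[field]) problems.push(`missing ${field}`);
  }
  // Temporary flags must carry a TTL; permanent flags are exempt.
  if (!entry.permanent && !entry.ttl) {
    problems.push("temporary flag has no ttl");
  }
  // ISO dates (YYYY-MM-DD) compare correctly as plain strings.
  if (entry.ttl && entry.creationDate && entry.ttl <= entry.creationDate) {
    problems.push("ttl must be after creationDate");
  }
  return problems;
}

const applePayEntry = {
  name: "payments-PROJ123-add-apple-pay",
  owner: "@team-payments",
  description: "Enables the Apple Pay option in the checkout flow for users on iOS 16+.",
  creationDate: "2024-08-15",
  ttl: "2024-09-30",
  ticket: "https://your-jira.com/browse/PROJ-123",
  type: "release",
  permanent: false,
};
console.log(validateFlagEntry(applePayEntry)); // no problems reported
```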

Differentiating Between Flag Types

Not all flags are created equal, and managing them with a one-size-fits-all approach is a critical mistake. Categorizing flags by type is essential because each type has a different purpose, risk profile, and expected lifespan. This categorization should be enforced at the time of creation.

Feature Flag Type and Use Case Comparison

This table provides a technical breakdown of common flag types. Selecting the correct type from the outset defines its lifecycle and cleanup requirements.

| Flag Type | Primary Use Case | Typical Lifespan | Key Consideration |

|---|---|---|---|

| Release Toggles | Decoupling deployment from release; gradual rollouts of new functionality. | Short-term (days to weeks) | Must have an automated cleanup ticket created upon reaching 100% rollout. |

| Experiment Toggles | A/B testing, multivariate testing, or canary releases to compare user behavior. | Medium-term (weeks to months) | Requires integration with an analytics pipeline to determine a winning variant before removal. |

| Operational Toggles | Enabling or disabling system behaviors for performance (e.g., circuit breakers), safety, or maintenance. | Potentially long-term | Must be reviewed quarterly to validate continued necessity. Overuse indicates architectural flaws. |

| Permission Toggles | Controlling access to features for specific user segments based on entitlements (e.g., beta users, premium subscribers). | Long-term or permanent | Directly tied to the product's business logic and user model; should be clearly marked as permanent: true. |

By defining a flag's type upon creation, you are pre-defining its operational lifecycle.

A 'release' flag hitting 100% rollout should automatically trigger a cleanup ticket in the engineering backlog. An 'operational' flag, on the other hand, should trigger a quarterly review notification to its owning team.
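As a rough illustration of how type can drive lifecycle automation, the sketch below maps a flag's type and rollout state to the follow-up action described above. The `rolloutPercent` field and the action names are hypothetical; a real integration would call your ticketing or notification system instead of returning a string.

```javascript
// Decide which follow-up a flag needs, based on its type.
// Field names and action labels are assumptions for illustration.
function nextLifecycleAction(flag) {
  switch (flag.type) {
    case "release":
      // A fully rolled-out release flag has served its purpose.
      return flag.rolloutPercent >= 100 ? "create-cleanup-ticket" : "none";
    case "operational":
      // Long-lived operational flags are re-validated on a schedule.
      return "schedule-quarterly-review";
    default:
      return "none";
  }
}

console.log(nextLifecycleAction({ type: "release", rolloutPercent: 100 }));
console.log(nextLifecycleAction({ type: "operational" }));
```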

This systematic approach transforms flag creation from an ad-hoc developer task into a governed, predictable engineering practice. It ensures every flag is created with a clear purpose, an owner, and a predefined plan for its eventual decommission. This is how you leverage feature flags for velocity without accumulating technical debt.

Once a robust lifecycle is established, the next step is integrating flag management directly into your CI/CD pipeline. This transforms flags from manual toggles into a powerful, automated release mechanism, enabling safe and predictable delivery at scale. The primary principle is to manage flag configurations as code (Flags-as-Code). Instead of manual UI changes, the pipeline should programmatically manage flag states for each environment via API calls or declarative configuration files. This eliminates the risk of human error, such as promoting a feature to production prematurely.

Environment-Specific Flag Configurations

A foundational practice is defining flag behavior on a per-environment basis. A new feature should typically be enabled by default in dev and staging for testing but must be disabled in production until explicitly released. This is handled declaratively, either through your feature flagging platform's API or with environment-specific config files stored in your repository.

For a new feature checkout-v2, the declarative configuration might be:

- config.dev.yaml: checkout-v2-enabled: true (Always on for developers)

- config.staging.yaml: checkout-v2-enabled: true (On for QA and automated E2E tests)

- config.prod.yaml: checkout-v2-enabled: false (Safely off until release)
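In application code, a per-environment lookup along these lines could read that configuration. This is a simplified sketch: the config is inlined as an object rather than loaded from the YAML files, and the choice to treat any unknown environment or flag as off is a design assumption, made so that a missing entry can never switch a feature on.

```javascript
// Per-environment flag values, mirroring the three config files above.
const flagConfig = {
  dev: { "checkout-v2-enabled": true },
  staging: { "checkout-v2-enabled": true },
  prod: { "checkout-v2-enabled": false },
};

// Unknown environments and unknown flags resolve to false (fail closed).
function isEnabled(config, env, flagName) {
  const envConfig = config[env];
  return Boolean(envConfig && envConfig[flagName] === true);
}

console.log(isEnabled(flagConfig, "staging", "checkout-v2-enabled")); // on for QA
console.log(isEnabled(flagConfig, "prod", "checkout-v2-enabled")); // off until release
```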

This approach decouples deployment from release, a cornerstone of modern DevOps. To fully leverage this model, it's crucial to understand the theories and practices of CI/CD.

Securing Flag Management with Access Controls and Audits

As flags become central to software delivery, controlling who can modify them and tracking when changes occur becomes critical. This is your primary defense against unauthorized or accidental production changes.

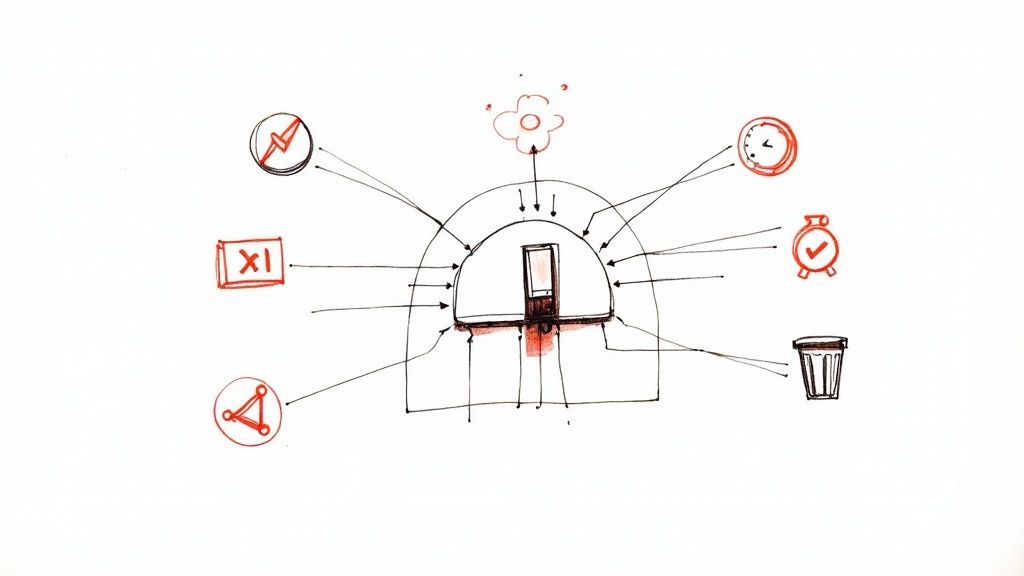

Implement Role-Based Access Control (RBAC) to define granular permissions:

- Developers: Can create flags and toggle them in dev and staging.

- QA Engineers: Can modify flags in staging to execute test plans.

- Product/Release Managers: Are the only roles permitted to modify flag states in production, typically as part of a planned release or incident response.

Every change to a feature flag's state, especially in production, must be recorded in an immutable audit log. This log should capture the user, the timestamp, and the exact change made. This is invaluable during incident post-mortems.

When a production issue occurs, the first question is always, "What changed?" A detailed, immutable log of flag modifications provides the answer in seconds, not hours.
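The permission model and audit trail can be sketched together in a few lines. Everything here is illustrative: the role keys, the `changeFlag` function, and the in-memory log are assumptions, and a production system would persist the log in an append-only store managed by your flagging platform.

```javascript
// Which environments each role may modify, following the RBAC list above.
// Role keys are hypothetical identifiers.
const PERMISSIONS = {
  developer: ["dev", "staging"],
  qa: ["staging"],
  "release-manager": ["production"],
};

function canModify(role, environment) {
  return (PERMISSIONS[role] || []).includes(environment);
}

// Applies a change only if permitted and returns a NEW log with the entry
// appended, so earlier entries are never rewritten.
function changeFlag(auditLog, change, now = new Date()) {
  const { user, role, environment, flag, newValue } = change;
  if (!canModify(role, environment)) {
    throw new Error(`${role} may not modify flags in ${environment}`);
  }
  const entry = Object.freeze({
    user,
    flag,
    environment,
    newValue,
    timestamp: now.toISOString(),
  });
  return [...auditLog, entry];
}

let auditLog = [];
auditLog = changeFlag(auditLog, {
  user: "dana",
  role: "release-manager",
  environment: "production",
  flag: "checkout-v2-enabled",
  newValue: true,
});
console.log(auditLog);
```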

Automated Smoke Testing Within the Pipeline

A powerful automation is to build a smoke test that validates code behind a disabled flag within the CI/CD pipeline. This ensures that new, unreleased code merged to your main branch doesn't introduce latent bugs.

Here is a technical workflow:

- Deploy Code: The pipeline deploys the latest build to a staging environment with the new feature flag (new-feature-x) globally OFF.

- Toggle Flag ON (Scoped): The pipeline makes an API call to the flagging service to enable new-feature-x only for the test runner's session or a specific test user.

- Run Test Suite: A targeted set of automated integration or end-to-end tests runs against critical application paths affected by the new feature.

- Toggle Flag OFF: Regardless of test outcome, the pipeline makes another API call to revert the flag's state, ensuring the environment is clean for subsequent tests.

- Report Status: If the smoke tests pass, the build is marked as stable. If they fail, the pipeline fails, immediately notifying the team of a breaking change in the unreleased code.
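The toggle, test, and revert steps of this workflow can be sketched as a single function. The `flagClient.setForUser` method and the `runTests` callback are hypothetical stand-ins for your flagging service's API and your test runner; neither is a real SDK call. The important part is the `try`/`finally`, which reverts the flag even when the test run throws.

```javascript
// flagClient.setForUser(flag, userId, value) and runTests() are hypothetical,
// injected dependencies that each return a Promise.
async function smokeTestBehindFlag(flagClient, runTests, flagName, testUserId) {
  // Enable the flag for the test user only; it stays globally OFF.
  await flagClient.setForUser(flagName, testUserId, true);
  try {
    // Run the targeted suite and translate the result into a build status.
    const passed = await runTests();
    return passed ? "stable" : "failed";
  } finally {
    // Always revert, regardless of test outcome or thrown errors.
    await flagClient.setForUser(flagName, testUserId, false);
  }
}

// Usage with in-memory stand-ins for the flag service and the test suite.
const fakeClient = {
  async setForUser(flag, userId, value) {
    console.log(`${flag} -> ${value} for ${userId}`);
  },
};
smokeTestBehindFlag(fakeClient, async () => true, "new-feature-x", "ci-test-user")
  .then((status) => console.log("build status:", status));
```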

This automated validation loop provides a critical safety net, giving developers the confidence to merge feature branches frequently without destabilizing the main branch—the core tenet of continuous integration. For more on this, review our guide on CI/CD pipeline best practices.

Advanced Rollout and Experimentation Strategies

Once you have mastered basic flag management, you can leverage them for more than simple on/off toggles. This is where you unlock their true power: sophisticated deployment strategies that de-risk releases and provide invaluable product insights. By using flags for gradual rollouts and production-based experiments, you can move from "release and pray" to data-driven delivery.

These advanced techniques allow you to validate major changes with real users before committing to a full launch.

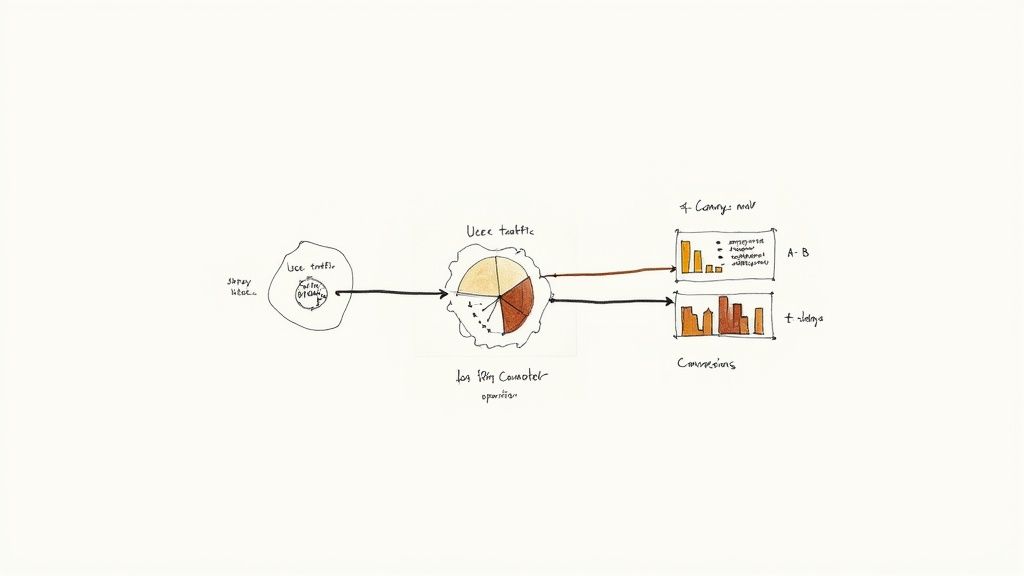

Executing a Technical Canary Release

A canary release is a technique for testing new functionality with a small subset of production traffic, serving as an early warning system for bugs or performance degradation. Feature flags are the mechanism that makes this process precise and controllable.

You begin by creating a feature flag with percentage-based or attribute-based targeting rules. Instead of a simple true/false state, this flag intelligently serves the new feature to a specific cohort.

A common first step is an internal-only release (dogfooding):

- Targeting Attribute: user.email

- Rule: if user.email.endsWith('@yourcompany.com') then serve: true

- Default Rule: serve: false

After internal validation, you can progressively expand the rollout. The next phase might target 1% of production traffic. You configure the flag to randomly assign 1% of users to the true variation.

This gradual exposure is critical. You must monitor key service metrics (error rates via Sentry/Datadog, latency, CPU utilization) for any negative correlation with the rollout. If metrics remain stable, you can increase the percentage to 5%, then 25%, and eventually 100%, completing the release.
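Commercial flagging SDKs handle this evaluation for you, but a simplified sketch shows the idea. It assumes users are bucketed by hashing the flag name and user ID, so each user lands in a stable bucket from 0 to 99 and stays in the rollout as the percentage grows. The FNV-1a-style hash and all function names are illustrative choices, not any vendor's actual algorithm.

```javascript
// FNV-1a-style 32-bit hash over UTF-16 code units: a small, dependency-free
// way to map a string to a stable number.
function fnv1a(text) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0; // force unsigned 32-bit
}

// Stable bucket in the range 0..99 for a given flag and user.
function bucketFor(flagName, userId) {
  return fnv1a(`${flagName}:${userId}`) % 100;
}

// Internal users always get the feature; everyone else is admitted by bucket.
function shouldServe(flagName, user, rolloutPercent) {
  if (user.email.endsWith("@yourcompany.com")) return true;
  return bucketFor(flagName, user.id) < rolloutPercent;
}

const visitor = { id: "user-42", email: "pat@example.com" };
console.log(shouldServe("checkout-v2", visitor, 1));
console.log(shouldServe("checkout-v2", visitor, 100));
```

Because a user's bucket never changes, anyone admitted at 1% is still admitted at 5% and 25%, which keeps the experience consistent as the rollout expands.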

Setting Up A/B Experiments with Flags

Beyond risk mitigation, feature flags are essential tools for running A/B experiments. This allows you to test hypotheses by serving different experiences to separate user groups and measuring which variant performs better against a key business metric, such as conversion rate.

To execute this, you need a multivariate flag—one that can serve multiple variations, not just on or off.

Consider a test on a new checkout button color:

- Flag Name: checkout-button-test-q3

- Variation A ("control"): {"color": "#007bff"} (The original blue)

- Variation B ("challenger"): {"color": "#28a745"} (A new green)

You configure this flag to split traffic 50/50. The flagging SDK ensures each user is sticky and consistently bucketed into one variation. Critically, your application code must report which variation a user saw when they complete a goal action.

Your analytics instrumentation would look like this:

// Get the flag variation for the current user
const buttonVariation = featureFlagClient.getVariation('checkout-button-test-q3', { default: 'control' });

// When the button is clicked, fire an analytics event with the variation info
analytics.track('CheckoutButtonClicked', {
  variationName: buttonVariation,
  userId: user.id
});

This data stream allows your analytics platform to determine if Variation B produced a statistically significant lift in clicks.
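Analytics platforms usually run this calculation for you, but it helps to see what "statistically significant" means in practice. One common approach, shown here as a sketch rather than as what any particular platform does, is a two-proportion z-test on click-through rates. The counts in the usage example are made up for illustration.

```javascript
// Two-proportion z-test comparing the click rate of variation B against A.
// Returns the z statistic; |z| > 1.96 corresponds to p < 0.05 (two-sided).
// Assumes both groups have users and the pooled rate is not exactly 0 or 1.
function twoProportionZ(clicksA, usersA, clicksB, usersB) {
  const rateA = clicksA / usersA;
  const rateB = clicksB / usersB;
  // Pooled rate under the null hypothesis that both variations perform equally.
  const pooled = (clicksA + clicksB) / (usersA + usersB);
  const standardError = Math.sqrt(pooled * (1 - pooled) * (1 / usersA + 1 / usersB));
  return (rateB - rateA) / standardError;
}

// Hypothetical counts: 100 of 1000 control users clicked vs 150 of 1000 challengers.
const z = twoProportionZ(100, 1000, 150, 1000);
console.log(z > 1.96 ? "significant lift" : "not significant");
```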

By instrumenting your code to report metrics based on flag variations, you transition from making decisions based on intuition to making them based on empirical data. This transforms a simple toggle into a powerful business intelligence tool.

These techniques are fundamental to modern DevOps. Teams that effectively use flags for progressive delivery report up to a 30% reduction in production incidents because they can instantly disable a problematic feature without a high-stress rollback. For more, explore these feature flag benefits and best practices.

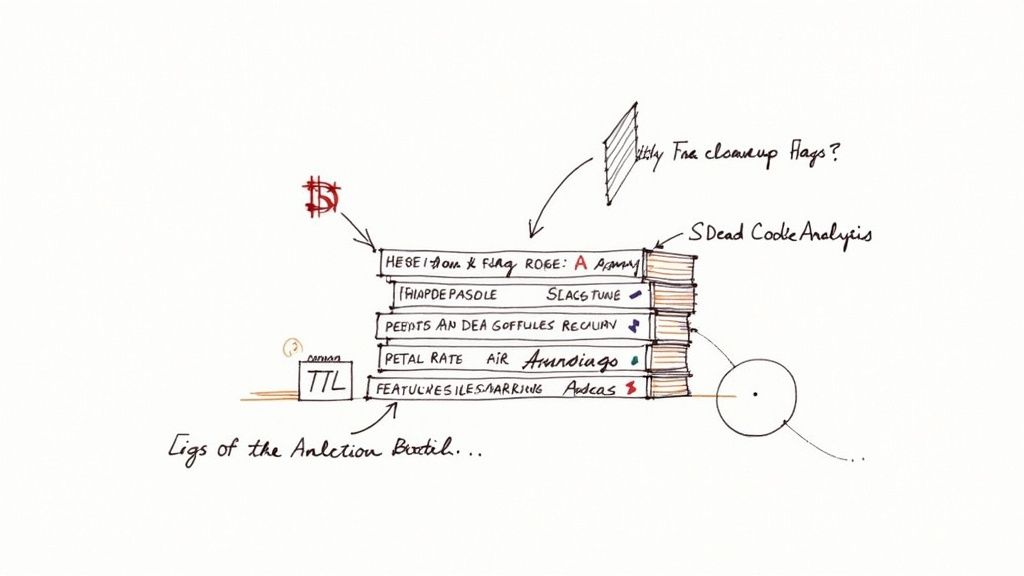

A Practical Guide To Cleaning Up Flag-Driven Tech Debt

Feature flags are intended to be temporary artifacts. Without a disciplined cleanup strategy, they become permanent fixtures, creating a significant source of technical debt that complicates the codebase and slows development. The key is to treat cleanup as a mandatory, non-negotiable part of the development lifecycle, not as a future chore.

This is a widespread problem; industry data shows that about 35% of firms struggle with cleaning up stale flags, leading directly to increased code complexity. A proactive, automated cleanup process is essential for maintaining a healthy and simple codebase.

Establish a Formal Flag Retirement Process

First, implement a formal, automated "Flag Retirement" workflow. This process begins when a flag is created by assigning it a Time-to-Live (TTL) in your flag management system. This sets the expectation from day one that the flag is temporary. As the TTL approaches, automated alerts should be sent to the flag owner's Slack channel or email, prompting them to initiate the retirement process.

The retirement workflow should consist of clear, distinct stages:

- Review: The flag owner validates that the flag is no longer needed (e.g., the feature has rolled out to 100% of users, the A/B test has concluded).

- Removal: A developer creates a pull request to remove the conditional if/else logic associated with the flag, deleting the now-obsolete code path.

- Archiving: The flag is archived in the management platform, removing it from active configuration while preserving its history for audit purposes.
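The TTL alerting that kicks off this workflow can be sketched as a scheduled job that scans the registry. The function below assumes registry entries shaped like the flags.yaml example (with ISO `ttl` dates and a `permanent` field); the 14-day warning window and the function name are arbitrary assumptions, and delivery to Slack or email is left out.

```javascript
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Returns temporary flags whose TTL is within `warningDays` of `today`
// (or already past). Dates are ISO strings such as "2024-09-30".
function flagsNeedingAttention(flags, today, warningDays = 14) {
  return flags
    .filter((flag) => !flag.permanent && flag.ttl)
    .map((flag) => ({
      name: flag.name,
      owner: flag.owner,
      // Negative values mean the TTL has already passed.
      daysLeft: Math.round((Date.parse(flag.ttl) - Date.parse(today)) / MS_PER_DAY),
    }))
    .filter((item) => item.daysLeft <= warningDays);
}

const registry = [
  { name: "payments-PROJ123-add-apple-pay", owner: "@team-payments", ttl: "2024-09-30", permanent: false },
  { name: "ops-kill-switch", owner: "@team-platform", permanent: true },
];
console.log(flagsNeedingAttention(registry, "2024-09-20"));
```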

Using Static Analysis To Hunt Down Dead Code

Manual cleanup is error-prone and inefficient. Use static analysis tools to automatically identify dead code paths associated with stale flags. These tools can scan the codebase for references to flags that are permanently configured to true or false in production.

For a release flag like new-dashboard-enabled that has been at 100% rollout for months, a static analysis script can be configured to find all usages and automatically flag the corresponding else block as unreachable (dead) code. This provides developers with an actionable, low-risk list of code to remove.

Automating the detection of stale flags shifts the burden from unreliable human memory to a consistent, repeatable process, preventing the gradual accumulation of technical debt.

For more strategies on this topic, our guide on how to manage technical debt provides complementary techniques.

Scripting Your Way To a Cleaner Codebase

Further automate cleanup by writing scripts that utilize your flag management platform's API and your Git repository's history. This powerful combination helps answer critical questions like, "Which flags have a 100% rollout but still exist in the code?" or "Which flags are referenced in code but are no longer defined in our flagging platform?"

A typical cleanup script's logic would be:

- Fetch All Flags: Call the flagging service's API to get a JSON list of all defined flags and their metadata (e.g., creation date, current production rollout percentage).

- Scan Codebase: Use a tool like grep or an Abstract Syntax Tree (AST) parser to find all references to these flags in the repository.

- Cross-Reference Data: Identify flags that are set to 100% true for all users but still have conditional logic in the code.

- Check Git History: For flags that appear stale, use git log -S'flag-name' to find the commits that added or removed references to the flag. A flag that has been at 100% for six months and whose references haven't changed in that time is a prime candidate for removal.
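The scan and cross-reference steps above can be sketched as two small functions. This version assumes the flag list has already been fetched from your platform's API and the source text has already been read from disk, so both are passed in as arguments. It also assumes flags are read through calls shaped like `isEnabled('name')` or `getVariation('name')`; a regex is a rough substitute for the AST parser mentioned above and will miss dynamically built flag names.

```javascript
// Collect flag names referenced in source text.
// The call shapes matched here are assumptions about how flags are read.
function findFlagReferences(sourceText) {
  const pattern = /(?:isEnabled|getVariation)\(\s*['"]([^'"]+)['"]/g;
  const names = new Set();
  for (const match of sourceText.matchAll(pattern)) {
    names.add(match[1]);
  }
  return names;
}

// Compare code references against platform data (rolloutPercent is a
// hypothetical field name for the production rollout percentage).
function crossReference(platformFlags, referencedNames) {
  const defined = new Map(platformFlags.map((flag) => [flag.name, flag]));
  const referenced = [...referencedNames];
  return {
    // Fully rolled out but still guarded by conditional logic in code.
    fullyRolledOut: referenced.filter(
      (name) => defined.has(name) && defined.get(name).rolloutPercent === 100
    ),
    // Referenced in code but no longer defined in the flagging platform.
    undefinedInPlatform: referenced.filter((name) => !defined.has(name)),
  };
}

const source = `
  if (flags.isEnabled('new-dashboard-enabled')) { renderNew(); } else { renderOld(); }
  const v = flags.getVariation("checkout-button-test-q3", { default: "control" });
`;
const platformFlags = [{ name: "new-dashboard-enabled", rolloutPercent: 100 }];
console.log(crossReference(platformFlags, findFlagReferences(source)));
```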

This data-driven approach allows you to prioritize cleanup efforts on the oldest and riskiest flags. To learn more about systematic code maintenance, explore various approaches on how to reduce technical debt. By making cleanup an active, automated part of your engineering culture, you ensure feature flags remain a tool for agility, not a long-term liability.

Common Questions on Managing Feature Flags

As your team adopts feature flags, practical questions about long-term management, testing strategies, and distributed architectures will arise. Here are technical answers to common challenges.

Handling Long-Lived Feature Flags

Not all flags are temporary. Operational kill switches, permission toggles, and architectural routing flags may be permanent. Managing them requires a different strategy than for short-lived release toggles.

- Explicitly Categorize Them: In your flag registry, mark them as permanent (e.g., permanent: true). This tag should exclude them from automated TTL alerts and cleanup scripts.

- Mandate Periodic Reviews: Schedule mandatory quarterly or semi-annual reviews for all permanent flags. The owning team must re-validate the flag's necessity and document the review's outcome.

- Document Their Impact: For permanent flags, documentation is critical. It must clearly state the flag's purpose, the system components it affects, and the procedure for operating it during an incident.

The Best Way to Test Code Behind a Flag

Code behind a feature flag requires more rigorous testing, not less, to cover all execution paths. A multi-layered testing strategy is essential.

- Unit Tests (Mandatory): Unit tests must cover both the on and off states. Mock the feature flag client to force the code down each conditional path and assert the expected behavior for both scenarios.

- Integration Tests in CI: Your CI pipeline should run integration tests against the default flag configuration for that environment. This validates that the main execution path remains stable.

- End-to-End (E2E) Tests: Use frameworks like Cypress or Selenium to test full user journeys. These tools can dynamically override a flag's state for the test runner's session (e.g., via query parameters, cookies, or local storage injection), allowing you to validate the new feature's full workflow even if it is disabled by default in the test environment.

The cardinal rule is: new code behind a flag must have comprehensive test coverage for all its states. A feature flag is not an excuse to compromise on quality.
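For the unit-test layer, the simplest way to force each path is to inject the flag client so a test can substitute a fake. The sketch below uses a hypothetical `checkoutOptions` function and a hand-rolled fake rather than any specific mocking library or SDK.

```javascript
// Code under test: the flag client is injected so tests can replace it.
function checkoutOptions(flagClient) {
  const options = ["card"];
  if (flagClient.isEnabled("payments-PROJ123-add-apple-pay")) {
    options.push("apple-pay");
  }
  return options;
}

// Minimal stand-in for a real SDK client; returns a fixed answer for any flag.
function fakeFlagClient(enabled) {
  return { isEnabled: () => enabled };
}

// Exercise both conditional paths.
console.log(checkoutOptions(fakeFlagClient(true))); // on path: card and apple-pay
console.log(checkoutOptions(fakeFlagClient(false))); // off path: card only
```

In a real suite, each of these calls would sit in its own test case with an assertion on the returned options, so a regression in either path fails the build.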

Managing Flags Across Microservices

In a distributed system, managing flags with local configuration files is an anti-pattern that leads to state inconsistencies and debugging nightmares. A centralized feature flagging service is not optional; it is a requirement for microservice architectures.

Each microservice should initialize a client SDK on startup that fetches flag configurations from the central service. The SDK should subscribe to a streaming connection (e.g., Server-Sent Events) for real-time updates. This ensures that when a flag's state is changed in the central dashboard, the change propagates to all connected services within seconds. This architecture prevents state drift and ensures consistent behavior across your entire system.

Using a dedicated service decouples feature release from code deployment, provides powerful targeting capabilities, and generates a critical audit trail—all of which are nearly impossible to achieve reliably with distributed config files in Git.

