In today's always-on digital ecosystem, the traditional 'maintenance window' is a relic. Users expect flawless, uninterrupted service, and businesses depend on continuous availability to maintain their competitive edge. The central challenge for any modern engineering team is clear: how do you release new features, patch critical bugs, and update infrastructure without ever flipping the 'off' switch on your application? The cost of even a few minutes of downtime can be substantial, impacting revenue, user trust, and brand reputation.

This article moves beyond high-level theory to provide a technical deep-dive into ten proven zero downtime deployment strategies. We will dissect the mechanics, evaluate the specific pros and cons, and offer actionable implementation details for each distinct approach. You will learn the tactical differences between the gradual rollout of a Canary release and the complete environment swap of a Blue-Green deployment. We will also explore advanced patterns like Shadow Deployment for risk-free performance testing and Feature Flags for granular control over new functionality.

Prepare to equip your team with the practical knowledge needed to select and implement the right strategy for your specific technical and business needs. The goal is to deploy with confidence, eliminate service interruptions, and deliver a superior, seamless user experience with every single release.

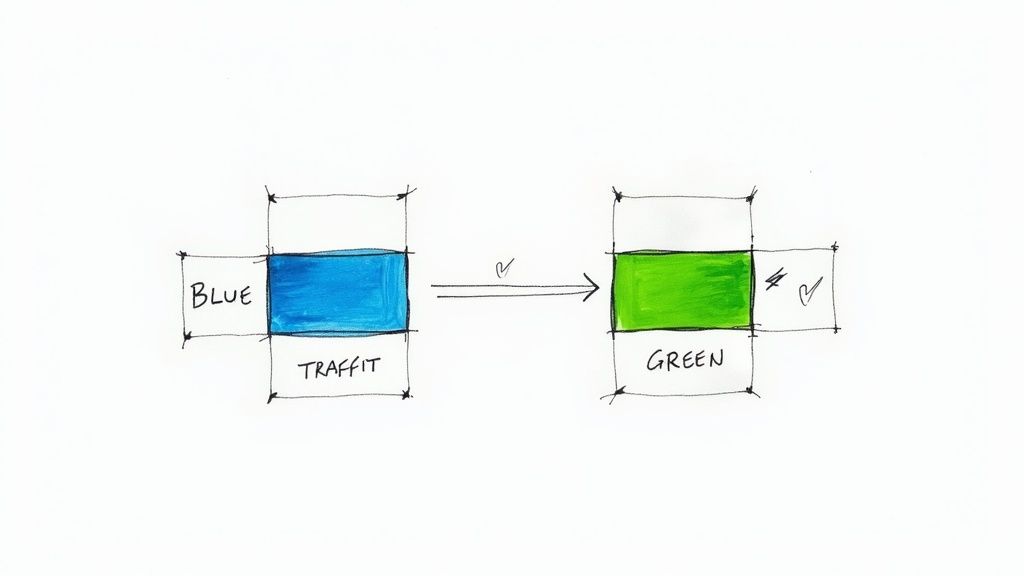

1. Blue-Green Deployment

Blue-Green deployment is a powerful zero downtime deployment strategy that minimizes risk by maintaining two identical production environments, conventionally named "Blue" and "Green." One environment, the Blue one, is live and serves all production traffic. The other, the Green environment, acts as a staging ground for the new version of the application.

The new code is deployed to the idle Green environment, where it undergoes a full suite of automated and manual tests without impacting live users. Once the new version is validated and ready, a simple router or load balancer switch directs all incoming traffic from the Blue environment to the Green one. The Green environment is now live, and the old Blue environment becomes the idle standby.

Why It's a Top Strategy

The key benefit of this approach is the near-instantaneous rollback capability. If any issues arise post-deployment, traffic can be rerouted back to the old Blue environment with the same speed, effectively undoing the deployment. This makes it an excellent choice for critical applications where downtime is unacceptable. Tech giants like Netflix and Amazon rely on this pattern to update their critical services reliably.

Actionable Implementation Tips

- Database Management is Key: Handle database schema changes with care. Use techniques like expand/contract or parallel change to ensure the new application version is backward-compatible with the old database schema and vice-versa. A shared, compatible database is often the simplest approach, but any breaking changes must be managed across multiple deployments.

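- Automate the Switch: Use a load balancer (like AWS ELB, NGINX) or DNS CNAME record updates to manage the traffic switch. The switch should be a single, atomic operation executed via script to prevent manual errors during the critical cutover. Prefer the load balancer where you can: DNS changes only take effect as cached records expire, so a DNS-based switch is gradual rather than atomic and needs a low TTL set well in advance.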

- Run Comprehensive Smoke Tests: Before flipping the switch, run a battery of automated smoke tests against the Green environment's public endpoint to verify core functionality is working as expected. These tests should simulate real user journeys, such as login, add-to-cart, and checkout.

- Handle Sessions Carefully: If your application uses sessions, ensure they are managed in a shared data store (like Redis or a database) so user sessions persist seamlessly after the switch. Avoid in-memory session storage, which would cause all users to be logged out post-deployment.
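The cutover and rollback described in these tips can be sketched in a few lines of Python. This is a minimal in-process model, not a real load balancer integration: `BlueGreenRouter`, the `blue` and `green` handlers, and the `smoke` check are hypothetical names introduced for illustration.

```python
from typing import Callable, Dict, Optional

Handler = Callable[[str], str]


class BlueGreenRouter:
    """Hypothetical router that keeps one pointer to the live environment."""

    def __init__(self, environments: Dict[str, Handler], live: str):
        self.environments = environments
        self.live = live
        self.previous: Optional[str] = None

    def handle(self, request: str) -> str:
        # All traffic goes to whichever environment is currently live.
        return self.environments[self.live](request)

    def switch_to(self, target: str, smoke_tests: Callable[[Handler], bool]) -> bool:
        # Validate the idle environment before it receives any user traffic.
        if not smoke_tests(self.environments[target]):
            return False
        # The cutover is one assignment, so there is no half-switched state.
        self.previous, self.live = self.live, target
        return True

    def rollback(self) -> None:
        # Rolling back is the same pointer flip in reverse.
        if self.previous is not None:
            self.live, self.previous = self.previous, self.live


def blue(request: str) -> str:
    return f"v1:{request}"


def green(request: str) -> str:
    return f"v2:{request}"


def smoke(env: Handler) -> bool:
    # Stand-in for real user journeys such as login or checkout.
    return env("login").endswith("login")


router = BlueGreenRouter({"blue": blue, "green": green}, live="blue")
switched = router.switch_to("green", smoke)
after_switch = router.handle("checkout")
router.rollback()
after_rollback = router.handle("checkout")
```

In a real setup the pointer flip would be a single load balancer or service-selector update, but the shape is the same: test the idle side first, change one reference, and keep the old reference around for rollback.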

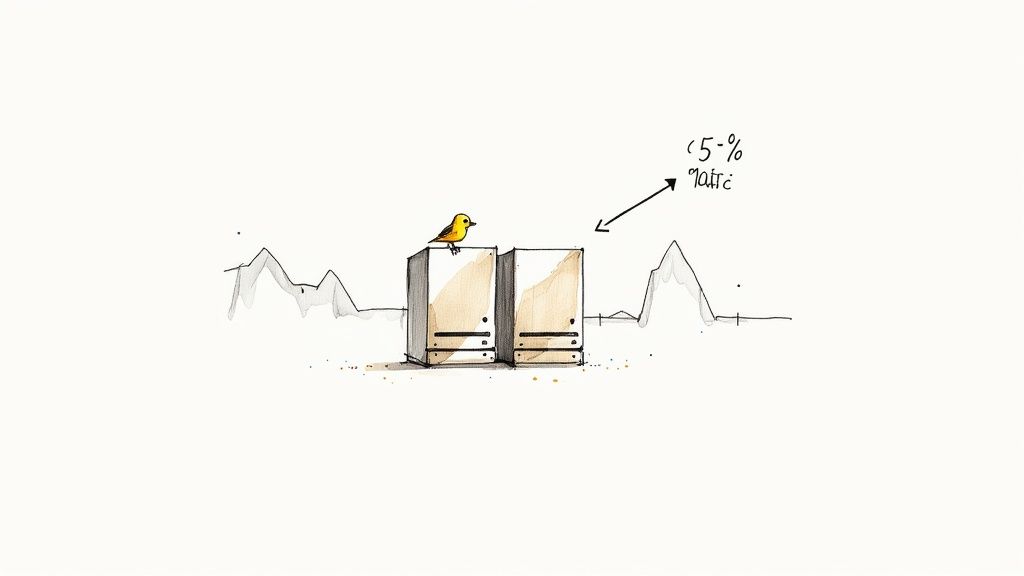

2. Canary Deployment

Canary deployment is a progressive delivery strategy that introduces a new software version to a small subset of production users before a full rollout. This initial group, the "canaries," acts as an early warning system. By closely monitoring performance and error metrics for this group, teams can detect issues and validate the new version with real-world traffic, significantly reducing the risk of a widespread outage.

If the new version performs as expected, traffic is gradually shifted from the old version to the new one in controlled increments. If any critical problems arise, the traffic is immediately routed back to the stable version, impacting only the small canary group. This methodical approach is one of the most effective zero downtime deployment strategies for large-scale, complex systems.

Why It's a Top Strategy

The core advantage of a canary deployment is its ability to test new code with live production data and user behavior while minimizing the blast radius of potential failures. This data-driven validation is far more reliable than testing in staging environments alone. This technique was popularized by tech leaders like Google and Facebook, who use it to deploy updates to their massive user bases with high confidence and minimal disruption.

Actionable Implementation Tips

- Define Clear Success Metrics: Before starting, establish specific thresholds for key performance indicators like error rates, CPU utilization, and latency in your monitoring tool (e.g., Prometheus, Datadog). For example, set a rule to roll back if the canary's p99 latency exceeds the baseline by more than 10%.

- Start Small and Increment Slowly: Begin by routing a small percentage of traffic (e.g., 1-5%) to the canary using a load balancer's weighted routing rules or a service mesh like Istio. Monitor for a stable period (at least 30 minutes) before increasing traffic in measured steps (e.g., to 10%, 25%, 50%, 100%).

- Automate Rollback Procedures: Configure your CI/CD pipeline or monitoring system (e.g., using Prometheus Alertmanager) to trigger an automatic rollback script if the defined metrics breach their thresholds. This removes human delay and contains issues instantly.

- Leverage Feature Flags for Targeting: Combine canary deployments with feature flags to control which users see new features within the canary group. You can target specific user segments, such as internal employees or beta testers, before exposing the general population.
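To make the threshold and ramp-up tips concrete, here is a small Python sketch of a canary gate. It assumes the 10% p99 rule mentioned above and a hypothetical `collect_canary_latencies` callback standing in for a query to your monitoring system; the step percentages are illustrative, not a recommendation.

```python
import random
from typing import Callable, List, Sequence

TRAFFIC_STEPS = [5, 10, 25, 50, 100]  # percent of traffic sent to the canary
MAX_P99_REGRESSION = 0.10             # roll back if canary p99 is >10% above baseline


def p99(latencies_ms: Sequence[float]) -> float:
    """99th percentile using the nearest-rank method."""
    ordered = sorted(latencies_ms)
    rank = max(1, (99 * len(ordered) + 99) // 100)  # ceil(0.99 * n)
    return ordered[rank - 1]


def canary_is_healthy(baseline_ms: Sequence[float], canary_ms: Sequence[float]) -> bool:
    return p99(canary_ms) <= p99(baseline_ms) * (1 + MAX_P99_REGRESSION)


def pick_version(canary_percent: int, rng: random.Random) -> str:
    # Weighted routing: send roughly canary_percent of requests to the canary.
    return "canary" if rng.random() * 100 < canary_percent else "stable"


def run_rollout(
    baseline_ms: Sequence[float],
    collect_canary_latencies: Callable[[int], List[float]],
) -> int:
    """Return the final canary percentage: 100 on success, 0 after a rollback."""
    for percent in TRAFFIC_STEPS:
        canary_ms = collect_canary_latencies(percent)
        if not canary_is_healthy(baseline_ms, canary_ms):
            return 0  # automatic rollback: all traffic returns to stable
    return 100


baseline = [100.0] * 100
good_result = run_rollout(baseline, lambda percent: [105.0] * 100)
bad_result = run_rollout(baseline, lambda percent: [100.0] * 98 + [200.0] * 2)
```

A production gate would also wait for a soak period at each step and check error rates alongside latency; the sketch only shows the decision logic.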

3. Rolling Deployment

Rolling deployment is a classic zero downtime deployment strategy where instances running the old version of an application are incrementally replaced with instances running the new version. Unlike Blue-Green, which switches all traffic at once, this method updates a small subset of servers, or a "window," at a time. Traffic is gradually shifted to the new instances as they come online and pass health checks.

This process continues sequentially until all instances in the production environment are running the new code. This gradual replacement ensures that the application's overall capacity is not significantly diminished during the update, maintaining service availability. Modern orchestration platforms like Kubernetes have adopted rolling deployments as their default strategy due to this inherent safety and simplicity.

Why It's a Top Strategy

The primary advantage of a rolling deployment is its simplicity and resource efficiency. It doesn't require doubling your infrastructure, as you only need enough extra capacity to support the small number of new instances being added in each batch. The slow, controlled rollout minimizes the blast radius of potential issues, as only a fraction of users are exposed to a faulty new version at any given time, allowing for early detection and rollback.

Actionable Implementation Tips

- Implement Readiness Probes: In Kubernetes, define a `readinessProbe` that checks a `/healthz` or similar endpoint. The orchestrator will only route traffic to a new pod after this probe passes, preventing traffic from being sent to an uninitialized instance.

- Use Connection Draining: Configure your load balancer or ingress controller to use connection draining (graceful shutdown). This allows existing requests on an old instance to complete naturally before the instance is terminated, preventing abrupt session terminations for users. For example, in Kubernetes, this is managed by the `terminationGracePeriodSeconds` setting.

- Keep Versions Compatible: During the rollout, both old and new versions will be running simultaneously. Ensure the new code is backward-compatible with any shared resources like database schemas or message queue message formats to avoid data corruption or application errors.

- Control the Rollout Velocity: Configure the deployment parameters to control speed and risk. In Kubernetes, `maxSurge` controls how many new pods can be created above the desired count, and `maxUnavailable` controls how many can be taken down at once. A low `maxSurge` and `maxUnavailable` value results in a slower, safer rollout.
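The interaction between the surge and unavailability limits is easier to see in a simulation. The following Python sketch is a simplified model, not the Kubernetes controller's actual algorithm: it uses absolute pod counts only and assumes every new pod passes its readiness check immediately. The function name `simulate_rolling_update` is made up for this example.

```python
from typing import List, Tuple


def simulate_rolling_update(
    replicas: int, max_surge: int, max_unavailable: int
) -> List[Tuple[int, int]]:
    """Return (old_pods, new_pods) after each step of a simplified rollout."""
    if max_surge == 0 and max_unavailable == 0:
        # With both limits at zero no pod could ever be added or removed.
        raise ValueError("max_surge and max_unavailable cannot both be 0")

    old, new = replicas, 0
    history = [(old, new)]
    while new < replicas or old > 0:
        # Scale up: never run more than replicas + max_surge pods in total.
        can_create = min(replicas - new, replicas + max_surge - (old + new))
        new += can_create  # assumption: new pods become ready instantly
        # Scale down: keep at least replicas - max_unavailable pods serving.
        can_remove = min(old, (old + new) - (replicas - max_unavailable))
        old -= can_remove
        history.append((old, new))
    return history


surge_only = simulate_rolling_update(replicas=4, max_surge=1, max_unavailable=0)
no_surge = simulate_rolling_update(replicas=4, max_surge=0, max_unavailable=1)
```

With one surge pod and no unavailability, capacity never drops below four pods and the rollout replaces one pod per step. With no surge and one unavailable pod, the total never exceeds four but capacity dips to three while each replacement starts.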

4. Feature Flags (Feature Toggle) Deployment

Feature Flags, also known as Feature Toggles, offer a sophisticated zero downtime deployment strategy by decoupling the act of deploying code from the act of releasing a feature. New functionality is wrapped in conditional logic within the codebase. This allows new code to be deployed to production in a "dark" or inactive state, completely invisible to users.

The feature is only activated when its corresponding flag is switched on, typically via a central configuration panel or API. This switch doesn't require a new deployment, giving teams granular control over who sees a new feature and when. The release can be targeted to specific users, regions, or a percentage of the user base, enabling controlled rollouts and A/B testing directly in the production environment.

Why It's a Top Strategy

This strategy is paramount for teams practicing continuous delivery, as it dramatically reduces the risk associated with each deployment. If a new feature causes problems, it can be instantly disabled by turning off its flag, effectively acting as an immediate rollback without redeploying code. Companies like Slack and GitHub use feature flags extensively to test new ideas and safely release complex features to millions of users, minimizing disruption and gathering real-world feedback.

Actionable Implementation Tips

- Establish Strong Conventions: Implement strict naming conventions (e.g., `feature-enable-new-dashboard`) and documentation for every flag, including its purpose, owner, and intended sunset date to avoid technical debt from stale flags.

- Centralize Flag Management: Use a dedicated feature flag management service (like LaunchDarkly, Optimizely, or a self-hosted solution like Unleash) to control flags from a central UI, rather than managing them in config files, which would require a redeploy to change.

- Monitor Performance Impact: Keep a close eye on the performance overhead of flag evaluations. Implement client-side SDKs that cache flag states locally to minimize network latency on every check. To learn more, check out this guide on how to implement feature toggles.

- Create an Audit Trail: Ensure your flagging system logs all changes: who toggled a flag, when, and to what state. This is crucial for debugging production incidents, ensuring security, and maintaining compliance.
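As a rough illustration of how a flag decouples deploy from release, here is a minimal in-memory Python sketch. It is not the API of LaunchDarkly, Unleash, or any other product; the `Flag` class, its fields, and the user IDs are assumptions made for the example. It shows a dark launch, a percentage rollout keyed on a hash of the user ID, a kill switch, and an audit log.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Tuple


@dataclass
class Flag:
    name: str
    enabled: bool = False
    rollout_percent: int = 0  # share of users who see the feature when enabled
    audit_log: List[Tuple[str, str, str]] = field(default_factory=list)

    def set_state(self, enabled: bool, percent: int, changed_by: str) -> None:
        self.enabled = enabled
        self.rollout_percent = percent
        # Record who changed the flag, when, and to what state.
        timestamp = datetime.now(timezone.utc).isoformat()
        self.audit_log.append(
            (timestamp, changed_by, f"enabled={enabled} percent={percent}")
        )

    def is_on_for(self, user_id: str) -> bool:
        if not self.enabled:
            return False
        # Hash flag name + user ID so each user lands in a stable bucket 0..99.
        digest = hashlib.sha256(f"{self.name}:{user_id}".encode()).hexdigest()
        return int(digest, 16) % 100 < self.rollout_percent


flag = Flag("feature-enable-new-dashboard")
dark = flag.is_on_for("user-42")        # code is deployed but not released
flag.set_state(True, 100, changed_by="alice")
released = flag.is_on_for("user-42")    # released without a redeploy
flag.set_state(False, 100, changed_by="alice")
killed = flag.is_on_for("user-42")      # kill switch acts as the rollback
```

Application code would wrap the new path in `if flag.is_on_for(user_id):` and keep the old path in the `else` branch until the flag is retired.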

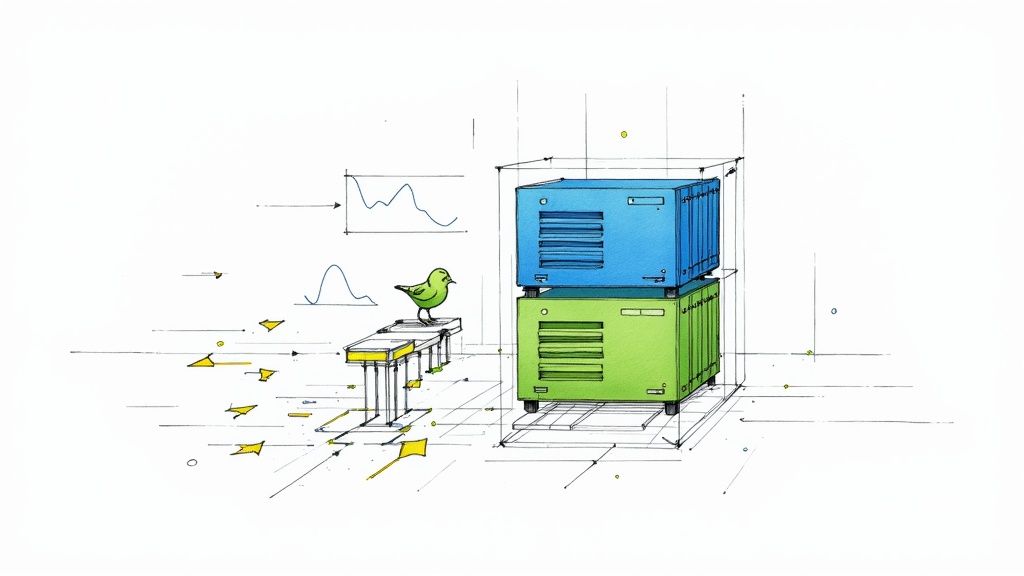

5. Shadow Deployment

Shadow Deployment is a sophisticated zero downtime deployment strategy where a new version of the application runs in parallel with the production version. It processes the same live production traffic, but its responses are not sent back to the user. Instead, the output from the "shadow" version is compared against the "production" version to identify any discrepancies or performance issues.

This technique, also known as traffic mirroring, provides a high-fidelity test of the new code under real-world load and data patterns without any risk to the end-user experience. It’s an excellent way to validate performance, stability, and correctness before committing to a full rollout. Tech giants like GitHub and Uber use shadow deployments to safely test critical API and microservice updates.

Why It's a Top Strategy

The primary advantage of shadow deployment is its ability to test new code with actual production traffic, offering the highest level of confidence before a release. It allows teams to uncover subtle bugs, performance regressions, or data corruption issues that might be missed in staging environments. Because the shadow version is completely isolated from the user-facing response path, it offers a zero-risk method for production validation.

Actionable Implementation Tips

- Implement Request Mirroring: Use a service mesh like Istio or Linkerd to configure traffic mirroring. For example, in Istio, you can define a `VirtualService` with a `mirror` property that specifies the shadow service. Istio forwards a copy of each request to that service with `-shadow` appended to the Host/Authority header and discards the mirrored response.

- Compare Outputs Asynchronously: The comparison between production and shadow responses should happen in a separate, asynchronous process or a dedicated differencing service. This prevents any latency or errors in the shadow service from impacting the real user's response time.

- Mock Outbound Calls: Ensure your shadow service does not perform write operations to shared databases or call external APIs that have side effects (e.g., sending an email, charging a credit card). Use service virtualization or mocking frameworks to intercept and stub these calls.

- Log Discrepancies: Set up robust logging and metrics to capture and analyze any differences between the two versions' outputs, response codes, and latencies. This data is invaluable for debugging and validating the new code's correctness.
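The mirroring and asynchronous comparison described in these tips can be modeled in-process with a thread pool. This Python sketch is only an analogy for what a service mesh does at the network level; `production`, `shadow`, and `handle` are hypothetical functions, and the shadow version contains a deliberate bug so there is something to detect.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple

# Each entry is (request, production_response, shadow_response).
discrepancies: List[Tuple[str, str, str]] = []
_executor = ThreadPoolExecutor(max_workers=2)


def production(request: str) -> str:
    return request.upper()


def shadow(request: str) -> str:
    # New version under test, with a deliberate bug for one input.
    return "EDGE_CASE" if request == "edge-case" else request.upper()


def _compare(request: str, prod_response: str) -> None:
    try:
        shadow_response = shadow(request)
    except Exception as exc:  # a shadow failure must never reach the user
        shadow_response = f"error: {exc!r}"
    if shadow_response != prod_response:
        discrepancies.append((request, prod_response, shadow_response))


def handle(request: str) -> str:
    response = production(request)
    # Fire and forget: the user never waits on the shadow call.
    _executor.submit(_compare, request, response)
    return response


responses = [handle(r) for r in ["login", "edge-case"]]
_executor.shutdown(wait=True)  # only so the example finishes deterministically
```

Users only ever receive the production response; the mismatch for `edge-case` ends up in `discrepancies` for later analysis.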

6. A/B Testing Deployment

A/B Testing Deployment is a data-driven strategy where different versions of an application or feature are served to segments of users concurrently. Unlike canary testing, which gradually rolls out a new version to eventually replace the old one, A/B testing maintains both (or multiple) versions for a specific duration to compare their impact on key business metrics like conversion rates, user engagement, or revenue.

The core mechanism involves a feature flag or a routing layer that inspects user attributes (like a cookie, user ID, or geographic location) and directs them to a specific application version. This allows teams to validate hypotheses and make decisions based on quantitative user behavior rather than just technical stability. Companies like Booking.com famously run thousands of concurrent A/B tests, using this method to optimize every aspect of the user experience.

Why It's a Top Strategy

This strategy directly connects deployment activities to business outcomes. It provides a scientific method for feature validation, allowing teams to prove a new feature's value before committing to a full rollout. It's an indispensable tool for product-led organizations, as it minimizes the risk of launching features that negatively impact user behavior or key performance indicators. This method effectively de-risks product innovation while maintaining a zero downtime deployment posture.

Actionable Implementation Tips

- Define Clear Success Metrics: Before starting the test, establish a primary metric and a clear hypothesis. For example, "Version B's new checkout button will increase the click-through rate from the cart page by 5%."

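- Ensure Statistical Significance: Use a sample size calculator to determine how many users need to see each version to get a reliable result, and fix that number before the test starts. Run the test until the planned sample size is reached, then evaluate significance (e.g., a p-value < 0.05). Stopping the moment the p-value first dips below the threshold inflates the false-positive rate and leads to decisions based on random noise.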

- Implement Sticky Sessions: Ensure a user is consistently served the same version throughout their session and on subsequent visits. This can be achieved using cookies or by hashing the user ID to assign them to a variant, which is crucial for a consistent user experience and accurate data collection.

- Isolate Your Tests: When running multiple A/B tests simultaneously, ensure they are orthogonal (independent) to avoid one test's results polluting another's. For example, don't test a new headline and a new button color in the same user journey unless you are explicitly running a multivariate test.
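Two of these tips lend themselves to a short Python sketch: sticky assignment by hashing the user ID, and a significance check once the planned sample has been collected. The significance check here is a pooled two-proportion z-test, which is one common choice rather than the only valid one; the function names and the conversion numbers are invented for illustration.

```python
import hashlib
import math


def assign_variant(user_id: str, experiment: str) -> str:
    """Deterministically map a user to 'A' or 'B' for a given experiment."""
    # Including the experiment name keeps assignments independent across tests.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return "B" if int(digest, 16) % 2 else "A"


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (p_b - p_a) / se
    # For a standard normal, the two-sided tail probability is erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


variant_first_visit = assign_variant("user-42", "checkout-button")
variant_next_visit = assign_variant("user-42", "checkout-button")

# Hypothetical results: 10% vs 12% conversion with 10,000 users per variant.
p_value = two_proportion_p_value(1000, 10_000, 1200, 10_000)
no_difference = two_proportion_p_value(1000, 10_000, 1000, 10_000)
```

Because the assignment depends only on the user ID and experiment name, no cookie or lookup table is needed to keep a user on the same variant across sessions.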

7. Red-Black Deployment

Red-Black deployment is a sophisticated variant of the Blue-Green strategy, often favored in complex, enterprise-level environments. It also uses two identical production environments, but instead of "Blue" and "Green," they are designated "Red" (live) and "Black" (new). The new application version is deployed to the Black environment for rigorous testing and validation.

Once the Black environment is confirmed to be stable, traffic is switched over. Here lies the key difference: the Black environment is formally promoted to become the new Red environment. The old Red environment is then decommissioned or repurposed. This "promotion" model is especially effective for managing intricate deployments with many interdependent services and maintaining clear audit trails, making it one of the more robust zero downtime deployment strategies.

Why It's a Top Strategy

This strategy excels in regulated industries like finance and healthcare, where a clear, auditable promotion process is mandatory. By formally redesignating the new environment as "Red," it simplifies configuration management and state tracking over the long term. Companies like Atlassian leverage this pattern for their complex product suites, ensuring stability and traceability with each update.

Actionable Implementation Tips

- Implement Automated Health Verification: Before promoting the Black environment, run automated health checks that validate not just the application's status but also its critical downstream dependencies using synthetic monitoring or end-to-end tests.

- Use Database Replication: For stateful applications, use database read replicas to allow the Black environment to warm its caches and fully test against live data patterns without performing write operations on the production database.

- Create Detailed Rollback Procedures: While the old Red environment exists, have an automated and tested procedure to revert traffic. Once it's decommissioned, rollback means redeploying the previous version, so ensure your artifact repository (e.g., Artifactory, Docker Hub) is versioned and reliable.

- Monitor Both Environments During Transition: Use comprehensive monitoring dashboards that display metrics from both Red and Black environments side-by-side during the switchover, looking for anomalies in performance, error rates, or resource utilization.

8. Recreate Deployment with Load Balancer

The Recreate Deployment strategy, also known as "drain and update," is a practical approach that leverages a load balancer to achieve zero user-perceived downtime. In this model, individual instances of an application are systematically taken out of the active traffic pool, updated, and then reintroduced. The load balancer is the key component, intelligently redirecting traffic away from the node undergoing maintenance. Note that this is different from the Kubernetes `Recreate` strategy type, which terminates all existing pods before starting new ones and therefore does cause downtime.

While the specific instance is temporarily offline for the update, the remaining active instances handle the full user load, ensuring the service remains available. This method is often used in environments where creating entirely new, parallel infrastructure (like in Blue-Green) is not feasible, such as with legacy systems or on-premise data centers. It offers a balance between resource efficiency and deployment safety.

Why It's a Top Strategy

This strategy is highly effective for its simplicity and minimal resource overhead. Unlike Blue-Green, it doesn't require doubling your infrastructure. It's a controlled, instance-by-instance update process that minimizes the blast radius of potential issues. If an updated instance fails health checks upon restart, it is simply not added back to the load balancer pool, preventing a faulty update from impacting users. This makes it a reliable choice for stateful applications or systems with resource constraints.

Actionable Implementation Tips

- Utilize Connection Draining: Before removing an instance from the load balancer, enable connection draining. This allows existing connections to complete gracefully while preventing new ones, ensuring no user requests are abruptly dropped. In AWS, this is the deregistration delay setting on the Target Group.

- Automate Health Checks: Implement comprehensive, automated health checks (e.g., an HTTP endpoint returning a 200 status code) that the load balancer uses to verify an instance is fully operational before it's allowed to receive production traffic again.

- Maintain Sufficient Capacity: Ensure your cluster maintains `N+1` or `N+2` redundancy, where N is the minimum number of instances required to handle peak traffic. This prevents performance degradation for your users while one or more instances are being updated.

- Update Sequentially: Update one instance at a time or in small, manageable batches. This sequential process limits risk and makes it easier to pinpoint the source of any new problems. For a deeper dive, learn more about load balancing configuration on opsmoon.com.
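The remove, update, health-check, restore loop from these tips can be sketched as follows. This is a simplified Python model with a list standing in for the load balancer pool; `drain_and_update`, the instance names, and the callbacks are hypothetical, and a real script would wait for connections to drain before updating.

```python
from typing import Callable, Dict, List, Optional, Tuple


def drain_and_update(
    pool: List[str],
    update: Callable[[str], None],
    is_healthy: Callable[[str], bool],
    min_in_service: int,
) -> Tuple[List[str], Optional[str]]:
    """Update one instance at a time; stop at the first failed health check.

    Returns (updated_instances, failed_instance_or_None).
    """
    updated: List[str] = []
    for instance in list(pool):
        if len(pool) - 1 < min_in_service:
            raise RuntimeError("not enough capacity to take an instance out")
        pool.remove(instance)      # stop routing new requests to this instance
        update(instance)           # connection draining would finish before this
        if not is_healthy(instance):
            return updated, instance  # leave it out of the pool and halt
        pool.append(instance)      # only healthy instances rejoin the pool
        updated.append(instance)
    return updated, None


versions: Dict[str, str] = {"web-1": "v1", "web-2": "v1", "web-3": "v1"}
pool = ["web-1", "web-2", "web-3"]  # N = 2 needed at peak, so this is N+1

updated, failed = drain_and_update(
    pool,
    update=lambda name: versions.__setitem__(name, "v2"),
    is_healthy=lambda name: name != "web-2",  # simulate one bad update
    min_in_service=2,
)
```

In this run `web-1` is updated and restored, `web-2` fails its health check and stays out of the pool, and the rollout halts with `web-3` still serving the old version, so two instances keep handling traffic throughout.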

9. Strangler Pattern Deployment

The Strangler Pattern is a specialized zero downtime deployment strategy designed for incrementally migrating a legacy monolithic application to a microservices architecture. Coined by Martin Fowler, the approach involves creating a facade that intercepts incoming requests. This "strangler" facade routes traffic to either the existing monolith or a new microservice that has replaced a piece of the monolith's functionality.

Over time, more and more functionality is "strangled" out of the monolith and replaced by new, independent services. This gradual process continues until the original monolith has been fully decomposed and can be safely decommissioned. This method avoids the high risk of a "big bang" rewrite by allowing for a phased, controlled transition, ensuring the application remains fully operational throughout the migration.

Why It's a Top Strategy

This pattern is invaluable for modernizing large, complex systems without introducing significant downtime or risk. It allows teams to deliver new features and value in the new architecture while the old one still runs. Companies like Etsy and Spotify have famously used this pattern to decompose their monolithic backends into more scalable and maintainable microservices, providing a proven path for large-scale architectural evolution.

Actionable Implementation Tips

- Identify Clear Service Boundaries: Before writing any code, carefully analyze the monolith to identify logical, loosely coupled domains that can be extracted as the first microservices. Use domain-driven design (DDD) principles to define these boundaries.

- Start with Low-Risk Functionality: Begin by strangling a less critical or read-only part of the application, such as a user profile page or a product catalog API. This minimizes the potential impact if issues arise and allows the team to learn the process.

- Implement a Robust Facade: Use an API Gateway (like Kong or AWS API Gateway) or a reverse proxy (like NGINX) as the strangler facade. Configure its routing rules to direct specific URL paths or API endpoints to the new microservice.

- Maintain Data Consistency: Develop a clear strategy for data synchronization. Initially, the new service might read from a replica of the monolith's database. For write operations, techniques like the Outbox Pattern or Change Data Capture (CDC) can be used to ensure data consistency between the old and new systems.
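The facade at the heart of this pattern is essentially a routing table that grows over time. Here is a minimal Python sketch of that idea, assuming path-prefix routing; `StranglerFacade`, `monolith`, and `profile_service` are hypothetical names, and in practice this logic would live in the API gateway or reverse proxy configuration rather than in application code.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


def monolith(path: str) -> str:
    return f"monolith handled {path}"


def profile_service(path: str) -> str:
    return f"profile-service handled {path}"


class StranglerFacade:
    """Routes migrated path prefixes to new services, everything else to legacy."""

    def __init__(self, legacy: Handler):
        self.legacy = legacy
        self.routes: Dict[str, Handler] = {}

    def migrate(self, prefix: str, service: Handler) -> None:
        # Each call "strangles" one more slice of the monolith.
        self.routes[prefix] = service

    def handle(self, path: str) -> str:
        # The longest matching prefix wins, so specific routes beat general ones.
        for prefix in sorted(self.routes, key=len, reverse=True):
            if path.startswith(prefix):
                return self.routes[prefix](path)
        return self.legacy(path)


facade = StranglerFacade(legacy=monolith)
before = facade.handle("/users/42/profile")
facade.migrate("/users/", profile_service)
after = facade.handle("/users/42/profile")
untouched = facade.handle("/orders/7")
```

Clients keep calling the same URLs throughout; only the routing table changes, and removing a route sends that traffic back to the monolith if the new service misbehaves.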

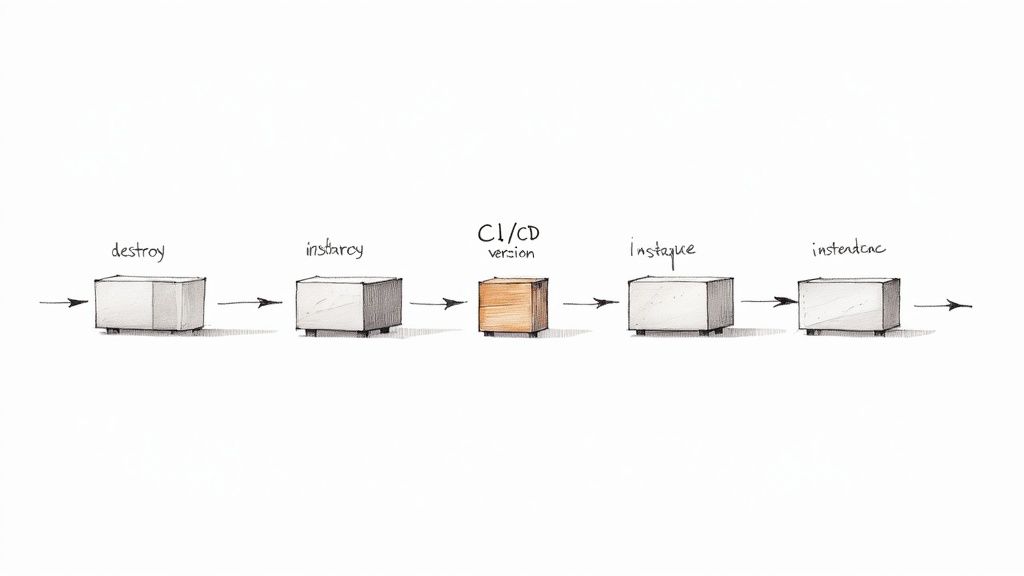

10. Immutable Infrastructure Deployment

Immutable Infrastructure Deployment is a transformative approach where servers or containers are never modified after they are deployed. Instead of patching, updating, or configuring existing instances, a completely new set of instances is created from a common image with the updated application code or configuration. Once the new infrastructure is verified, it replaces the old, which is then decommissioned.

This paradigm treats infrastructure components as disposable assets. If a change is needed, you replace the asset entirely rather than altering it. This eliminates configuration drift, where manual changes lead to inconsistencies between environments, making deployments highly predictable and reliable. This approach is fundamental to modern cloud-native systems and is used extensively by companies like Google and Netflix.

Why It's a Top Strategy

The primary advantage of immutability is the extreme consistency it provides across all environments, from testing to production. It drastically simplifies rollbacks, as reverting a change is as simple as deploying the previous, known-good image. This strategy significantly reduces deployment failures caused by environment-specific misconfigurations, making it one of the most robust zero downtime deployment strategies available.

Actionable Implementation Tips

- Embrace Infrastructure-as-Code (IaC): Use tools like Terraform or AWS CloudFormation to define and version your entire infrastructure in Git. This is the cornerstone of immutability, allowing you to programmatically create and destroy environments. For more insights, explore the benefits of infrastructure as code.

- Use Containerization: Package your application and its dependencies into container images (e.g., Docker). Containers are inherently immutable and provide a consistent artifact that can be promoted through environments without modification.

- Automate Image Baking: Integrate the creation of machine images (AMIs) or container images directly into your CI/CD pipeline using tools like Packer or Docker build. Each code commit should trigger the build of a new, versioned image artifact.

- Leverage Orchestration: Use a container orchestrator like Kubernetes or Amazon ECS to manage the lifecycle of your immutable instances. Configure the platform to perform a rolling update, which automatically replaces old containers with new ones, achieving a zero downtime transition.

Zero-Downtime Deployment: 10-Strategy Comparison

| Strategy | Implementation complexity | Resource requirements | Expected outcome / risk | Ideal use cases | Key advantages |
|---|---|---|---|---|---|
| Blue-Green Deployment | Low–Medium: simple concept but needs environment orchestration | High: full duplicate production environments | Zero-downtime cutover, instant rollback; DB migrations require care | Apps needing instant rollback and full isolation | Instant rollback, full-system testing, simple traffic switch |
| Canary Deployment | High: requires traffic control and observability | Medium: small extra capacity for initial canary | Progressive rollout, reduced blast radius; slower full rollout | Production systems requiring risk mitigation and validation | Real-world validation, minimizes impact, gradual increase |
| Rolling Deployment | Medium: orchestration and health checks per batch | Low–Medium: no duplicate environments | Gradual replacement with version coexistence; longer deployments | Long-running services where cost efficiency matters | Lower infra cost than blue-green, gradual safe updates |
| Feature Flags (Feature Toggle) | Medium: code-level changes and flag management | Low: no duplicate infra but needs flag system | Decouples deploy & release, instant feature toggle rollback; complexity accrues | Continuous deployment, A/B testing, targeted rollouts | Fast rollback, targeted releases, supports experiments |
| Shadow Deployment | High: complex request mirroring and comparison logic | High: duplicate processing of real traffic | Full production validation with zero user impact; costly and side-effect risks | Mission-critical systems needing production validation | Real-world testing without affecting users, performance benchmarking |
| A/B Testing Deployment | Medium–High: traffic split and statistical analysis | Medium–High: needs sizable traffic and variant support | Simultaneous variants to measure business metrics; longer analysis | Product teams optimizing UX and business metrics | Data-driven decisions, direct measurement of user impact |
| Red-Black Deployment | Low–Medium: similar to blue-green with role swap | High: duplicate environments required | Instant switchover and rollback; DB sync challenges | Complex systems with strict uptime and predictable state needs | Clear active/inactive state, predictable fallback |
| Recreate Deployment with Load Balancer | Low: simple remove-update-restore flow | Low–Medium: no duplicate envs, needs capacity on remaining instances | Brief instance-level downtime mitigated by LB routing; not true full zero-downtime if many updated | Legacy apps and on-premise systems behind load balancers | Simple to implement, works with traditional applications |
| Strangler Pattern Deployment | High: complex routing and incremental extraction | Medium: parallel operation during migration | Gradual monolith decomposition, reduced migration risk but long timeline | Organizations migrating from monoliths to microservices | Incremental, low-risk migration path, testable in production |
| Immutable Infrastructure Deployment | Medium–High: requires automation, image pipelines and IaC | Medium–High: create new instances per deploy, image storage | Consistent, reproducible deployments; higher cost and build overhead | Cloud-native/containerized apps with mature DevOps | Eliminates configuration drift, easy rollback, reliable consistency |

Choosing Your Path to Continuous Availability

Navigating the landscape of modern software delivery reveals a powerful truth: application downtime is no longer an unavoidable cost of innovation. It is a technical problem with a diverse set of solutions. As we've explored, the journey toward continuous availability isn't about finding a single, magical "best" strategy. Instead, it's about building a strategic toolkit and developing the wisdom to select the right tool for each specific deployment scenario. The choice between these zero downtime deployment strategies fundamentally hinges on your risk tolerance, architectural complexity, and user impact.

A simple, stateless microservice might be perfectly served by the efficiency of a Rolling deployment, offering a straightforward path to incremental updates with minimal overhead. In contrast, a mission-critical, customer-facing system like an e-commerce checkout or a financial transaction processor demands the heightened safety and immediate rollback capabilities inherent in a Blue-Green or Canary deployment. Here, the ability to validate new code with a subset of live traffic or maintain a fully functional standby environment provides an indispensable safety net against catastrophic failure.

Synthesizing Strategy with Technology

Mastering these techniques requires more than just understanding the concepts; it demands a deep integration of automation, observability, and infrastructure management.

- Automation is the Engine: Manually executing a Blue-Green switch or a phased Canary rollout is not only slow but also dangerously error-prone. Robust CI/CD pipelines, powered by tools like Jenkins, GitLab CI, or GitHub Actions, are essential for orchestrating these complex workflows with precision and repeatability.

- Observability is the Compass: Deploying without comprehensive monitoring is like navigating blind. Your team needs real-time insight into application performance metrics (latency, error rates, throughput) and system health (CPU, memory, network I/O) to validate a deployment's success or trigger an automatic rollback at the first sign of trouble.

- Infrastructure is the Foundation: Strategies like Immutable Infrastructure and Shadow Deployment rely on the ability to provision and manage infrastructure as code. Tools like Terraform and CloudFormation, combined with containerization platforms like Docker and Kubernetes, make it possible to create consistent, disposable environments that underpin the reliability of your chosen deployment model.

Ultimately, the goal is to transform deployments from high-anxiety events into routine, low-risk operations. A critical, often overlooked component in this ecosystem is the data layer. Deploying a new application version is futile if it corrupts or cannot access its underlying database. For applications demanding absolute consistency, understanding concepts like real-time database synchronization is paramount to ensure data integrity is maintained seamlessly across deployment boundaries, preventing data-related outages.

By weaving these zero downtime deployment strategies into the fabric of your engineering culture, you empower your team to ship features faster, respond to market changes with agility, and build a reputation for unwavering reliability that becomes a true competitive advantage.

Ready to eliminate downtime but need the expert talent to build your resilient infrastructure? OpsMoon connects you with a global network of elite, pre-vetted DevOps and Platform Engineers who specialize in designing and implementing sophisticated CI/CD pipelines. Find the perfect freelance expert to accelerate your journey to continuous availability at OpsMoon.
