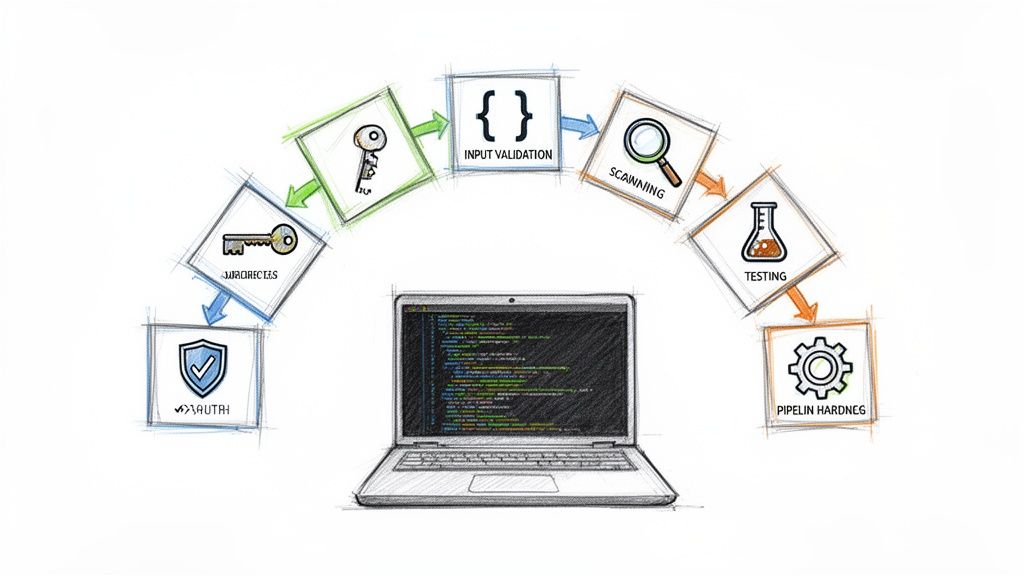

In modern, high-velocity DevOps environments, security cannot be an afterthought or a final compliance gate. It must be a continuous, integrated discipline woven directly into the Software Development Lifecycle (SDLC). Moving past generic checklists, this guide provides a deep, technical exploration of the most critical secure coding best practices that engineering leaders must embed into their development and operational workflows.

We will dissect ten foundational pillars of application and infrastructure security, offering actionable code snippets, specific tool recommendations, and concrete implementation strategies tailored for today's engineering challenges. This is not a theoretical overview; it's a practical framework for building resilient systems. Our focus remains on the "how" rather than just the "what," providing detailed guidance that developers can immediately apply. For examples of how these principles translate into a popular framework, see detailed guides on Laravel security best practices, which reflect many of the concepts discussed here.

From hardening your CI/CD pipeline against common injection attacks to implementing zero-trust principles within Kubernetes clusters, this roundup is designed to equip your team with the technical knowledge needed to build secure and compliant systems at scale. The goal is to move beyond merely reacting to vulnerabilities and toward architecting a proactive security posture. By integrating these secure coding best practices from the start, you can ensure that robust security accelerates, rather than hinders, your team's development velocity and innovation. This article will show you how to build security into your code, your tools, and your culture.

1. Input Validation and Sanitization

Input validation is a foundational secure coding best practice that serves as the first line of defense against a wide array of attacks. It involves rigorously checking all incoming data to ensure it conforms to expected formats, types, and values before it is processed by the application. This principle extends beyond user-facing forms; it applies to API endpoints, configuration files, environment variables, and even parameters passed between microservices. By rejecting malformed or malicious data at the earliest entry point, you effectively neutralize threats like SQL injection, Cross-Site Scripting (XSS), and command injection before they can reach vulnerable code.

Sanitization is the complementary process of cleaning or modifying input to make it safe. While validation rejects bad data outright, sanitization attempts to convert it into a valid format. For example, it might involve stripping HTML tags to prevent XSS or escaping special characters before they are passed to a database query, although parameterized queries remain the more reliable defense against SQL injection. Together, validation and sanitization create a robust barrier against injection attacks.

Why It's a Top Priority

Untrusted input is the root cause of many of the most critical vulnerabilities listed in the OWASP Top 10. Failing to validate data from users, APIs, or even internal systems creates opportunities for attackers to manipulate application behavior. In automated DevOps environments, this risk is amplified. A CI/CD pipeline that accepts unvalidated parameters could be tricked into executing arbitrary commands, leaking secrets, or deploying malicious infrastructure-as-code templates.

Key Insight: Treat all incoming data as untrusted, regardless of its source. This "zero-trust" approach to data is a cornerstone of a defense-in-depth security strategy and one of the most effective secure coding best practices.

Implementation and Actionable Tips

- Server-Side First: While client-side validation provides a good user experience by giving immediate feedback, it can be easily bypassed. Always perform authoritative validation on the server side. For example, in a Java Spring application, use `@Valid` annotations in your controller and define constraints on your DTOs.

- Use Allow-Lists (Whitelisting): Instead of trying to block a list of known "bad" inputs (blacklisting), define a strict set of rules for what is "good" and reject everything else. For example, a username field might only allow alphanumeric characters and underscores within a specific length, enforced with a regular expression like `^[a-zA-Z0-9_]{4,16}$`.

- Leverage Frameworks and Libraries: Don't write validation logic from scratch. Use battle-tested libraries built into your framework, like Spring Validation for Java, or integrate third-party tools like the OWASP Java Encoder Project for contextual output encoding.

- Validate CI/CD Inputs: In your pipelines (e.g., GitHub Actions, GitLab CI), explicitly validate all inputs and secrets used in workflow files. This prevents pipeline injection, where an attacker could execute malicious commands by manipulating a pull request title or commit message that is used as a script parameter.
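The allow-list approach above can be sketched in a few lines of Python. This is a minimal illustration rather than a complete validation layer: the `validate_signup` function and its payload shape are hypothetical, and only the username pattern comes from the text.

```python
import re

# Allow-list pattern from the text: 4 to 16 letters, digits, or underscores.
USERNAME_PATTERN = re.compile(r"[a-zA-Z0-9_]{4,16}")


def is_valid_username(value: object) -> bool:
    """Return True only if value is a string that fully matches the allow-list."""
    if not isinstance(value, str):
        return False
    # fullmatch anchors both ends, so a trailing newline cannot slip through
    # the way it can when "$" is used with re.match.
    return USERNAME_PATTERN.fullmatch(value) is not None


def validate_signup(payload: dict) -> list[str]:
    """Collect validation errors for a hypothetical signup payload."""
    errors = []
    if not is_valid_username(payload.get("username")):
        errors.append("username must be 4-16 characters: letters, digits, underscore")
    return errors
```

The same check could guard a CI/CD parameter before it is interpolated into a script: reject anything outside the allow-list instead of trying to strip dangerous characters.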

2. Secure Secrets Management

Secure secrets management is the practice of securely storing, rotating, and controlling access to sensitive credentials like API keys, database passwords, and private certificates. Instead of hardcoding secrets in source code, configuration files, or environment variables, this approach utilizes dedicated, centralized "vaults." These vaults, such as HashiCorp Vault or AWS Secrets Manager, provide encryption at rest and in transit, fine-grained access control, and comprehensive audit trails for all secret interactions. This is a non-negotiable secure coding best practice in modern DevOps, where automated systems require programmatic, yet secure, access to sensitive resources.

This methodology decouples secrets from the application lifecycle, allowing them to be managed independently by security teams. Applications authenticate to the vault using a trusted identity (like an IAM role or Kubernetes service account) and retrieve secrets dynamically at runtime. This eliminates the risk of secrets being accidentally exposed in version control, container images, or log files, which are common and devastating security failures.

Why It's a Top Priority

Hardcoded secrets are a primary target for attackers. A single leaked credential in a public GitHub repository can grant an adversary immediate access to production databases, cloud infrastructure, or third-party services. In an automated CI/CD environment, this risk is magnified. A pipeline script with embedded credentials can become a gateway for an attacker to compromise the entire software delivery process, inject malicious code, or exfiltrate sensitive data. Centralized secrets management contains this blast radius by ensuring credentials are never directly exposed in code or build artifacts.

Key Insight: Treat secrets as dynamic, ephemeral data, not static configuration. Applications should fetch secrets on-demand from a trusted source, rather than storing them locally, which dramatically reduces their exposure window.

Implementation and Actionable Tips

- Never Commit Secrets to Version Control: This is the cardinal rule. Use pre-commit hooks (like `git-secrets` or `truffleHog`) to automatically scan for credentials before they are committed.

- Embrace Dynamic and Short-Lived Secrets: Instead of static, long-lived passwords, use a vault to generate dynamic, just-in-time credentials that expire automatically after use. For example, HashiCorp Vault can create a unique database user for each application instance with a short time-to-live (TTL).

- Use Cloud-Native IAM Roles: In cloud environments like AWS, GCP, or Azure, use Identity and Access Management (IAM) roles or managed identities to grant applications permission to access secrets. This eliminates the need to manage long-lived API keys for the application itself.

- Enforce Strict Separation and Rotation: Isolate secrets by environment (dev, staging, production) and implement automated rotation policies. Mandating a 90-day rotation for all database credentials, for instance, limits the value of any single compromised secret.

- Audit Everything: Leverage the detailed audit logs provided by secrets management tools. Regularly monitor who or what is accessing secrets, from where, and when. Set up alerts for anomalous access patterns, such as a secret being accessed from an unexpected IP range.
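To make the pre-commit scanning idea concrete, here is a toy scanner in Python. It is a simplified sketch of the kind of pattern matching that dedicated tools perform, not a replacement for them: the three patterns and the `find_secrets` helper are illustrative assumptions, and real scanners ship far larger rule sets plus entropy checks.

```python
import re

# Illustrative patterns only (assumed for this sketch).
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "hardcoded_password": re.compile(r"(?i)\bpassword\s*=\s*['\"][^'\"]{4,}['\"]"),
}


def find_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line_number, pattern_name) for every suspicious line in text."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, name))
    return findings
```

A hook built on this would read the staged diff, call `find_secrets`, and exit with a non-zero status if the result is non-empty, which blocks the commit.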

3. Principle of Least Privilege (PoLP)

The Principle of Least Privilege (PoLP) is a foundational security concept dictating that any user, program, or process should have only the minimum permissions necessary to perform its intended function. This secure coding best practice acts as a crucial containment mechanism; it drastically reduces the potential damage from a security breach. If an attacker compromises an application or a user account, they are confined to the minimal permissions granted to that entity, preventing lateral movement and privilege escalation across the system.

In a modern DevOps context, PoLP applies to everything from developer access to source code repositories to the permissions granted to a microservice's runtime environment. It means a CI/CD pipeline stage responsible for building a Docker image should not have permissions to deploy to production, and a container running a web server should not have root access to its host node.

Why It's a Top Priority

Over-privileged accounts and services are a primary target for attackers. A single compromised component with excessive permissions can unravel an entire system's security posture. In distributed, cloud-native environments, the "blast radius" of such a compromise is significantly larger. An exploited EC2 instance with broad IAM permissions could lead to data exfiltration from S3, unauthorized database modifications in RDS, or even a full infrastructure takeover. Enforcing least privilege is a core tenet of Zero Trust architecture, which assumes breaches will happen and focuses on containing them.

Key Insight: Treat permissions as a finite, critical resource. Grant them explicitly and sparingly based on a "deny by default" model, rather than starting with broad access and revoking it. Every unnecessary permission is a potential attack vector.

Implementation and Actionable Tips

- Scope CI/CD Service Accounts: Configure your CI/CD service accounts (e.g., in GitLab, Jenkins, or GitHub Actions) with tightly scoped, short-lived credentials for each specific stage. For example, a "build" stage's token should expire quickly and lack deployment permissions.

- Use Non-Root Containers: Never run your containers as the `root` user. Define a dedicated, unprivileged user in your Dockerfile (`RUN adduser --disabled-password --gecos "" myuser` and `USER myuser`) and use security contexts in Kubernetes to drop unnecessary Linux capabilities (e.g., `NET_RAW`, `SYS_ADMIN`).

- Implement Just-in-Time (JIT) Access: For high-privilege operations like production database access or infrastructure changes, use JIT systems that grant temporary, audited, and approval-based access instead of maintaining standing permissions.

- Apply Granular IAM and RBAC: Use cloud-native identity and access management (IAM) and role-based access control (RBAC) to define fine-grained policies. Restrict a service to `s3:GetObject` on a specific bucket prefix or limit a Kubernetes pod's API access to only `get` and `list` secrets within its own namespace.
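As a rough illustration of granular IAM, the Python sketch below builds a policy document scoped to `s3:GetObject` on one bucket prefix and flags statements that use wildcards. The helper names, bucket, and prefix are hypothetical; the policy layout follows the standard IAM JSON structure, and the wildcard check is a deliberately simple heuristic.

```python
def build_read_only_policy(bucket: str, prefix: str) -> dict:
    """Build an IAM policy allowing only s3:GetObject under one bucket prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/{prefix}/*"],
            }
        ],
    }


def _as_list(value) -> list:
    # IAM accepts a bare string in place of a one-element list.
    return [value] if isinstance(value, str) else list(value)


def find_overbroad_statements(policy: dict) -> list[str]:
    """Flag Allow statements that grant wildcard actions or all resources."""
    problems = []
    for index, statement in enumerate(policy.get("Statement", [])):
        if statement.get("Effect") != "Allow":
            continue
        for action in _as_list(statement.get("Action", [])):
            if action == "*" or action.endswith(":*"):
                problems.append(f"statement {index}: wildcard action {action!r}")
        if "*" in _as_list(statement.get("Resource", [])):
            problems.append(f"statement {index}: applies to all resources")
    return problems
```

Running a check like `find_overbroad_statements` in code review or CI is one way to keep a "deny by default" stance from eroding over time.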

4. Secure Dependencies Management

Modern software is rarely built from scratch; it's assembled using a vast ecosystem of open-source libraries, frameworks, and third-party components. Secure dependencies management is the critical practice of tracking, monitoring, and maintaining these external components to protect against supply chain vulnerabilities. It involves a systematic approach to identify all dependencies in your codebase, container images, and infrastructure-as-code, scan them for known security issues (CVEs), and apply updates or patches in a timely manner. This discipline is essential for mitigating the risk of being compromised through a vulnerability in a component you didn't write but implicitly trust.

From a single vulnerable npm package in a web application to an outdated OS library in a Docker base image, compromised dependencies provide a direct and often easy entry point for attackers. By integrating automated scanning and management into the software development lifecycle, teams can proactively address these risks before they are exploited. This practice is a cornerstone of modern application security and a key component of secure coding best practices.

Why It's a Top Priority

Software supply chain attacks have become a primary vector for widespread breaches. An attacker who compromises a popular open-source library can inject malicious code that gets distributed to thousands of downstream applications, as happened with the event-stream npm package in 2018. Unintentional flaws in ubiquitous libraries have a similar reach, as the Log4Shell vulnerability in Log4j showed. In a DevOps context, insecure dependencies in CI/CD plugins or infrastructure-as-code modules can lead to a full compromise of the build and deployment environment. Failing to manage dependencies is equivalent to leaving a known, documented backdoor open in your application.

Key Insight: Your application's security is only as strong as its weakest dependency. Maintaining a complete and up-to-date inventory, often via a Software Bill of Materials (SBOM), is no longer optional; it's a fundamental requirement for secure software delivery.

Implementation and Actionable Tips

- Automate Scanning in CI/CD: Integrate Software Composition Analysis (SCA) tools directly into your CI/CD pipeline. Tools like GitHub Dependabot, Snyk, or Trivy can scan code repositories and container images on every commit or build, failing the pipeline if critical vulnerabilities are found.

- Maintain a Software Bill of Materials (SBOM): Generate and maintain an SBOM for every application using formats like CycloneDX or SPDX. This detailed inventory lists all components and their versions, providing the visibility needed to quickly respond when a new vulnerability is disclosed.

- Use Dependency Pinning: Pin dependency versions in your project files (e.g., `package-lock.json`, a `requirements.txt` with exact versions, or `go.mod` together with `go.sum`). This ensures reproducible builds and prevents a dependency from being automatically updated to a new, potentially vulnerable or breaking version without explicit review.

- Establish a Patching Policy: Define clear Service Level Agreements (SLAs) for remediating vulnerabilities based on severity. For example, mandate that critical vulnerabilities must be patched within 72 hours, while high-severity ones must be addressed within 14 days. Automate ticket creation to track this process.
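Dependency pinning is easy to verify automatically. The sketch below, with a hypothetical `find_unpinned` helper, scans the text of a `requirements.txt` and reports entries that are not pinned to an exact version. It assumes simple one-requirement-per-line files and does not handle URL or editable installs.

```python
def find_unpinned(requirements_text: str) -> list[str]:
    """Return requirement entries that are not pinned with an exact '==' version."""
    unpinned = []
    for raw_line in requirements_text.splitlines():
        # Strip inline comments and surrounding whitespace.
        line = raw_line.split("#", 1)[0].strip()
        # Skip blanks and pip options such as "-r other.txt" or "--hash=...".
        if not line or line.startswith("-"):
            continue
        # Drop environment markers like '; python_version < "3.11"'.
        requirement = line.split(";", 1)[0].strip()
        # "==4.2.*" is a prefix match, not an exact pin, so flag it too.
        if "==" not in requirement or requirement.endswith("*"):
            unpinned.append(requirement)
    return unpinned
```

A CI step could fail the build whenever `find_unpinned` returns a non-empty list, forcing version changes to go through explicit review.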

5. Secure Code Review and Testing

Secure code review and testing is the practice of systematically identifying vulnerabilities before code is deployed. This goes beyond traditional QA by integrating security-specific analysis directly into the development lifecycle. It combines human-driven peer reviews with a suite of automated tools, including Static Application Security Testing (SAST) to analyze source code, Dynamic Application Security Testing (DAST) to probe running applications, and Software Composition Analysis (SCA) to find vulnerabilities in third-party libraries. These practices serve as critical quality gates within a CI/CD pipeline, preventing insecure code from ever reaching production.

The goal is to shift security left, making it an integral part of the development process rather than an afterthought. By automating tools like SonarQube for code smells or Checkmarx for injection flaws, teams can catch issues early when they are cheapest and easiest to fix. This proactive approach ensures that secure coding best practices are not just guidelines but are actively enforced throughout the software development lifecycle.

Why It's a Top Priority

Writing secure code is one half of the equation; verifying it is the other. Without dedicated security testing, vulnerabilities will inevitably slip through, exposing the organization to breaches, data loss, and reputational damage. In a fast-paced DevOps environment, manual reviews alone cannot keep up. Automating security scanning within CI/CD pipelines is essential for maintaining both velocity and security. This also extends to Infrastructure-as-Code (IaC), where tools like Checkov can validate the security of Terraform or CloudFormation templates before they provision insecure infrastructure.

Key Insight: Treat security testing as a non-negotiable quality gate, just like unit or integration testing. A failed security scan should be a build-breaker, compelling developers to address vulnerabilities immediately.

Implementation and Actionable Tips

- Automate in CI/CD: Integrate SAST, DAST, and SCA tools directly into your CI/CD pipeline. Configure them to run automatically on every pull request or merge, blocking the build if critical or high-severity vulnerabilities are found.

- Start with High-Confidence Rules: To avoid overwhelming developers and causing alert fatigue, begin by enabling a small set of high-confidence, low-false-positive rules in your scanning tools. Gradually tighten policies as the team's security maturity grows.

- Combine Automated and Manual Reviews: Tools are powerful but cannot understand business logic or find complex design flaws. Supplement automated scans with manual, security-focused peer code reviews for critical features or changes to authentication and authorization logic.

- Scan Everything as Code: Apply the same security scanning rigor to your IaC (Terraform, Kubernetes manifests) as you do to your application code. Use specialized tools like Checkov or Trivy to catch cloud misconfigurations before they are provisioned.

- Use Findings as Teaching Moments: Frame vulnerability reports not as failures but as opportunities for learning. Provide developers with clear guidance and training on how to remediate the specific issues identified by the tools, including secure code examples.
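The "build-breaker" gate and the advice to start with high-confidence rules can be combined in a small decision function. The sketch below is illustrative only: the `Finding` shape, the severity names, and the default threshold are assumptions, since every scanner reports results in its own format.

```python
from dataclasses import dataclass

# Assumed severity scale, ordered from least to most severe.
SEVERITY_ORDER = {"low": 0, "medium": 1, "high": 2, "critical": 3}


@dataclass(frozen=True)
class Finding:
    rule_id: str
    severity: str          # one of the SEVERITY_ORDER keys
    high_confidence: bool  # whether the rule has a low false-positive rate


def blocking_findings(findings, threshold="high", require_high_confidence=True):
    """Return the findings that should fail the build."""
    minimum = SEVERITY_ORDER[threshold]
    return [
        finding
        for finding in findings
        if SEVERITY_ORDER[finding.severity] >= minimum
        and (finding.high_confidence or not require_high_confidence)
    ]


def gate_exit_code(findings) -> int:
    """Exit code for a CI step: 1 blocks the build, 0 lets it pass."""
    return 1 if blocking_findings(findings) else 0
```

Starting with `require_high_confidence=True` keeps noisy rules from blocking merges; a team can later lower the threshold or drop the confidence filter as its security maturity grows.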

6. Secure Configuration Management

Secure configuration management is the practice of establishing, maintaining, and auditing the configurations of both applications and the underlying infrastructure to ensure they meet security standards. This process prevents insecure defaults from being deployed and guards against "configuration drift," where systems deviate from their secure baseline over time due to manual changes. In modern DevOps, this is primarily achieved through Infrastructure as Code (IaC) and policy-as-code, which enforce consistent, version-controlled, and auditable security settings across all environments.

This practice extends beyond server settings to encompass every configurable component in the delivery pipeline. This includes Kubernetes deployments, cloud resource definitions, and application-level settings stored in ConfigMaps or parameter stores. By treating configurations as code, teams can apply the same rigorous review, testing, and automated deployment practices used for application code, making security an integral part of the infrastructure lifecycle.

Why It's a Top Priority

Misconfiguration is a leading cause of cloud security breaches and system vulnerabilities. An exposed S3 bucket, a firewall rule that is too permissive (0.0.0.0/0), or an application running with excessive privileges can create critical security gaps. Without a systematic approach to management, these errors are almost inevitable, especially in complex, rapidly changing environments. Automating configuration enforcement is a key secure coding best practice that scales security alongside development, preventing costly and reputation-damaging incidents.

Key Insight: Your security posture is only as strong as your configurations. Treat configuration files with the same level of scrutiny as application code by storing them in version control, requiring peer reviews for changes, and automating their deployment.

Implementation and Actionable Tips

- Use Infrastructure as Code (IaC): Leverage tools like Terraform or AWS CloudFormation to define and manage your infrastructure. This makes configurations repeatable, auditable, and easy to roll back. Store your IaC state files securely, for example, by enabling encryption for Terraform state in an S3 bucket.

- Enforce Baselines with Automation: Use configuration management tools like Ansible or Chef to enforce security baselines, such as those defined by the Center for Internet Security (CIS), across your server fleet. Run these tools periodically to detect and correct any configuration drift.

- Implement Policy-as-Code: Integrate tools like Open Policy Agent (OPA) to create automated guardrails. For instance, use OPA Gatekeeper in Kubernetes to block deployments that don't specify resource limits or that request insecure container capabilities.

- Create Hardened "Golden Images": Use tools like HashiCorp Packer to build standardized, pre-hardened virtual machine images or container base images. This ensures all new instances start from a known secure state, minimizing the attack surface from day one.
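Drift detection boils down to comparing a system's actual settings against a version-controlled baseline. The following Python sketch shows the core comparison on nested dictionaries. The `detect_drift` helper and the sample settings are hypothetical; tools such as Ansible or Chef perform a far richer version of this check and can also correct what they find.

```python
def detect_drift(baseline: dict, actual: dict, path: str = "") -> list[str]:
    """Recursively compare actual settings to a baseline and describe differences."""
    drift = []
    for key in sorted(set(baseline) | set(actual)):
        location = f"{path}.{key}" if path else str(key)
        if key not in actual:
            drift.append(f"{location}: missing (expected {baseline[key]!r})")
        elif key not in baseline:
            drift.append(f"{location}: unexpected setting {actual[key]!r}")
        elif isinstance(baseline[key], dict) and isinstance(actual[key], dict):
            # Descend into nested sections, carrying the dotted path along.
            drift.extend(detect_drift(baseline[key], actual[key], location))
        elif baseline[key] != actual[key]:
            drift.append(f"{location}: expected {baseline[key]!r}, found {actual[key]!r}")
    return drift
```

Run periodically, a check like this turns silent manual changes into reviewable findings that can be alerted on or automatically reverted.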

7. Vulnerability Disclosure and Patch Management

Vulnerability disclosure and patch management are critical operational practices that address security weaknesses discovered after deployment. This discipline involves establishing clear processes to safely receive vulnerability reports from security researchers, followed by a systematic approach to identify, verify, and remediate these issues in a timely manner. It’s a proactive stance that acknowledges no software is perfect and prepares the organization to respond effectively when flaws are found.

In a modern DevOps context, this extends far beyond the application code. It encompasses the entire software supply chain, including operating systems, container base images, third-party libraries, and infrastructure-as-code components. A robust patch management strategy ensures that when a major vulnerability like Log4Shell (CVE-2021-44228) emerges, teams can rapidly assess their exposure, test patches, and deploy fixes across their entire stack without causing widespread disruption.

Why It's a Top Priority

An unpatched vulnerability is an open invitation for attackers. The time between a vulnerability's public disclosure and its exploitation is often measured in hours, not weeks. Without a formal process, teams can be slow to react, leaving critical systems exposed. Effective patch management is a core tenet of secure coding best practices because it completes the security lifecycle, ensuring that code remains secure long after its initial deployment. This rapid response capability is essential for maintaining customer trust and regulatory compliance.

Key Insight: Your security posture is only as strong as your ability to respond to new threats. A mature patch management program turns a reactive fire drill into a predictable, measurable, and efficient operational process.

Implementation and Actionable Tips

- Establish Patching SLAs: Define and enforce Service Level Agreements (SLAs) for applying patches based on vulnerability severity (e.g., using CVSS scores). For example, critical vulnerabilities might require a 72-hour remediation window, while low-risk ones can be bundled into a monthly maintenance cycle.

- Automate Where Possible: Use tools like Dependabot or Snyk to automatically scan dependencies and create pull requests for updates. Implement automated patching for low-risk, non-breaking updates to reduce manual toil and accelerate response times.

- Test Patches Rigorously: Never deploy patches directly to production. Use a dedicated staging environment that mirrors production to test for regressions or performance issues before a full rollout. Always have a documented rollback plan in case a patch causes unintended problems.

- Subscribe to Security Advisories: Monitor security mailing lists and feeds relevant to your technology stack, such as the Kubernetes Security Announce group or vendor-specific alerts. This ensures you are among the first to know when a new vulnerability is disclosed.
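A severity-based patching SLA can be expressed directly in code. The sketch below maps a CVSS v3 base score to its qualitative severity band and computes a remediation deadline. The 72-hour critical window comes from the text; the 14-day, 30-day, and 90-day windows for the other bands are placeholder assumptions that each organization would set for itself.

```python
from datetime import datetime, timedelta, timezone


def cvss_severity(score: float) -> str:
    """Map a CVSS v3 base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS score out of range: {score}")
    if score >= 9.0:
        return "critical"
    if score >= 7.0:
        return "high"
    if score >= 4.0:
        return "medium"
    if score > 0.0:
        return "low"
    return "none"


# Critical window from the text; the remaining values are assumed examples.
REMEDIATION_SLA = {
    "critical": timedelta(hours=72),
    "high": timedelta(days=14),
    "medium": timedelta(days=30),
    "low": timedelta(days=90),
}


def remediation_deadline(score: float, disclosed_at: datetime):
    """Return the patch deadline for a vulnerability, or None if no SLA applies."""
    window = REMEDIATION_SLA.get(cvss_severity(score))
    return None if window is None else disclosed_at + window
```

Feeding these deadlines into automatically created tickets makes SLA breaches visible and measurable instead of anecdotal.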

8. Security Logging and Monitoring

Security logging and monitoring is the practice of systematically recording and analyzing security-relevant events across an entire technology stack. It's not just about collecting data; it's about transforming raw logs into actionable intelligence that enables real-time threat detection, forensic investigation, and compliance auditing. In a modern DevOps environment, this means centralizing logs from applications, Kubernetes clusters, cloud infrastructure like AWS CloudTrail, and network systems to create a unified view of security posture. Effective logging serves as your digital evidence trail, making it possible to reconstruct events after a security incident.

This practice is critical for moving from a reactive to a proactive security stance. By capturing events like authentication failures, authorization changes, and unusual API calls, teams can establish a baseline of normal activity. Deviations from this baseline, identified through automated analysis and alerting, often represent the earliest indicators of an attack in progress. Effective event logging is a foundational capability that enables this proactive detection and rapid response.

Why It's a Top Priority

Without comprehensive logging and monitoring, you are effectively blind to security threats. An attacker could be performing reconnaissance, escalating privileges, or exfiltrating data, and you would have no record of their activity until it's too late. Logging provides the necessary visibility to not only detect breaches but also to understand their scope and impact, which is essential for remediation and regulatory reporting. In ephemeral, containerized environments, centralized logging is the only way to preserve event data after a container is terminated, making it a non-negotiable secure coding best practice.

Key Insight: Treat logs as a critical security asset, not just a debugging tool. They should be protected from tampering, retained according to policy, and actively monitored for signs of malicious activity.

Implementation and Actionable Tips

- Log What Matters: Focus on high-value, security-relevant events. Key events include authentication success/failure, authorization denials, changes to permissions (e.g., IAM role modifications), sensitive data access, and critical application errors.

- Establish a Standard Log Format: Use a structured logging format like JSON. Include essential context in every log entry: a precise timestamp (in UTC, using a format such as ISO 8601 that carries an explicit offset), source IP, user identity (or service identity), the action performed, and the outcome. This vastly simplifies automated parsing and analysis.

- Centralize and Protect Logs: Aggregate logs from all sources into a centralized Security Information and Event Management (SIEM) system like Splunk or an ELK Stack. Protect these logs using immutable storage and access controls to prevent tampering.

- Configure Real-Time Alerts: Don't wait to review logs manually. Create automated alerts for high-severity events, such as multiple failed logins from a single IP address in a short period (e.g., 5 failures in 1 minute) or a user attempting to access a resource they are not authorized for.
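The structured-format and alerting tips can be illustrated together. In the Python sketch below, `security_event` emits a JSON log line with the context fields listed above, and `FailedLoginMonitor` applies the "5 failures in 1 minute" rule with a sliding window per source IP. Both names and the field layout are assumptions for illustration; in production this logic usually lives in a SIEM rule, not in application code.

```python
import json
from collections import defaultdict, deque
from datetime import datetime, timedelta, timezone


def security_event(action, outcome, source_ip, identity, when=None) -> str:
    """Serialize one security event as a JSON line with a UTC timestamp."""
    when = when or datetime.now(timezone.utc)
    return json.dumps(
        {
            "timestamp": when.astimezone(timezone.utc).isoformat(),
            "source_ip": source_ip,
            "identity": identity,
            "action": action,
            "outcome": outcome,
        },
        sort_keys=True,
    )


class FailedLoginMonitor:
    """Flag a source IP once it reaches `threshold` failures within `window`."""

    def __init__(self, threshold=5, window=timedelta(minutes=1)):
        self.threshold = threshold
        self.window = window
        self._failures = defaultdict(deque)

    def record_failure(self, source_ip: str, when: datetime) -> bool:
        """Record a failed login and return True if an alert should fire."""
        recent = self._failures[source_ip]
        recent.append(when)
        # Evict failures that have fallen out of the sliding window.
        while recent and when - recent[0] > self.window:
            recent.popleft()
        return len(recent) >= self.threshold
```

Because each IP keeps only the timestamps inside its window, memory stays bounded by the threshold-sized burst the rule is looking for.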

9. Container and Orchestration Security

Container and orchestration security addresses the unique challenges of modern, cloud-native environments. This practice involves securing every layer of the container lifecycle, from the base images and build process to runtime execution and the orchestration platform, such as Kubernetes, that manages it all. It is a critical extension of secure coding best practices, acknowledging that application code does not run in a vacuum. A vulnerability in a base image or a misconfiguration in a Kubernetes manifest can undermine even the most securely written application.

The scope is comprehensive, including scanning container images for known vulnerabilities, enforcing policies on what can be deployed, and monitoring container behavior for anomalies at runtime. For example, using a tool like Sigstore to cryptographically sign and verify container images ensures integrity from build to deployment, preventing tampering. Meanwhile, platforms like Falco can detect suspicious runtime behavior, such as a container unexpectedly spawning a shell (exec) or writing to a sensitive directory like /etc.

Why It's a Top Priority

As containerization and Kubernetes become the de facto standard for deploying applications, they also introduce a new and complex attack surface. A single vulnerable container image can be replicated hundreds of times across a production environment, creating a widespread security incident. Misconfigured orchestrators can expose sensitive credentials, allow for privilege escalation, or enable lateral movement across the entire cluster. In a DevOps context, insecure container practices can lead to compromised CI/CD pipelines and a complete loss of infrastructure control.

Key Insight: Your application's security posture is only as strong as the container it runs in and the orchestrator that manages it. Shifting security left into the container build process is essential for cloud-native security.

Implementation and Actionable Tips

- Use Minimal, Hardened Base Images: Start with the smallest possible base images, such as Alpine or "distroless" images from Google. A smaller attack surface means fewer packages, libraries, and potential vulnerabilities.

- Scan Everything, Everywhere: Integrate container scanning tools like Aqua Security or Trivy directly into your CI/CD pipeline. Scan base images, application layers, and final images for known vulnerabilities before they are ever pushed to a registry.

- Enforce Least Privilege in Containers: Run containers as non-root users. Drop unnecessary Linux capabilities in the container's security context, restrict system calls and file access with seccomp or AppArmor profiles, and make the root filesystem read-only (`readOnlyRootFilesystem: true` in Kubernetes) to prevent modifications.

- Implement Kubernetes Security Policies: Use Kubernetes Network Policies to create a "zero-trust" network model that strictly controls which pods can communicate with each other. Enforce deployment policies using tools like OPA/Gatekeeper to block containers that don't meet security criteria, such as running as root or using an untrusted image.
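The container hardening settings above lend themselves to an automated manifest check. The Python sketch below inspects a pod spec, already parsed into a dictionary, for a few standard Kubernetes `securityContext` fields. The function names are hypothetical, and the check is simplified: it looks only at container-level settings and ignores values inherited from the pod-level security context.

```python
def audit_container(container: dict) -> list[str]:
    """Return hardening issues for one container entry of a pod spec."""
    name = container.get("name", "<unnamed>")
    context = container.get("securityContext", {})
    issues = []
    if context.get("runAsNonRoot") is not True:
        issues.append(f"{name}: runAsNonRoot is not set to true")
    if context.get("readOnlyRootFilesystem") is not True:
        issues.append(f"{name}: root filesystem is writable")
    if context.get("allowPrivilegeEscalation") is not False:
        issues.append(f"{name}: privilege escalation is not disabled")
    if "ALL" not in context.get("capabilities", {}).get("drop", []):
        issues.append(f"{name}: Linux capabilities are not dropped")
    return issues


def audit_pod_spec(pod_spec: dict) -> list[str]:
    """Audit every container in a pod spec dictionary."""
    issues = []
    for container in pod_spec.get("containers", []):
        issues.extend(audit_container(container))
    return issues
```

An admission controller policy would normally enforce these rules in the cluster; a script like this is mainly useful as an early check in CI before a manifest ever reaches it.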

10. Compliance and Security Audit Readiness

Compliance and security audit readiness is the practice of building and operating systems in a way that continuously satisfies regulatory and industry standards. This goes beyond a one-time check; it involves designing processes that inherently generate the evidence needed for audits like SOC 2, HIPAA, or PCI-DSS. Instead of scrambling before an audit, readiness means your development lifecycle, from code commit to deployment, is already aligned with required security controls. In modern DevOps environments, this is achieved by codifying compliance rules into the CI/CD pipeline and infrastructure definitions.

This approach treats compliance as an engineering problem. For instance, rather than manually checking if S3 buckets are private, you enforce it with Infrastructure-as-Code (IaC) policies. Rather than gathering access logs from multiple systems, you centralize logging and automate evidence collection. This integration of compliance into the engineering workflow makes it a natural byproduct of development, not a separate, burdensome task.

Why It's a Top Priority

In today's market, compliance certifications are often non-negotiable for enterprise sales, government contracts (FedRAMP), or handling sensitive data (HIPAA, GDPR). Failing an audit can lead to significant financial penalties, reputational damage, and lost business opportunities. Proactively building for audit readiness demonstrates maturity and trustworthiness to customers and partners. By embedding compliance into the SDLC, you shift from a reactive, high-stress audit preparation cycle to a continuous, predictable state of compliance, which is a key tenet of secure coding best practices.

Key Insight: Treat compliance as a feature, not an afterthought. Design your systems and pipelines with audit evidence generation built-in. This "compliance-as-code" mindset turns a major business risk into a manageable, automated engineering task.

Implementation and Actionable Tips

- Automate Evidence Collection: Configure your CI/CD pipeline and infrastructure monitoring tools to automatically collect and store evidence. For example, capture IAM policy changes through an audit trail such as AWS CloudTrail (with log file integrity validation enabled), and archive failed deployment attempts and security scan results (SAST/DAST) in tamper-evident, write-once storage.

- Map Controls to Code: Translate compliance requirements from frameworks like SOC 2 or the NIST Cybersecurity Framework directly into technical controls. For example, a requirement for "change management" can be mapped to a mandatory pull request review process in Git, enforced by branch protection rules.

- Use Policy-as-Code Tools: Implement tools like Open Policy Agent (OPA) or Sentinel to enforce compliance rules directly within your IaC (Terraform, CloudFormation) and CI/CD pipelines. This can prevent non-compliant infrastructure from ever being deployed.

- Conduct Regular Self-Assessments: Don't wait for external auditors. Use automated tools and internal reviews to continuously assess your posture against your chosen frameworks (e.g., CIS Benchmarks). This helps identify and remediate gaps before they become critical audit findings.
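To make "tamper-evident" concrete, here is a minimal in-memory sketch of a hash-chained evidence log. Each record stores the hash of the previous one, so editing any earlier entry invalidates every hash after it. This is an illustration of the general technique only, not the mechanism of any particular logging product. The names `append_evidence` and `verify`, and the event fields, are hypothetical.

```python
import hashlib
import json
from typing import Any

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(prev_hash: str, event: dict[str, Any]) -> str:
    """Hash the previous record's hash together with a canonical form of the event."""
    payload = json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_evidence(log: list[dict[str, Any]], event: dict[str, Any]) -> None:
    """Append an event, chaining it to the hash of the last record."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _digest(prev_hash, event)})


def verify(log: list[dict[str, Any]]) -> bool:
    """Recompute the chain and report whether every record is intact."""
    prev_hash = GENESIS
    for record in log:
        expected = _digest(prev_hash, record["event"])
        if record["prev"] != prev_hash or record["hash"] != expected:
            return False
        prev_hash = record["hash"]
    return True


evidence: list[dict[str, Any]] = []
append_evidence(evidence, {"type": "sast_scan", "commit": "abc123", "result": "failed"})
append_evidence(evidence, {"type": "deploy", "commit": "abc123", "result": "blocked"})
print(verify(evidence))  # chain is intact at this point

evidence[0]["event"]["result"] = "passed"  # simulate someone rewriting history
print(verify(evidence))  # the altered record no longer matches its hash
```

A real deployment would persist the records to durable, access-controlled storage and anchor the latest hash somewhere the pipeline itself cannot modify.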

10-Point Secure Coding Best Practices Comparison

| Item | Implementation complexity | Resource requirements | Expected outcomes | Ideal use cases | Key advantages |
|---|---|---|---|---|---|
| Input Validation and Sanitization | Low–Medium; straightforward per-entry, harder for unstructured data | Dev effort, validation libraries, CI checks | Prevents injection attacks, improves data quality | Web forms, APIs, IaC templates, CI/CD parameters | Reduces common vulnerabilities, low performance overhead |
| Secure Secrets Management | Medium–High; vault setup and integration | Secret vaults, rotation automation, IAM, team training | Eliminates hardcoded creds, audit trails, reduced exposure time | Cloud credentials, CI/CD secret injection, multi-environment deployments | Centralized secure storage, automatic rotation, compliance support |
| Principle of Least Privilege (PoLP) | Medium; planning and continual review required | RBAC/IAM policies, role design, periodic audits | Reduced blast radius, minimized insider risk | Service accounts, Kubernetes, multi-tenant systems, CI/CD access | Limits compromise impact, aligns with Zero Trust |
| Secure Dependencies Management | Medium; automation plus triage workflows | SBOM, vulnerability scanners, update pipelines, maintainer time | Faster response to disclosed CVEs, supply-chain visibility | Projects with third-party libs, container images, IaC modules | Detects known vulnerabilities, improves supply-chain security |
| Secure Code Review and Testing | Medium–High; tool integration and rules tuning | SAST/DAST/SCA tools, security reviewers, CI gates | Early vulnerability detection, developer feedback loops | Application development pipelines, IaC scanning, pre-merge gates | Shift-left security, automates detection before deploy |
| Secure Configuration Management | Medium; codify and enforce configs | IaC, policy-as-code, drift detection, version control | Consistent secure configurations, rapid remediation | Infrastructure provisioning, server hardening, Kubernetes | Enforces secure defaults, reduces manual errors |
| Vulnerability Disclosure and Patch Management | Medium; process and emergency readiness | Tracking systems, staging, automated patching, on-call | Reduced exposure window, documented remediation evidence | OS/dep patching, critical CVE responses, container rebuilds | Timely remediation, auditability, reduced exploit risk |
| Security Logging and Monitoring | Medium–High; ingestion, correlation, alerting | Centralized logging, SIEM, storage, analysts | Rapid detection/response, forensic evidence, compliance logs | Incident detection, threat hunting, regulatory reporting | Improves detection and response, supports investigations |
| Container and Orchestration Security | High; platform-specific complexity | Image scanners, runtime defense, network policies, expertise | Isolated workloads, runtime protection, supply-chain checks | Kubernetes clusters, containerized microservices, private registries | Controls container risk, enforces runtime and image policies |
| Compliance and Security Audit Readiness | High; policy mapping and sustained effort | Documentation automation, evidence collection, audits | Regulatory compliance, audit-ready evidence, customer trust | Regulated industries, SOC 2/HIPAA/PCI/ISO programs | Enables audits, reduces legal/regulatory risk, builds trust |

Integrating Security into Your Engineering DNA

The journey through the landscape of secure coding best practices reveals a fundamental truth: security is not a feature, a final checkpoint, or a separate phase. It is a foundational principle that must be woven into the very fabric of your development lifecycle. We've explored a comprehensive set of strategies, from the granular details of input validation and sanitization to the high-level orchestration of container security and compliance readiness. Each practice, whether it's rigorously managing dependencies, implementing the Principle of Least Privilege (PoLP), or establishing robust security logging and monitoring, serves as a critical layer in a defense-in-depth strategy.

Treating these practices as a mere checklist to be ticked off misses the point entirely. The true objective is to foster a cultural shift, moving from a reactive, vulnerability-patching cycle to a proactive culture of security-by-design. This is where security transcends documentation and becomes an integral part of your team's daily workflow, a shared responsibility, and a source of professional pride. It's about empowering every developer to think like an adversary and build defenses from the first line of code.

From Theory to Actionable Implementation

Making this cultural shift a reality requires more than just good intentions; it demands a strategic, systematic approach. The most impactful changes often come from making the secure path the easiest path for your engineers. By embedding security directly into the tools and processes they use every day, you reduce friction and transform best practices from abstract ideals into concrete, automated habits.

Key takeaways to prioritize for immediate action include:

- Automate Everything: Leverage SAST, DAST, and SCA tools directly within your CI/CD pipelines. This provides immediate, contextual feedback to developers, allowing them to fix vulnerabilities when they are cheapest and easiest to address, right at the source.

- Centralize and Control Secrets: Move away from credentials hardcoded in source code or configuration files. Implementing a dedicated secrets management solution like HashiCorp Vault or AWS Secrets Manager is a high-impact project that dramatically reduces your attack surface.

- Standardize Secure Patterns: Create and document reusable, pre-vetted code libraries and modules for common tasks like database access, authentication, and input handling. This ensures that security isn't left to individual interpretation and helps developers build securely by default.

- Enhance Observability: You cannot protect what you cannot see. Investing in robust logging and monitoring not only aids in incident response but also provides invaluable data for proactively identifying anomalous behavior and potential threats before they escalate.

The Lasting Value of a Security-First Mindset

Adopting these secure coding best practices is not about slowing down innovation; it's about enabling sustainable, resilient growth. In today's digital economy, security is a powerful competitive differentiator. It builds customer trust, protects brand reputation, and prevents the catastrophic financial and operational costs associated with a major breach. For engineering leaders and CTOs, cultivating a security-first engineering culture is one of the most significant investments you can make in your product's long-term viability and your company's success.

The path forward begins with a single, deliberate step. Don't aim to boil the ocean. Instead, choose one high-impact area from this guide, such as automating dependency scanning or implementing a more secure configuration management process. Secure a small win, demonstrate its value, and use that momentum to drive the next initiative. By consistently applying these principles, you will transform your organization’s approach to software development, building a resilient engineering culture that ships secure, high-quality code with confidence and velocity.

