A modern process for software development is not a static checklist. It is a dynamic, integrated system engineered to ship high-quality, secure software with maximum velocity. We've moved beyond rigid, sequential planning by fusing the foundational stages of the Software Development Life Cycle (SDLC) with the iterative nature of Agile and the automation-centric culture of DevOps.

This synthesis prioritizes programmatic feedback loops, tight cross-functional collaboration, and aggressive automation. The core objective is to minimize the lead time from code commit to production value, transforming ideas into customer-facing features at the speed of the market.

Framing Your Modern Development Process

Traditional development methodologies operated like a factory assembly line. Each specialized team—development, QA, operations—completed its task and passed the artifact "over the wall." This model created functional silos, communication friction, and significant integration challenges, often resulting in systemic blame games.

A modern process dismantles these silos by applying DevOps principles directly to the SDLC framework. This is not a superficial adjustment; it is a fundamental re-architecture of the software delivery engine. It shifts the entire organization from a project-based mindset to a product-centric one, focused on continuous value delivery.

By merging the SDLC structure with DevOps practices, you keep engineering output continuously aligned with current business objectives. This is the operational difference between following a static architectural blueprint and managing an adaptive system that can respond to market shifts as they happen.

Key Pillars of a Modern Process

A high-velocity software development process is built on several interdependent technical pillars that collectively enhance speed, quality, and reliability.

- Automation First: Every manual, repeatable task is an opportunity for human error and a source of latency. Automating build compilation, unit testing, integration testing, security scanning (SAST/DAST), and infrastructure provisioning is no longer merely a best practice; it is the baseline for predictable, reliable software delivery.

- Cross-Functional Collaboration: Developers, QA engineers, security analysts (Sec), and Site Reliability Engineers (SREs) must collaborate from the initial design phase. Shared ownership, supported by common tooling (e.g., a unified Git repository and a single CI/CD platform), closes communication gaps and, combined with consistent environments, addresses the "it works on my machine" anti-pattern.

- Continuous Feedback Loops: The objective is to shorten the feedback cycle at every stage. This encompasses more than just end-user input. It requires programmatic feedback from static code analysis (e.g., SonarQube), automated unit and integration tests, performance monitoring, and security vulnerability scans integrated directly into the development workflow.

A modern software development process is a cultural and engineering paradigm shift. The delivery pipeline itself must be treated as a product—versioned, monitored, and continuously optimized.

To fully grasp the paradigm shift, a direct comparison is necessary. The following table contrasts the key operational characteristics of traditional versus modern approaches.

Traditional vs Modern Software Development Processes at a Glance

| Characteristic | Traditional Process (e.g., Waterfall) | Modern Process (e.g., Agile/DevOps) |
|---|---|---|
| Release Cycle | Long, infrequent (months or years) | Short, frequent (days or weeks) |
| Team Structure | Siloed teams (Dev, QA, Ops) | Cross-functional, collaborative teams |
| Planning | Rigid, upfront planning for the entire project | Adaptive planning, accommodates changes |
| Feedback | Gathered at the end of the project | Continuous loops throughout the cycle |
| Risk | High-risk, "big bang" deployments | Low-risk, incremental updates |
| Automation | Minimal, often manual processes | Extensive automation (CI/CD, IaC) |
| Customer Involvement | Limited, primarily during requirements gathering | Actively involved throughout development |

Understanding these distinctions is the foundational step toward engineering a process that provides a significant competitive advantage. It represents a strategic move from large, high-risk deployments to small, frequent, and low-risk updates that deliver continuous value.

Architecting Your Software Development Life Cycle

Every robust software system originates from a well-defined blueprint: the Software Development Life Cycle (SDLC). This is the structured process that governs the journey from concept to production. Treat the SDLC not as a rigid protocol, but as a flexible, disciplined framework that imposes predictability and quality controls on the inherently creative act of software engineering.

The analogy of constructing a skyscraper is apt. You don't begin by pouring concrete. Engineers and architects execute meticulous planning, from geotechnical surveys for the foundation to aerodynamic modeling for the facade. The SDLC provides this same level of engineering rigor, preventing catastrophic and costly failures downstream.

Stage 1: Planning and Requirements Analysis

This initial phase aligns business objectives with technical feasibility. It begins with high-level planning, defining project scope, conducting feasibility studies, and allocating resources (budget, personnel, compute). The primary goal is to answer the fundamental question: "What is the precise business problem, and what are the technical and financial constraints of solving it?"

Following this, requirements analysis translates high-level business needs into granular, testable technical specifications. This involves writing detailed user stories, defining precise acceptance criteria (often in a structured Given-When-Then format such as Gherkin), and producing comprehensive use-case diagrams. Insufficient detail in this phase is a primary cause of project failure, leading to products that are technically sound but commercially irrelevant.
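To illustrate how a Given-When-Then acceptance criterion becomes directly testable, here is a minimal sketch written as plain pytest-style test functions. The `ShoppingCart` class and its free-shipping rule are hypothetical, chosen only to show the structure; the Given/When/Then comments mirror the clauses of the acceptance criterion.

```python
from dataclasses import dataclass, field


@dataclass
class ShoppingCart:
    """Hypothetical domain object used only to illustrate acceptance criteria."""

    items: list[tuple[str, float]] = field(default_factory=list)

    def add(self, name: str, price: float) -> None:
        self.items.append((name, price))

    def total(self) -> float:
        return sum(price for _, price in self.items)

    def shipping_cost(self) -> float:
        # Assumed business rule: orders of 100.00 or more ship free; otherwise a 5.00 fee applies.
        return 0.0 if self.total() >= 100.0 else 5.0


def test_free_shipping_at_threshold() -> None:
    # Given a cart containing items worth exactly 100.00
    cart = ShoppingCart()
    cart.add("keyboard", 60.0)
    cart.add("mouse", 40.0)
    # When the shipping cost is calculated
    cost = cart.shipping_cost()
    # Then shipping is free
    assert cost == 0.0


def test_standard_fee_below_threshold() -> None:
    # Given a cart worth less than 100.00
    cart = ShoppingCart()
    cart.add("mouse", 40.0)
    # When the shipping cost is calculated
    cost = cart.shipping_cost()
    # Then the standard 5.00 fee applies
    assert cost == 5.0
```

Because each clause maps onto a line of the test, a vague criterion ("shipping should be cheap for big orders") cannot be written this way until someone pins down the exact threshold and boundary behavior.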

Truncating requirements analysis to accelerate coding is a critical error. Industry data indicates that defects introduced during the requirements phase can cost 10 to 200 times more to remediate post-deployment than if they were caught early. Rigorous upfront specification is a direct investment in future stability.

Stage 2: System Design and Development

With clearly defined requirements, the system design phase commences. This is where senior architects and engineers make foundational architectural decisions, such as choosing between a monolithic or microservices architecture. This single decision has profound, long-term implications for scalability, maintainability, team structure (Conway's Law), and operational complexity.

The design phase also produces critical technical artifacts:

- API Contracts: Formal specifications (e.g., OpenAPI/Swagger for REST, a GraphQL schema written in SDL for GraphQL) defining inter-service communication protocols.

- Data Schemas: Logical and physical data models defining the structure, constraints, and relationships for databases.

- Component Diagrams: UML or C4 model diagrams illustrating the system's components, containers, and their interactions.
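To make the value of a machine-readable contract concrete, the sketch below represents a tiny, hypothetical response contract as a Python dictionary and checks payloads against it. This is a deliberately simplified stand-in for a JSON Schema fragment inside an OpenAPI document, not the OpenAPI format itself; real projects would use a proper schema validator.

```python
from typing import Any

# Hypothetical, simplified contract for a GET /users/{id} response:
# each required field name maps to the Python type its value must have.
USER_RESPONSE_CONTRACT: dict[str, type] = {
    "id": int,
    "email": str,
    "active": bool,
}


def contract_violations(payload: dict[str, Any], contract: dict[str, type]) -> list[str]:
    """Return human-readable violations; an empty list means the payload conforms."""
    violations = []
    for field_name, expected_type in contract.items():
        if field_name not in payload:
            violations.append(f"missing field: {field_name}")
            continue
        value = payload[field_name]
        # bool is a subclass of int in Python, so reject it explicitly for non-bool fields.
        is_wrong_bool = isinstance(value, bool) and expected_type is not bool
        if is_wrong_bool or not isinstance(value, expected_type):
            violations.append(
                f"{field_name}: expected {expected_type.__name__}, got {type(value).__name__}"
            )
    return violations
```

For example, `contract_violations({"id": 7, "email": "a@example.com"}, USER_RESPONSE_CONTRACT)` returns `["missing field: active"]`. Because both the producing and consuming teams can run the same check, a breaking change surfaces in CI rather than in production.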

Only after a robust design is approved does the development phase begin. Engineers write code that implements the design specifications, adhering to established coding standards, design patterns, and architectural constraints. The objective is to produce clean, efficient, and testable code.

Stage 3: Testing, Deployment, and Maintenance

A comprehensive, automated testing strategy must run in parallel with development. This is not a final, pre-release quality gate but an integrated, continuous process. A modern process for software development implements a multi-layered testing pyramid.

- Unit Tests: Verify the functional correctness of individual methods or classes in isolation, using frameworks like JUnit or PyTest.

- Integration Tests: Ensure that different components or microservices interact correctly according to their API contracts.

- End-to-End (E2E) Tests: Automate user scenarios from the user interface down to the database, validating the system as a whole with tools like Cypress or Selenium.
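At the base of the pyramid, a unit test isolates one piece of logic from its collaborators. The following sketch uses the standard library's `unittest.mock.Mock` to replace a hypothetical payment gateway with a test double, so only `checkout` itself is exercised. The names `checkout` and `charge` are illustrative, not taken from any real service; an integration test would instead wire in a real or containerized gateway and verify the interaction against its API contract.

```python
from unittest.mock import Mock


def checkout(gateway, amount_cents: int) -> str:
    """Charge the customer via the injected gateway and return an order status."""
    if amount_cents <= 0:
        raise ValueError("amount must be positive")
    response = gateway.charge(amount_cents)
    return "confirmed" if response.get("ok") else "payment_failed"


def test_checkout_confirms_on_successful_charge() -> None:
    # The gateway is a test double: no network call, fully deterministic.
    gateway = Mock()
    gateway.charge.return_value = {"ok": True}
    assert checkout(gateway, 2500) == "confirmed"
    gateway.charge.assert_called_once_with(2500)


def test_checkout_reports_declined_payment() -> None:
    gateway = Mock()
    gateway.charge.return_value = {"ok": False}
    assert checkout(gateway, 2500) == "payment_failed"
```

Injecting the gateway as a parameter is the design choice that makes this possible: the same `checkout` function can receive a mock in unit tests and a real client in integration tests.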

Upon successful completion of all automated test suites, the code is ready for deployment. The goal is a zero-touch, fully automated deployment pipeline that makes releases a low-risk, repeatable, and non-disruptive event.

Finally, the maintenance phase begins immediately upon production deployment. This is a continuous operational loop involving proactive system health monitoring, applying security patches, resolving bugs, and iterating on features based on user feedback and performance data. For a more detailed breakdown of these stages, explore our guide on the software development cycle stages.

Choosing the Right Development Methodology

After defining the SDLC stages, the next critical decision is selecting a development methodology. This choice dictates the operational tempo of the team, communication protocols, and the capacity to respond to changing requirements.

This is analogous to selecting a navigation strategy. A detailed, turn-by-turn printed map is effective for a known, unchanging route. A dynamic GPS that provides real-time rerouting is superior for navigating unpredictable, complex environments. The optimal choice depends entirely on the project's context and uncertainty level.

The Waterfall Model: The Linear Blueprint

Waterfall is the traditional, sequential methodology. Each SDLC phase must be fully completed and formally signed off before the next phase can begin. The process flows unidirectionally from requirements through design, development, testing, and deployment.

This rigidity is its defining characteristic. Waterfall is highly effective in environments where requirements are completely understood, stable, and unlikely to change.

- Best For: Projects with stringent regulatory compliance requirements, such as medical device software (FDA) or avionics (DO-178C), where exhaustive documentation and formal phase-gate reviews are mandatory.

- Key Characteristic: Change is considered a deviation and is managed through a formal, and often costly, change control process.

Agile Frameworks: Embracing Change

Agile methodologies, such as Scrum and Kanban, were developed specifically to manage the uncertainty inherent in most modern software projects. Instead of a single, monolithic release, Agile decomposes work into small, iterative cycles (sprints in Scrum) or a continuous flow of tasks (Kanban).

The entire framework is optimized for rapid feedback and adaptability. Teams deliver small increments of working software frequently, enabling them to pivot based on validated customer feedback rather than initial assumptions. This is the de facto standard for product development in dynamic markets.

Agile is a mindset articulated in the Agile Manifesto, prioritizing individuals and interactions over processes and tools. It values customer collaboration over contract negotiation. This philosophy is optimal for projects where the final requirements are expected to evolve through discovery and feedback.

The Lean Methodology: Maximizing Value

Lean development applies principles from lean manufacturing to software engineering. Its singular focus is the relentless elimination of "waste" to maximize the delivery of customer value. Waste is defined as any activity that does not directly contribute to solving the customer's problem, including building unneeded features, excessive documentation, and idle time between process steps.

Lean emphasizes principles like building a Minimum Viable Product (MVP) to validate core hypotheses with minimal investment and using a "pull" system (like Kanban) to optimize workflow. It is ideal for startups and organizations focused on extreme operational efficiency and achieving a state of continuous delivery.
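A pull system is easiest to see with a work-in-progress (WIP) limit: a stage takes new work only when it has spare capacity, rather than having work pushed onto it. The sketch below models a single hypothetical board column with an assumed WIP limit; it is a teaching model, not the API of any Kanban tool.

```python
from collections import deque


class KanbanColumn:
    """A board column that pulls work from an upstream queue only while under its WIP limit."""

    def __init__(self, name: str, wip_limit: int) -> None:
        self.name = name
        self.wip_limit = wip_limit
        self.in_progress: list[str] = []

    def pull_from(self, backlog: deque) -> list[str]:
        """Pull items from the front of the backlog until the WIP limit is reached."""
        pulled = []
        while backlog and len(self.in_progress) < self.wip_limit:
            item = backlog.popleft()
            self.in_progress.append(item)
            pulled.append(item)
        return pulled

    def finish(self, item: str) -> None:
        """Completing an item frees capacity, which is what permits the next pull."""
        self.in_progress.remove(item)
```

With a backlog of three stories and a limit of 2, the first `pull_from` call takes only the first two; the third waits until `finish` frees a slot. The limit makes idle queues and overloaded stages visible, which is exactly the kind of waste Lean targets.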

How do you select the appropriate model? The decision is strategic, not merely technical. This comparison table breaks down the models against critical operational criteria.

Comparison of Software Development Process Models

| Criterion | Waterfall | Agile (Scrum/Kanban) | Lean |
|---|---|---|---|
| Flexibility | Extremely low; changes are costly and disruptive. | High; designed to embrace and adapt to change. | Very high; focused on continuous improvement and flow. |
| Feedback Loop | Very slow; feedback is only gathered at the end. | Fast; feedback is collected at the end of each sprint. | Extremely fast; aims for continuous, real-time feedback. |
| Risk Profile | High-risk; issues are often discovered late. | Low-risk; risks are identified and mitigated iteratively. | Lowest risk; minimizes waste and validates value early. |
| Ideal Project | Fixed scope, stable requirements, low uncertainty. | Evolving requirements, high uncertainty, dynamic market. | Startups, MVPs, and projects focused on pure efficiency. |

The optimal methodology is one that aligns with your project's technical and market reality, your engineering culture, and your strategic business goals.

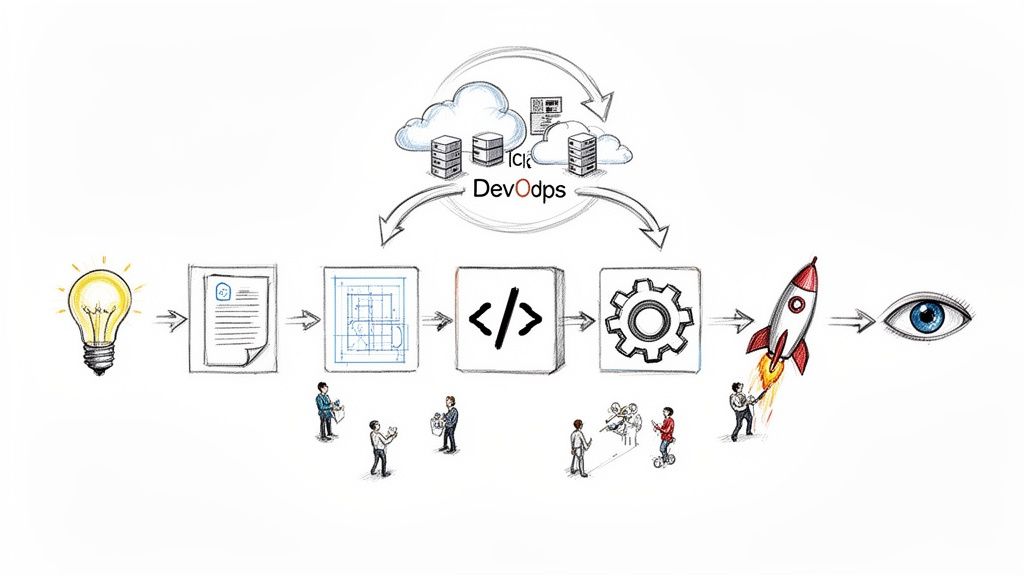

Selecting a methodology is foundational, but true velocity is unlocked by integrating DevOps automation. This is a comprehensive cultural and engineering shift that dissolves inter-team barriers and automates the entire software delivery pipeline.

The goal is to engineer a high-speed, low-friction conduit from a developer's IDE to the production environment.

If the SDLC is the engine, DevOps automation is the turbocharger. By systematically eliminating manual handoffs and repetitive tasks, a slow, error-prone process is transformed into a reliable, high-velocity delivery mechanism built on four key technical pillars.

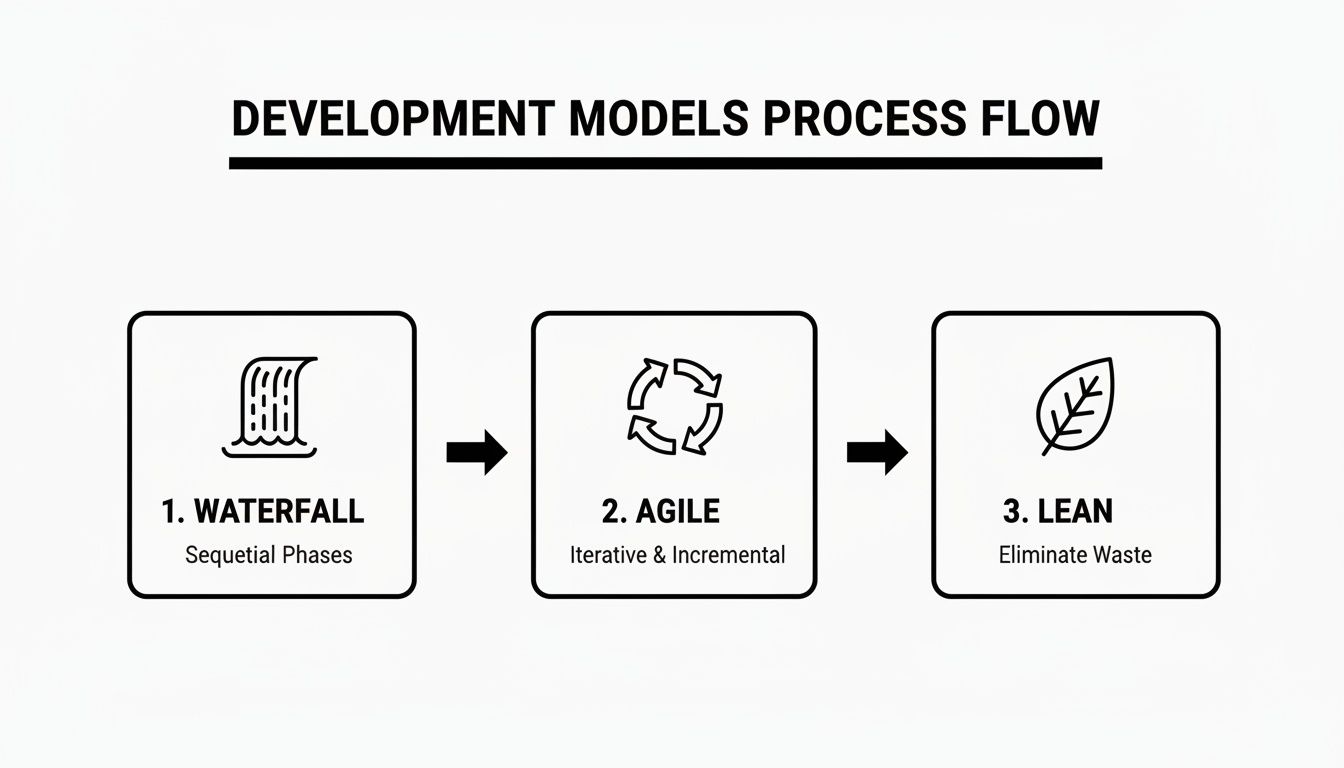

This diagram illustrates the evolution from rigid, sequential models to the flexible, iterative frameworks that underpin the DevOps philosophy.

The trajectory clearly shows a move toward faster feedback loops and continuous delivery—the core tenets of effective automation.

Pillar 1: Continuous Integration and Continuous Delivery (CI/CD)

The CI/CD pipeline is the automated core of DevOps. It's a series of orchestrated steps that automatically build, test, and deploy code changes, significantly reducing manual effort and the risk of human error.

- Continuous Integration (CI): Developers merge code into a central repository (e.g., Git) frequently. Each merge triggers an automated build and a suite of tests (unit, integration, static analysis) to detect integration errors immediately. Key tools include Jenkins, GitLab CI, and GitHub Actions.

- Continuous Delivery/Deployment (CD): After passing all CI stages, the application artifact is automatically deployed to a staging environment. Continuous Delivery ensures the code is always in a deployable state, with the production release triggered by a manual approval. Continuous Deployment removes that manual step and pushes every validated build to production automatically.

The strategic objective of CI/CD is to make deployments a "non-event." A production release should be a routine, low-risk operation, not a high-stress, all-hands emergency.
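The fail-fast behavior of a pipeline can be sketched as an ordered list of stages where the first failure stops everything downstream. The stage names and the `require_manual_approval` flag below are hypothetical; the flag shows the Delivery versus Deployment distinction: when set, a green build stops at a deployable artifact awaiting approval, and when unset it goes straight to production. Real pipelines are defined in the CI platform's own configuration format, not in Python.

```python
from typing import Callable

# A stage is a (name, check) pair; the check returns True when the stage passes.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage], require_manual_approval: bool) -> list[str]:
    """Run stages in order, stopping at the first failure, and return an event log."""
    log = []
    for name, check in stages:
        if not check():
            log.append(f"FAILED: {name}")
            return log  # Fail fast: nothing downstream runs on a broken build.
        log.append(f"passed: {name}")
    if require_manual_approval:
        log.append("awaiting approval: production")  # Continuous Delivery
    else:
        log.append("deployed: production")  # Continuous Deployment
    return log
```

A usage example might pass stages such as `("build", ...)`, `("unit tests", ...)`, `("static analysis", ...)`, and `("deploy to staging", ...)`, each wrapping a real command's exit status.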

Pillar 2: Infrastructure as Code (IaC)

Infrastructure as Code (IaC) is the practice of managing and provisioning infrastructure (servers, networks, databases) through machine-readable definition files, rather than manual configuration. These files are version-controlled, tested, and integrated into the CI/CD pipeline.

Using tools like Terraform (declarative) or Ansible (procedural), you can programmatically create identical, reproducible environments, including short-lived ephemeral ones. This eliminates "configuration drift" between development, staging, and production environments and keeps them consistent. For a deeper technical analysis, review our article on combining DevOps and Agile development.
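The core idea behind declarative IaC is comparing a desired state (the version-controlled definition) with the actual state and computing the changes needed to converge. The sketch below illustrates that "plan" step conceptually with plain dictionaries; it is not how Terraform is implemented, and the resource names and attributes are hypothetical.

```python
def plan(desired: dict[str, dict], actual: dict[str, dict]) -> dict[str, list[str]]:
    """Compute which resources must be created, updated, or destroyed to match the desired state."""
    to_create = sorted(name for name in desired if name not in actual)
    to_destroy = sorted(name for name in actual if name not in desired)
    # A resource present in both states has drifted if any attribute differs.
    to_update = sorted(
        name for name in desired if name in actual and desired[name] != actual[name]
    )
    return {"create": to_create, "update": to_update, "destroy": to_destroy}
```

For instance, if the definition declares `web-server` as `t3.medium` but someone resized it by hand to `t3.small`, the drift appears as `web-server` in the `update` list instead of going unnoticed until an incident.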

Pillar 3: Container Orchestration

Modern microservices architectures introduce significant operational complexity. Containers, most commonly built with Docker, address part of this by packaging an application with all its dependencies into a standardized, portable unit.

Container orchestrators like Kubernetes then automate the deployment, scaling, and management of these containerized applications at scale. Kubernetes handles critical functions like service discovery, load balancing, automated rollouts, and self-healing, enabling resilient, highly available systems with minimal manual intervention.
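Kubernetes' self-healing comes from controllers running a reconciliation loop: observe the current state, compare it with the declared desired state, and act to close the gap. The toy function below sketches one pass of that loop for replica counts only; the pod naming scheme and the function itself are hypothetical simplifications, not Kubernetes controller code or its client API.

```python
import itertools

_pod_ids = itertools.count(1)  # Hypothetical source of unique suffixes for new pod names.


def reconcile(desired_replicas: int, running_pods: list[str]) -> list[str]:
    """One reconciliation pass: start or stop pods until the running count matches the desired count."""
    pods = list(running_pods)
    while len(pods) < desired_replicas:
        pods.append(f"web-{next(_pod_ids)}")  # Replace crashed pods or scale up.
    while len(pods) > desired_replicas:
        pods.pop()  # Scale down by terminating surplus pods.
    return pods
```

Because the loop only compares declared and observed state, the same logic handles a crashed pod, a lost node, and a deliberate scaling change without any special-case code.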

Pillar 4: Observability

In a complex, distributed system, traditional monitoring is insufficient. Observability provides deep, actionable insights into system behavior by analyzing three key data types:

- Logs: Granular, timestamped records of discrete events.

- Metrics: Aggregated, numerical data about system performance (e.g., CPU utilization, latency, error rates).

- Traces: A detailed, end-to-end view of a single request as it propagates through all services in the system.

An observability stack composed of tools like Prometheus (metrics), Grafana (visualization), the ELK Stack (Elasticsearch, Logstash, Kibana) for logs, and a tracing backend such as Jaeger enables engineers to move from reactive troubleshooting to proactive performance optimization and rapid root cause analysis.
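The three signals are most useful when they can be correlated. A common practice is to emit logs as structured JSON carrying the same trace ID as the request's trace, so a spike in an error-rate metric can be followed to the exact log lines and trace involved. The sketch below uses only the Python standard library; the field names (`trace_id`, `duration_ms`), the toy routing rule, and the in-memory counter are illustrative assumptions, and a production system would typically use an instrumentation library such as OpenTelemetry instead.

```python
import json
import time
import uuid
from collections import Counter
from typing import Optional

# Hypothetical in-memory counter standing in for a real metrics backend such as Prometheus.
REQUEST_COUNTER: Counter = Counter()


def handle_request(path: str, trace_id: Optional[str] = None) -> str:
    """Handle a toy request, update a metric, and return one structured JSON log line."""
    trace_id = trace_id or uuid.uuid4().hex  # Reuse a propagated trace ID, else start a new trace.
    start = time.perf_counter()
    status = 200 if path.startswith("/api/") else 404  # Stand-in for real request handling.
    duration_ms = (time.perf_counter() - start) * 1000
    REQUEST_COUNTER[(path, status)] += 1  # Metric: aggregated count per path and status code.
    # Log: a discrete, timestamped event carrying the trace ID so it can be joined to the trace.
    return json.dumps(
        {
            "ts": time.time(),
            "level": "info" if status < 400 else "warn",
            "path": path,
            "status": status,
            "duration_ms": round(duration_ms, 3),
            "trace_id": trace_id,
        }
    )
```

Because every log line is machine-parseable, a log pipeline can filter on `trace_id` or `status` directly instead of relying on fragile text searches.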

This technical foundation matters all the more as the talent pool grows: the global developer population is projected to reach 20.8 million by 2025, and these engineers expect to work in a highly automated, transparent, and instrumented engineering environment.

Measuring and Improving Process Maturity

A high-performance software development process is not a static endpoint; it is a dynamic system requiring continuous measurement and optimization. Transitioning from qualitative assessments ("I think we're slowing down") to quantitative analysis ("our metrics show a bottleneck here") is the hallmark of an elite engineering organization.

A data-driven approach provides an objective framework for systematically improving development process maturity.

For this purpose, the industry standard is the set of DORA metrics, developed by the DevOps Research and Assessment (DORA) team. These four key indicators provide a balanced view of both development velocity and operational stability, preventing teams from optimizing one at the expense of the other.

The Four Key DORA Metrics

These metrics function as a balanced scorecard, offering a holistic view of engineering effectiveness. They prevent the common pitfall of sacrificing quality for speed.

Deployment Frequency: Measures how often code is successfully deployed to production. Elite performers deploy on-demand, multiple times per day, while low performers may deploy monthly or quarterly. This is a direct indicator of team throughput and agility.

Lead Time for Changes: Measures the time from a code commit to that code running successfully in production. This metric exposes the end-to-end efficiency of the entire delivery pipeline, including code review, testing, and deployment stages. Shorter lead times indicate faster value delivery.

Change Failure Rate: The percentage of production deployments that result in a degraded service and require remediation (e.g., a hotfix or rollback). A low change failure rate is a strong signal of a high-quality, reliable delivery process.

Time to Restore Service: Measures the time it takes to restore service after a production failure or incident. This is a key measure of system resilience and incident response capability. Elite teams can often restore service in under an hour.
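Once deployments and incidents are recorded as events, the four metrics reduce to simple arithmetic. The sketch below assumes a hypothetical record format (one record per production deployment with its commit time, deploy time, failure flag, and restore time) and uses medians for the time-based metrics. DORA's own definitions and reporting buckets differ in detail, so treat this as an illustration rather than a reference implementation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional

HOUR = timedelta(hours=1)


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""

    commit_time: datetime                     # When the change was committed.
    deploy_time: datetime                     # When it was running in production.
    failed: bool = False                      # Did it degrade service and need remediation?
    restored_time: Optional[datetime] = None  # When service was restored, if it failed.


def dora_metrics(deploys: list[Deployment], period_days: int) -> dict[str, float]:
    """Compute simplified DORA metrics over a reporting period; durations are in hours."""
    lead_times = [(d.deploy_time - d.commit_time) / HOUR for d in deploys]
    failures = [d for d in deploys if d.failed]
    # Simplification: treat the failing deployment's time as the start of the incident.
    restore_times = [
        (d.restored_time - d.deploy_time) / HOUR
        for d in failures
        if d.restored_time is not None
    ]
    return {
        "deployment_frequency_per_day": len(deploys) / period_days,
        "median_lead_time_hours": median(lead_times) if lead_times else 0.0,
        "change_failure_rate": len(failures) / len(deploys) if deploys else 0.0,
        "median_time_to_restore_hours": median(restore_times) if restore_times else 0.0,
    }
```

For example, four deployments in a seven-day window with lead times of 2, 4, 3, and 1 hours, one of which failed and was restored 30 minutes later, yield a median lead time of 2.5 hours and a change failure rate of 0.25.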

Getting the Data: Instrumenting Your Pipelines

Tracking these metrics requires instrumenting your CI/CD pipelines and version control systems. Platforms like GitLab, GitHub Actions, and Jenkins can be configured to emit events for commits, builds, and deployments.

This data is then aggregated in observability platforms or specialized DevOps intelligence tools for analysis.

With this data, you can precisely identify process bottlenecks. Is Lead Time for Changes increasing? The data may point to a slow code review process or an inefficient test suite. Is the Change Failure Rate climbing? This could indicate inadequate testing environments or insufficient automated quality gates.

Using DORA metrics transforms conversations from subjective opinions to objective, data-backed analysis. Instead of "I feel like we're slowing down," you can state, "Our Lead Time for Changes has increased by 15% this quarter, and the data points to a bottleneck in our integration testing stage."
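One way to produce that kind of statement is to break each change's lead time into per-stage durations and compare them. The sketch below assumes each change has already been reduced to hypothetical stage timings in hours (for example, derived from pull request and CI events); it reports the stage with the largest median duration and the percentage change in median lead time between two periods.

```python
from statistics import median

# Each change is a hypothetical mapping of pipeline stage -> hours spent in that stage.
Change = dict[str, float]


def slowest_stage(changes: list[Change]) -> tuple[str, float]:
    """Return the stage with the largest median duration across all changes."""
    stages = changes[0].keys()  # Assumes every change records the same stages.
    medians = {stage: median(c[stage] for c in changes) for stage in stages}
    worst = max(medians, key=lambda stage: medians[stage])
    return worst, medians[worst]


def median_lead_time(changes: list[Change]) -> float:
    """Median end-to-end lead time, taken as the sum of all stage durations per change."""
    return median(sum(c.values()) for c in changes)


def pct_change(previous: float, current: float) -> float:
    """Percentage change from previous to current; positive means lead time grew."""
    return (current - previous) / previous * 100
```

Calling `pct_change(median_lead_time(last_quarter), median_lead_time(this_quarter))` gives the headline trend, and `slowest_stage(this_quarter)` points at where to look first. Using medians keeps a single unusually slow change from dominating the result.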

This quantitative approach is essential for justifying investments in new tools, processes, or personnel, as it directly links engineering improvements to measurable business outcomes.

AI is rapidly becoming integral to optimizing these workflows. According to the 2025 Stack Overflow Developer Survey, 84% of developers are using or plan to use AI tools, and 51% of professional developers already use them daily. Integrating AI into the software lifecycle, from code generation and automated testing to intelligent deployment strategies, is projected to yield productivity gains of 30% to 35%.

Bringing Your Development Process to Life with OpsMoon

Designing a technically sound process is one challenge; implementing it as a high-performing engineering function is another entirely. This requires deep technical expertise and the right personnel. A strategic partner can provide the necessary leverage to navigate the complexities of implementation and optimization.

At OpsMoon, we provide a structured, actionable roadmap to engineer a world-class development practice. Our methodology is designed to produce measurable improvements at each stage of implementation.

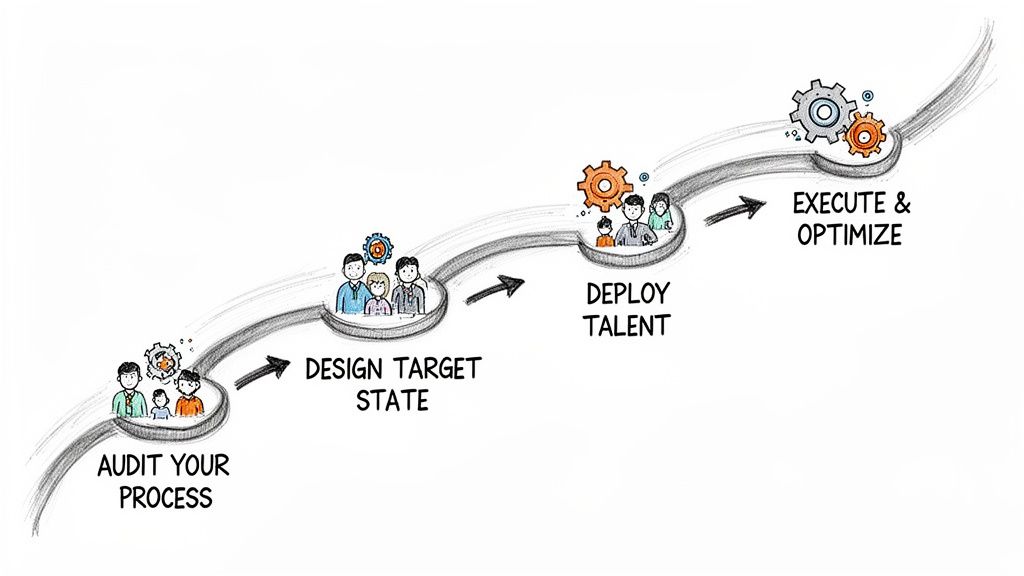

Our Four-Step Implementation Roadmap

Our process is engineered to transition your organization from its current state to a state of continuous, data-driven improvement, ensuring your new software development process delivers tangible results.

1. Audit Your Current Process

We begin with a deep technical audit of your existing workflows. This involves mapping the entire value stream, identifying specific bottlenecks and sources of friction, and establishing a quantitative performance baseline using metrics like DORA. This data-driven audit informs all subsequent decisions.

2. Design Your Target State

Based on the audit, we architect a future-state process tailored to your technical stack and business objectives. This could involve designing a declarative CI/CD pipeline, developing a phased Kubernetes adoption strategy, or implementing a comprehensive observability stack. The design is a practical, executable blueprint, not a theoretical framework.

A common failure mode is adopting new tools without an underlying process to support them. The goal is not simply to install Kubernetes or Terraform; it is to engineer a system where these tools solve specific, identified problems and measurably improve delivery speed and reliability.

3. Deploy Expert Talent

A modern process for software development requires highly specialized engineers who are difficult to source and retain. We bridge this talent gap by connecting you with the top 0.7% of remote DevOps engineers. Whether you need a Terraform expert to implement IaC, an AWS solutions architect, or an SRE to engineer reliability, we provide the precise talent required for critical roles.

4. Execute and Optimize

Our experts embed directly with your teams to execute the implementation plan. We don't deliver a slide deck and depart; we actively build the CI/CD pipelines, configure the infrastructure using IaC, and set up the monitoring and alerting systems. Using DORA metrics as our guide, we continuously track performance and iterate on the process to drive sustained improvement.

Our dedicated DevOps services are specifically designed to help your team master these technical challenges.

Frequently Asked Questions

When implementing a modern process for software development, several key technical questions consistently arise. Here are concise answers to the most common queries from engineering leaders.

What Is the Most Critical Stage of the SDLC?

While all stages are integral, the Requirements Analysis and System Design phases have the highest leverage. Errors introduced here are the most costly and difficult to remediate.

A bug in application code is analogous to a cosmetic flaw in a building—it is visible but often simple to fix. An architectural flaw is akin to a faulty foundation; its downstream consequences are systemic and exponentially more expensive to correct.

An incorrect architectural decision, such as choosing the wrong database technology or service boundary, can easily cost 10 to 200 times more to fix post-launch than if it had been caught during design. Rigorous upfront design is the most effective form of risk mitigation.

How Do You Introduce DevOps to an Existing Team?

Avoid a "big bang" transformation. The optimal strategy is to start with a small, high-impact pilot project.

Identify a single, universally acknowledged pain point—for example, a manual, error-prone deployment process for a specific service. Automate that single workflow. The immediate, visible improvement will build the political capital and team buy-in required for broader adoption.

From there, introduce core practices incrementally:

- Implement a CI pipeline with automated unit testing for a single repository.

- Define a non-critical piece of infrastructure as code using Terraform.

- Establish a shared on-call rotation to foster a culture of collective ownership.

Can You Combine Agile and Waterfall Methodologies?

Yes, this hybrid model is common in large enterprises, particularly in cyber-physical systems involving both hardware and software development.

For example, a hardware engineering team might follow a rigid Waterfall model due to long procurement lead times and fixed physical design constraints.

In parallel, the software team can operate using an Agile framework, delivering features in iterative sprints. The key to success in this hybrid model is establishing well-defined, formally managed integration points and API contracts between the two streams. Dependency management becomes the critical path to ensuring the project does not stall.

Ready to build a high-velocity engineering team? OpsMoon connects you with the top 0.7% of remote DevOps talent to implement a process that delivers. Start with a free work planning session and let our experts build your roadmap. Learn more at OpsMoon.
