A Kubernetes service mesh is a dedicated, programmable infrastructure layer that handles inter-service communication. It operates by deploying a lightweight proxy, known as a sidecar, alongside each application container. This proxy intercepts all ingress and egress network traffic, allowing for centralized control over reliability, security, and observability features without modifying application code. This architecture decouples operational logic from business logic.

Why Do We Even Need a Service Mesh?

In a microservices architecture, as the service count grows from a handful to hundreds, the complexity of inter-service communication explodes. Without a dedicated management layer, this results in significant operational challenges: increased latency, cascading failures, and a lack of visibility into traffic flows.

While Kubernetes provides foundational networking capabilities like service discovery and basic load balancing via kube-proxy and CoreDNS, it operates primarily at L3/L4 (IP/TCP). A service mesh elevates this control to L7 (HTTP, gRPC), providing sophisticated traffic management, robust security postures, and deep observability that vanilla Kubernetes lacks.

The Mess of Service-to-Service Complexity

The unreliable nature of networks in distributed systems necessitates robust handling of failures, security, and monitoring. On container orchestration platforms like Kubernetes, these challenges manifest as specific technical problems that application-level libraries alone cannot solve efficiently or consistently.

- Unreliable Networking: How do you implement consistent retry logic with exponential backoff and jitter for gRPC services written in Go and REST APIs written in Python? How do you gracefully handle a

503 Service Unavailableresponse from a downstream dependency? - Security Gaps: How do you enforce mutual TLS (mTLS) for all pod-to-pod communication, rotate certificates automatically, and define fine-grained authorization policies (e.g.,

service-Acan onlyGETfrom/metricsonservice-B)? - Lack of Visibility: When a user request times out after traversing five services, how do you trace its exact path, view the latency at each hop, and identify the failing service without manually instrumenting every application with distributed tracing libraries like OpenTelemetry?

A service mesh injects a transparent proxy sidecar into each application pod. This proxy intercepts all TCP traffic, giving platform operators a central control plane to declaratively manage service-to-service communication.

To understand the technical uplift, let's compare a standard Kubernetes environment with one augmented by a service mesh.

Kubernetes With and Without a Service Mesh

| Operational Concern | Challenge in Vanilla Kubernetes | Solution with a Service Mesh |

|---|---|---|

| Traffic Management | Basic kube-proxy round-robin load balancing. Canary releases require complex Ingress controller configurations or manual Deployment manipulations. |

L7-aware routing. Define traffic splitting via weighted rules (e.g., 90% to v1, 10% to v2), header-based routing, and fault injection. |

| Security | Requires application-level TLS implementation. Kubernetes NetworkPolicies provide L3/L4 segmentation but not identity or encryption. | Automatic mTLS encrypts all pod-to-pod traffic. Service-to-service authorization is based on cryptographic identities (SPIFFE). |

| Observability | Relies on manual instrumentation (e.g., Prometheus client libraries) in each service. Tracing requires code changes and library management. | Automatic, uniform L7 telemetry. The sidecar generates metrics (latency, RPS, error rates), logs, and distributed traces for all traffic. |

| Reliability | Developers must implement retries, timeouts, and circuit breakers in each service's code, leading to inconsistent behavior. | Centralized configuration for retries (with per_try_timeout), timeouts, and circuit breaking (consecutive_5xx_errors), enforced by the sidecar. |

This table highlights the fundamental shift: a service mesh moves complex, cross-cutting networking concerns from the application code into a dedicated, manageable infrastructure layer.

This isn't just a niche technology; it's becoming a market necessity. The global service mesh market, currently valued around USD 516 million, is expected to skyrocket to USD 4,287.51 million by 2032. This growth is running parallel to the Kubernetes boom, where over 70% of organizations are already running containers and desperately need the kind of sophisticated traffic management a mesh provides. You can find more details on this market growth at hdinresearch.com.

Understanding the Service Mesh Architecture

The architecture of a Kubernetes service mesh is logically split into a Data Plane and a Control Plane. This separation of concerns is critical: the data plane handles the packet forwarding, while the control plane provides the policy and configuration.

This model is analogous to an air traffic control system. The services are aircraft, and the network of sidecar proxies that carry their communications forms the Data Plane. The central tower that dictates flight paths, enforces security rules, and monitors all aircraft is the Control Plane.

This diagram visualizes the transition from an unmanaged mesh of service interactions ("Chaos") to a structured, observable, and secure system ("Order") managed by a service mesh on Kubernetes.

The Data Plane: Where the Traffic Lives

The Data Plane consists of a network of high-performance L7 proxies deployed as sidecars within each application's Pod. This injection is typically automated via a Kubernetes Mutating Admission Webhook.

When a Pod is created in a mesh-enabled namespace, the webhook intercepts the API request and injects the proxy container and an initContainer. The initContainer configures iptables rules within the Pod's network namespace to redirect all inbound and outbound traffic to the sidecar proxy.

- Traffic Interception: The

iptablesrules ensure that the application container is unaware of the proxy. It sends traffic tolocalhost, where the sidecar intercepts it, applies policies, and then forwards it to the intended destination. - Local Policy Enforcement: Each sidecar proxy enforces policies locally. This includes executing retries, managing timeouts, performing mTLS encryption/decryption, and collecting detailed telemetry data (metrics, logs, traces).

- Popular Proxies: Envoy is the de facto standard proxy, used by Istio and Consul. It's a CNCF graduated project known for its performance and dynamic configuration API (xDS). Linkerd uses a purpose-built, ultra-lightweight proxy written in Rust for optimal performance and resource efficiency.

This decentralized model ensures that the data plane remains operational even if the control plane becomes unavailable. Proxies continue to route traffic based on their last known configuration.

The Control Plane: The Brains of the Operation

The Control Plane is the centralized management component. It does not touch any data packets. Its role is to provide a unified API for operators to define policies and to translate those policies into configurations that the data plane proxies can understand and enforce.

The Control Plane is where you declare your intent. For example, you define a policy stating, "split traffic for

reviews-service95% to v1 and 5% to v2." The control plane translates this intent into specific Envoy route configurations and distributes them to the sidecars via the xDS API.

Key functions of the Control Plane include:

- Service Discovery: Aggregates service endpoints from the underlying platform (e.g., Kubernetes Endpoints API).

- Configuration Distribution: Pushes routing rules, security policies, and telemetry configurations to all sidecar proxies.

- Certificate Management: Acts as a Certificate Authority (CA) to issue and rotate X.509 certificates for workloads, enabling automatic mTLS.

Putting It All Together: A Practical Example

Let's implement a retry policy for a service named inventory-service. If a request fails with a 503 status, we want to retry up to 3 times with a 25ms delay between retries.

Without a service mesh, developers would need to implement this logic in every client service using language-specific libraries, leading to code duplication and inconsistency.

With a Kubernetes service mesh like Istio, the process is purely declarative:

- Define the Policy: You create an Istio

VirtualServiceYAML manifest.apiVersion: networking.istio.io/v1alpha3 kind: VirtualService metadata: name: inventory-service spec: hosts: - inventory-service http: - route: - destination: host: inventory-service retries: attempts: 3 perTryTimeout: 2s retryOn: 5xx - Apply to Control Plane: You apply this configuration using

kubectl apply -f inventory-retry-policy.yaml. - Configuration Push: The Istio control plane (Istiod) translates this policy into an Envoy configuration and pushes it to all relevant sidecar proxies via xDS.

- Execution in Data Plane: The next time a service calls

inventory-serviceand receives a503error, its local sidecar proxy intercepts the response and automatically retries the request according to the defined policy.

The application code remains completely untouched. This decoupling is the primary architectural benefit, enabling platform teams to manage network behavior without burdening developers. This also enhances other tools; the rich, standardized telemetry from the mesh provides a perfect data source for monitoring Kubernetes with Prometheus.

Unlocking Zero-Trust Security and Deep Observability

While the architecture is technically elegant, the primary drivers for adopting a Kubernetes service mesh are the immediate, transformative gains in zero-trust security and deep observability. These capabilities are moved from the application layer to the infrastructure layer, where they can be enforced consistently.

This shift is critical. The service mesh market is projected to grow from USD 925.95 million in 2026 to USD 11,742.9 million by 2035, largely driven by security needs. With the average cost of a data breach at USD 4.45 million, implementing a zero-trust model is no longer a luxury. This has driven service mesh demand up by 35% since 2023, according to globalgrowthinsights.com.

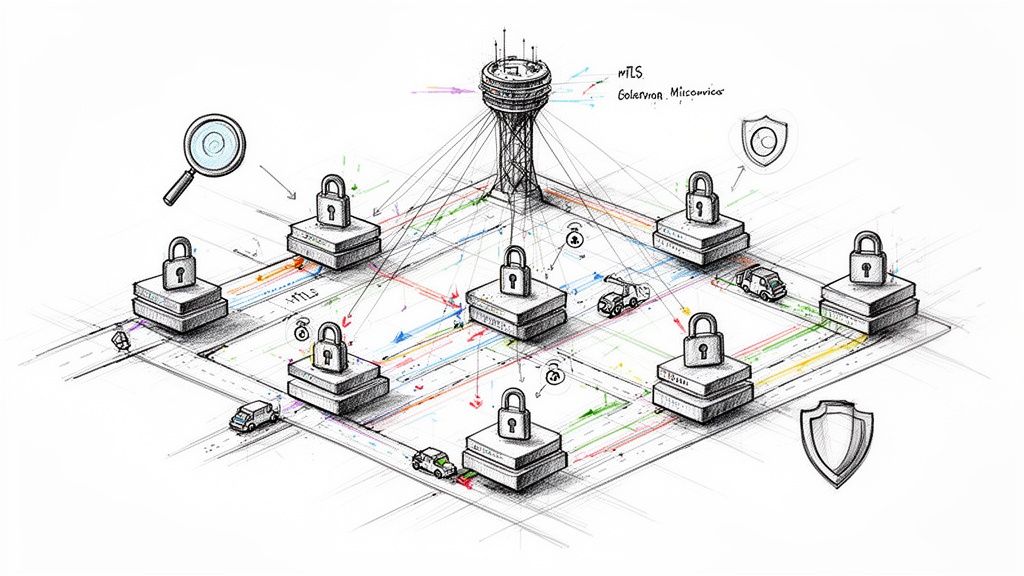

Achieving Zero-Trust with Automatic mTLS

Traditional perimeter-based security ("castle-and-moat") is ineffective for microservices. A service mesh implements a zero-trust network model where no communication is trusted by default. Identity is the new perimeter.

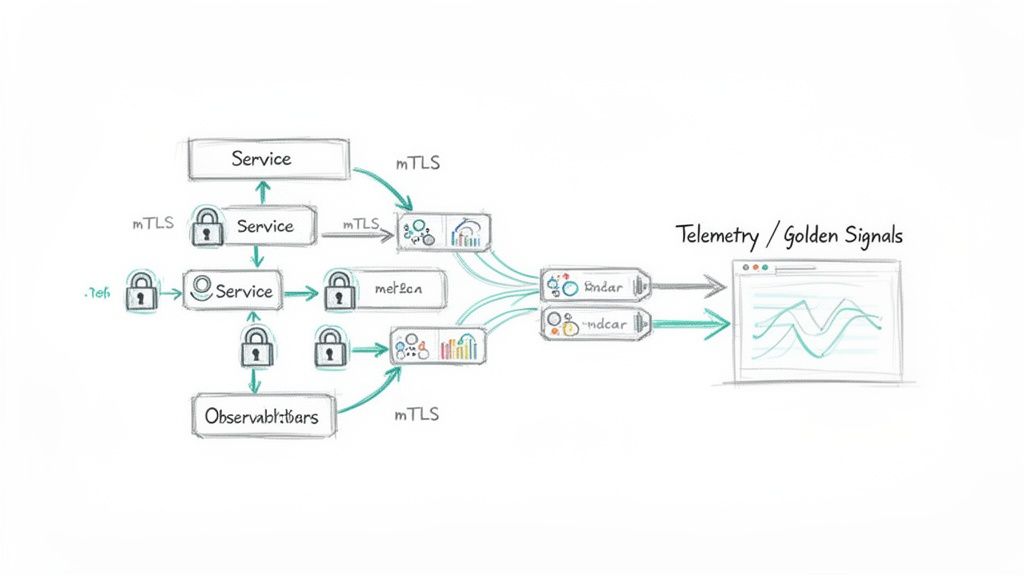

This is achieved through automatic mutual TLS (mTLS), which provides authenticated and encrypted communication channels between every service, without developer intervention.

The technical workflow is as follows:

- Certificate Authority (CA): The control plane includes a built-in CA.

- Identity Provisioning: When a new pod is created, its sidecar proxy generates a private key and sends a Certificate Signing Request (CSR) to the control plane. The control plane validates the pod's identity (via its Kubernetes Service Account token) and issues a short-lived X.509 certificate. This identity is often encoded in a SPIFFE-compliant format (e.g.,

spiffe://cluster.local/ns/default/sa/my-app). - Encrypted Handshake: When Service A calls Service B, their respective sidecar proxies perform a TLS handshake. They exchange certificates and validate each other's identity against the root CA.

- Secure Tunnel: Upon successful validation, an encrypted TLS tunnel is established for all subsequent traffic between these two specific pods.

This process is entirely transparent to the application. The

checkout-servicecontainer makes a plaintext HTTP request topayment-service, but the sidecar intercepts it, wraps it in mTLS, sends it securely over the network, where the receiving proxy unwraps it and forwards the plaintext request to thepayment-servicecontainer.

This single feature hardens the security posture by default, preventing lateral movement and man-in-the-middle attacks within the cluster. This cryptographic identity layer is a powerful complement to the role of the Kubernetes audit log in creating a comprehensive security strategy.

Gaining Unprecedented Observability

Troubleshooting a distributed system without a service mesh involves instrumenting dozens of services with disparate libraries for metrics, logs, and traces. A service mesh provides this "for free." Because the sidecar proxy sits in the request path, it can generate uniform, high-fidelity telemetry for all traffic. This data is often referred to as the "Golden Signals":

- Latency: Request processing time, including percentiles (p50, p90, p99).

- Traffic: Request rate, measured in requests per second (RPS).

- Errors: The rate of server-side (5xx) and client-side (4xx) errors.

- Saturation: A measure of service load, often derived from CPU/memory utilization and request queue depth.

The sidecar proxy emits this telemetry in a standardized format (e.g., Prometheus exposition format). This data can be scraped by Prometheus and visualized in Grafana to create real-time dashboards of system-wide health. For tracing, the proxy generates and propagates trace headers (like B3 or W3C Trace Context), enabling distributed traces that show the full lifecycle of a request across multiple services. This dramatically reduces Mean Time to Detection (MTTD) and Mean Time to Resolution (MTTR).

Choosing the Right Service Mesh Implementation

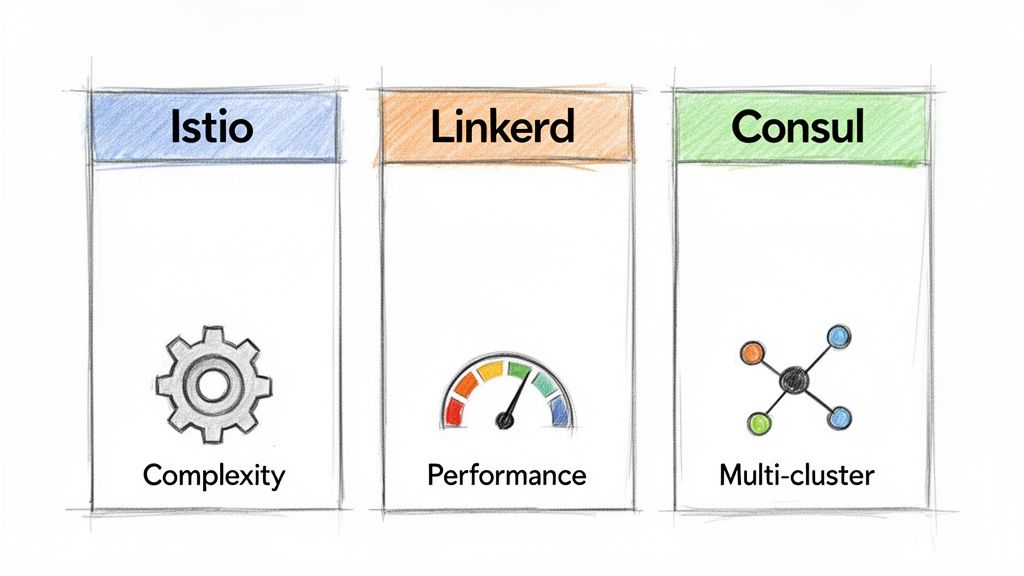

Selecting a Kubernetes service mesh is a strategic decision based on operational maturity, performance requirements, and architectural needs. The three leading implementations—Istio, Linkerd, and Consul Connect—offer different philosophies and trade-offs.

This decision is increasingly critical as the market is projected to expand from USD 838.1 million in 2026 to USD 22,891.85 million by 2035. With Kubernetes adoption nearing ubiquity, choosing a mesh that aligns with your long-term operational strategy is paramount.

Istio: The Feature-Rich Powerhouse

Istio is the most comprehensive and feature-rich service mesh. Built around the highly extensible Envoy proxy, it provides unparalleled control over traffic routing, security policies, and telemetry.

- Feature Depth: Istio excels at complex use cases like multi-cluster routing, fine-grained canary deployments with header-based routing, fault injection for chaos engineering, and WebAssembly (Wasm) extensibility for custom L7 protocol support.

- Operational Complexity: This power comes at the cost of complexity. Istio has a steep learning curve and a significant operational footprint, requiring expertise in its extensive set of Custom Resource Definitions (CRDs) like

VirtualService,DestinationRule, andGateway.

Istio is best suited for large organizations with mature platform engineering teams that require its advanced feature set to solve complex networking challenges.

Linkerd: The Champion of Simplicity and Performance

Linkerd adopts a minimalist philosophy, prioritizing simplicity, performance, and low operational overhead. It aims to provide the essential service mesh features that 80% of users need, without the complexity.

It uses a custom-built, ultra-lightweight "micro-proxy" written in Rust, which is optimized for speed and minimal resource consumption.

- Performance Overhead: Benchmarks consistently show Linkerd adding lower latency (sub-millisecond p99) and consuming less CPU and memory per pod compared to Envoy-based meshes. This makes it ideal for latency-sensitive or resource-constrained environments.

- Ease of Use: Installation is typically a two-command process (

linkerd install | kubectl apply -f -). Its dashboard and CLI provide immediate, actionable observability out of the box. The trade-off is a more focused, less extensible feature set compared to Istio.

Our technical breakdown of Istio vs. Linkerd provides deeper performance metrics and configuration examples.

Consul Connect: The Multi-Platform Integrator

Consul has long been a standard for service discovery. Consul Connect extends it into a service mesh with a key differentiator: first-class support for hybrid and multi-platform environments.

While Istio and Linkerd are Kubernetes-native, Consul was designed from the ground up to connect services across heterogeneous infrastructure, including virtual machines, bare metal, and multiple Kubernetes clusters.

- Multi-Cluster Capabilities: Consul provides out-of-the-box solutions for transparently connecting services across different data centers, cloud providers, and runtime environments using components like Mesh Gateways.

- Ecosystem Integration: For organizations already invested in the HashiCorp stack, Consul offers seamless integration with tools like Vault for certificate management and Terraform for infrastructure as code.

The right choice depends on your team's priorities and existing infrastructure.

Service Mesh Comparison: Istio vs. Linkerd vs. Consul

This table provides a technical comparison of the three leading service meshes across key decision-making dimensions.

| Dimension | Istio | Linkerd | Consul Connect |

|---|---|---|---|

| Primary Strength | Unmatched feature depth and traffic control | Simplicity, performance, and low overhead | Multi-cluster and hybrid environment support |

| Operational Cost | High; requires significant team expertise | Low; designed for ease of use and maintenance | Moderate; familiar to users of HashiCorp tools |

| Ideal Use Case | Complex, large-scale enterprise deployments | Teams prioritizing speed and developer experience | Hybrid environments with VMs and Kubernetes |

| Underlying Proxy | Envoy | Linkerd2-proxy (Rust) | Envoy |

Ultimately, your decision should be based on a thorough evaluation of your technical requirements against the operational overhead each tool introduces.

Developing Your Service Mesh Adoption Strategy

Implementing a Kubernetes service mesh is a significant architectural change, not a simple software installation. A premature or poorly planned adoption can introduce unnecessary complexity and performance overhead. A successful strategy begins with identifying clear technical pain points that a mesh is uniquely positioned to solve.

Identifying Your Adoption Triggers

A service mesh is not a day-one requirement. Its value emerges as system complexity grows. Look for these specific technical indicators:

- Growing Service Count: Once your cluster contains 10-15 interdependent microservices, the "n-squared" problem of communication paths makes manual management of security and reliability untenable. The cognitive load becomes too high.

- Inconsistent Security Policies: If your teams are implementing mTLS or authorization logic within application code, you have a clear signal. This leads to CVE-ridden dependencies, inconsistent enforcement, and high developer toil. A service mesh centralizes this logic at the platform level.

- Troubleshooting Nightmares: If your Mean Time to Resolution (MTTR) is high because engineers spend hours correlating logs across multiple services to trace a single failed request, the automatic distributed tracing and uniform L7 metrics provided by a service mesh will deliver immediate ROI.

The optimal time to adopt a service mesh is when the cumulative operational cost of managing reliability, security, and observability at the application level exceeds the operational cost of managing the mesh itself.

Analyzing the Real Costs of Implementation

Adopting a service mesh involves clear trade-offs. A successful strategy must account for these costs.

Here are the primary technical costs to consider:

- Operational Overhead: You are adding a complex distributed system to your stack. Your platform team must be prepared to manage control plane upgrades, debug proxy configurations, and monitor the health of the mesh itself. This requires dedicated expertise.

- Resource Consumption: Sidecar proxies consume CPU and memory in every application pod. While modern proxies are highly efficient, at scale this resource tax is non-trivial and will impact cluster capacity planning and cloud costs. You must budget for this overhead. For example, an Envoy proxy might add 50m CPU and 50Mi memory per pod.

- Team Learning Curve: Engineers must learn new concepts like

VirtualServiceorServiceProfile, new debugging workflows (e.g., usingistioctl proxy-configorlinkerd tap), and how to interpret the new telemetry data. This requires an investment in training and documentation.

By identifying specific technical triggers and soberly assessing the implementation costs, you can formulate a strategic, value-driven adoption plan rather than a reactive one.

Partnering for a Successful Implementation

Deploying a Kubernetes service mesh like Istio or Linkerd is a complex systems engineering task. It requires deep expertise in networking, security, and observability to avoid common pitfalls like misconfigured proxies causing performance degradation, incomplete mTLS leaving security gaps, or telemetry overload that obscures signals with noise.

This is where a dedicated technical partner provides critical value. At OpsMoon, we specialize in DevOps and platform engineering, ensuring your service mesh adoption is successful from architecture to implementation. We help you accelerate the process and achieve tangible ROI without the steep, and often painful, learning curve.

Your Strategic Roadmap to a Service Mesh

We begin with a free work planning session to develop a concrete, technical roadmap. Our engineers will analyze your current Kubernetes architecture, identify the primary drivers for a service mesh, and help you select the right implementation—Istio for its feature depth or Linkerd for its operational simplicity.

Our mission is simple: connect complex technology to real business results. We make sure your service mesh isn't just a cool new tool, but a strategic asset that directly boosts your reliability, security, and ability to scale.

Access to Elite Engineering Talent

Through our exclusive Experts Matcher, we connect you with engineers from the top 0.7% of global talent. These are seasoned platform engineers and SREs who have hands-on experience integrating service meshes into complex CI/CD pipelines, configuring advanced traffic management policies, and building comprehensive observability stacks for production systems.

Working with OpsMoon means gaining a strategic partner dedicated to your success. We mitigate risks, accelerate adoption, and empower your team with the skills and confidence needed to operate your new infrastructure effectively.

Common Questions About Kubernetes Service Meshes

Here are answers to some of the most common technical questions engineers have when considering a service mesh.

What Is the Performance Overhead of a Service Mesh?

A service mesh inherently introduces latency and resource overhead. Every network request is now intercepted and processed by two sidecar proxies (one on the client side, one on the server side).

Modern proxies like Envoy (Istio) and Linkerd's Rust proxy (Linkerd) are highly optimized. The additional latency is typically in the low single-digit milliseconds at the 99th percentile (p99). The resource cost is usually around 0.1 vCPU and 50-100MB of RAM per proxy. However, the exact impact depends heavily on your workload (request size, traffic volume, protocol). You must benchmark this in a staging environment that mirrors production traffic patterns.

Always measure the overhead against your application's specific SLOs. A few milliseconds might be negligible for a background job service but critical for a real-time bidding API.

Linkerd is often chosen for its focus on minimal overhead, while Istio offers more features at a potentially higher resource cost.

Can I Adopt a Service Mesh Gradually?

Yes, and this is the recommended approach. A "big bang" rollout is extremely risky. A phased implementation allows you to de-risk the process and build operational confidence.

Most service meshes support incremental adoption by enabling sidecar injection on a per-namespace basis. You can achieve this by adding a label to the namespace (e.g., istio-injection: enabled or linkerd.io/inject: enabled).

- Start Small: Choose a non-critical development or testing namespace. Apply the label and restart the pods in that namespace to inject the sidecars.

- Validate and Monitor: Verify that core functionality like mTLS and basic routing is working. Use the mesh's dashboards and metrics to analyze the performance overhead. Test your observability stack integration.

- Expand Incrementally: Once validated, proceed to other staging namespaces and, finally, to production namespaces, potentially on a per-service basis.

This methodical approach allows you to contain any issues to a small blast radius before they can impact production workloads.

Does a Service Mesh Replace My API Gateway?

No, they are complementary technologies that solve different problems. An API Gateway manages north-south traffic (traffic entering the cluster from external clients). A service mesh manages east-west traffic (traffic between services within the cluster).

A robust architecture uses both:

- The API Gateway (e.g., Kong, Ambassador, or Istio's own Ingress Gateway) serves as the entry point. It handles concerns like external client authentication (OAuth/OIDC), global rate limiting, and routing external requests to the correct internal service.

- The Kubernetes Service Mesh takes over once the traffic is inside the cluster. It provides mTLS for internal communication, implements fine-grained traffic policies between services, and collects detailed telemetry for every internal hop.

Think of it this way: the API Gateway is the security guard at the front door of your building. The service mesh is the secure, keycard-based access control system for all the internal rooms and hallways.

Do I Need a Mesh for Only a Few Microservices?

Probably not. For applications with fewer than 5-10 microservices, the operational complexity and resource cost of a service mesh usually outweigh the benefits.

In smaller systems, you can achieve "good enough" reliability and security using native Kubernetes objects and application libraries. Use Kubernetes Services for discovery, Ingress for routing, NetworkPolicies for L3/L4 segmentation, and language-specific libraries for retries and timeouts. A service mesh becomes truly valuable when the number of services and their interconnections grows to a point where manual management is no longer feasible.

Ready to implement a service mesh without the operational headaches? OpsMoon connects you with the world's top DevOps engineers to design and execute a successful adoption strategy. Start with a free work planning session to build your roadmap today.

Leave a Reply